Announcing MinIO Batch Framework – Feature #1: Batch Replication

Batch processing is a way of consistently and reliably working with large amounts of data with little to no user interaction. Enterprises typically prepare and analyze data from multiple sources using batch operations. The individual jobs that gather, transform and combine data are batched together to run more efficiently.

MinIO customers use batch processing to build data analytics and AI/ML pipelines and to copy or move data between MinIO deployments. Batch processing handles data quickly, requires no user intervention, and boosts efficiency by running during off-hours or periods of low system utilization. Working with customers, we learned that combining batch processing with Lambda Notifications is ideal for converting files between formats, combining data from separate sources and manipulating non-streaming data (data already saved to files) for analysis.

As a result, we developed the MinIO Batch Framework to enable you to run batch operations directly on your MinIO deployment. The first operation that we’re providing via the Batch Framework is Batch Replication, available in MinIO version RELEASE.2022-10-08T20-11-00Z and later.

MinIO to MinIO Batch Replication

We recently released our first batch functionality, Batch Replication, which replicates objects between buckets on multiple MinIO deployments. You can replicate the entire contents of a bucket, or only specific objects selected with flags and filters (including wildcards). Batch Replication can be triggered as a push or a pull, depending on your requirements.
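To make "specific objects" concrete, here is a minimal Python sketch of how a prefix plus a wildcard tag filter (like the "pick*" example in the job template later in this post) narrows a bucket listing. `ObjectInfo` and `select_objects` are hypothetical names, and the use of `fnmatch` glob rules is an assumption for illustration; MinIO's own matcher may treat edge cases differently.

```python
from dataclasses import dataclass, field
from fnmatch import fnmatchcase


@dataclass
class ObjectInfo:
    """Hypothetical stand-in for one entry in a bucket listing."""
    key: str
    tags: dict = field(default_factory=dict)


def select_objects(objects, prefix="", tag_filters=()):
    """Return keys under `prefix` whose tags match every (tag, glob) filter.

    Globs follow fnmatch rules ("*", "?", "[...]"), an assumption here.
    """
    selected = []
    for obj in objects:
        if not obj.key.startswith(prefix):
            continue  # outside the requested prefix
        # An object must carry the tag AND its value must match the glob.
        if all(tag in obj.tags and fnmatchcase(obj.tags[tag], pattern)
               for tag, pattern in tag_filters):
            selected.append(obj.key)
    return selected


bucket = [
    ObjectInfo("photos/a.jpg", {"name": "pickup"}),
    ObjectInfo("photos/b.jpg", {"name": "party"}),
    ObjectInfo("docs/c.txt", {"name": "pickle"}),
]

print(select_objects(bucket))                    # ['photos/a.jpg', 'photos/b.jpg', 'docs/c.txt']
print(select_objects(bucket, prefix="photos/"))  # ['photos/a.jpg', 'photos/b.jpg']
print(select_objects(bucket, tag_filters=[("name", "pick*")]))  # ['photos/a.jpg', 'docs/c.txt']
```

With no filters the whole bucket is selected; each filter only ever removes objects from the result.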

MinIO admins have long had Active-Active Replication, but it was designed to copy, in the background, objects created after the replication rule was configured. Hundreds of MinIO customers rely on active-active replication to keep deployments synchronized.

Batch Replication can replicate existing objects, including objects added to a bucket before any replication rule was configured, objects that previously failed to replicate due to an incorrectly configured rule, objects already replicated to a different target bucket, and objects that have already been replicated. It provides an easy way to fill a new bucket, in a new location, with objects. With this capability, you can replicate any number of objects with minimal code in a single job, view replication status, and be notified when replication is complete.

Advantages of Batch Replication include the ability to leverage the entire MinIO cluster for processing, rather than a single workstation running the MinIO Client (mc mirror). This also eliminates the workstation as a single point of failure and removes the network between cluster and client as a bottleneck. Because batch jobs run on the server side, users don't need special permissions to replicate objects. If some objects fail to replicate, the MinIO Batch Framework retries the operation, attempting only the failed objects.
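The "retry only the failed objects" behavior can be illustrated with a short Python sketch. This is not MinIO's implementation: `replicate_with_retry`, `copy_one` and the demo helpers are hypothetical, and `attempts` and `delay_s` only mirror the retry settings in the job template shown later (how MinIO counts attempts is an assumption here).

```python
import time
from typing import Callable, Iterable, List


def replicate_with_retry(keys: Iterable[str],
                         copy_one: Callable[[str], bool],
                         attempts: int = 10,
                         delay_s: float = 0.5,
                         sleep: Callable[[float], None] = time.sleep) -> List[str]:
    """Copy every key, retrying only keys whose copy failed.

    `copy_one` returns True on success. Returns the keys that still failed
    after `attempts` passes (an empty list means everything replicated).
    """
    pending = list(keys)
    for attempt in range(attempts):
        # Each pass touches only the keys that failed in the previous pass.
        pending = [key for key in pending if not copy_one(key)]
        if not pending:
            break
        if attempt < attempts - 1:
            sleep(delay_s)  # wait at least delay_s before the next pass
    return pending


# Demo: "b" fails twice before succeeding; "a" and "c" succeed at once.
remaining_failures = {"b": 2}
copied = []


def flaky_copy(key: str) -> bool:
    if remaining_failures.get(key, 0) > 0:
        remaining_failures[key] -= 1
        return False
    copied.append(key)
    return True


left = replicate_with_retry(["a", "b", "c"], flaky_copy, sleep=lambda _: None)
print(left, copied)  # [] ['a', 'c', 'b']
```

Each object is copied exactly once on success; "a" and "c" are never re-sent while "b" is retried. Injecting `sleep` keeps the demo instant and makes the delay easy to test.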

When to use Batch Replication

One way to use Batch Replication is to push data to a cloud in order to make it available for cloud-native analytics. For example, one customer is replacing legacy Hadoop workloads with cloud-native workloads. The goal is to expose data to cloud-native applications, like Google BigQuery or Starburst, running in the cloud. They can now accomplish this programmatically with Batch Replication. After objects are pushed to the cloud-native MinIO target, a notification is issued and the team can then kick off their Apache Spark jobs.

Batch Replication figures prominently in ETL workflows where bucket notifications trigger the next step (pre-processing, processing, post-processing). In this use case, data is saved to MinIO at the location where it is created, perhaps by an IoT device at the edge or by a transactional database in the datacenter, and then batch replicated to the data lake. Once replication completes, a notification kicks off the next processing step by invoking scripts that transform raw data into formats such as Parquet, Avro and ORC, which are required for ML processing with KubeFlow or H2O and for data lakehouses like Delta Lake and Apache Iceberg. As pre-processed objects are written, bucket notifications kick off the execution of an ML or analytics workflow.
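On the receiving side of such a pipeline, a webhook handler parses each notification and decides which step to run next. The Python sketch below assumes the S3-compatible event shape (`Records[].eventName`, `s3.bucket.name`, `s3.object.key`); the routing rule in `next_step` and the step names are hypothetical placeholders, and a real deployment would call this from whatever endpoint receives the notifications.

```python
import json
from typing import Iterator, Tuple
from urllib.parse import unquote_plus


def created_objects(body: bytes) -> Iterator[Tuple[str, str]]:
    """Yield (bucket, key) for each object-created record in an S3-style event."""
    event = json.loads(body)
    for record in event.get("Records", []):
        # MinIO reports e.g. "s3:ObjectCreated:Put"; AWS omits the "s3:" prefix.
        if "ObjectCreated:" not in record.get("eventName", ""):
            continue
        s3 = record["s3"]
        # Object keys arrive URL-encoded (a space is sent as "+").
        yield s3["bucket"]["name"], unquote_plus(s3["object"]["key"])


def next_step(key: str) -> str:
    """Hypothetical routing rule: convert raw files, analyze converted ones."""
    return "run-analytics" if key.endswith(".parquet") else "convert-to-parquet"


sample = {
    "Records": [
        {"eventName": "s3:ObjectCreated:Put",
         "s3": {"bucket": {"name": "datalake"},
                "object": {"key": "raw/sensor+1.csv"}}},
        {"eventName": "s3:ObjectRemoved:Delete",
         "s3": {"bucket": {"name": "datalake"},
                "object": {"key": "raw/old.csv"}}},
    ]
}

for bucket, key in created_objects(json.dumps(sample).encode()):
    print(bucket, key, "->", next_step(key))  # datalake raw/sensor 1.csv -> convert-to-parquet
```

Delete events are ignored, so only newly landed objects advance the pipeline.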

Batch Replication is a key enabler of multicloud object storage strategies. Enterprises have data scattered and siloed across many locations and clouds. Batch Replication connects clouds to each other, and connects the edge and the datacenter to the cloud. You are free to push and pull data wherever you need it, replicating everything in a bucket or only a subset of objects. Simply configure Batch Replication, and let MinIO do the work and notify you when replication is complete.

Running your first Batch Replication

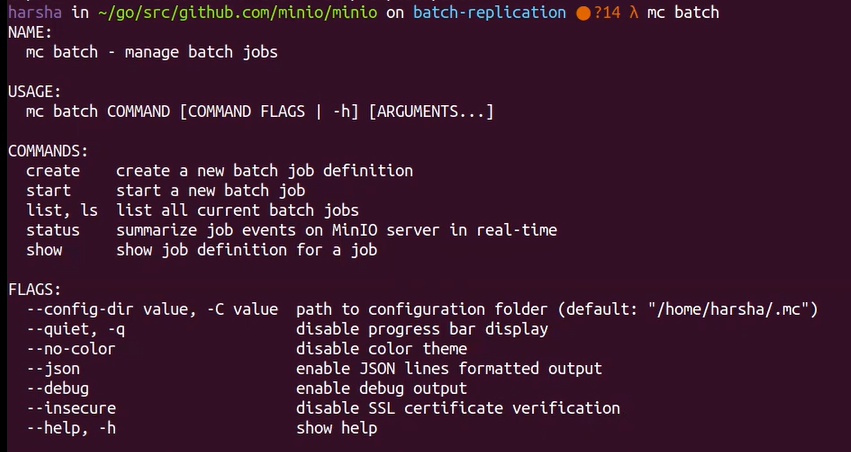

This tutorial walks you through the mc batch command, which lets you create a YAML file that defines a batch job and then run that job on a periodic basis. You can stop, suspend and resume a Batch Replication job. This functionality is available in MinIO for both single-node and distributed architectures.

We are going to install MinIO locally and then Batch Replicate to a bucket on MinIO Play.

Download and install MinIO. Record the access key and secret key.

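Download and install MinIO Client, then register your local deployment under the alias myminio, for example with mc alias set myminio http://localhost:9000 ACCESS-KEY SECRET-KEY (assuming MinIO listens on the default port 9000). The commands below also use the play alias, which mc preconfigures for MinIO Play.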

Create a bucket and copy some files into it. The contents of the files are not important because we’re demonstrating Batch Replication. However, do not use files containing sensitive information as we will ultimately be replicating to MinIO Play, a public MinIO Server.

mc mb myminio/testbucket/

mc mirror /usr/bin myminio/testbucket/

mc mb play/<Your Initials>-testbucket/

The mirror command copies roughly 1 GB of data into the new bucket on your MinIO deployment, and the last command prepares for Batch Replication by creating the target bucket on MinIO Play.

Create and define the Batch Replication:

mc batch generate myminio/ replicate

This creates a replicate.yaml file that you then edit to configure the replication job.

The file, shown below, defines the source bucket, optional filters and flags, and the replication target (bucket and credentials).

replicate:
  apiVersion: v1
  # source of the objects to be replicated
  source:
    type: TYPE # valid values are "s3"
    bucket: BUCKET
    prefix: PREFIX
    # NOTE: if source is remote then target must be "local"
    # endpoint: ENDPOINT
    # credentials:
    #   accessKey: ACCESS-KEY
    #   secretKey: SECRET-KEY
    #   sessionToken: SESSION-TOKEN # Available when rotating credentials are used

  # target where the objects must be replicated
  target:
    type: TYPE # valid values are "s3"
    bucket: BUCKET
    prefix: PREFIX
    # NOTE: if target is remote then source must be "local"
    # endpoint: ENDPOINT
    # credentials:
    #   accessKey: ACCESS-KEY
    #   secretKey: SECRET-KEY
    #   sessionToken: SESSION-TOKEN # Available when rotating credentials are used

  # optional flags based filtering criteria
  # for all source objects
  flags:
    filter:
      newerThan: "7d" # match objects newer than this value (e.g. 7d10h31s)
      olderThan: "7d" # match objects older than this value (e.g. 7d10h31s)
      createdAfter: "date" # match objects created after "date"
      createdBefore: "date" # match objects created before "date"

      ## NOTE: tags are not supported when "source" is remote.
      # tags:
      #   - key: "name"
      #     value: "pick*" # match objects with tag 'name', with all values starting with 'pick'

      ## NOTE: metadata filter not supported when "source" is non MinIO.
      # metadata:
      #   - key: "content-type"
      #     value: "image/*" # match objects with 'content-type', with all values starting with 'image/'

    notify:
      endpoint: "https://notify.endpoint" # notification endpoint to receive job status events
      token: "Bearer xxxxx" # optional authentication token for the notification endpoint

    retry:
      attempts: 10 # number of retries for the job before giving up
      delay: "500ms" # least amount of delay between each retry

Note that the notification endpoint is not configured in this tutorial. When it is configured, job status events are sent to that endpoint when Batch Replication completes.

For this tutorial, point source at testbucket on your local deployment and target at <Your Initials>-testbucket on MinIO Play, supplying Play's endpoint and credentials under target. The filter values in the template are placeholders (createdAfter: "date" is not a real date, for instance), so remove or comment out any filter you don't need.
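The newerThan and olderThan filters take relative ages such as 7d10h31s, which works out to 7 × 86,400 + 10 × 3,600 + 31 = 640,831 seconds. The Python sketch below shows one way such a filter could be evaluated. It assumes the units d, h, m and s and that age is measured from each object's last-modified time; `parse_age` and `matches_age` are hypothetical helpers, not MinIO code, and MinIO's parser may accept other forms.

```python
import re
from datetime import datetime, timedelta, timezone
from typing import Optional

# Units assumed for ages like "7d10h31s": days, hours, minutes, seconds.
_UNIT_SECONDS = {"d": 86400, "h": 3600, "m": 60, "s": 1}
_PART = re.compile(r"(\d+)([dhms])")


def parse_age(value: str) -> timedelta:
    """Parse a relative age such as "7d10h31s" into a timedelta."""
    total, pos = 0, 0
    for match in _PART.finditer(value):
        if match.start() != pos:  # reject junk between parts
            raise ValueError(f"invalid age: {value!r}")
        total += int(match.group(1)) * _UNIT_SECONDS[match.group(2)]
        pos = match.end()
    if pos == 0 or pos != len(value):  # empty string or trailing junk
        raise ValueError(f"invalid age: {value!r}")
    return timedelta(seconds=total)


def matches_age(last_modified: datetime, now: datetime,
                newer_than: Optional[str] = None,
                older_than: Optional[str] = None) -> bool:
    """Apply newerThan/olderThan-style filters to one object's age."""
    age = now - last_modified
    if newer_than is not None and not age < parse_age(newer_than):
        return False
    if older_than is not None and not age > parse_age(older_than):
        return False
    return True


print(parse_age("7d10h31s").total_seconds())  # 640831.0

now = datetime(2022, 10, 8, tzinfo=timezone.utc)
print(matches_age(now - timedelta(days=3), now, newer_than="7d"))  # True
```

Under this sketch's reading, setting both newerThan and olderThan to "7d" at once matches no object, which is another reason to trim the template's sample filters. The parser deliberately rejects millisecond values such as the retry delay's "500ms", since that field uses a different format.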

You can create and run multiple Batch Replication jobs at the same time; there are no predefined limits.

Start Batch Replication with the following:

mc batch start myminio/ ./replicate.yaml

Successfully start 'replicate' job `E24HH4nNMcgY5taynaPfxu` on '2022-09-26 17:19:06.296974771 -0700 PDT'

You will see a message confirming that the replication job started successfully, along with its job ID and start time.

You can list all batch jobs:

mc batch list myminio/

ID                      TYPE       USER        STARTED
E24HH4nNMcgY5taynaPfxu  replicate  minioadmin  1 minute ago

You can also check the status of batch jobs:

mc batch status myminio/ E24HH4nNMcgY5taynaPfxu


Objects: 28766

Versions: 28766

Throughput: 3.0 MiB/s

Transferred: 406 MiB

Elapsed: 2m14.227222868s

CurrObjName: share/doc/xml-core/examples/foo.xmlcatalogs

To see running batch jobs and their configuration:

mc batch describe myminio/ E24HH4nNMcgY5taynaPfxu

replicate:

apiVersion: v1

<content truncated>

When Batch Replication is complete, you can compare bucket contents to verify that it succeeded. The command below returns nothing if the buckets contain the same objects.

mc diff myminio/testbucket play/<Your Initials>-testbucket

Build Multi-Cloud Magic with Batch Replication

The MinIO Batch Framework and its first operation, Batch Replication, enable you to build workflows that push data wherever you need it and notify you when replication is complete. Ingest data at the edge, in the cloud or in the datacenter, and push or pull it to the rich cornucopia of applications available in the cloud. Use Batch Replication to leverage best-of-breed applications seamlessly, for example to provide data to BigQuery on GCP, KubeFlow on Azure, and Spark and Iceberg on AWS. Batch Replication gives you the freedom and control needed for multicloud success.

Download MinIO and get started replicating today. Any questions? Reach out to us on Slack.