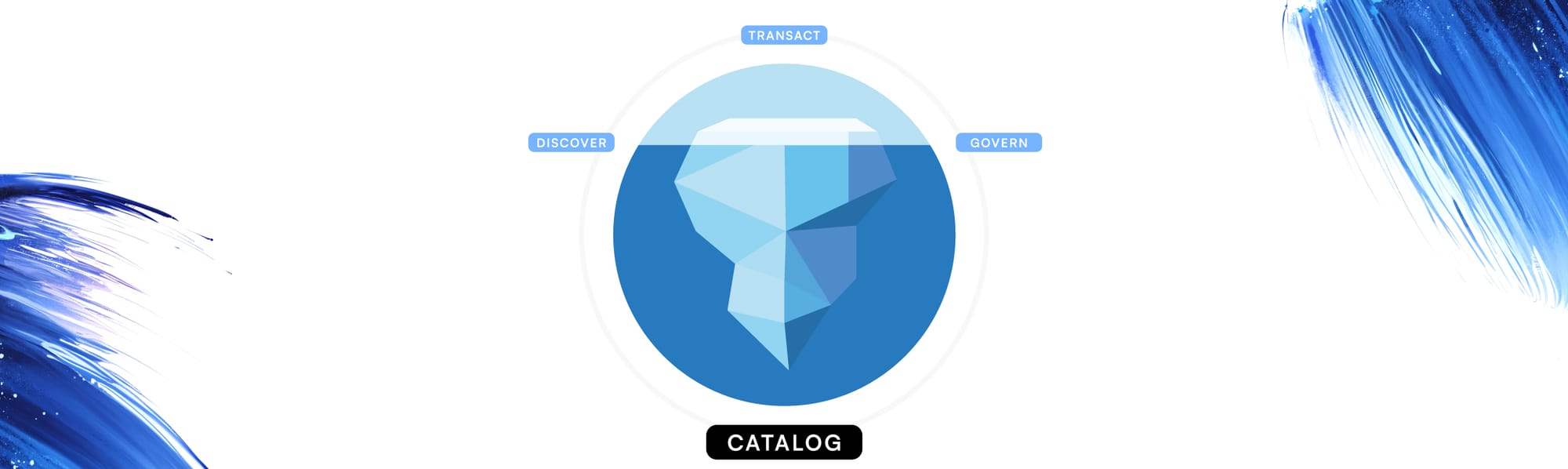

Discover, Transact, Govern? Unpacking the Iceberg Catalog API Standard's True Scope

In data engineering, open standards are foundational for building interoperable, evolvable, and non-proprietary systems. Apache Iceberg, an open table format, is a prime example. Paired with a compute engine, Iceberg brings structure and reliability to data lakes. When coupled with high-performance object storage like MinIO AIStor, it opens new avenues for building next-generation, cost-effective, and scalable architectures.

However, this powerful table format standard is only part of the solution. The way applications and engines discover, manage, and interact with these tables' metadata also demands a common language. That need is what the Iceberg Catalog API addresses: a community-driven effort to standardize these interactions. But what exactly does this standard define, and what are its implications for developers building with Iceberg?

A Commendable Effort: The Iceberg Community and Catalog API Standardization

The Apache Iceberg community's push for a standard Catalog API is a significant step. Such standardization efforts are crucial because they translate directly into tangible benefits: engines and tools can connect to diverse catalog implementations with less friction, the risk of lock-in to a specific catalog service diminishes, and both clients and servers can be developed against a single, shared specification. This collaborative approach strengthens the entire Iceberg ecosystem. With this standardized foundation in place, one might wonder how it compares to the feature set often associated with general-purpose data catalogs.

The Modern Data Catalog: A Universe of Possibilities

When developers hear "data catalog," a broad spectrum of capabilities often comes to mind. Modern, comprehensive data catalogs typically aim to provide robust data discovery, rich technical and business metadata management, integrated data governance (including security policies and fine-grained access control like RBAC), end-to-end data lineage tracking, data quality insights, and detailed audit trails. They're often envisioned as central hubs for understanding and managing an organization's data assets. So, how does the focused Iceberg Catalog API standard relate to this expansive vision?

The Iceberg Catalog API Standard: A Focused Lens on Core Needs

Contrasting the vast array of features in a general data catalog with the Apache Iceberg Catalog API standard reveals an intentional focus. Instead of attempting to standardize every conceivable cataloging function, the Iceberg API standard concentrates on the fundamental operations essential for table interoperability and state management. This deliberate scope ensures a lean, widely adoptable interface.

This approach aligns with a fundamental engineering principle at MinIO: simplicity scales. By concentrating on essential, well-defined functionality, systems and standards achieve greater robustness, better performance, and easier adoption, while avoiding the pitfalls of over-complexity.

Understanding this precise focus is crucial, so let's examine the core functionalities mandated by Iceberg Catalog, starting with how tables are located.


Standardized Table Discovery: A Core Mandate

A primary, standardized capability of the Iceberg Catalog API is table discovery. Iceberg tables reside in object stores, which can be vast and complex. The Catalog API standard provides the "map" to these tables by defining how a table's name is resolved to a pointer, typically the path to its current root metadata file (e.g., vN.metadata.json). This file is the entry point for any engine to understand a table's schema, partitioning, snapshot history, and current state. Standard operations like loadTable, tableExists, and listTables ensure that any Iceberg-compatible tool can reliably locate and interpret tables managed by a compliant catalog. The catalog's specified role here isn't to hold all metadata, but to reliably provide this current pointer. Once a table is discovered, how does Iceberg address its modification?
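To make the discovery operations concrete, here is a minimal Python sketch of how a client might form the REST requests behind listTables, tableExists, and loadTable, and then pull the current metadata pointer out of a loadTable response. It assumes the path layout and the `metadata-location` response field described in the Iceberg REST Catalog OpenAPI spec, including its convention of joining multi-level namespaces with the 0x1F unit separator. The prefix `warehouse1`, the namespace, the table name, and the sample response are hypothetical. The sketch only builds requests and parses JSON; no catalog is contacted.

```python
import json
from urllib.parse import quote

# The REST spec joins multi-level namespace parts with the 0x1F unit separator.
UNIT_SEPARATOR = "\x1f"


def namespace_path(namespace: list[str]) -> str:
    """Encode a (possibly multi-level) namespace as a single URL path segment."""
    return quote(UNIT_SEPARATOR.join(namespace), safe="")


def discovery_requests(prefix: str, namespace: list[str], table: str) -> dict[str, tuple[str, str]]:
    """Return (HTTP method, path) pairs for the three discovery operations."""
    root = f"/v1/{prefix}" if prefix else "/v1"  # the prefix segment is optional
    tables = f"{root}/namespaces/{namespace_path(namespace)}/tables"
    table_path = f"{tables}/{quote(table, safe='')}"
    return {
        "listTables": ("GET", tables),
        "tableExists": ("HEAD", table_path),
        "loadTable": ("GET", table_path),
    }


def current_metadata_pointer(load_table_response: str) -> str:
    """Extract the pointer that a loadTable response resolves the table name to."""
    return json.loads(load_table_response)["metadata-location"]


# Hypothetical loadTable response body (the full "metadata" object is elided).
sample_response = json.dumps({
    "metadata-location": "s3://warehouse/analytics/sales/events/metadata/00003-example.metadata.json",
    "metadata": {},
})

requests = discovery_requests("warehouse1", ["analytics", "sales"], "events")
pointer = current_metadata_pointer(sample_response)
```

A real client would send these requests over HTTP with authentication; the point here is simply that discovery ends at a single pointer to a metadata file in object storage.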

Iceberg Transactions: The Catalog API's Pivotal Role

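Beyond discovery, the Iceberg Catalog API is indispensable for enabling Iceberg's hallmark ACID (Atomicity, Consistency, Isolation, Durability) transactional capabilities, which bring reliability to data lake operations. Iceberg Catalog underpins these guarantees through its definition of atomic commit operations. When changes are made to a single table (e.g., an append, update, or schema change), Iceberg Catalog defines how they are committed transactionally. This typically involves a POST request to the REST endpoint /v1/{prefix}/namespaces/{ns}/tables/{table} (or its programmatic equivalents like t.commitTable()), where the catalog atomically updates the table's metadata pointer from an old root metadata file to a new one. The request carries a list of requirements alongside the updates, for example that the table's main branch still points at the snapshot the writer started from. The catalog applies the updates only if every requirement still holds; otherwise it rejects the commit with a 409 Conflict, and the client can refresh the table and retry. This compare-and-swap is what lets concurrent writers work optimistically while readers always see either the old or the new table state, never a partial one.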
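The atomic pointer swap can be modeled as a compare-and-swap. The toy, in-memory Python catalog below is hypothetical (it is not a real Iceberg class): it accepts a commit only if the table still points at the metadata file the writer started from. A real REST catalog expresses that expectation through the request's requirements rather than by comparing file paths, but the all-or-nothing behavior it illustrates is the same.

```python
import threading


class CommitConflict(Exception):
    """Raised when the table changed after the writer last read it."""


class InMemoryCatalog:
    """Hypothetical toy catalog mapping table identifiers to metadata locations."""

    def __init__(self) -> None:
        self._pointers: dict[str, str] = {}
        self._lock = threading.Lock()  # makes check-and-swap a single atomic step

    def create(self, identifier: str, metadata_location: str) -> None:
        with self._lock:
            if identifier in self._pointers:
                raise ValueError(f"{identifier} already exists")
            self._pointers[identifier] = metadata_location

    def load(self, identifier: str) -> str:
        with self._lock:
            return self._pointers[identifier]

    def commit(self, identifier: str, expected: str, new: str) -> None:
        """Swap the pointer to `new` only if it still equals `expected`."""
        with self._lock:
            current = self._pointers[identifier]
            if current != expected:
                # Another writer committed first; the caller must reload and retry.
                raise CommitConflict(f"expected {expected}, found {current}")
            self._pointers[identifier] = new


catalog = InMemoryCatalog()
catalog.create("sales.orders", "s3://warehouse/sales/orders/metadata/v1.metadata.json")

# Two writers load the same base state.
base_a = catalog.load("sales.orders")
base_b = catalog.load("sales.orders")

catalog.commit("sales.orders", base_a, "s3://warehouse/sales/orders/metadata/v2-a.metadata.json")
try:
    catalog.commit("sales.orders", base_b, "s3://warehouse/sales/orders/metadata/v2-b.metadata.json")
    writer_b_conflicted = False
except CommitConflict:
    writer_b_conflicted = True  # writer B's base is stale
```

The second writer's commit fails rather than silently overwriting the first; in practice an Iceberg client would reload the table, reapply its changes on top of the new state, and try again.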

Furthermore, Iceberg Catalog specifies a dedicated multi-table transaction commit API for scenarios requiring atomicity across multiple tables (e.g., POST /v1/{prefix}/transactions/commit or its programmatic equivalents like t.commitTransaction()): either every table's changes are applied or none are. In all these commit operations, the fundamental principle dictated by the Iceberg Catalog is the atomic update of metadata pointers by the catalog, ensuring data integrity. With discovery and transactions clearly within its purview, what does Iceberg Catalog say about governing access to these tables?
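For the multi-table case, here is a sketch of the JSON body a client might send to POST /v1/{prefix}/transactions/commit. The field names (`table-changes`, `identifier`, `requirements`, `updates`, `assert-ref-snapshot-id`, `add-snapshot`, `set-snapshot-ref`) follow the REST Catalog OpenAPI spec as we understand it, so check them against the spec version your catalog implements. The table names, snapshot IDs, timestamps, and manifest-list paths are hypothetical.

```python
import json


def append_snapshot(snapshot_id: int, parent_id: int, manifest_list: str,
                    timestamp_ms: int) -> dict:
    """Minimal snapshot object for an 'add-snapshot' update (illustrative values)."""
    return {
        "snapshot-id": snapshot_id,
        "parent-snapshot-id": parent_id,
        "timestamp-ms": timestamp_ms,
        "manifest-list": manifest_list,
        "summary": {"operation": "append"},
    }


def table_change(namespace: list[str], name: str, snapshot: dict) -> dict:
    """One 'table-changes' entry: guard on the parent snapshot, then advance main."""
    return {
        "identifier": {"namespace": namespace, "name": name},
        "requirements": [
            # Commit only if 'main' still points at the snapshot this change builds on.
            {"type": "assert-ref-snapshot-id", "ref": "main",
             "snapshot-id": snapshot["parent-snapshot-id"]},
        ],
        "updates": [
            {"action": "add-snapshot", "snapshot": snapshot},
            {"action": "set-snapshot-ref", "ref-name": "main", "type": "branch",
             "snapshot-id": snapshot["snapshot-id"]},
        ],
    }


def commit_transaction_body(changes: list[dict]) -> str:
    """Serialize a multi-table commit request; the catalog applies all changes or none."""
    return json.dumps({"table-changes": changes}, indent=2)


body = commit_transaction_body([
    table_change(["sales"], "orders", append_snapshot(
        102, 101, "s3://warehouse/sales/orders/metadata/snap-102.avro", 1700000000000)),
    table_change(["sales"], "order_items", append_snapshot(
        202, 201, "s3://warehouse/sales/order_items/metadata/snap-202.avro", 1700000000000)),
])
```

Because each table change carries its own requirement, a concurrent commit to either table causes the whole request to be rejected, so the two tables never drift out of step.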

The Governance "Gap": What Iceberg Catalog Intentionally Doesn't Dictate

While the Iceberg Catalog API standard provides robust mechanisms for table discovery and transactional updates, it's equally essential for developers to understand what it doesn't comprehensively address: data governance. Features like fine-grained Role-Based Access Control (RBAC), detailed audit logging of data access, data lineage, or enforcement of specific security policies are not explicitly defined within the current API standard. Iceberg Catalog focuses on the mechanics of table structure and state management, leaving the implementation of comprehensive governance models largely to the specific catalog services or higher-level tools. This intentional omission allows flexibility and integration with diverse enterprise security frameworks. So, if the Iceberg Catalog doesn't dictate these governance features, how are these critical needs met in practice?

Bridging the Divide: Specialized Catalogs for Comprehensive Governance

The absence of a prescribed governance framework within the Iceberg Catalog API standard means that developers and organizations must look to specific catalog implementations or external tools to fulfill these requirements. Many Iceberg-compatible catalog solutions (like Apache Polaris, Project Nessie, Apache Gravitino, and others) build upon the Iceberg Catalog API by offering value-added governance layers. These extensions often include APIs and mechanisms for managing permissions, roles, and auditing, tailored to their specific architecture. Thus, while the Iceberg Catalog API standard ensures core interoperability for table operations, achieving robust data governance requires choosing a catalog service that explicitly provides advanced governance features or integrating with dedicated governance platforms. Before turning to the key takeaways, one more practical dimension deserves attention: performance.
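To illustrate what a governance layer built on top of the standard operations might look like, here is a deliberately simple, hypothetical Python wrapper that checks a role's grants before each catalog operation and records every decision in an audit log. The role names, privilege strings, and log format are our own assumptions; each real catalog defines its own permission model and APIs, and none of this is part of the Iceberg Catalog API standard.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class GovernedCatalog:
    """Hypothetical governance layer wrapped around basic catalog operations."""
    grants: dict[str, set[tuple[str, str]]]  # role -> {(privilege, table)}
    pointers: dict[str, str]                 # table -> current metadata location
    audit_log: list[dict] = field(default_factory=list)

    def _authorize(self, role: str, privilege: str, table: str) -> None:
        allowed = (privilege, table) in self.grants.get(role, set())
        # Record every decision, allowed or denied, for later auditing.
        self.audit_log.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "role": role, "privilege": privilege, "table": table, "allowed": allowed,
        })
        if not allowed:
            raise PermissionError(f"role {role!r} lacks {privilege!r} on {table!r}")

    def load_table(self, role: str, table: str) -> str:
        self._authorize(role, "read", table)
        return self.pointers[table]

    def commit(self, role: str, table: str, new_location: str) -> None:
        self._authorize(role, "write", table)
        self.pointers[table] = new_location


catalog = GovernedCatalog(
    grants={"analyst": {("read", "sales.orders")}},
    pointers={"sales.orders": "s3://warehouse/sales/orders/metadata/v1.metadata.json"},
)
location = catalog.load_table("analyst", "sales.orders")  # permitted and logged
try:
    catalog.commit("analyst", "sales.orders",
                   "s3://warehouse/sales/orders/metadata/v2.metadata.json")
except PermissionError:
    pass  # denied and logged; the pointer is unchanged
```

Note that the underlying discover and commit semantics are untouched; the governance layer only decides who may invoke them and keeps a record of each attempt.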

Performance Considerations

When optimizing an Iceberg data lakehouse, it helps to separate two performance concerns: the catalog and the storage tier. The catalog handles table discovery and commits, so its load grows with commit frequency: very frequent commits, especially concurrent commits to the same table, add round trips to the catalog service and raise the likelihood of conflicts and retries. For most other work, such as queries, data ingestion, and compaction, performance is determined primarily by the underlying object storage.
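One common way to keep commit frequency in check is to group many newly written data files into a single commit. The Python sketch below is a hypothetical writer-side buffer, not an Iceberg API; `commit_fn` stands in for whatever append-and-commit call your engine or client library actually provides.

```python
from typing import Callable


class BatchingAppender:
    """Hypothetical buffer that groups data files into fewer catalog commits."""

    def __init__(self, commit_fn: Callable[[list[str]], None],
                 max_files_per_commit: int) -> None:
        if max_files_per_commit < 1:
            raise ValueError("max_files_per_commit must be >= 1")
        self._commit_fn = commit_fn
        self._max = max_files_per_commit
        self._pending: list[str] = []

    def add(self, data_file: str) -> None:
        self._pending.append(data_file)
        if len(self._pending) >= self._max:
            self.flush()

    def flush(self) -> None:
        if self._pending:
            # One catalog commit covers the whole batch.
            self._commit_fn(list(self._pending))
            self._pending.clear()


committed_batches: list[list[str]] = []
appender = BatchingAppender(committed_batches.append, max_files_per_commit=50)
for i in range(120):
    appender.add(f"data-{i:05d}.parquet")
appender.flush()  # commit the final partial batch
print([len(b) for b in committed_batches])  # [50, 50, 20]
```

Here 120 files produce 3 commits instead of 120. The trade-off is freshness: larger batches mean fewer catalog round trips, but new data becomes visible to readers only when its batch is committed.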

Iceberg's architecture includes numerous data files and layers of metadata files (such as table metadata, manifest lists, and manifest files), making fast and efficient access to the storage tier crucial. Low latency for metadata lookups and high throughput for data scans ensure that your data lakehouse responds quickly and scales effectively.
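A back-of-envelope model shows why per-request latency matters for the metadata path. The Python function below assumes a simplified planning sequence (read the table metadata file, then the manifest list, then the manifests in parallel waves); it ignores manifest pruning, caching, and data file reads, so treat it as an illustration rather than a description of any engine's planner. The manifest count, parallelism, and latencies are hypothetical inputs, not measurements.

```python
import math


def planning_latency_ms(num_manifests: int, per_request_ms: float, parallelism: int) -> float:
    """Rough metadata I/O time to plan one scan under a simplified model.

    1. Read the table metadata file (one sequential request).
    2. Read the snapshot's manifest list (one sequential request).
    3. Read the manifests, `parallelism` requests at a time.
    """
    if parallelism < 1:
        raise ValueError("parallelism must be >= 1")
    sequential_rounds = 2 + math.ceil(num_manifests / parallelism)
    return sequential_rounds * per_request_ms


# Same table shape, two hypothetical storage latencies.
fast = planning_latency_ms(200, 5.0, 16)   # 75.0
slow = planning_latency_ms(200, 50.0, 16)  # 750.0
```

Because the metadata reads form a chain of dependent rounds, a tenfold difference in per-request latency shows up as a tenfold difference in planning time, before a single data file is read.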

This highlights the importance of a high-performance object storage solution like MinIO AIStor. Designed for demanding workloads, AIStor offers the exceptional throughput and low latency necessary to accommodate Iceberg's diverse I/O patterns—from swiftly accessing many small metadata files to streaming large data files. By optimizing the object storage layer, MinIO AIStor enables your Iceberg data lakehouse to achieve its performance objectives, effectively supporting even the most intensive analytics and AI/ML applications.

Iceberg Catalog's Scope on Discover, Transact, and Govern – Clarified

So, back to the question in the title: discover, transact, govern? What does the Iceberg Catalog API standard truly dictate? This exploration has aimed to unpack its precise scope for developers and architects, and the verdict is clear:

For discovery, Iceberg provides a robust and essential framework. It mandates the mechanisms by which Iceberg tables are consistently registered, found, and understood by any compliant engine, ensuring your data is always locatable within object storage.

For transactions, the Iceberg Catalog API is pivotal. It defines the atomic commit operations that underpin Iceberg's reliability, enabling safe, concurrent modifications and consistent views of your data.

However, Iceberg Catalog currently draws a distinct boundary around comprehensive governance, encompassing fine-grained access controls, detailed auditing, and overarching security policies. It does not prescribe these features, leaving their implementation to specific catalog solutions or auxiliary governance tools.

The critical takeaway from unpacking Iceberg Catalog’s true scope is this: the standard provides a solid, common foundation for discovering tables and executing reliable transactions. For robust governance, however, your technical strategy must extend beyond the bare standard, either by leveraging the extended capabilities of your chosen catalog implementation or by integrating dedicated governance systems. This clarity empowers you to design and implement Iceberg-based data platforms that are powerful, interoperable, secure, and well-managed according to your organization's needs.

Please feel free to reach out to us at hello@min.io or on our Slack.