Load Balancing with MinIO Firewall

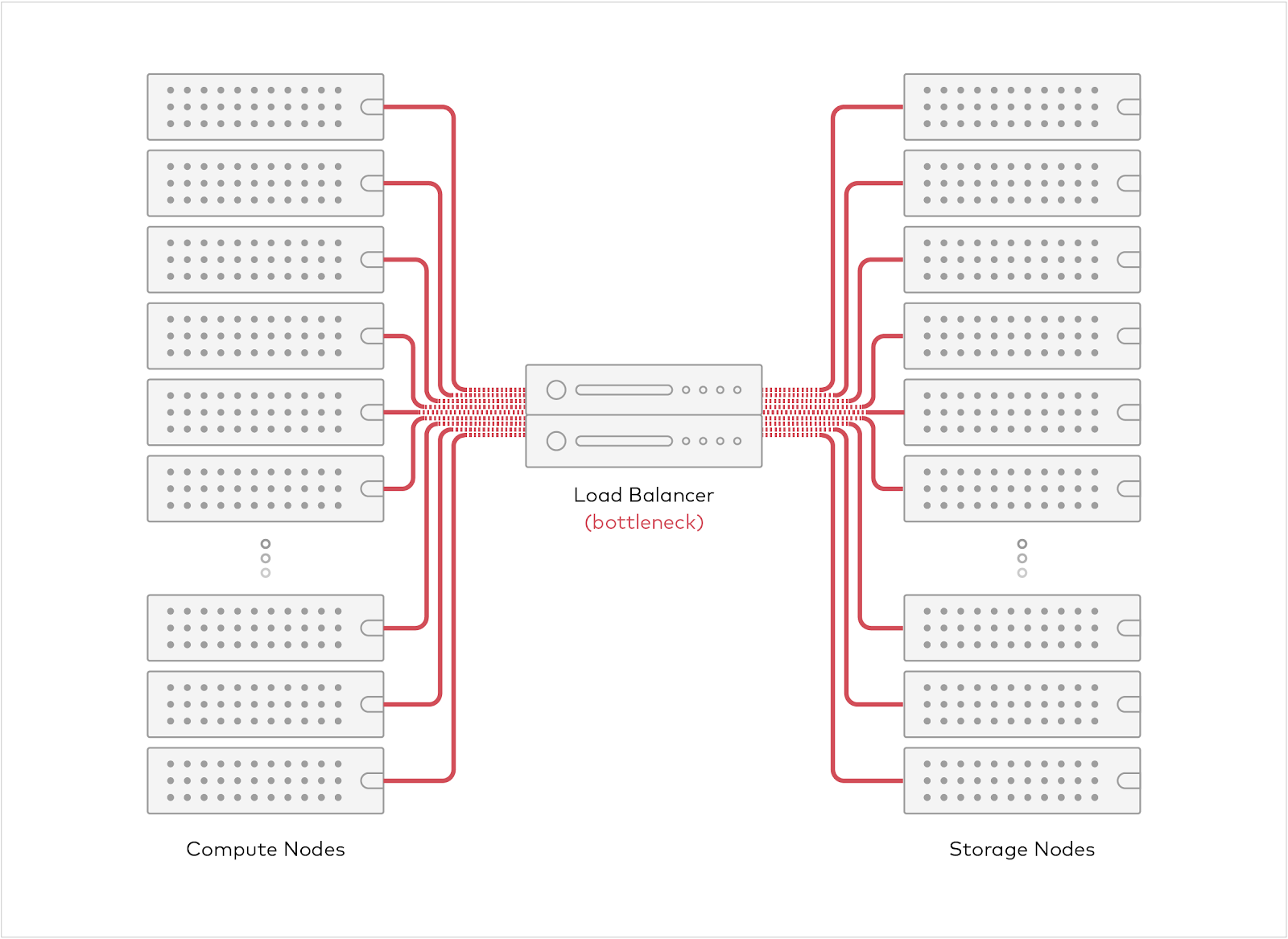

Modern data processing environments move terabytes of data between compute and storage nodes on each run. A fundamental requirement of a load balancer is to distribute traffic without compromising performance. Introducing a load balancer as a separate appliance between the storage and compute nodes often impairs performance: traditional load balancer appliances have limited aggregate bandwidth and introduce an extra network hop. The same architectural limitation applies to software-defined load balancers running on commodity servers.

This becomes an issue in modern data processing environments, where it is common to have hundreds to thousands of nodes hitting the storage servers concurrently.

This is where a different kind of load balancer comes in handy. Did you know you can use MinIO Firewall to meet these high-performance load balancing needs? The Load Balancer in MinIO Firewall solves the network bottleneck by taking a sidecar approach. It runs as a tiny sidecar process alongside each client application, so the applications can communicate directly with the servers without an extra physical hop. Since each client runs its own sidecar in a shared-nothing model, you can scale the Load Balancer to any number of clients.
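To make the shared-nothing idea concrete, here is a minimal sketch of a per-client balancer. It is not MinIO Firewall's implementation: the `LocalBalancer` class, the round-robin policy, and the `minio1..3` hostnames are all assumptions chosen for illustration. The point it shows is that each client rotates through the server list on its own, with no state shared between clients.

```python
import itertools
from typing import Iterator, List


class LocalBalancer:
    """Hypothetical per-client balancer; it holds its own state and shares nothing."""

    def __init__(self, backends: List[str]) -> None:
        if not backends:
            raise ValueError("at least one backend is required")
        self._backends = list(backends)
        # Round-robin is an assumed policy, used here only for illustration.
        self._cycle: Iterator[str] = itertools.cycle(self._backends)

    def next_backend(self) -> str:
        """Return the next backend in round-robin order."""
        return next(self._cycle)


# Hypothetical server addresses.
backends = ["http://minio1:9000", "http://minio2:9000", "http://minio3:9000"]

# Two clients, each with an independent balancer instance.
client_a = LocalBalancer(backends)
client_b = LocalBalancer(backends)

picks_a = [client_a.next_backend() for _ in range(4)]
picks_b = [client_b.next_backend() for _ in range(2)]
```

Because `client_a` and `client_b` never coordinate, adding a thousand more clients adds no central bottleneck; each one simply carries its own small balancer.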

In a cloud-native environment like Kubernetes, MinIO Firewall runs as a sidecar container. It is fairly easy to enable the Load Balancing configuration without any modification to your application binary or container image.

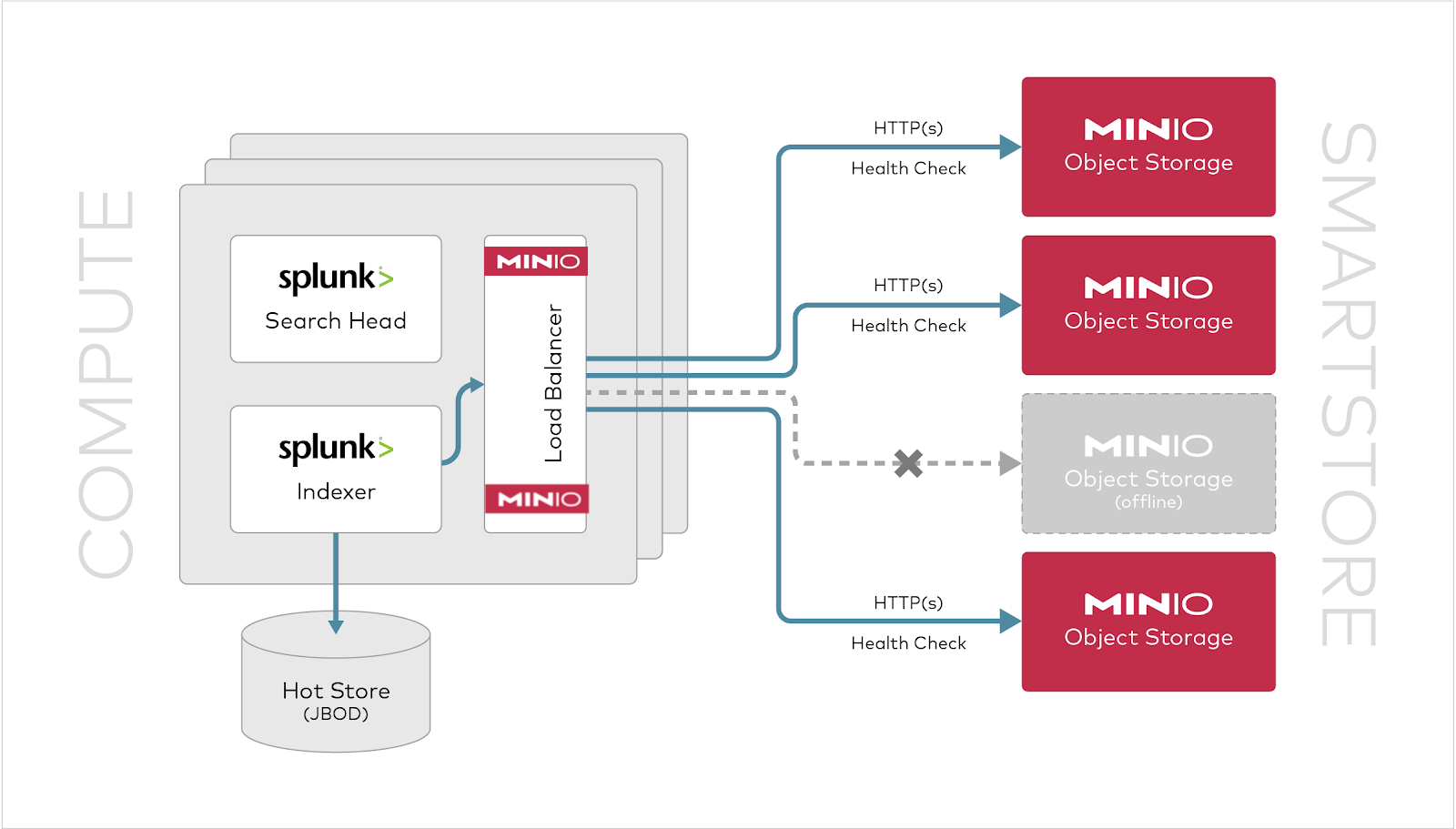

Let’s look at a specific application example: Splunk.

In this case we will use MinIO, a high-performance, AWS S3-compatible object store, as the SmartStore endpoint for Splunk. Please refer to the Leveraging MinIO for Splunk SmartStore S3 Storage whitepaper for an in-depth review.

Splunk runs multiple indexers on a distributed set of nodes to spread the workload. MinIO Firewall Load Balancer sits between the indexers and the MinIO cluster to provide load balancing and failover. Because the Load Balancer is based on a shared-nothing architecture, each sidecar is deployed independently alongside a Splunk indexer. As a result, each indexer talks to its local MinIO Firewall Load Balancer process, and the Firewall becomes its interface to MinIO. The indexers talk to the object storage servers via the AWS S3 RESTful HTTP API.

MinIO Firewall Load Balancer constantly monitors the MinIO servers in the cluster for availability using the readiness service API, which is a standard requirement in the Kubernetes landscape. For legacy applications, it falls back to port reachability for readiness checks. If any of the MinIO servers goes down, the Load Balancer automatically reroutes S3 requests to the other servers until the failed server comes back online. Applications get the benefit of the circuit breaker design pattern for free.
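The readiness-check and reroute behavior can be sketched roughly as follows. This is an illustrative approximation, not the product's code: `FailoverBalancer`, `http_ready`, and `port_reachable` are hypothetical names, the round-robin policy is assumed, and the readiness path `/minio/health/ready` is assumed to be the endpoint the servers expose. The probe is passed in as a function so that either the HTTP readiness check or the port-reachability fallback can be used.

```python
import http.client
import socket
import urllib.error
import urllib.request
from typing import Callable, Dict, List
from urllib.parse import urlparse

READY_PATH = "/minio/health/ready"  # assumed readiness endpoint


def http_ready(base_url: str, timeout: float = 2.0) -> bool:
    """Probe the HTTP readiness endpoint; any 2xx response counts as ready."""
    try:
        with urllib.request.urlopen(base_url + READY_PATH, timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        return False


def port_reachable(base_url: str, timeout: float = 2.0) -> bool:
    """Fallback probe: can a TCP connection be opened to the server's port?"""
    parsed = urlparse(base_url)
    port = parsed.port or (443 if parsed.scheme == "https" else 80)
    try:
        with socket.create_connection((parsed.hostname, port), timeout=timeout):
            return True
    except OSError:
        return False


class FailoverBalancer:
    """Hypothetical round-robin balancer that skips servers marked unhealthy."""

    def __init__(self, backends: List[str], probe: Callable[[str], bool]) -> None:
        self._backends = list(backends)
        self._probe = probe
        self._healthy: Dict[str, bool] = {b: True for b in self._backends}
        self._next = 0

    def refresh(self) -> None:
        """Re-run the probe against every backend (call this periodically)."""
        for backend in self._backends:
            self._healthy[backend] = self._probe(backend)

    def pick(self) -> str:
        """Return the next healthy backend, skipping any that failed the probe."""
        for _ in range(len(self._backends)):
            candidate = self._backends[self._next]
            self._next = (self._next + 1) % len(self._backends)
            if self._healthy[candidate]:
                return candidate
        raise RuntimeError("no healthy backend available")
```

A caller would construct `FailoverBalancer(servers, http_ready)` (or pass `port_reachable` for servers without a readiness endpoint) and call `refresh()` on a timer. A server that fails the probe is skipped by `pick()` until a later `refresh()` sees it healthy again, which is the essence of the circuit breaker behavior described above.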

Final Thoughts

Take the opportunity to fire up the entire AIStor Feature Set. We have superb documentation and the legendary SUBNET where the Engineers are ready to help you on your way.

If you have any questions, be sure to reach out to us at hello@min.io!