Simplifying Multi-Tenant Object Storage as a Service with Kubernetes and MinIO Operator

This post was updated on 1.12.22.

Object storage as a service is the hottest concept in storage today. The reason is straightforward: object storage is the storage class of the cloud, and the ability to provision it seamlessly to applications or developers makes it immensely valuable to enterprises of any size.

The challenge is that object storage as a service has traditionally been very difficult to deliver: overly complex, hard to tune for performance, and prone to failure at scale. While systems like Kubernetes offer powerful tools for automating the deployment and management of these systems, the overall problem of complexity remains unsolved, as administrators must still invest significant time and effort to deploy even a small-scale object storage resource.

By combining Kubernetes with our new Operator and our Operator Console graphical user interface, MinIO is changing that dynamic in a big way. It should be stated upfront that MinIO has always obsessed over simplicity. It permeates everything we do, every design decision we make, every line of code we write.

Nonetheless, we saw even more opportunity for simplification. To do this, we created the MinIO Operator and the MinIO kubectl plugin to facilitate the deployment and management of MinIO Object Storage on Kubernetes. While the Operator commands were critical for users already proficient with Kubernetes, we also wanted to address a wider audience, so we created a graphical user interface for the Operator and incorporated it into our new MinIO Operator Console to enable anyone in the organization to create, deploy and manage object storage as a service.

Kubernetes is the platform of the Internet. Given its massive adoption, we chose to remain consistent with the Kubernetes way of doing things. This meant not using any specialized tools or services to set up MinIO.

The effect is that the MinIO Operator works on any Kubernetes distribution, be it OpenShift, vSphere 7.0u1, Rancher or stock upstream. Further, MinIO will work on any public cloud provider's managed Kubernetes, such as Amazon's EKS (Elastic Kubernetes Service), Google's GKE (Google Kubernetes Engine), Google's Anthos or Azure's AKS (Azure Kubernetes Service).

Pretty much all you need to get started on any distribution of Kubernetes is some storage device that can be presented to Kubernetes either via Local Persistent Volumes or with a CSI Driver.

Let’s start with a review of using MinIO with the kubectl plugin and a kustomize-based approach. You'll need to install the kubectl tool on a computer with network access to the Kubernetes cluster. See Install and Set Up kubectl for installation instructions. You may need to contact your Kubernetes administrator for assistance in configuring your kubectl installation for access to the Kubernetes cluster.

Installation

kubectl plugin

To install the MinIO Operator, we can use its kubectl plugin, which can be installed via krew with `kubectl krew install minio`.

After that, we can install the Operator by simply running `kubectl minio init`.

Installation with kustomize

Alternatively, for anyone who prefers a kustomize-based approach, our repository supports installing specific tags. You can also use it as the base for your own kustomization.yaml file.

Provisioning Object Storage

The term we use to represent a MinIO Object Storage cluster is Tenant. We chose it to communicate that, with the MinIO Operator, one can allocate multiple Tenants within the same Kubernetes cluster. Each tenant, in turn, can have different capacity (e.g., a small 500GB tenant vs. a 100TB tenant), resources (1000m CPU and 4Gi RAM vs. 4000m CPU and 16Gi RAM) and servers (4 pods vs. 16 pods), as well as separate configurations for Identity Providers, Encryption and versions.

Let's start by creating a small tenant with 16Ti capacity across 4 nodes. We will first create a namespace for the tenant, called `minio-tenant-1`, and then place the tenant there using the `kubectl minio tenant create` command.

Pay close attention to the storage class. Here we will use the cluster's default storage class, called standard, but you should use whatever storage class can accommodate 16Ti of capacity (provisioned as 1Ti persistent volumes).
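To see where the 1Ti figure comes from, here is a minimal sketch of the sizing arithmetic. It assumes the 16Ti tenant is spread over 16 volumes in total (4 per server on 4 servers); that volume count is inferred from the 1Ti persistent volume size mentioned above, and the function name is purely illustrative.

```python
GIB_PER_TIB = 1024  # work in GiB so the arithmetic stays in integers


def volume_size_gib(total_capacity_tib: int, servers: int, volumes_per_server: int) -> int:
    """Return the size in GiB of each persistent volume for a tenant."""
    total_volumes = servers * volumes_per_server
    total_gib = total_capacity_tib * GIB_PER_TIB
    if total_gib % total_volumes != 0:
        raise ValueError("capacity does not divide evenly across the volumes")
    return total_gib // total_volumes


# Assumed layout: 16Ti across 4 servers with 4 volumes each (16 volumes total).
per_volume = volume_size_gib(total_capacity_tib=16, servers=4, volumes_per_server=4)
print(f"{per_volume // GIB_PER_TIB}Ti per persistent volume")
```

Whatever storage class you pick needs to be able to satisfy that many persistent volume claims of that size.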

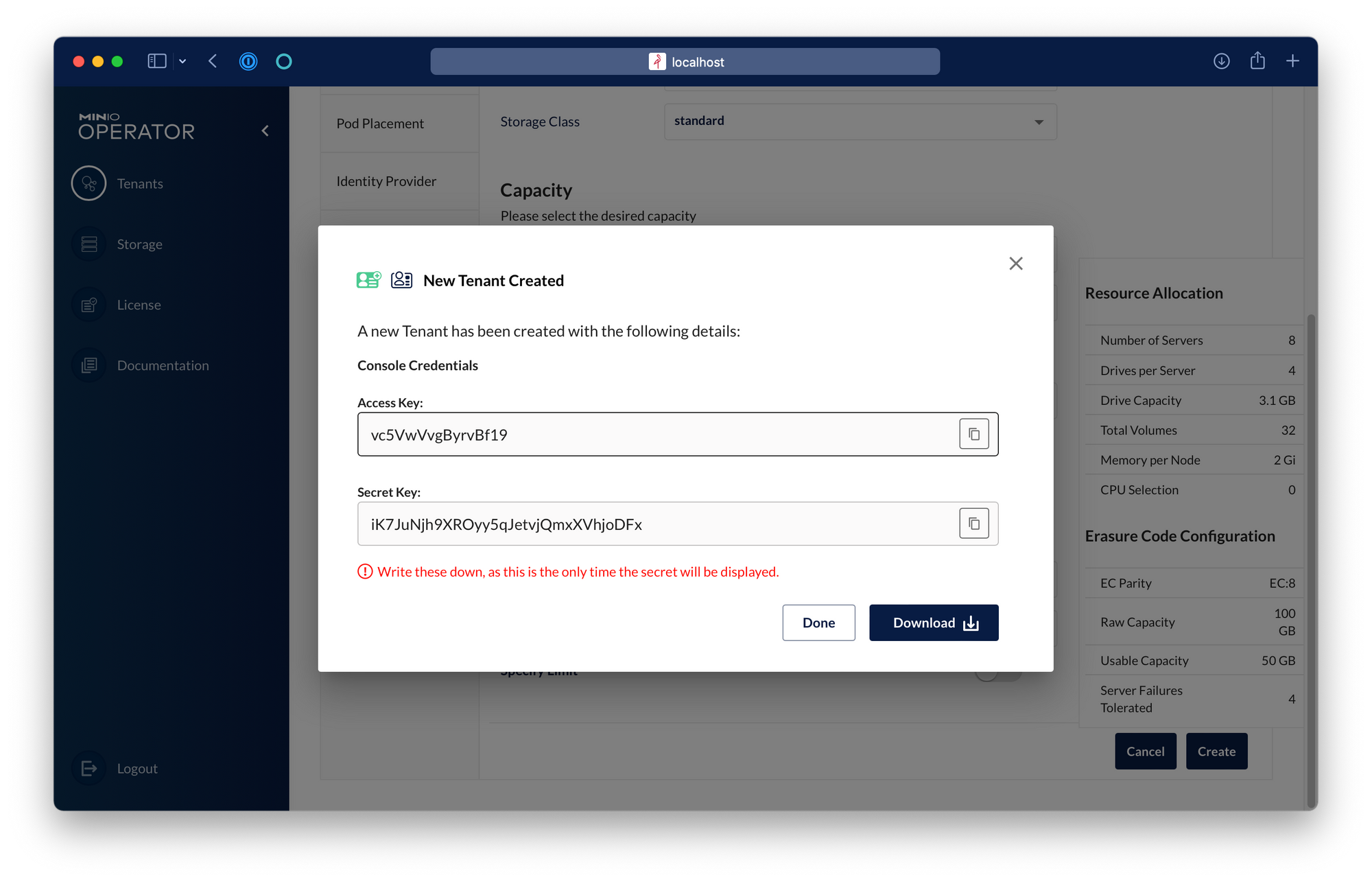

This command will output the credentials needed to connect to this tenant. MinIO only displays these credentials once, so make sure you copy them to a secure location.

A tenant usually takes a few minutes to provision while the MinIO Operator requests TLS certificates for MinIO and the Operator Console via Kubernetes Certificate Signing Requests. You can check the progress with `kubectl get tenants -n minio-tenant-1`.

This will tell you your tenant’s current state:

After a few minutes the tenant should report an Initialized state, indicating your Object Storage cluster is ready:

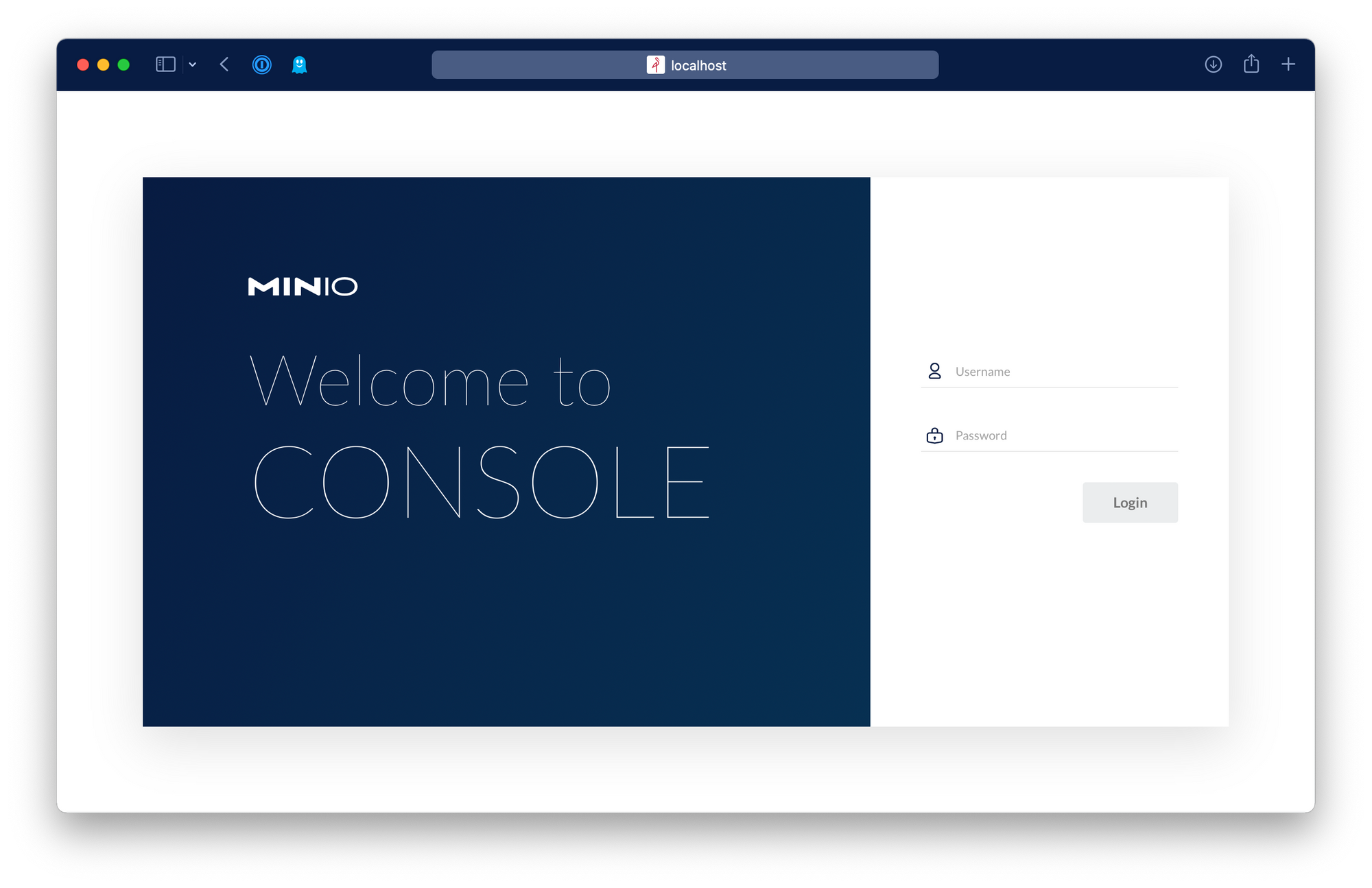
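If you want to script this readiness check rather than watch the output by hand, a minimal sketch could look like the following. It assumes you have already fetched the Tenant resource as JSON (for example with `kubectl get tenant minio-tenant-1 -n minio-tenant-1 -o json`) and that the state shown above is exposed under `status.currentState`; treat that field path and the helper names as assumptions to verify against your Operator version.

```python
import json


def tenant_state(tenant: dict) -> str:
    """Return the tenant's reported state, or "Unknown" if none is set yet."""
    # Assumption: the state column is backed by status.currentState.
    return tenant.get("status", {}).get("currentState", "Unknown")


def is_ready(tenant: dict) -> bool:
    """A tenant is considered ready once it reports the Initialized state."""
    return tenant_state(tenant) == "Initialized"


# Hand-written sample standing in for the JSON returned by kubectl.
sample = json.loads(
    '{"metadata": {"name": "minio-tenant-1"},'
    ' "status": {"currentState": "Initialized"}}'
)
print(is_ready(sample))
```

A wrapper script could call `is_ready` in a loop with a short sleep until the tenant reports Initialized.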

That's it: our Object Storage cluster is up and running, and we can access it via kubectl port-forward. To access MinIO's Console:

And then go to https://localhost:9443/ in your local browser.

Pretty easy, right?

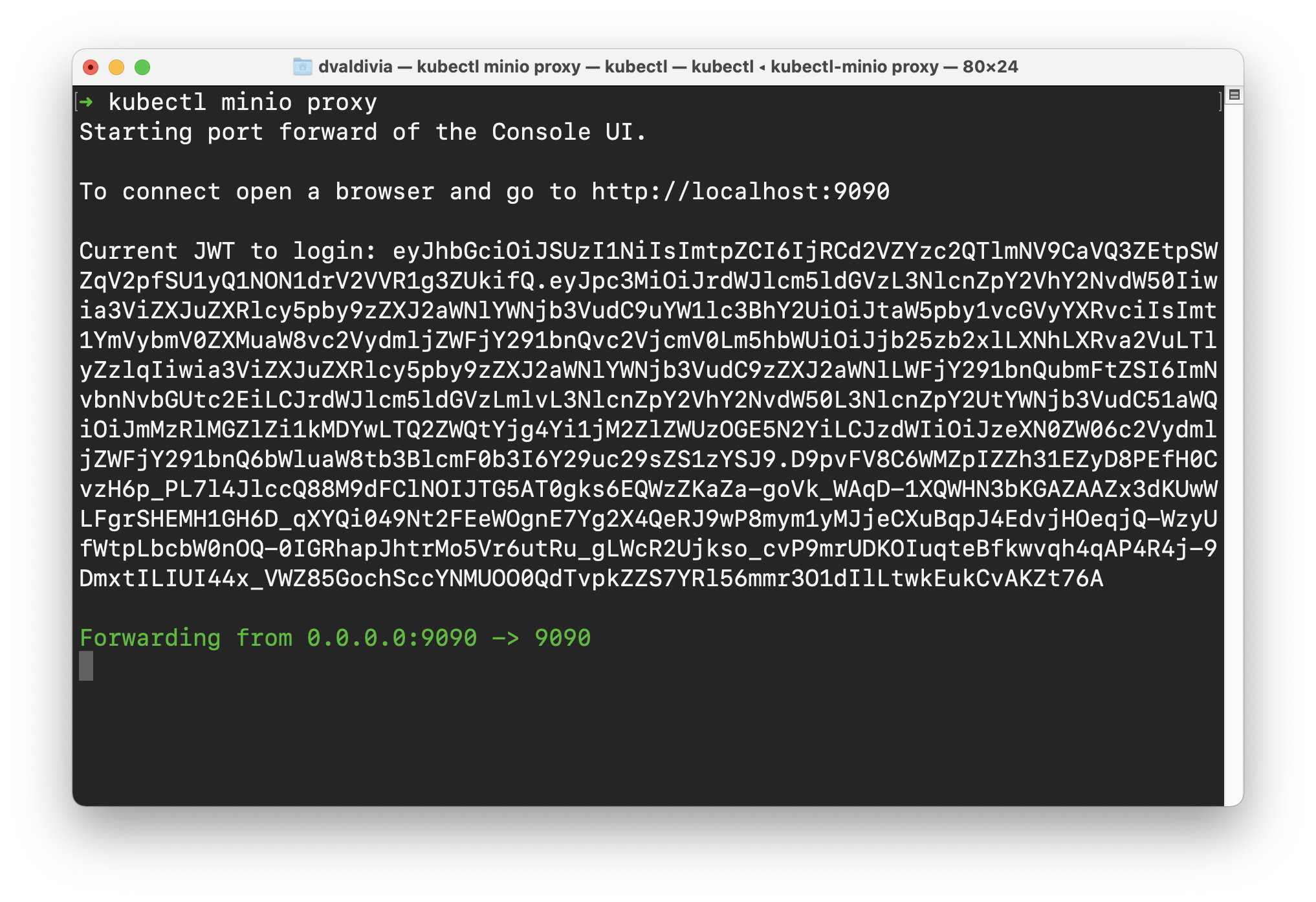

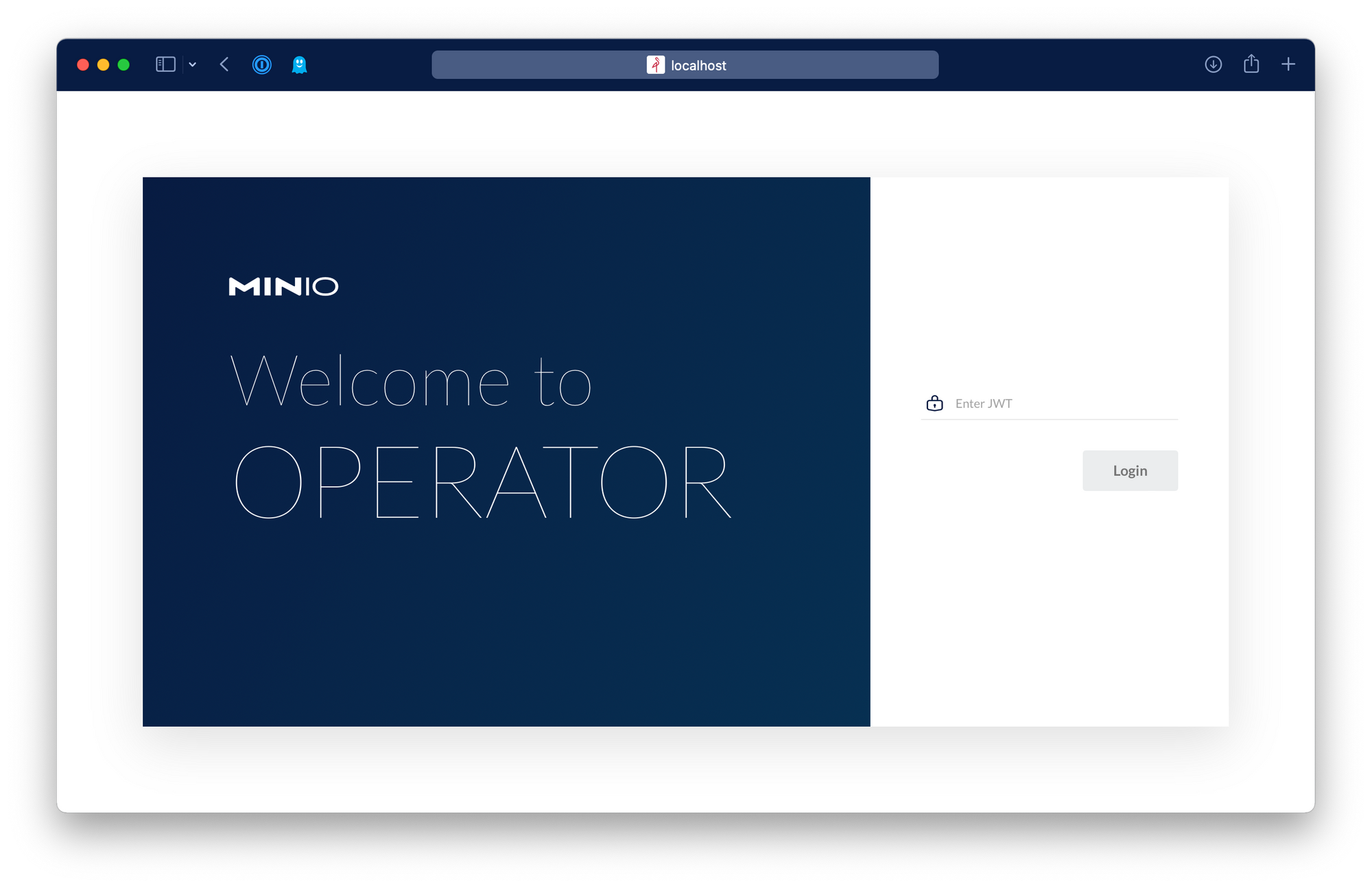

But now let's stop, rewind and remix to add a tenant using the MinIO Console for Operator (a.k.a. Operator UI). To access it we can simply run the `kubectl minio proxy` command. This will tell us how to access the Operator UI.

As you can see, the output tells you to open http://localhost:9090/login in your local browser, and it also gives you the JWT needed to log in to the Console UI.

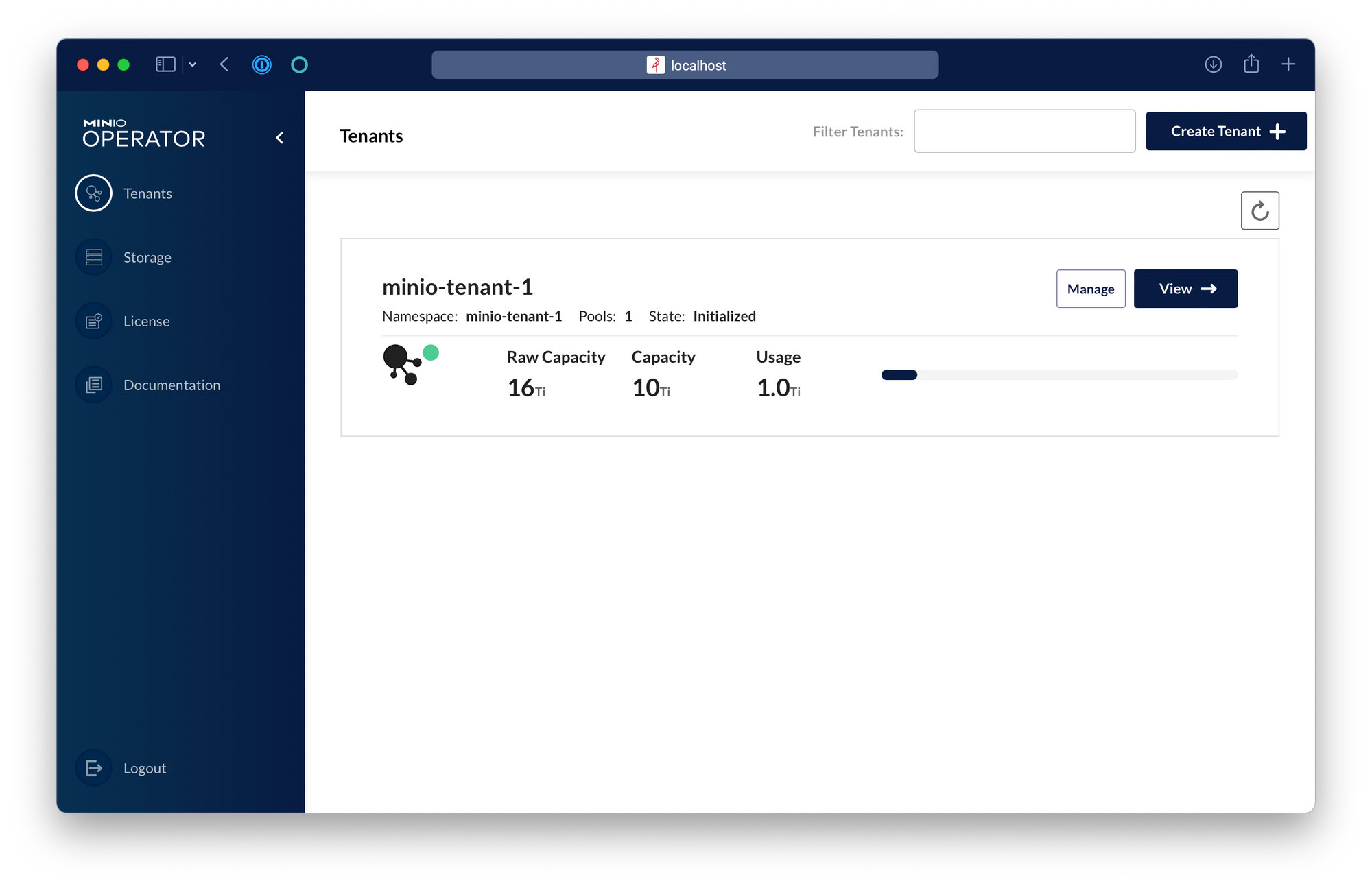

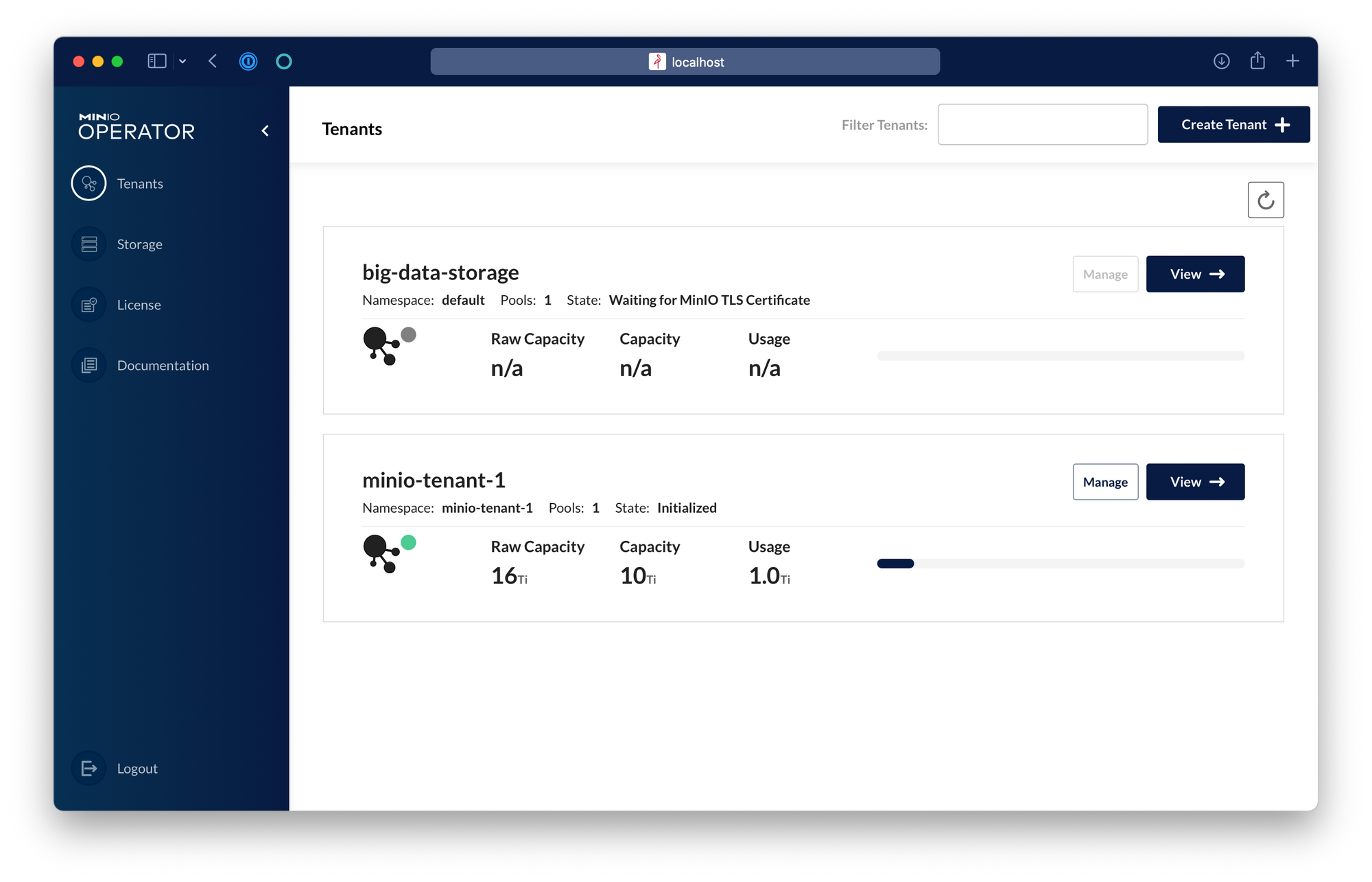

Inside the Operator UI we can see the tenant that we provisioned previously using the kubectl plugin.

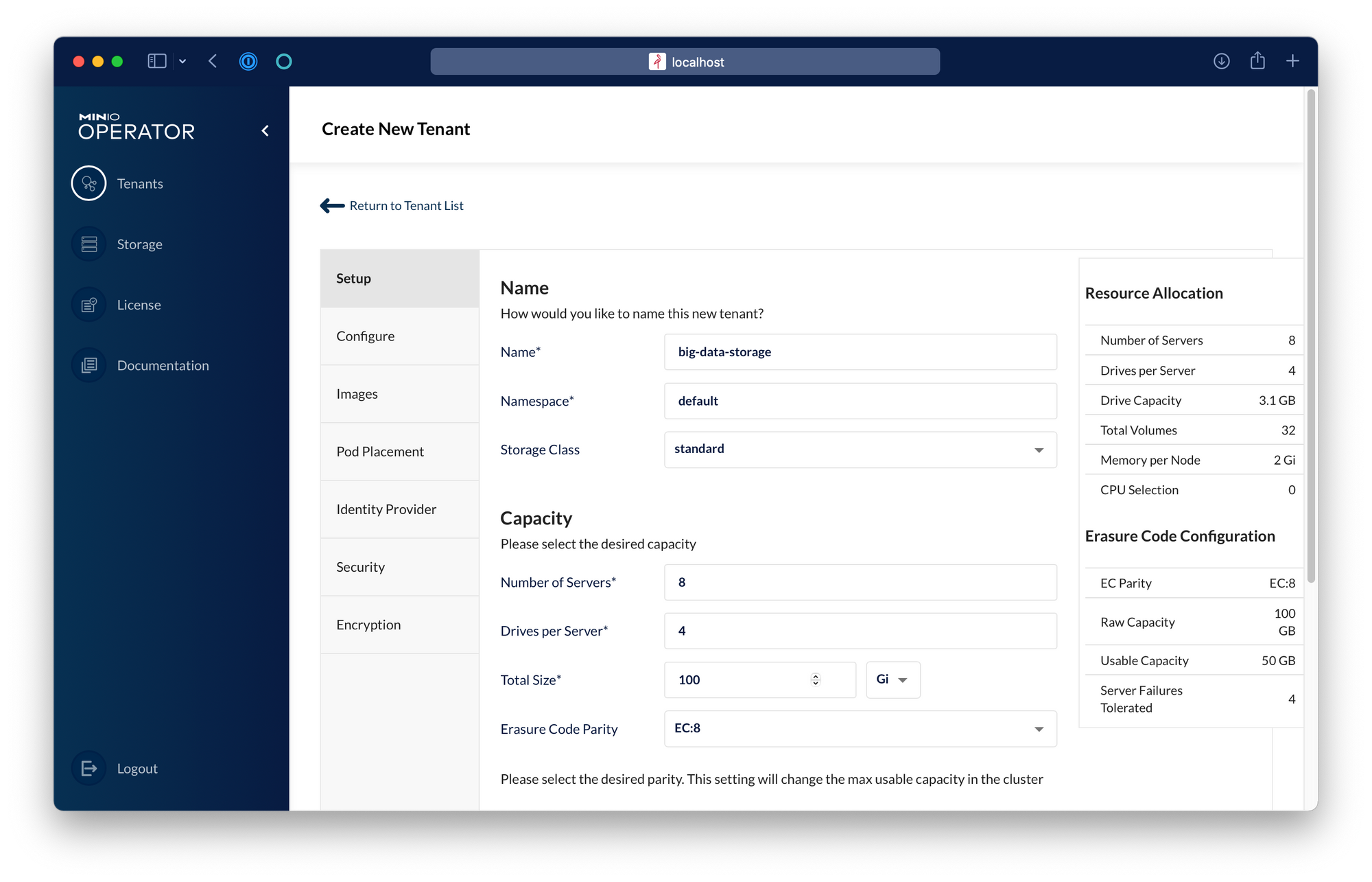

To add another one, hit Create Tenant. The first screen will ask a few configuration questions:

1) name the tenant

2) select a namespace

3) select a storage class

4) size your tenant

If you wish to configure an Identity Provider, TLS Certificates, Encryption or Resources for this tenant, I invite you to play with the sections on the left, where these configuration options reside.

In this screen you can size your tenant by number of servers, number of drives per server and desired raw capacity. Additionally, you get a preview of the usable capacity and the SLA guarantees for each erasure coding parity value you pick.
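To get a feel for how parity affects that preview, here is a rough sketch of the usable-capacity arithmetic. It uses a simplified model: all drives form a single erasure set, and usable capacity is the raw capacity scaled by the share of data (non-parity) drives. The function name and the validation bounds are illustrative assumptions, and the numbers the Operator UI shows may differ.

```python
def usable_capacity_tib(raw_tib: float, drives_per_set: int, parity: int) -> float:
    """Estimate usable capacity for a given erasure coding parity."""
    # Assumed bound: parity of at least 1 and at most half the drives in a set.
    if not 0 < parity <= drives_per_set // 2:
        raise ValueError("parity must be between 1 and half the drives in the set")
    data_drives = drives_per_set - parity
    return raw_tib * data_drives / drives_per_set


# Example: 16Ti raw capacity on 16 drives, at a few parity settings.
for parity in (2, 4, 8):
    usable = usable_capacity_tib(raw_tib=16, drives_per_set=16, parity=parity)
    print(f"EC:{parity} -> {usable:.1f}Ti usable")
```

Higher parity buys more tolerance for drive failures at the cost of usable space, which is the trade-off the preview is meant to surface.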

Now click Create. That is it.

Going back to the list of tenants, we can see our original CLI-provisioned tenant next to the tenant created using the Operator UI. These processes are equivalent. Which one you choose is only a matter of personal preference.

Finally, if you are curious about how to provision a MinIO tenant via good old YAML, you can get the definition of a tenant and get familiar with our Custom Resource Definition:

Which returns

Conclusion

We've gone to great lengths to simplify the deployment and management of MinIO on Kubernetes. It is simple to install the Operator and use it to create tenants, either from the command line or through the graphical user interface. This, however, is just a subset of the features of MinIO on Kubernetes. Each MinIO Tenant has the full feature set available with bare-metal deployments, so you can migrate your existing MinIO deployments to Kubernetes with full confidence in functionality.

I encourage you to try the MinIO Operator yourself and explore other cool features such as using the Prometheus Metrics and Audit Log, or securing your MinIO Tenant with an external Identity Provider such as LDAP/Active Directory or an OpenID provider.

No matter what approach you take, the ability to provision multi-tenant object storage as a service is now within the skill set of a wide range of IT administrators, developers and architects.