S3 Benchmark: MinIO on HDDs

High-performance object storage is one of the hotter topics in the enterprise today.

On the one hand, object storage has become an indispensable part of the enterprise storage strategy (public or private cloud) - carrying the vast, vast majority of the enterprise burden when measured in TBs or PBs.

On the other hand, object storage has traditionally served a relatively low utility role for the enterprise - that of data archive, the source of backups and the recovery point for disasters. This is a function of the performance characteristics of legacy object storage solutions.

This is changing. Object storage is becoming more performant, bringing new use cases and more utility along with it.

Amazon upped the game, providing a service that outperforms the appliance vendors, but enterprises seeking “Hadoop-like” performance from object storage need to look for software designed to deliver throughput.

This is where MinIO comes in. When we got started a little over four years ago, the idea was to architect an object storage system that was simple, secure, scalable and fast. Very, very fast.

So what is fast?

It is a good question and it comes with tradeoffs. Exotic hardware can cover up poorly written software but that solution has limitations and destroys the price/performance curve.

Fast is about software. Software that takes full advantage of the underlying hardware - whether it be commodity or exotic. But make no mistake, fast needs to work on readily available, commodity hardware.

We have recently published the first in a series of performance benchmarks. We start with off-the-shelf hardware, easily obtained networking and standard compute instances.

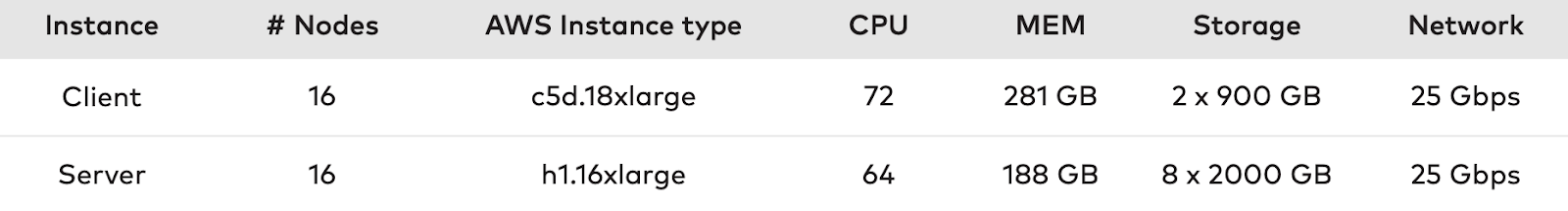

For this benchmark, we used AWS bare-metal, storage-optimized instances with local hard disk drives and 25 GbE networking.

We selected the S3-benchmark tool by wasabi-tech to run our tests. This tool conducts benchmark tests from a single client to a single endpoint. During our evaluation, this simple tool produced consistent and reproducible results over multiple runs.
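To make the single-client, single-endpoint idea concrete, here is a minimal sketch of how such a throughput measurement can be structured. It is not the S3-benchmark tool's actual implementation. The names `measure_throughput`, `run_benchmark` and `InMemoryStore` are hypothetical, and the in-memory store is only a stand-in so the sketch runs without a real S3 endpoint.

```python
import time
from typing import Callable, Dict


def measure_throughput(op: Callable[[int], int], count: int) -> float:
    """Call op(i) for i in range(count) and return bytes per second.

    op must return the number of bytes it transferred.
    """
    start = time.perf_counter()
    total_bytes = 0
    for i in range(count):
        total_bytes += op(i)
    elapsed = time.perf_counter() - start
    return total_bytes / elapsed if elapsed > 0 else float("inf")


class InMemoryStore:
    """Hypothetical stand-in for an S3 endpoint (assumption, not a real client)."""

    def __init__(self) -> None:
        self.objects: Dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> int:
        self.objects[key] = data
        return len(data)

    def get(self, key: str) -> int:
        return len(self.objects[key])


def run_benchmark(store: InMemoryStore, object_size: int = 1 << 20,
                  count: int = 16) -> Dict[str, float]:
    """Write `count` objects, then read them back, timing each phase."""
    payload = b"\0" * object_size
    write_bps = measure_throughput(
        lambda i: store.put(f"obj-{i}", payload), count)
    read_bps = measure_throughput(
        lambda i: store.get(f"obj-{i}"), count)
    return {"write_MBps": write_bps / 1e6, "read_MBps": read_bps / 1e6}


if __name__ == "__main__":
    print(run_benchmark(InMemoryStore()))
```

Against a real deployment, the `put` and `get` methods would wrap calls to an S3 client pointed at the endpoint under test. Running the same measurement several times is what lets you judge whether results are consistent.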

The results were superb.

MinIO achieved 10.81 GB/sec read performance and 8.57 GB/sec write performance using the commodity setup listed above. In effect, the MinIO Object Server was limited by the throughput of the hard disk drives, which is much lower than that of the network. The 25 GbE network was not fully saturated, so additional performance was still available there.

Given that the bandwidth of the drives was entirely utilized during these tests, higher throughput would be expected if additional drives were available.
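The reasoning above can be checked with simple arithmetic: convert the link speed to bytes and compare it with the measured throughput per node. The sketch below assumes a node count of 8, which is purely a placeholder since this post does not state the cluster size. It also ignores protocol overhead and any traffic between nodes, so treat the result as a rough bound, not a measurement.

```python
from typing import Dict


def gbe_to_gbytes_per_sec(gigabits_per_sec: float) -> float:
    """Convert a link speed in Gb/s to GB/s (8 bits per byte, no overhead)."""
    return gigabits_per_sec / 8.0


def network_headroom(measured_gbytes_per_sec: float, node_count: int,
                     link_gbits_per_sec: float = 25.0) -> Dict[str, float]:
    """Compare aggregate measured throughput with per-node link capacity."""
    per_node_link = gbe_to_gbytes_per_sec(link_gbits_per_sec)
    per_node_measured = measured_gbytes_per_sec / node_count
    return {
        "per_node_link_GBps": per_node_link,
        "per_node_measured_GBps": per_node_measured,
        "link_utilization": per_node_measured / per_node_link,
    }


if __name__ == "__main__":
    ASSUMED_NODE_COUNT = 8  # hypothetical; not stated in this post
    for label, measured in (("read", 10.81), ("write", 8.57)):
        stats = network_headroom(measured, ASSUMED_NODE_COUNT)
        print(label, stats)
```

A utilization well below 1.0 under the assumed node count is consistent with the drives, not the network, being the bottleneck.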

Production deployments, however, often demand encryption and that comes with some overhead. MinIO’s highly optimized implementations of encryption algorithms leverage SIMD - minimizing the overhead. Indeed, the difference is negligible or “in the noise” in our testing - allowing enterprises to run encryption as the default setup.

The details matter when it comes to benchmarks, and we have published a paper on how these results were produced so that they can be replicated by third parties.

The takeaway for enterprises, however, is pretty clear. At this throughput, a whole new suite of workloads comes into play: Spark, Presto and TensorFlow, to name a few. Further, the disaggregated architecture provides significant flexibility to tune your hardware. We have a similar paper on NVMe here.

If you would like to dive deeper into the results, drop us a note at hello@min.io or hit the form at the bottom of the page.