Scaling up MinIO Internal Connectivity

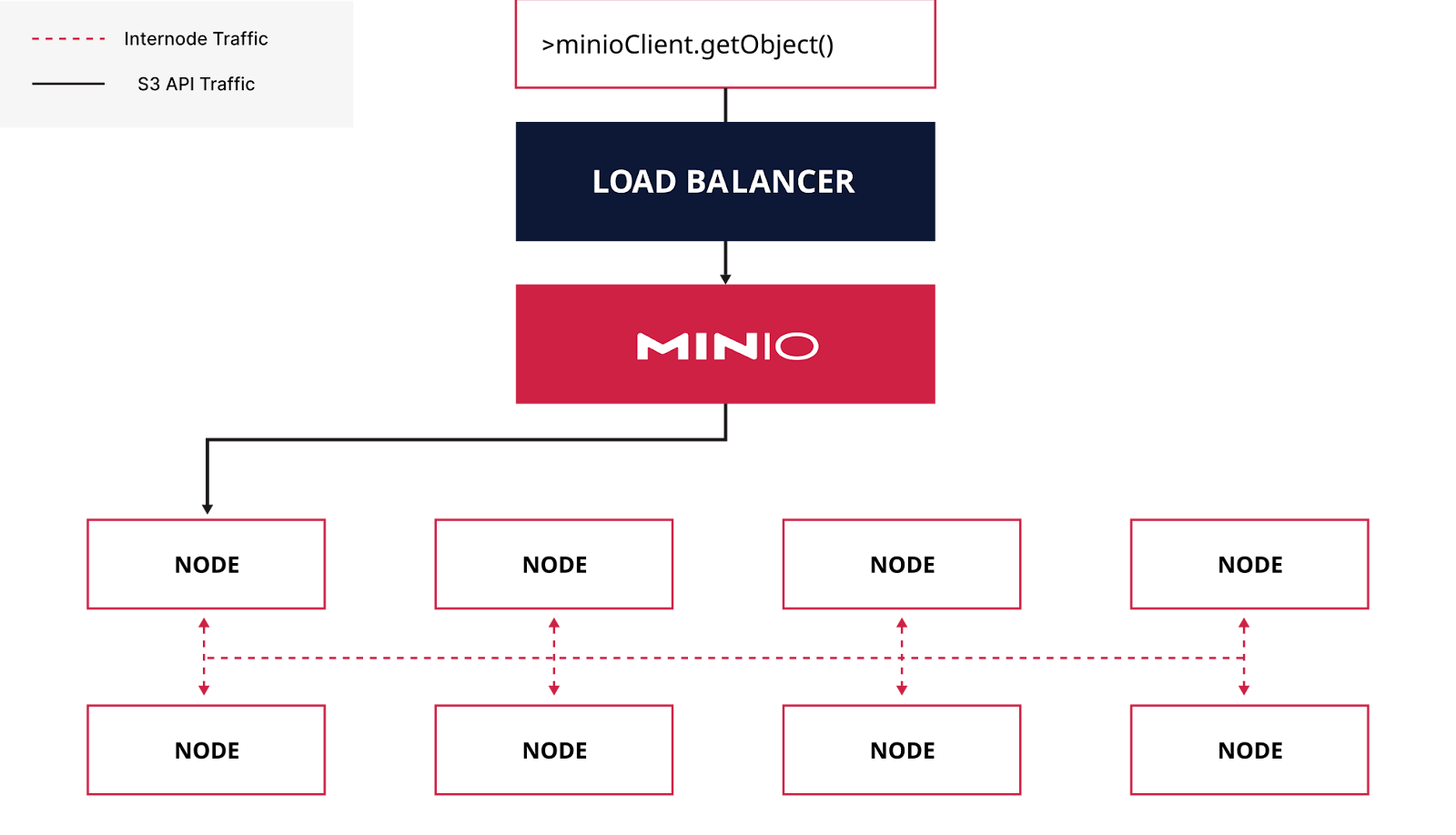

A MinIO cluster operates as a uniform cluster. This means that any request must be seamlessly handled by any server. As a consequence, servers need to coordinate between themselves. This has so far been handled with traditional HTTP RPC requests - and this has served us well.

Whenever server A would like to call server B, an HTTP request would be made. We utilize HTTP keep-alive so we don’t have to create raw connections for every request.

We have experimented with HTTP/2, but we had to drop it due to unreliable handling of hanging requests, which led to a big connection buildup and unresponsive requests.

Scaling HTTP

However, with 100s of servers per cluster (which is increasingly typical), we were beginning to see some scaling issues with this approach.

When there are N servers we can expect each server to want to talk to N-1 servers. That means that any server in a cluster with 100 servers would need to talk to 99 servers, meaning 99 inbound and 99 outbound connections. This is by itself not an issue and it will work fine.

However, issues can begin to arise when the cluster comes under high load. Not all requests are instantly served, and things like listing operations will typically take a while to complete - either because disks take time to complete requests or because the server is waiting for clients to absorb the data.

Having these long-running requests would mean that a lot of connections would be kept open, meaning that new calls would need to open new connections. Similar effects could be seen with big bursts of incoming requests, where a high number of connections would be created to serve these.

With 100s of servers, this would in a few cases lead to undesirable effects where the systems didn’t respond too well under high load.

Two-way communication

To preempt this growing into a future issue, we decided to explore options that would allow MinIO to grow seamlessly. Having each server connect to all other servers wasn’t in itself an issue - the issue arises when each server has multiple long-running connections to the same servers.

We identified two cases we wanted to solve. The first is small requests with small payloads. Typically these are rather sensitive to latency, and a single request taking longer will typically result in the original request taking longer. The second is long-running requests with small, streaming payloads, which we did not want to hold a connection open for the duration of the call.

HTTP requests are technically two-way, meaning you send a request payload and receive a response. However, for regular HTTP requests, once you start sending back a response you can no longer receive anything on the connection. HTTP/2 does allow for two-way communication, but we didn’t want to go down that route only to abandon it again for the reasons stated above.

We also considered a regular TCP socket as well as stateless UDP packets. Those would have been viable options for a new product, but we discarded them as they would require network configuration changes for many installs and wouldn’t provide a smooth transition.

So for the communication we chose WebSockets, since they provide A) two-way communication, B) binary messages, C) clean integration into existing connectivity, and D) good performance.

Since connections are two-way, we use a single connection between any two servers and only distinguish between server and client on individual messages.
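To make the difference concrete, here is a small back-of-the-envelope sketch in Go. The function names and the figure of 20 in-flight calls per peer are hypothetical and only serve to illustrate the arithmetic; they are not taken from the MinIO code base.

```go
package main

import "fmt"

// httpConns estimates the outbound connections one server holds open when
// every in-flight call to a peer occupies its own keep-alive connection.
// inFlightPerPeer is a hypothetical load figure.
func httpConns(servers, inFlightPerPeer int) int {
	return (servers - 1) * inFlightPerPeer
}

// sharedConns is the number of connections one server holds when a single
// two-way connection is shared with each peer.
func sharedConns(servers int) int {
	return servers - 1
}

// clusterLinks is the total number of server pairs, and therefore the total
// number of shared connections in the whole cluster.
func clusterLinks(servers int) int {
	return servers * (servers - 1) / 2
}

func main() {
	const n = 100
	fmt.Println(httpConns(n, 20)) // 1980
	fmt.Println(sharedConns(n))   // 99
	fmt.Println(clusterLinks(n))  // 4950
}
```

With a shared connection the per-server count depends only on the cluster size, not on how many calls happen to be in flight.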

Features

In the initial implementation, we support two types of operations. The connection is authenticated when established, so individual requests do not have authentication. Connections are continuously monitored, so hanging connections can be quickly identified and reestablished.

Requests

The first type is simple request -> response calls that provide all data as part of the call and receive all data as a single reply. This is aimed at small, low-latency round trips.

This is optimized for many small messages, and we aim to maximize the number of messages per second while keeping latency low. There is no cancellation propagation, only a timeout specified by the caller. If the caller is no longer interested in the result, the response is simply ignored.

Streams

Streams provide fully controlled two-way communication. Streams will contain an initial payload and can provide both input and output streams of data. The streams are congestion-controlled and provide remote cancellation along with timeouts.

This is meant for low-throughput streams that would otherwise keep connections open with little traffic while the request is running. Streams perform regular checks on whether the remote is still running to ensure that requests will not hang while waiting for a response that will never show up.

Messages

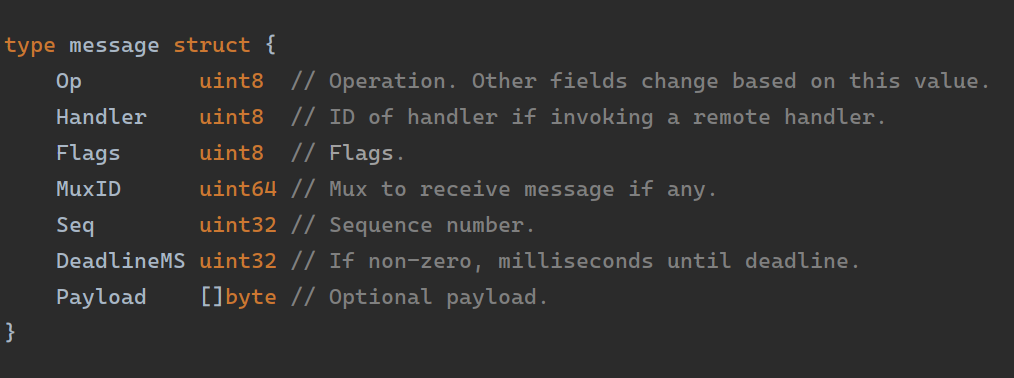

We provide lightweight messages with this base structure:

We use MessagePack as a lightweight and simple serializer. The “Op” defines the meaning of fields and the routing of messages.

Messages can optionally have a checksum, which we enable for connections without TLS. We allow several messages to be merged into a single message to reduce the number of individual writes when several messages are queued up.
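As an illustration of merging queued messages into one write with an optional checksum, here is a minimal sketch. It deliberately swaps in length-prefixed framing from the Go standard library instead of MessagePack to stay self-contained, and it assumes CRC32 as the checksum since the algorithm is not named here. The `merge` and `split` functions are hypothetical.

```go
package main

import (
	"encoding/binary"
	"errors"
	"fmt"
	"hash/crc32"
)

var errCorrupt = errors.New("corrupt frame")

// merge packs all queued messages into one frame so they can go out in a
// single write. Each message is prefixed with its length as a uvarint. With
// withChecksum set, a CRC32 of the frame body is appended (an assumption).
func merge(queued [][]byte, withChecksum bool) []byte {
	var frame []byte
	var lenBuf [binary.MaxVarintLen64]byte
	for _, m := range queued {
		n := binary.PutUvarint(lenBuf[:], uint64(len(m)))
		frame = append(frame, lenBuf[:n]...)
		frame = append(frame, m...)
	}
	if withChecksum {
		var sum [4]byte
		binary.BigEndian.PutUint32(sum[:], crc32.ChecksumIEEE(frame))
		frame = append(frame, sum[:]...)
	}
	return frame
}

// split verifies the optional checksum and returns the individual messages.
func split(frame []byte, withChecksum bool) ([][]byte, error) {
	if withChecksum {
		if len(frame) < 4 {
			return nil, errCorrupt
		}
		body, sum := frame[:len(frame)-4], frame[len(frame)-4:]
		if crc32.ChecksumIEEE(body) != binary.BigEndian.Uint32(sum) {
			return nil, errCorrupt
		}
		frame = body
	}
	var msgs [][]byte
	for len(frame) > 0 {
		size, n := binary.Uvarint(frame)
		if n <= 0 || size > uint64(len(frame)-n) {
			return nil, errCorrupt
		}
		msgs = append(msgs, frame[n:n+int(size)])
		frame = frame[n+int(size):]
	}
	return msgs, nil
}

func main() {
	queued := [][]byte{[]byte("lock"), []byte("unlock"), []byte("ping")}
	frame := merge(queued, true)

	msgs, err := split(frame, true)
	fmt.Println(len(msgs), err)

	// Flipping a single bit is caught by the checksum.
	frame[0] ^= 0x01
	_, err = split(frame, true)
	fmt.Println(err)
}
```

Over TLS the transport already protects integrity, so the checksum can be skipped by passing `false` on both sides.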

Performance

While performance was never a primary goal of this change, we wanted to make sure that it would perform reasonably well and not become a bottleneck when deployed.

We first benchmark single request -> response calls with a 512-byte request payload, a 512-byte response, and a varying number of concurrent callers. Tests were run on a 16-core/32-thread CPU. First, we start with 2 servers:

With 2 “servers”, we reach the limit of the single connection between them at approximately 1 million 2x512-byte messages per second. At this point, latency will keep increasing since we have reached the limit of the connection. This indicates we have plenty of throughput between any two servers.

For reference, the pure HTTP REST API maxed out at around 20,000 requests per second with a similar setup and no authentication or middleware.

Let’s observe scaling on multiple servers. We benchmark performance with 32 virtual servers:

With separate servers, we appear to reach a limit of about 6M messages per second for a single server.

Let’s also benchmark streams. We benchmark the most common scenario: a request with a 512-byte payload and 10 individual responses of 512 bytes each. We limit the buffer of each request to 1 message, so that latency due to flow control is also included.

We count each response as one operation.

Compared to single requests, the added flow control adds a small bit of overhead. But overall, even though streams are more complex, the difference from single-shot requests is minimal.

Looking at 32 servers, we see the 1-message buffering allows for better throughput.

Conclusion & Future Plans

The rewrite of the internal connection was released in RELEASE.2023-12-02T10-51-33Z and is now used for all locking operations, as well as a dozen storage-related calls. We are happy to see that so far no regressions related to this rather large change have been reported.

We are confident this will help MinIO scale even further in the future by preemptively removing a potential scaling bottleneck. We are happy to see that our solution provides almost 2 orders of magnitude higher message throughput.

We will continue to add more remote calls to the new connectivity as it makes sense. We do not expect to move all remote calls, as separate connections still make sense for transferring large amounts of data like file streams.