Fast and Efficient Search with OpenSearch and MinIO

In this post we look at how search, and specifically OpenSearch can help us identify patterns or see trends in our ever growing data. For example if you were looking at operational data, if you have a service that misbehaves seemingly at random, you would want to go back as far as possible to identify a pattern and uncover the cause. This is not just for applications, but also a plethora of logs from every device imaginable that need to be kept around for a reasonable amount of time for compliance and troubleshooting purposes. However, storing several months/years worth of searchable data on fast storage (such as NVMe) can take up a lot of costly drive space. Generally data from the last few weeks is the most searched so that is stored on the fastest hardware. But as the data gets older, it becomes less useful for immediate troubleshooting and doesn’t need to be on expensive hardware - even if it still holds a secret or two.

The question becomes, how do we search this archival data quickly without sacrificing performance. Is it possible to have the best of both worlds?

Cue in OpenSearch; a Apache Lucene based distributed search and analytics engine. You can perform full-text searches on data once it is added to the OpenSearch indices. Any application that requires search has a use case with OpenSearch, for example, you could use it to build a search feature into your application, DevOps engineers can put OpenSearch to work as a log analytics engine and backend engineers can put trace data via collectors such as OpenTelemetry to get better insight into app performance. With built-in, feature-rich search and visualization, you can pinpoint infrastructure issues such as running out of disk space, getting error status codes and more and surface them in dashboards before they wreak havoc on operations.

However, as log data grows, it is sometimes not practical to keep all of it on one node or even a single cluster. We like OpenSearch because it has a distributed design, not unlike MinIO, which stores your data and processes requests in parallel. MinIO is very simple to get up and running with just a simple binary. You can start off on your laptop with a single node single drive configuration and grow that into production with multi-drive, multi-node and multi-site – with the same feature set that you’ve come to expect in Enterprise grade storage software. Not only can you build a distributed OpenSearch cluster but you can also subdivide the responsibilities of the various nodes in the cluster as it grows. You can have nodes with large disks to store data, nodes with a lot of RAM for indexing and nodes with a lot of CPU but less disk to manage the state of the cluster.

As your data grows, you can tier off old/archival data to a MinIO bucket so SSD/NVMe storage can be kept for the latest data being added to the cluster. Moreover, you can search these snapshots directly while they are stored in the MinIO bucket. Logically, when moving snapshots it’s important to consider that accessing data from remote drives is slower than accessing it from local drives, so generally higher latencies on search queries are expected, but the increased storage efficiency is frequently worth it. With MinIO, you are getting the fastest network object storage available. Distributed MinIO on a high speed network can outperform local storage -- MinIO has created a comprehensive blueprint for data infrastructure to support exascale AI and other large scale data lake workloads. It is called the MinIO DataPod. Why? Because exascale data is the reality that is common today in today's enterprise. Unlike other, slower object storage, MinIO can seamlessly access these snapshots as if they were local on the OpenSearch cluster, saving valuable time, network bandwidth and team effort when searching for insights in siphoned log data in a matter of seconds depending on the size of the result. Compare this to local restore that takes hours to perform the operation before even being able to query the data.

OpenSearch is more efficient when used with storage tiering, which decreases the total cost of ownership for it, plus you get the added benefits of writing data to MinIO that is immutable, versioned and protected by erasure coding. In addition, using OpenSearch tiering with MinIO object storage makes data files available to other cloud native machine learning and analytics applications.

Infrastructure

Let's set up OpenSearch and MinIO using Docker and go through some of the features to showcase their capabilities.

OpenSearch

We’ll create a custom Docker image because we’ll build it with a custom plugin to connect to our MinIO object store.

Build the custom Docker image

Run the following Docker command to spin up a container using the image we downloaded earlier

Curl to localhost port 9200 with the default admin credentials to verify that OpenSearch is running

You should see an output similar to below

We can check the container status as well

MinIO

We’ll bring up a single MinIO node with 4 disks. MinIO runs anywhere and always makes full use of underlying hardware. In this overview we will use containers created using Docker.

For the 4 disks, create the directories on the host for minio:

Launch the Docker container with the following specifications for the MinIO node:

The above will launch a MinIO service in Docker with the console port listening on 20091 on the host. It will also mount the local directories we created as volumes in the container and this is where MinIO will store its data. You can access your MinIO service via http://localhost:20091.

If you see 4 Online, that means you’ve successfully set up the Minio node with 4 drives.

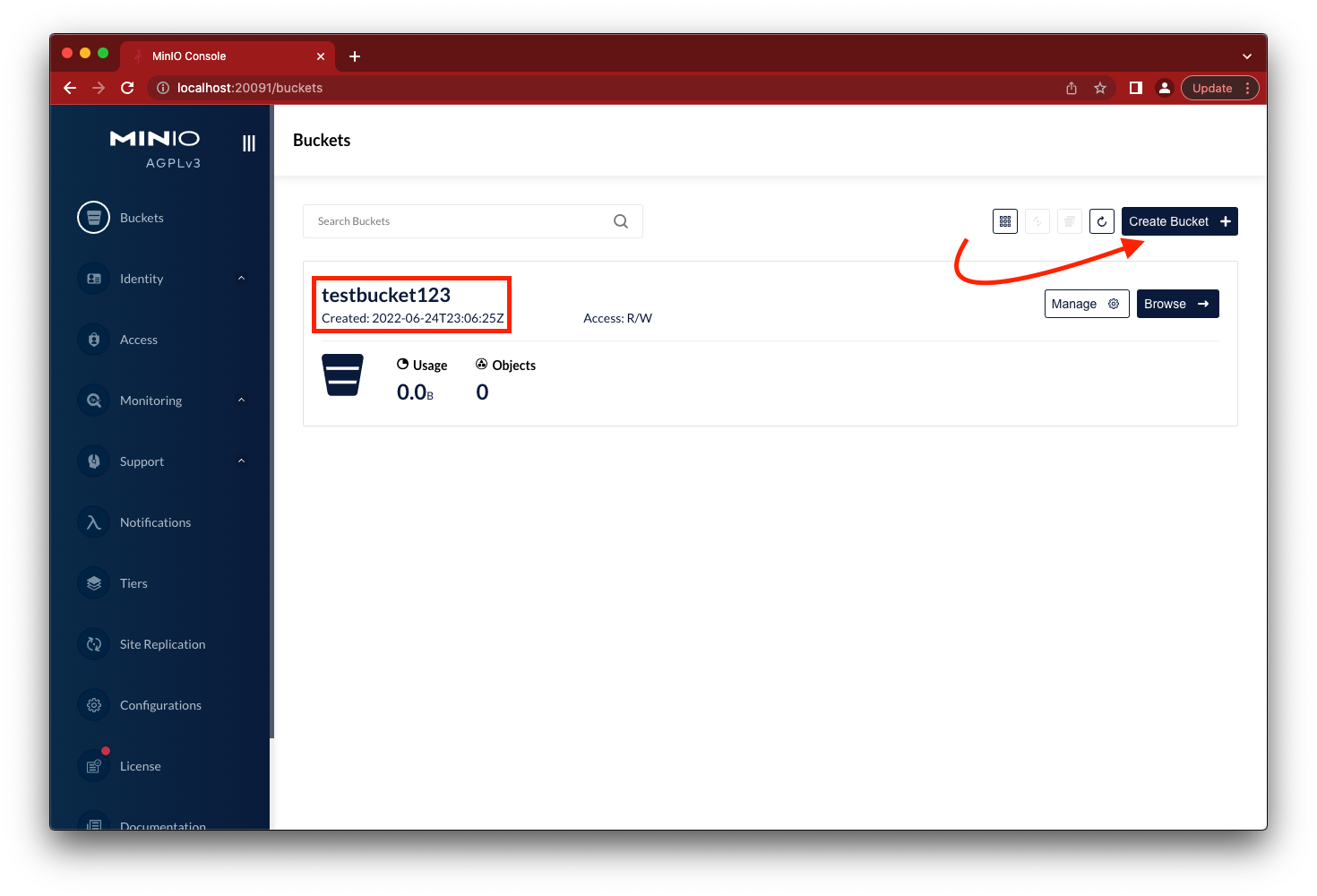

Go to the browser and load the MinIO console located at http://localhost:20091. Log in using minioadmin and minioadmin for username and password respectively. Click on the Create Bucket button and create testbucket123.

While you’re in the MinIO Console, note that there is a point-in-time metrics dashboard that you can use for quick and easy monitoring of your object storage cluster.

Set Up MinIO Repository

In order to set up a searchable snapshot index, you need to set up a few prerequisites and configuration to your OpenSearch cluster. We’ll go through them in detail here.

In opensearch.yaml create a node and define the node role

Lets register the MinIO bucket using the _snapshot API

Now that we have the repository, let’s go ahead and create a searchable snapshot.

Searchable Snapshot

In order to make a snapshot, we need to make an API call using the repository we created earlier.

Let’s check the status of the snapshot

Let’s see this snapshot on the MinIO side as well

As you can see above, there is a copy of the snapshot we took using the OpenSearch API in the MinIO bucket.

Now you must be wondering, “we’ve taken the snapshot, but how do we restore it so we can analyze and search against the backed up indices?” Instead of restoring the entire snapshot in the traditional sense, although that is very much possible with OpenSearch, we’ll show you how you can more efficiently search the snapshot while it's still stored on MinIO.

The most important configuration change we need to make is to set storage_type to remote_snapshot. This setting tells OpenSearch whether the snapshot will be restored locally to be searched or if it will be searched remotely while stored on MinIO.

Let’s list all the indices to see if the remote_snapshot type exists

As you can see it's pretty simple to get MinIO configured as an OpenSearch remote repository.

Take Back Your Logs

By leveraging MinIO as a backend for OpenSearch, you can not only take searchable snapshots, but also create non-searchable (also called ‘local’) snapshots and use them as regular backups that can be restored to other clusters for disaster recovery or be enriched with additional data for further analysis.

All that being said, we need to be aware of certain potential pitfalls of using a remote repository for a snapshot location. The access speeds are more or less fixed by the speed and performance of MinIO, which is typically gated by network bandwidth. Please be aware that in a public cloud such as AWS S3, you may be also charged on a per-request basis for retrieval, so users should closely monitor any costs incurred. Searching remote data can sometimes impact the performance of other queries running on the same node. It is generally recommended that engineers take advantage of node roles and create dedicated nodes with the search role for performance-critical applications.

If you have any questions on how to use OpenSearch with MinIO be sure to reach out to us on Slack!