Building on the Lessons from Kafka: How AutoMQ and MinIO Solve Cost and Elasticity Challenges

co-author: Kaiming Wan

Apache Kafka is the de facto standard for data streaming thanks to its sound design and powerful capabilities. It defined the architecture of modern streaming, and its distributed log abstraction brought new capabilities to real-time data stream processing and analysis. Kafka's success lies in meeting the demands of high-throughput, low-latency data processing, and over the years it has built an extremely rich vendor ecosystem that is widely used in production.

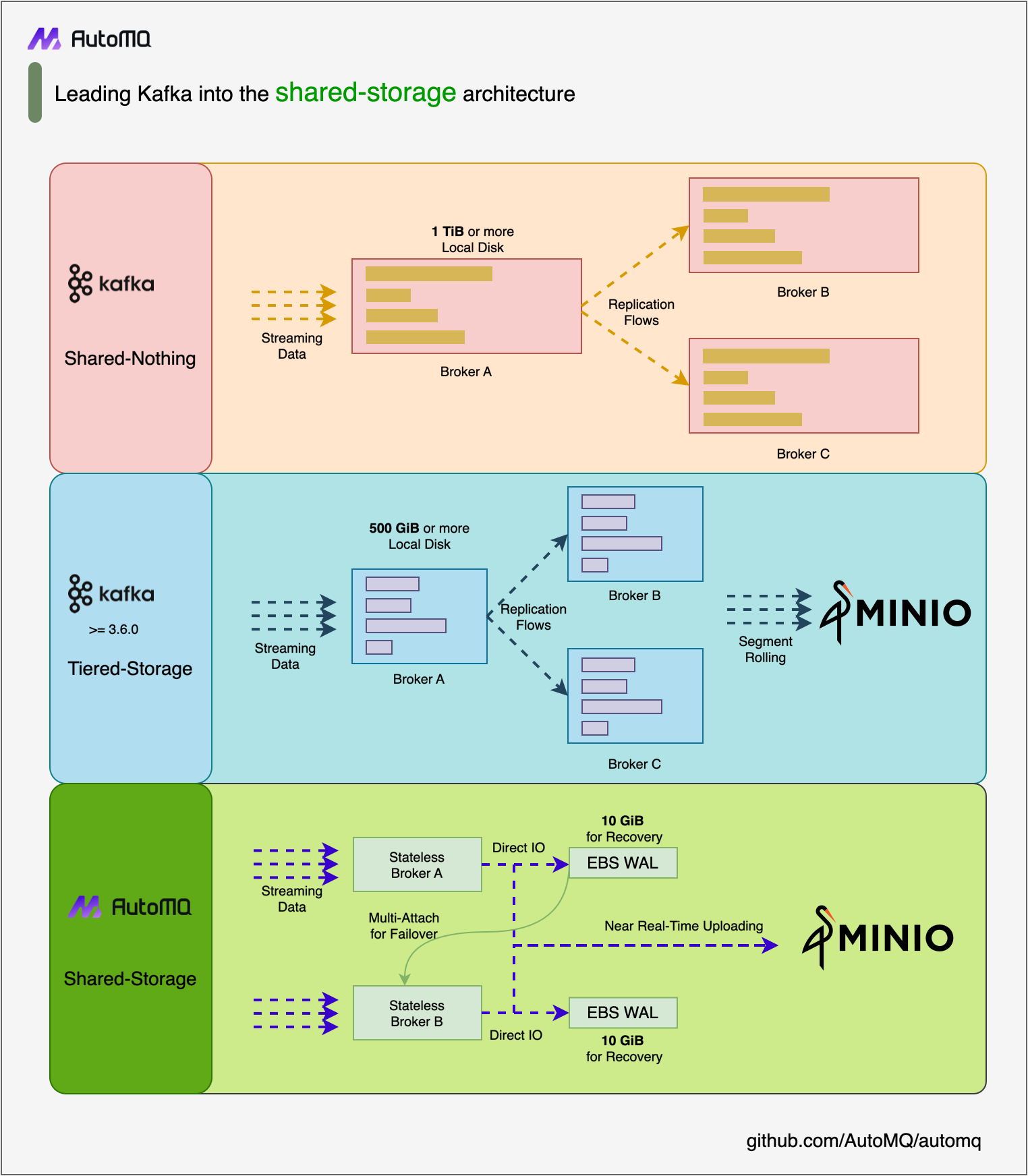

However, as cloud computing and cloud-native technologies have evolved, Kafka faces growing challenges. Its storage architecture, in which each broker keeps partition data on local disks, struggles to meet the cost-efficiency and elasticity demands of the cloud, prompting a reevaluation of Kafka's storage model. Tiered storage was once seen as a possible solution: it aims to reduce costs and extend data retention by layering storage across different media. Yet this approach has not fully addressed Kafka's pain points and has instead increased the system's complexity and operational difficulty.

AutoMQ is an open-source fork of Kafka that replaces Kafka's storage layer with a shared storage architecture built on object storage, while reusing 100% of Kafka's compute layer code, which keeps it fully compatible with the Kafka API protocol and ecosystem. This shared storage architecture brings technical and cost advantages and resolves Kafka's original cost and elasticity issues without sacrificing latency.

Thanks to MinIO's full compatibility with the AWS S3 API, you can deploy an AutoMQ cluster even in a private data center and get a streaming system that is fully compatible with the Kafka API while offering better cost efficiency, extreme scalability, and single-digit millisecond latency. This article walks you through deploying an AutoMQ cluster that stores its data on MinIO.

Use Cases

AutoMQ's disaggregated storage architecture supports a range of use cases in modern data streaming and processing environments:

- Cost-Efficient Data Streaming: AutoMQ's use of object storage significantly reduces the cost of storing large volumes of streaming data, making it ideal for organizations with budget constraints or those seeking to optimize their spending.

- Scalability for Growing Data Needs: With its ability to scale out effortlessly, AutoMQ can handle increasing data loads without compromising performance, ensuring that organizations can grow their data streams as needed.

- Low-Latency Applications: AutoMQ achieves single-digit millisecond latency, making it suitable for applications that require real-time data processing and analysis, such as financial transactions, IoT data streams, and online gaming.

- Hybrid, Multi-Cloud and Private Cloud: Unlike other alternatives to Kafka, AutoMQ's disaggregated storage model allows it to be deployed in private data centers, supporting fully on-prem, hybrid, and multi-cloud environments. This flexibility is crucial for organizations that need to maintain data sovereignty or have specific regulatory requirements.

But why use it with MinIO? Out of the box, MinIO includes:

- Encryption: MinIO supports encryption both at rest and in transit, so data stays encrypted at every stage of a transaction, from the moment the call is made until the object is placed in the bucket.

- Bitrot Protection: Data on physical disks can be corrupted for several reasons, including voltage spikes, firmware bugs, and misdirected reads and writes. MinIO detects and repairs such corruption on the fly to ensure data integrity.

- Erasure Coding: Rather than relying on RAID for redundancy, which adds performance overhead, MinIO uses erasure coding for data redundancy and availability, reconstructing objects on the fly without any additional hardware or software.

- Secure Access ACLs and PBAC: MinIO supports IAM S3-style policies with a built-in IDP; see MinIO Best Practices - Security and Access Control for more information.

- Tiering: Data that is accessed infrequently can be tiered off to cold storage running MinIO, so the latest data stays on your best hardware without unused data taking up space.

- Object Locking and Retention: MinIO supports object locking (retention), which enforces write-once, read-many (WORM) behavior for both duration-based retention and indefinite legal hold. This enables key data retention compliance and meets SEC 17a-4(f), FINRA 4511(C), and CFTC 1.31(c)-(d) requirements.

Prerequisites

- A functioning MinIO environment. If you do not yet have one, follow the official MinIO installation guidance. MinIO also supports a wide range of replication capabilities; see the MinIO documentation for replication best practices.

- To deploy locally, you will need the following:
  - Linux, macOS, or Windows Subsystem for Linux
  - Docker
  - Docker Compose version > 2.22.0
  - At least 8 GB of free memory
  - JDK 17

Tips: For a production environment, AutoMQ recommends deploying on five hosts, for example 3 CONTROLLERS and 2 BROKERS as in Step 2, each a Linux amd64 system with 2 CPUs and 16 GB of memory. For testing and development, you can get by with less.

- Download the latest official binary installation package from AutoMQ GitHub Releases to install AutoMQ.

- Create two buckets in MinIO named automq-data and automq-ops.

- Lastly, create an access key: in the MinIO Console, open the User panel and select Create access key. The secret key can only be copied when the key is created, so record both parts of the access key at that point and store them securely.
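The prerequisites above call for JDK 17. As a quick pre-flight check, the POSIX shell function below extracts the Java major version from java -version output. It is a minimal sketch: the function name java_major_version is hypothetical, and it assumes the version "..." line that OpenJDK builds print.

```shell
#!/bin/sh
# java_major_version (hypothetical helper): reads `java -version` output on
# stdin and prints the Java major version, e.g. 17 for "17.0.9".
java_major_version() {
  # Extract the first quoted version string, e.g. 17.0.9 or 1.8.0_381.
  ver=$(sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1)
  case $ver in
    1.*) ver=${ver#1.} ;;  # legacy scheme: 1.8.0_381 becomes 8.0_381
  esac
  # Keep only the leading digits of what remains.
  printf '%s\n' "${ver%%[!0-9]*}"
}
```

Usage: java -version 2>&1 | java_major_version. The 2>&1 matters because java -version writes to stderr.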

Install and start the AutoMQ cluster

Step 1: Generate an S3 URL

The following installation steps were verified with AutoMQ versions earlier than 1.1.0; refer to the latest AutoMQ installation documentation for updated steps. AutoMQ provides the automq-kafka-admin.sh tool for quick startup: supply an S3-compatible URL containing the required endpoint and authentication information, and AutoMQ launches with a single command, without manual cluster ID generation or storage formatting.

In a terminal window, navigate to the directory where you installed the AutoMQ binary and run the following command, replacing XXX and YYY with your access key and secret key:

bin/automq-kafka-admin.sh generate-s3-url \
  --s3-access-key=XXX \
  --s3-secret-key=YYY \
  --s3-region=us-east-1 \
  --s3-endpoint=http://127.0.0.1:9000 \
  --s3-data-bucket=automq-data \
  --s3-ops-bucket=automq-ops

When using MinIO, use the following values to generate the S3 URL.

| Param | Value in This Example | Description |
|---|---|---|
| --s3-access-key | minioadmin | A unique identifier, similar to a username, that identifies the user making a request. |
| --s3-secret-key | minioadmin | A secret, password-like credential used together with the access key to sign and authenticate requests. |
| --s3-region | us-east-1 | us-east-1 is MinIO's default region. If a region is set in your MinIO configuration, use that instead. |
| --s3-endpoint | http://10.1.0.240:9000 | The MinIO API endpoint. If MinIO runs as a systemd service, running sudo systemctl status minio.service shows it. |
| --s3-data-bucket | automq-data | The data bucket created in the prerequisites. |
| --s3-ops-bucket | automq-ops | The ops bucket created in the prerequisites. |
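The generate-s3-url checks only catch a bad endpoint after contacting the object store. A cheaper first check is to validate the --s3-endpoint string itself. The function below is a hypothetical helper, not part of AutoMQ: it assumes the http://HOST:PORT form used in this article's examples and rejects https because, at the time of writing, AutoMQ did not appear to support it. It does not contact MinIO.

```shell
#!/bin/sh
# check_s3_endpoint (hypothetical helper): succeeds only for endpoints of the
# form http://HOST:PORT, the form used in this article's examples.
check_s3_endpoint() {
  case $1 in
    https://*)
      echo "https endpoint: AutoMQ did not appear to support https at the time of writing" >&2
      return 1 ;;
    http://*) hostport=${1#http://} ;;
    *)
      echo "endpoint must start with http://" >&2
      return 1 ;;
  esac
  hostport=${hostport%/}   # tolerate a single trailing slash
  host=${hostport%:*}      # text before the last colon
  port=${hostport##*:}     # text after the last colon
  case $port in
    ''|*[!0-9]*)
      echo "endpoint needs a numeric port, e.g. http://10.1.0.240:9000" >&2
      return 1 ;;
  esac
  if [ -z "$host" ] || [ "$host" = "$hostport" ]; then
    echo "endpoint needs a host before the port" >&2
    return 1
  fi
}
```

Usage: check_s3_endpoint http://10.1.0.240:9000 && bin/automq-kafka-admin.sh generate-s3-url ...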

Output result

Upon execution, the command automatically runs through the following stages:

- Uses the provided access key and secret key to exercise basic S3 operations and verify compatibility between AutoMQ and the object store.

- Generates an S3 URL from the identity and endpoint information.

- Uses the S3 URL to print an example startup command for AutoMQ. In that command, replace --controller-list and --broker-list with your actual CONTROLLER and BROKER hosts.

A truncated example of the execution results:

############ Ping s3 ########################

[ OK ] Write object

[ OK ] RangeRead object

[ OK ] Delete object

[ OK ] CreateMultipartUpload

[ OK ] UploadPart

[ OK ] CompleteMultipartUpload

[ OK ] UploadPartCopy

[ OK ] Delete objects

############ String of s3url ################

Your s3url is:

s3://127.0.0.1:9000?s3-access-key=XXX&s3-secret-key=YYY&s3-region=us-east-1&s3-endpoint-protocol=http&s3-data-bucket=automq-data&s3-path-style=false&s3-ops-bucket=automq-ops&cluster-id=GhFIfiqTSkKo87eA0nyqbg

...
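Before moving on, it can be useful to read individual settings back out of the generated S3 URL, for example to confirm the cluster-id or the s3-path-style flag. The function below is a hypothetical helper that treats everything after "?" as &-separated key=value pairs, matching the URL format shown in this article; it does no URL decoding.

```shell
#!/bin/sh
# s3url_get (hypothetical helper): prints the value of one query parameter
# from an AutoMQ S3 URL. Assumes &-separated key=value pairs after "?" with no
# URL-encoded characters; prints nothing if the key is absent.
# The body runs in a subshell, so its variables do not leak to the caller.
s3url_get() (
  url=$1 key=$2
  case $url in
    *\?*) query=${url#*\?} ;;   # drop everything up to the first "?"
    *) exit 0 ;;                # no query string at all
  esac
  printf '%s\n' "$query" | tr '&' '\n' | while IFS= read -r pair; do
    if [ "${pair%%=*}" = "$key" ]; then
      printf '%s\n' "${pair#*=}"
      break
    fi
  done
)
```

Usage: s3url_get "$S3_URL" cluster-id, where S3_URL is a shell variable (hypothetical) holding the URL printed by generate-s3-url.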

If instead you get an error message like the following:

[ FAILED ] Delete objects

FAILED: Caught exception Failed to Delete objects on S3. Here are your parameters about S3: s3 parameters{endpoint='https://127.0.0.1:9000', region='us-east-1', bucket='automq-data', isForcePathStyle=false, credentialsProviders=[org.apache.kafka.tools.automq.GenerateS3UrlCmd$$Lambda$112/0x000000800109cdc8@5cbd159f], tagging=null}.

Here are some advices:

You are using https endpoint. Please make sure your object storage service supports https.

forcePathStyle is set as false. Please set it as true if you are using minio.

Make sure that you are using your access key and not your username and password. Additionally, at the time of writing, AutoMQ did not appear to support https, so use an http endpoint.

Step 2: Generate a list of startup commands

Replace --controller-list and --broker-list in the command generated in the previous step with your host information: the IP addresses of the 3 CONTROLLERS and 2 BROKERS from the environment preparation, using the default ports 9093 for CONTROLLERS and 9092 for BROKERS. If you're running locally, use your private IP address rather than your public one. If the nodes run on different hosts, the endpoint in the S3 URL must also be an address that every host can reach, rather than 127.0.0.1.

Then change s3-path-style=false to s3-path-style=true in the S3 URL, since MinIO serves path-style requests by default.
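Hand-editing a long URL is error-prone. The hypothetical helper below rewrites the value of one existing key=value pair in the S3 URL, such as flipping s3-path-style from false to true, and prints the result. It assumes the &-separated query format shown in this article and performs no URL encoding.

```shell
#!/bin/sh
# s3url_set (hypothetical helper): prints the S3 URL with the value of one
# existing query parameter replaced. Keys that are not present are left alone.
# The body runs in a subshell, so the IFS and set -f changes do not leak.
s3url_set() (
  url=$1 key=$2 value=$3
  case $url in
    *\?*) ;;
    *) printf '%s\n' "$url"; exit 0 ;;   # no query string: nothing to replace
  esac
  base=${url%%\?*}    # scheme, host and port
  query=${url#*\?}    # key=value pairs
  set -f              # do not glob-expand values while splitting
  IFS='&'
  out=
  for pair in $query; do
    if [ "${pair%%=*}" = "$key" ]; then
      pair="$key=$value"
    fi
    out="${out:+$out&}$pair"
  done
  printf '%s?%s\n' "$base" "$out"
)
```

Usage: S3_URL=$(s3url_set "$S3_URL" s3-path-style true), where S3_URL is a hypothetical variable holding the generated URL.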

Run the edited command in a terminal window:

bin/automq-kafka-admin.sh generate-start-command \
  --s3-url="s3://127.0.0.1:9000?s3-access-key=XXX&s3-secret-key=YYY&s3-region=us-east-1&s3-endpoint-protocol=http&s3-data-bucket=automq-data&s3-path-style=true&s3-ops-bucket=automq-ops&cluster-id=GhFIfiqTSkKo87eA0nyqbg" \
  --controller-list="192.168.0.1:9093;192.168.0.2:9093;192.168.0.3:9093" \
  --broker-list="192.168.0.4:9092;192.168.0.5:9092"

Parameter Explanation

| Parameter Name | Required | Description |
|---|---|---|
| --s3-url | Yes | Generated by bin/automq-kafka-admin.sh generate-s3-url; includes authentication, the cluster ID, and other information. |
| --controller-list | Yes | At least one IP:port address for the CONTROLLER hosts, in the format IP1:PORT1;IP2:PORT2;IP3:PORT3. |
| --broker-list | Yes | At least one IP:port address for the BROKER hosts, in the format IP1:PORT1;IP2:PORT2;IP3:PORT3. |
| --controller-only-mode | No | Whether a CONTROLLER node serves only the CONTROLLER role. Defaults to false, meaning each CONTROLLER node also acts as a BROKER. |
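The controller.quorum.voters value in the generated start commands follows from --controller-list: each controller is given a node ID, starting from 0. The hypothetical helper below reproduces that mapping so you can check a generated command or write one by hand. It assumes IDs are assigned in list order, which matches this article's example output but is not a documented guarantee.

```shell
#!/bin/sh
# quorum_voters (hypothetical helper): turns a --controller-list value such as
# "192.168.0.1:9093;192.168.0.2:9093" into a controller.quorum.voters value,
# numbering controllers from 0 in list order.
# The body runs in a subshell, so the IFS and set -f changes do not leak.
quorum_voters() (
  set -f
  IFS=';'
  id=0
  out=
  for addr in $1; do
    [ -n "$addr" ] || continue      # skip empty entries, e.g. a trailing ";"
    out="${out:+$out,}$id@$addr"
    id=$((id + 1))
  done
  printf '%s\n' "$out"
)
```

For the controller list used in this article, quorum_voters '192.168.0.1:9093;192.168.0.2:9093;192.168.0.3:9093' prints 0@192.168.0.1:9093,1@192.168.0.2:9093,2@192.168.0.3:9093.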

Output result

The command prints the commands used to start AutoMQ. The truncated terminal output should look something like this:

To start an AutoMQ Kafka server, please navigate to the directory where your AutoMQ tgz file is located and run the following command.

Before running the command, make sure that JDK17 is installed on your host. You can verify the Java version by executing 'java -version'.

bin/kafka-server-start.sh --s3-url="s3://127.0.0.1:9000?s3-access-key=XXX&s3-secret-key=YYY&s3-region=us-east-1&s3-endpoint-protocol=http&s3-data-bucket=automq-data&s3-path-style=true&s3-ops-bucket=automq-ops&cluster-id=GhFIfiqTSkKo87eA0nyqbg" --override process.roles=broker,controller --override node.id=0 --override controller.quorum.voters=0@192.168.0.1:9093,1@192.168.0.2:9093,2@192.168.0.3:9093 --override listeners=PLAINTEXT://192.168.0.1:9092,CONTROLLER://192.168.0.1:9093 --override advertised.listeners=PLAINTEXT://192.168.0.1:9092

...

Tips: Generated node.id values start from 0.

Step 3: Start AutoMQ

To launch the cluster, execute the list of commands from the previous step on the designated CONTROLLER or BROKER host. For instance, to start the first CONTROLLER process on 192.168.0.1, run the first command in the generated startup command list.

bin/kafka-server-start.sh --s3-url="s3://127.0.0.1:9000?s3-access-key=XXX&s3-secret-key=YYY&s3-region=us-east-1&s3-endpoint-protocol=http&s3-data-bucket=automq-data&s3-path-style=true&s3-ops-bucket=automq-ops&cluster-id=GhFIfiqTSkKo87eA0nyqbg" --override process.roles=broker,controller --override node.id=0 --override controller.quorum.voters=0@192.168.0.1:9093,1@192.168.0.2:9093,2@192.168.0.3:9093 --override listeners=PLAINTEXT://192.168.0.1:9092,CONTROLLER://192.168.0.1:9093 --override advertised.listeners=PLAINTEXT://192.168.0.1:9092
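In a command like the one above, node.id and the IP address in listeners and advertised.listeners are node-specific, while the S3 URL and controller.quorum.voters are shared by every node. The hypothetical helper below prints the --override flags for one node that runs both broker and controller roles, mirroring the command above; it assumes the default ports 9092 and 9093.

```shell
#!/bin/sh
# node_overrides (hypothetical helper): prints the per-node --override flags,
# one flag per line, for a node running both broker and controller roles.
# Arguments: NODE_ID IP VOTERS. Assumes ports 9092 (PLAINTEXT) and 9093 (CONTROLLER).
node_overrides() {
  node_id=$1 ip=$2 voters=$3
  for kv in \
    "process.roles=broker,controller" \
    "node.id=$node_id" \
    "controller.quorum.voters=$voters" \
    "listeners=PLAINTEXT://$ip:9092,CONTROLLER://$ip:9093" \
    "advertised.listeners=PLAINTEXT://$ip:9092"
  do
    printf '%s %s\n' --override "$kv"
  done
}
```

Usage: bin/kafka-server-start.sh --s3-url="$S3_URL" $(node_overrides 1 192.168.0.2 "$VOTERS"), with S3_URL and VOTERS as hypothetical variables. The command substitution is deliberately unquoted so each flag becomes a separate argument, which is safe only because these values contain no spaces or glob characters.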

Parameter Explanation

Parameters not specified in the startup command take Apache Kafka®'s default values, and new parameters introduced by AutoMQ take AutoMQ's defaults. To override a default, append extra --override key=value parameters to the end of the command.

| Parameter Name | Required | Description |
|---|---|---|
| s3-url | Yes | Generated by the bin/automq-kafka-admin.sh tool; includes authentication, the cluster ID, and other relevant information. |
| process.roles | Yes | The roles this node runs. For a host serving as both CONTROLLER and BROKER, set it to broker,controller. |
| node.id | Yes | An integer that uniquely identifies a BROKER or CONTROLLER within the Kafka cluster. |
| controller.quorum.voters | Yes | The hosts participating in the KRaft election, each given as node ID, IP, and port, for example: 0@192.168.0.1:9093,1@192.168.0.2:9093,2@192.168.0.3:9093. |
| listeners | Yes | The IP addresses and ports the node listens on. |
| advertised.listeners | Yes | The addresses the BROKER advertises to clients. |
| log.dirs | No | Directory for storing KRaft and broker metadata. |
| s3.wal.path | No | Path for AutoMQ WAL data. In production, store the WAL on a dedicated raw device volume: AutoMQ can write directly to raw devices, which reduces latency. Make sure this path is configured correctly. |
| autobalancer.controller.enable | No | Defaults to false, which disables traffic rebalancing. When enabled, AutoMQ's auto balancer component automatically migrates partitions to keep overall traffic balanced. |

Tips: To enable continuous self-balancing, or to run the AutoMQ example "Self-Balancing When Cluster Nodes Change", explicitly pass --override autobalancer.controller.enable=true to the Controller at startup.
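Extra settings such as autobalancer.controller.enable=true, log.dirs, or s3.wal.path are all passed the same way, as --override key=value. The hypothetical helper below turns a list of key=value pairs into those flags and rejects any entry without a key and an = sign, a mistake that is easy to miss in a long command line.

```shell
#!/bin/sh
# to_overrides (hypothetical helper): prints one "--override key=value" line
# per argument. Validates every argument first and prints nothing on error.
to_overrides() {
  for kv in "$@"; do
    case $kv in
      ?*=*) ;;   # non-empty key followed by "="
      *) echo "not a key=value pair: $kv" >&2; return 1 ;;
    esac
  done
  for kv in "$@"; do
    printf '%s %s\n' --override "$kv"
  done
}
```

For example, to_overrides autobalancer.controller.enable=true prints --override autobalancer.controller.enable=true.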

Running in the background

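To run a node in the background, append the output redirection and & shown below to the startup command (here, command stands for the full bin/kafka-server-start.sh invocation):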

command > /dev/null 2>&1 &
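Redirecting to /dev/null discards the node's console output, which makes startup failures hard to diagnose. A minimal alternative, sketched below, keeps the output in a log file and records the process ID so you can check on or stop the node later. The helper and file names are hypothetical.

```shell
#!/bin/sh
# start_in_background (hypothetical helper): runs a command with nohup,
# appends its stdout and stderr to LOG, and writes its PID to PIDFILE.
# Arguments: LOG PIDFILE COMMAND [ARGS...]
start_in_background() {
  log=$1 pidfile=$2
  shift 2
  nohup "$@" >>"$log" 2>&1 &
  echo "$!" >"$pidfile"
}

# is_running (hypothetical helper): succeeds if the PID stored in PIDFILE
# belongs to a live process that this user may signal.
is_running() {
  [ -s "$1" ] && kill -0 "$(cat "$1")" 2>/dev/null
}
```

Usage: start_in_background automq.log automq.pid bin/kafka-server-start.sh --s3-url="..." --override ..., then follow the log with tail -f automq.log and stop the node with kill "$(cat automq.pid)".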

Data volume path

Use the lsblk command on Linux to view local data volumes; unpartitioned block devices are the data volumes. In the following example, vdb is an unpartitioned raw block device.

vda 253:0 0 20G 0 disk

├─vda1 253:1 0 2M 0 part

├─vda2 253:2 0 200M 0 part /boot/efi

└─vda3 253:3 0 19.8G 0 part /

vdb 253:16 0 20G 0 disk
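To pick the raw device programmatically rather than by eye, you can ask lsblk for a flat listing (lsblk -rn -o NAME,TYPE,PKNAME prints each device's name, type, and parent) and keep the disks that no other device points to. The helper below is a hypothetical sketch of that filter. It only looks at child devices, so also confirm that a candidate disk carries no filesystem or mount before letting AutoMQ write to it.

```shell
#!/bin/sh
# unpartitioned_disks (hypothetical helper): reads "NAME TYPE PKNAME" lines,
# as printed by `lsblk -rn -o NAME,TYPE,PKNAME`, and prints every disk that is
# not the parent of any other device (partition, LVM volume, and so on).
unpartitioned_disks() {
  awk '
    $2 == "disk" { disks[++n] = $1 }      # remember disks in listing order
    NF >= 3      { has_child[$3] = 1 }    # a device with a parent marks that parent as in use
    END {
      for (i = 1; i <= n; i++)
        if (!(disks[i] in has_child)) print disks[i]
    }'
}
```

Usage: lsblk -rn -o NAME,TYPE,PKNAME | unpartitioned_disks. For the listing above this should print vdb; prefix it with /dev/ to form the s3.wal.path value.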

By default, AutoMQ stores metadata and WAL data in the /tmp directory. If /tmp is mounted on tmpfs, which is memory-backed and cleared on reboot, this default is not suitable for production environments.

For production or formal testing environments, point the metadata directory log.dirs and the WAL path s3.wal.path (a raw device for writing data) at other locations, as in the following example, which also enables the Prometheus metrics exporter on port 9090:

bin/kafka-server-start.sh ... \
  --override s3.telemetry.metrics.exporter.type=prometheus \
  --override s3.metrics.exporter.prom.host=0.0.0.0 \
  --override s3.metrics.exporter.prom.port=9090 \
  --override log.dirs=/root/kraft-logs \
  --override s3.wal.path=/dev/vdb \
  > /dev/null 2>&1 &
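Before starting a node, it is worth confirming that the chosen s3.wal.path is usable: either a block device, or a file path on a filesystem with enough free space (the tip that follows recommends more than 10 GB). The hypothetical check below does that. It reads available space with POSIX df -Pk and takes the minimum in GiB as an argument; it does not verify that the storage is a local SSD.

```shell
#!/bin/sh
# check_wal_path (hypothetical helper): succeeds if PATH is a block device, or
# if the filesystem that would hold PATH has at least MIN_GIB GiB available.
# Arguments: PATH [MIN_GIB, default 10]
check_wal_path() {
  path=$1 min_gib=${2:-10}
  if [ -b "$path" ]; then
    echo "$path is a block device"
    return 0
  fi
  dir=$(dirname "$path")
  # POSIX df -P: field 4 of the second line is available space in 1024-byte blocks.
  avail_kib=$(df -Pk "$dir" 2>/dev/null | awk 'NR == 2 { print $4 }')
  if [ -z "$avail_kib" ]; then
    echo "cannot read free space for $dir (does it exist?)" >&2
    return 1
  fi
  need_kib=$((min_gib * 1024 * 1024))
  if [ "$avail_kib" -lt "$need_kib" ]; then
    echo "only $((avail_kib / 1024 / 1024)) GiB free under $dir" >&2
    return 1
  fi
  echo "$dir has $((avail_kib / 1024 / 1024)) GiB free"
}
```

Usage: check_wal_path /dev/vdb, or check_wal_path /home/admin/automq-wal 10 for a file-based WAL.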

Tips: Set s3.wal.path to the name of your actual local raw device. If you point it at a file path instead, make sure the path is on local SSD storage with more than 10 GB of available space. For instance, to store the AutoMQ write-ahead log (WAL) on a local SSD disk: --override s3.wal.path=/home/admin/automq-wal

Final Thoughts

At this point, you have successfully deployed an AutoMQ cluster on MinIO: a low-cost, high-performance, elastically scaling Kafka-compatible cluster.

One of MinIO's standout features for working with AutoMQ is its event notification system. MinIO can publish bucket event notifications to Kafka API endpoints, enabling real-time event processing and analysis, so organizations can build event-driven architectures that react instantly to changes in object storage.

Moreover, MinIO’s extensive support for server-side replication ensures that your AutoMQ data can be replicated across multiple regions and data centers, providing enhanced data durability and availability. This replication capability is crucial for maintaining business continuity and disaster recovery plans, ensuring that your data streams remain uninterrupted and resilient to failures.

Combining these features, MinIO not only addresses the storage challenges associated with Kafka but also enhances the overall capabilities of your data streaming architecture. Whether you are dealing with high-throughput data streams or require stringent data consistency and availability guarantees, MinIO and AutoMQ together provide a comprehensive solution that meets the demands of modern data-driven applications.

If you have any questions while you're implementing, please feel free to reach out to us at hello@min.io or through our Slack channel. We'd be happy to help.