Get Started with MinIO on Red Hat OpenShift for a PoC

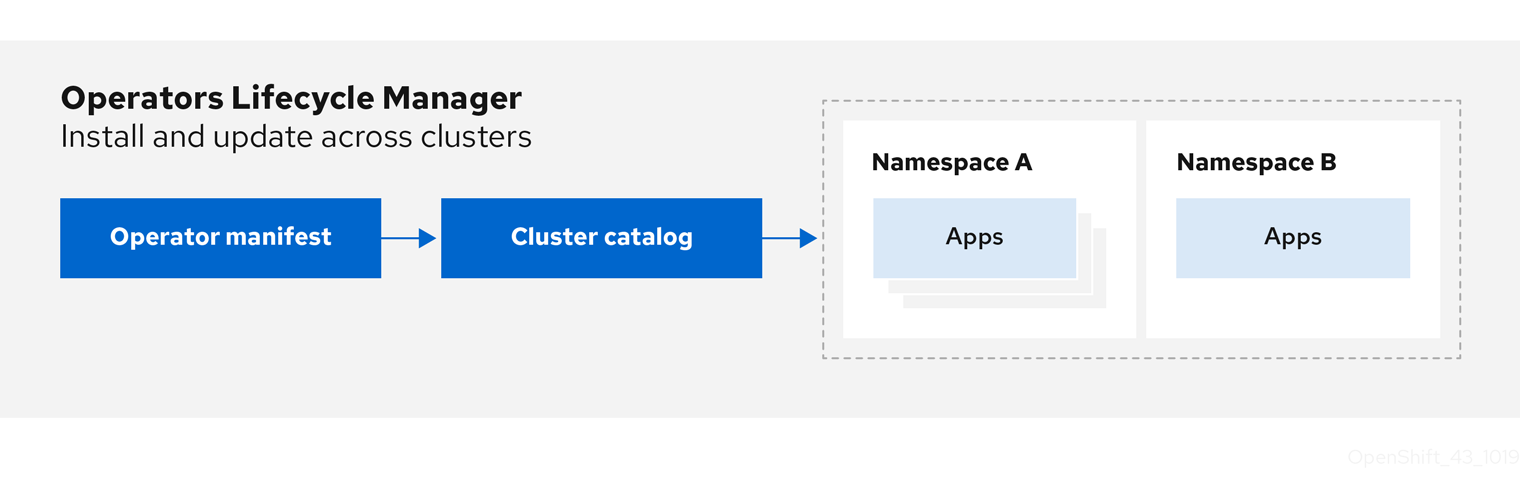

When we announced the availability of MinIO on Red Hat OpenShift, we didn’t anticipate that demand would be so great that we would someday write a series of blog posts about this powerful combination. It is being adopted rapidly because on-prem cloud has become ubiquitous, and because large organizations want to bring their data closer to their apps and users while offering those users the cloud-native capabilities they have come to know and trust in public clouds. The combination of MinIO and OpenShift lets teams use native APIs, CLIs and plugins to launch infrastructure in a version-controlled manner, so changes are tracked. OpenShift also provides the Operator Lifecycle Manager (OLM), which helps developers install, update and manage the lifecycle of the Kubernetes operators deployed to an OpenShift cluster. Together with the rest of the Operator Framework toolkit, it lets you manage operators in an effective, automated and scalable way.

We talked about how to get started with MinIO and OpenShift on your laptop in our last post. In this post, we go deeper and discuss the steps to take when you want to set up an actual cluster, not just a test machine, for a proof of concept (PoC) or any other purpose that calls for something more robust to experiment with.

To set up an OpenShift cluster for experimentation, development, testing or a PoC, you need to understand the nuances of OpenShift security policy. Production clusters are a different matter, with additional requirements, and we’ll address them in a future blog post.

OpenShift Cluster Prep Work

Let’s start by examining the different components required and how you should consider them when setting up and configuring the OpenShift cluster for PoC purposes. Again, these settings should not be used in production.

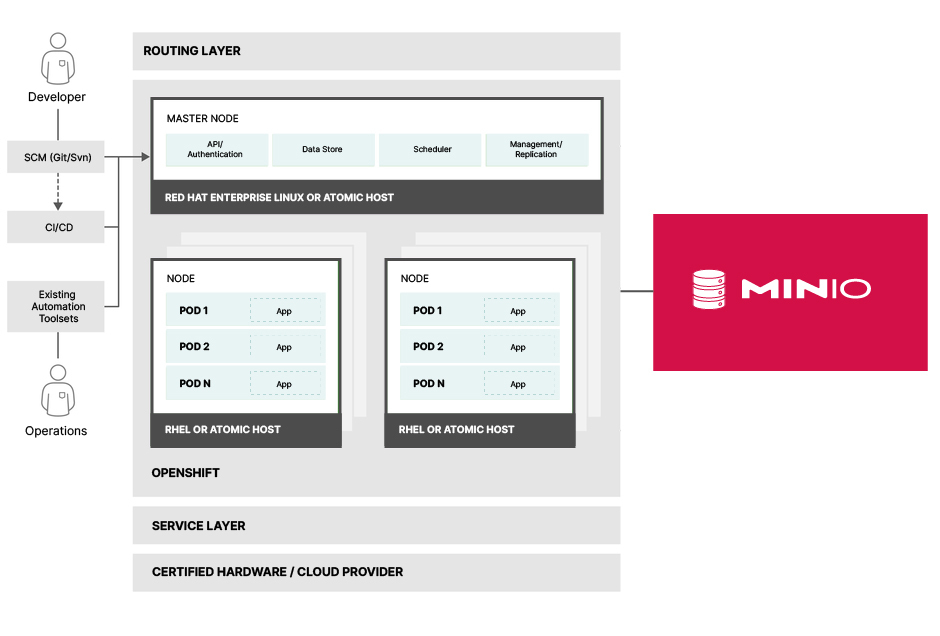

OpenShift is designed to run Docker-formatted container images using Kubernetes concepts, so that developers can deploy applications to infrastructure as easily as possible. OpenShift Container Platform has multiple systems that work together on top of the Kubernetes cluster, with data stored in etcd. These services expose rich APIs that developers can use to customize their clusters and deployments. OpenShift also takes security seriously here. User access is strictly controlled via OAuth tokens and X.509 certificates, and most communication with the API happens through the `oc` CLI tool, which uses OAuth bearer tokens for most purposes. Once a user is authenticated, authorization is handled by the OpenShift Container Platform policy engine, which groups actions, such as creating a StatefulSet, fetching a list of secrets and other sensitive operations, into roles. The architecture diagram above shows how this fits together, and we’ll go into more detail in later sections of this blog.
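To see the OAuth bearer token flow in practice, here is a minimal sketch, assuming you are already logged in with `oc login`. It reads the session token with `oc whoami -t` and the API URL with `oc whoami --show-server`, then calls the OpenShift user API directly with `curl`. The helper name `whoami_via_api` is hypothetical, and `-k` (skip TLS verification) is only acceptable on a PoC cluster with self-signed certificates.

```shell
# Hypothetical helper: query the current user straight from the OpenShift API,
# authenticating with the same OAuth bearer token that the `oc` CLI uses.
whoami_via_api() {
  local token server
  token="$(oc whoami -t)"              # OAuth bearer token of the current session
  server="$(oc whoami --show-server)"  # API server URL of the current context
  # -s: quiet, -k: skip TLS verification (PoC clusters with self-signed certs only)
  curl -sk -H "Authorization: Bearer ${token}" \
    "${server}/apis/user.openshift.io/v1/users/~"
}
```

Running `whoami_via_api` should return a JSON `User` object for the same user that `oc whoami` prints.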

Server Infrastructure

While the initial infrastructure for PoC purposes does not need to be beefy, OpenShift does have some specific requirements on the number of nodes and the type of function each node provides to the cluster.

At the very least, you need a master node with the following specifications:

- 4 CPUs

- 16 GB RAM

- 50 GB hard disk space

Since this is a PoC, etcd can be colocated on the same physical node as the master; just make sure that node has at least 4 CPUs. For simplicity's sake, use a simple partition scheme without going into too much detail. Remember, the goal of this PoC is to get up and running with as little overhead as possible.

Next, add 2-3 physical nodes that can take on the Kubernetes workloads. MinIO is very lightweight and uses barely 20% of the CPU even during its most intensive operations, such as erasure coding, encryption, decryption and compression, so you don’t need to invest in a lot of CPU power. Do make sure you have enough drive space and memory for the amount of data you plan to use in testing: the general rule of thumb is to add memory to the nodes as the amount of data grows. For more information, please see Selecting the Best Hardware for Your MinIO Deployment.

The nodes running the workloads should have roughly the following specifications:

- 1-2 CPUs

- 8-16 GB RAM

- 50-500 GB drive space

As with the master node, keep the partitions simple on these nodes. Instead of giving exact specs, we have provided ranges so you can decide what works best for your PoC.
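Once the nodes have joined the cluster, you can check them against the sizing above. A minimal sketch, assuming a logged-in `oc` session with permission to read nodes; `list_node_capacity` and `check_node_cpu` are hypothetical helper names, and the check assumes the node reports `.status.capacity.cpu` as a whole number of cores, which is the usual form.

```shell
# Hypothetical helper: print CPU and memory capacity for every node.
list_node_capacity() {
  oc get nodes -o custom-columns='NAME:.metadata.name,CPU:.status.capacity.cpu,MEMORY:.status.capacity.memory'
}

# Hypothetical helper: check_node_cpu NODE MIN_CPUS
# Prints OK/WARN and returns 1 when NODE has fewer than MIN_CPUS cores,
# e.g. check_node_cpu master-0 4 for the master sizing above.
check_node_cpu() {
  local node="$1" min="$2" cpus
  # Assumes an integer core count such as "4" (not a millicore value)
  cpus="$(oc get node "$node" -o jsonpath='{.status.capacity.cpu}')"
  if [ "$cpus" -ge "$min" ]; then
    echo "OK: $node has $cpus CPUs (>= $min)"
  else
    echo "WARN: $node has $cpus CPUs (< $min)"
    return 1
  fi
}
```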

Security Policies

Now comes the tricky part. Once the infrastructure is set up, if you try to launch MinIO right away, you will most likely run into a number of errors. Why would OpenShift do that to you? Because OpenShift enforces security controls by default. Even though this slows you down, it is a good thing. We like it: OpenShift is built with a focus on security, just like MinIO, and together they deliver performance and scalability without sacrificing security.

One of the ways OpenShift manages cluster security is RBAC: Role-Based Access Control. It's just a fancy term for a list of rules grouped into what is called a role, which gets attached (the term is binding) to your user. These roles define what you can and cannot do on the cluster as the authenticated user. For example, a role could have two rules: one that lets the user list all pods, and another that lets the user see only one particular secret in the cluster. The rules can get fairly complex, but commands such as `oc auth can-i` let you check exactly what you have access to. Roles can grant access either across the whole cluster or within a specific namespace (called a project in OpenShift parlance).
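The two-rule role described above could look like the following minimal sketch, assuming a project, secret and user that you supply. `create_poc_role`, the role name `poc-reader` and the binding name `poc-reader-binding` are hypothetical. It applies a namespaced Role from a heredoc and then binds it to the user with `oc create rolebinding`.

```shell
# Hypothetical helper: create_poc_role NAMESPACE SECRET_NAME USER
# Creates a Role with two rules (list all pods; get one named secret)
# and binds it to USER inside NAMESPACE (an OpenShift project).
create_poc_role() {
  local ns="$1" secret="$2" user="$3"
  oc apply -n "$ns" -f - <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: poc-reader
  namespace: ${ns}
rules:
  # Rule 1: list every pod in the project
  - apiGroups: [""]
    resources: ["pods"]
    verbs: ["list"]
  # Rule 2: read exactly one secret, by name
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["${secret}"]
    verbs: ["get"]
EOF
  # Attach (bind) the role to the user
  oc create rolebinding poc-reader-binding --role=poc-reader --user="$user" -n "$ns"
}
```

From an account allowed to impersonate users (for example a cluster admin), `oc auth can-i list pods -n <project> --as=<user>` should then answer `yes`, while `oc auth can-i get secrets -n <project> --as=<user>` should answer `no`, because only the one named secret is allowed. `oc auth can-i --list -n <project> --as=<user>` prints the full set of permissions.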

Straight out of the box, OpenShift is locked down. While this is great for production and edge deployments, in a PoC it can be a hindrance, because it keeps developers from exploring the intricacies of OpenShift and testing their applications.

Now that we have a brief overview of policies, let's go ahead and deploy a MinIO tenant to see if we can get all of this to work.

Fetch the MinIO repo

Apply the resources to install MinIO
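A minimal sketch of these two steps, assuming the MinIO Operator repository at `https://github.com/minio/operator` with a kustomize base at its root and a `tenant-lite` example under `examples/kustomization/`. Both paths are assumptions that can change between releases, so check the repository layout before running it; `install_minio_poc` is a hypothetical helper name, and a DirectPV-backed tenant would use your own tenant definition instead of the example.

```shell
# Hypothetical helper: install_minio_poc [CHECKOUT_DIR]
# Clones the MinIO Operator repository and applies its kustomize manifests.
install_minio_poc() {
  local dir="${1:-./operator}"
  # Fetch the MinIO repo (a shallow clone is enough for applying manifests)
  git clone --depth 1 https://github.com/minio/operator.git "$dir"
  # Install the operator (assumes a kustomization.yaml at the repository root)
  oc apply -k "$dir"
  # Create an example tenant (assumed path; replace with your own tenant spec)
  oc apply -k "$dir/examples/kustomization/tenant-lite"
}
```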

Were you able to deploy MinIO? Or did you see any errors?

The Policy Engine

So what happened? When you apply the resources, one of the first issues you are likely to encounter while deploying MinIO is an error similar to the following:

```
Forbidden: not usable by user or serviceaccount, spec.volumes[13]: Invalid value: "csi": csi volumes are not allowed to be used
```

This error generally happens when you are trying to deploy a tenant that uses DirectPV.
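When pods are rejected like this, the reason typically shows up as a warning event on the owning workload rather than as a failed pod. A minimal sketch for digging into it, assuming a logged-in `oc` session; the helper names are hypothetical. For pods that were admitted, OpenShift records the SCC it used in the `openshift.io/scc` annotation.

```shell
# Hypothetical helper: show_scc_denials NAMESPACE
# Lists warning events, where SCC admission failures such as
# "... csi volumes are not allowed to be used" are reported.
show_scc_denials() {
  oc get events -n "$1" --field-selector type=Warning
}

# Hypothetical helper: show_pod_scc NAMESPACE
# Prints each pod next to the SCC that admitted it.
show_pod_scc() {
  oc get pods -n "$1" \
    -o custom-columns='POD:.metadata.name,SCC:.metadata.annotations.openshift\.io/scc'
}
```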

To fix this, first get a list of all the service accounts in the project using the following command:

```shell
oc get serviceaccounts -n <project>
```

Here `<project>` can be `minio-operator`, `directpv` or your tenant namespace.

The command lists several service accounts. Grant all of them privileged access using the commands below; if even one is missing, you could have a bad day going down the debugging rabbit hole.

```shell
oc adm policy add-scc-to-user privileged -n <tenant-namespace> -z builder
oc adm policy add-scc-to-user privileged -n <tenant-namespace> -z deployer
oc adm policy add-scc-to-user privileged -n <tenant-namespace> -z default
oc adm policy add-scc-to-user privileged -n <tenant-namespace> -z pipeline
oc adm policy add-scc-to-user privileged -n <tenant-namespace> -z tenant01-sa
```

Once these are set, let’s go ahead and apply our YAMLs again.

Apply the resources to install MinIO

Verify MinIO is up and running. You can get the port of the MinIO console as well.
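A minimal sketch of that check, assuming the tenant runs in `<tenant-namespace>` and the MinIO Operator's `Tenant` custom resource is installed; `verify_minio_tenant` is a hypothetical helper name, and service names depend on how your tenant is defined.

```shell
# Hypothetical helper: verify_minio_tenant NAMESPACE
verify_minio_tenant() {
  local ns="$1"
  # Tenant resources managed by the MinIO Operator
  oc get tenants -n "$ns"
  # MinIO pods should reach the Running state
  oc get pods -n "$ns"
  # Services and their ports; look for the tenant's console service here
  oc get svc -n "$ns" -o custom-columns='NAME:.metadata.name,TYPE:.spec.type,PORTS:.spec.ports[*].port'
}
```

To open the console from your workstation without exposing it, `oc port-forward svc/<console-service> <local-port>:<service-port> -n <tenant-namespace>` is enough for a quick look.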

As you can see, once you have the necessary policies properly set up, it's no sweat to deploy MinIO.

On one of our OpenShift clusters, we got an error about unexpected service accounts: without the proper permissions, OpenShift did not allow the MinIO pods to be scheduled. Normally `builder`, `deployer` and `default` are the only service accounts that need the permission, but for a PoC, granting it to all of the listed service accounts lets the MinIO deployment proceed.
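Because missing even one service account can send you down the debugging rabbit hole, it may be easier to grant the SCC to every service account in a project in one go. A minimal sketch, assuming an `oc` session with cluster-admin rights; `set_privileged_scc_for_all_sas` is a hypothetical helper name. It also accepts `remove`, so the same loop can revoke the grants with `oc adm policy remove-scc-from-user` once the PoC is over.

```shell
# Hypothetical helper: set_privileged_scc_for_all_sas NAMESPACE add|remove
# Grants (add) or revokes (remove) the privileged SCC for every service account in NAMESPACE.
set_privileged_scc_for_all_sas() {
  local ns="$1" action="$2" subcmd sa
  case "$action" in
    add)    subcmd=add-scc-to-user ;;
    remove) subcmd=remove-scc-from-user ;;
    *)      echo "usage: set_privileged_scc_for_all_sas NAMESPACE add|remove" >&2
            return 2 ;;
  esac
  # `-o name` prints one entry per line, e.g. serviceaccount/builder
  for sa in $(oc get serviceaccounts -n "$ns" -o name); do
    # Strip the "serviceaccount/" prefix to get the bare account name for -z
    oc adm policy "$subcmd" privileged -n "$ns" -z "${sa#serviceaccount/}"
  done
}
```

For example, `for p in minio-operator directpv <tenant-namespace>; do set_privileged_scc_for_all_sas "$p" add; done` covers all three projects mentioned earlier.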

They see me rollin’

Now you have all the tools you need to get started on your OpenShift PoC, in an environment that is as close as possible to production, but without production's security constraints.

We urge caution. The policies discussed above are strictly for non-production PoC use cases. These settings are generally fine when you want to get OpenShift up quickly on hardware more powerful than your laptop for further testing, but this cluster should not be exposed to the public Internet. Stay tuned if you want to learn how to run locked-down MinIO and OpenShift in a production environment with only the necessary policies: we will cover this topic in an upcoming blog post.

If you have any questions about OpenShift policies or how to configure them please reach out to us on Slack!