Selecting the Best Hardware for Your MinIO Deployment

From the get-go, MinIO has been designed to run efficiently on many different types of hardware. We recommend that our users and customers use commodity hardware with disks in pure JBOD mode to keep the underlying infrastructure as simple and performant as possible. MinIO is the perfect combination of high performance and scalability, which puts every data-intensive workload within reach. MinIO is capable of tremendous performance: a recent benchmark achieved 325 GiB/s (349 GB/s) on GETs and 165 GiB/s (177 GB/s) on PUTs with just 32 nodes of off-the-shelf NVMe SSDs.

Choosing the Best Datacenter Sites

When considering disaster recovery or distributed data, the physical location of the data centers and their proximity to customers do matter. You want to balance serving data as close as possible to your customers at the edge against having access to a high-bandwidth Internet connection that can replicate the data to multiple locations.

MinIO provides the following types of replication:

- Bucket Replication: Configure per-bucket rules to synchronize objects between two MinIO buckets. Bucket Replication synchronizes data at the bucket level, such as bucket prefix paths and objects. You can configure bucket replication at any time, and the remote MinIO deployments may have pre-existing data on the replication target buckets.

- Batch Replication: You can use the replicate job type to create a batch job that replicates objects from the local MinIO deployment to another MinIO location at a time interval. The definition file can limit the replication by bucket, prefix, and/or filters to only replicate certain objects.

- Site Replication: Configure multiple MinIO deployments across multiple sites as a cluster of replicas called peer sites. Each peer site synchronizes bucket operations and STS, among other components.

Since we introduced multi-site active-active replication, our focus has been on maximizing replication performance without degrading the existing operations performed by the cluster. This allows you to replicate data across multiple datacenters and clouds for ongoing operations and analysis, and even for disaster recovery, where one site going offline does not decrease overall application availability. Replication configuration is handled entirely on the server side with a set-it-and-forget-it ethos: the application using MinIO does not need to be modified in any way.

Our dedication to flexibility means that MinIO supports replication at both the site and bucket levels, giving you the ability to fine-tune your replication configuration to the needs of your application and as much control over the process as possible. Data remains protected via IAM across multiple MinIO deployments using S3-style policies and PBAC. Replication synchronizes the creation, deletion and modification of objects and buckets. In addition, it synchronizes metadata, encryption settings and the security token service (STS). Note that you can use site replication or bucket replication, but not both: if you are already running bucket replication, it must be disabled for site replication to work.

Selecting Server Hardware

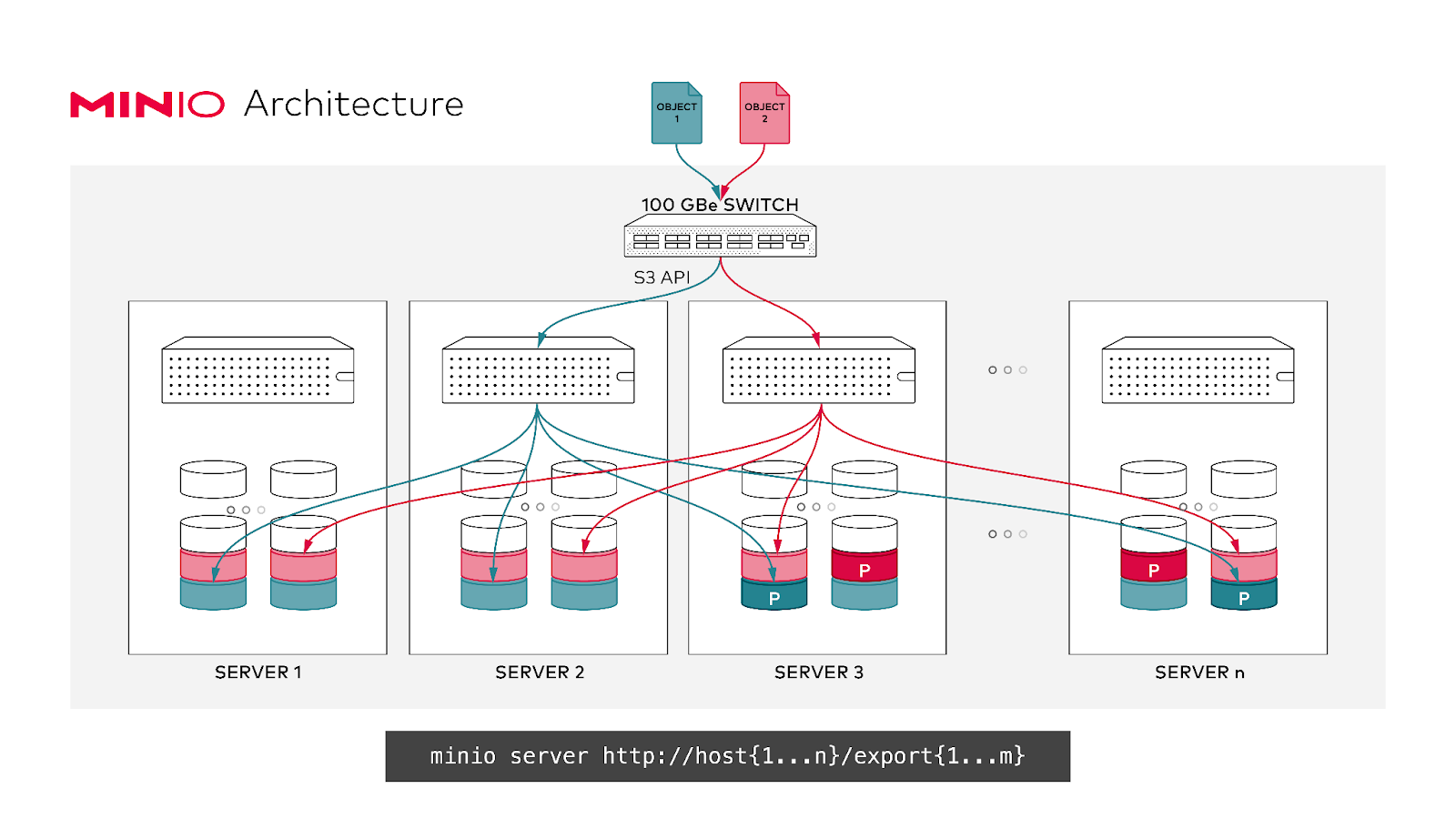

Whether benchmarking, stress testing, or recommending hardware for production, we’ve always been proponents of commodity hardware. With MinIO, there is no need for specialized InfiniBand infrastructure or proprietary networking to max out throughput. Performance and scaling (and performance at scale) are handled by MinIO running on top of the commodity hardware.

We do not recommend adding RAID controllers or any other distribution or replication component outside of MinIO. Ideally, the server should contain just a bunch of disks (JBOD) with enough drive capacity to house your anticipated data objects and enough speed to saturate the network. MinIO is designed to handle durability, replication and resiliency of data in software across multiple sites, so the underlying hardware configuration can be kept minimal. Later, we’ll go into detail regarding specific components inside the server.

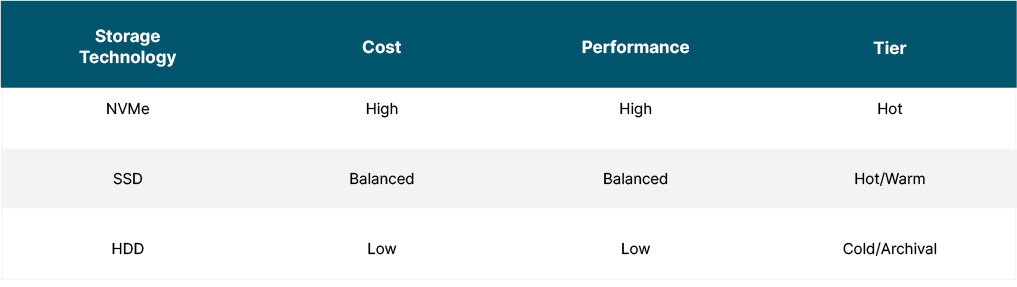

Drives

Since the primary use case here is storage, let's start with the drives. There are a number of different types of drives, and they vary widely in cost, performance and capacity, so the choice must be based on your use case. The drives can be roughly divided into the following three categories:

Generally, if you are using the MinIO cluster for basic object storage in production, you should consider something like NVMe SSDs, which give a good balance between cost and performance. If you are working with backups and archived data that is over a year old, is rarely queried, and still needs to be accessible (although not at lightning speed), that data can be tiered to more affordable media. In these cases, you will most likely use spinning SATA HDDs in the archive tier to save on cost.

This is the beauty of MinIO: it is simple and flexible, and it not only runs on a myriad of hardware but also lets you version objects and tier data, sending old, unused objects to slower drives such as SATA HDDs while keeping the latest or frequently accessed data on fast media such as NVMe. As mentioned earlier, based on our benchmarks, NVMe gives the best performance-to-cost ratio.

Network

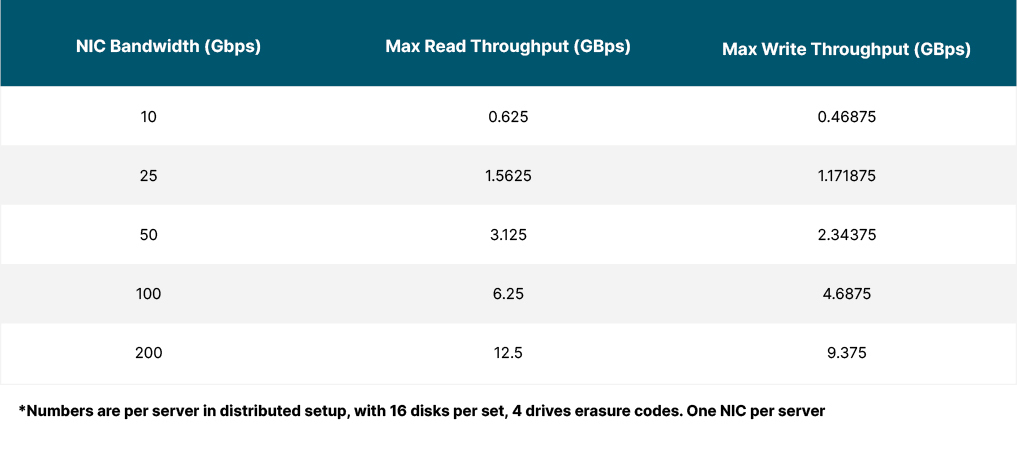

If you are used to working on a local system, you might consider drives the main bottleneck when it comes to storage. While this may be true locally, once you add distributed storage such as MinIO into the mix, the bottleneck shifts and you also need to consider inter-node performance, which depends on networking. Because data is stored across multiple servers, you need to ensure that the data transfer speed between servers is as fast as possible. While it is possible to run at 1 Gbps or 10 Gbps, if you truly want top performance you need at the very bare minimum 25 Gbps speeds with dual NICs. For high performance, we recommend the fastest network and NICs you can afford: 100GbE, 200GbE and 400GbE NICs are what we see as the norm in production these days.

When talking about network performance, you need to consider a few things. In practice it is not possible to reach a NIC's maximum theoretical speed because of various overheads, especially in a distributed setup. In addition, services other than MinIO use bandwidth during their normal operations. As a result, you can expect roughly 50% of NIC capacity to be available for MinIO. In a future blog post we’ll go into detail regarding the internals of networking and the best way to set it up for MinIO.
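As a rough planning aid, the 50% rule above can be turned into a per-node estimate. The function below is a hypothetical sketch of ours, not a MinIO tool: it assumes decimal units (1 Gbps = 125 MB/s) and the approximate 50% figure, which your own measurements should replace.

```bash
#!/usr/bin/env bash
# Hypothetical helper: estimate the bandwidth left for MinIO on one node.
# Assumes roughly 50% of raw NIC capacity is usable (rule of thumb above).
# Usage: usable_bandwidth_mbps <nic_speed_gbps> <nic_count>
usable_bandwidth_mbps() {
  local nic_gbps=$1 nic_count=$2
  local raw_gbps=$(( nic_gbps * nic_count ))
  # 1 Gbps = 125 MB/s in decimal units; keep 50% of that for MinIO.
  echo $(( raw_gbps * 125 * 50 / 100 ))
}
```

For example, `usable_bandwidth_mbps 25 2` prints `3125`, meaning dual 25 Gbps NICs leave roughly 3.1 GB/s for MinIO under this assumption.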

Insufficient bandwidth can artificially limit MinIO's performance, so it's essential to ensure that all networking components, such as fiber/Ethernet cables, routers, switches and NICs, support these high levels of throughput. Here is a basic chart of the guidelines that we recommend:

For example, if you use the minimum configuration of 4 nodes with 4 drives each (16 drives total) from our multi-server multi-drive docs, you would need a total possible aggregate network output of 25GbE.
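To see which side of a node is likely to limit throughput, you can compare aggregate drive throughput against the network bandwidth left for MinIO. This is a simplified sketch: `node_bottleneck` is a hypothetical helper, the per-drive figure is an input you should take from your own dd measurements, and the network side reuses the approximate 50% rule.

```bash
#!/usr/bin/env bash
# Hypothetical helper: compare a node's aggregate drive throughput with the
# network bandwidth left for MinIO (assumed ~50% of raw NIC capacity).
# Usage: node_bottleneck <drive_count> <per_drive_mbps> <nic_speed_gbps> <nic_count>
node_bottleneck() {
  local drives=$1 drive_mbps=$2 nic_gbps=$3 nic_count=$4
  local disk_total=$(( drives * drive_mbps ))
  # 1 Gbps = 125 MB/s; divide by 2 for the ~50% usable rule of thumb.
  local net_usable=$(( nic_gbps * nic_count * 125 / 2 ))
  if (( disk_total > net_usable )); then
    echo "network"   # the drives can outrun the NICs
  else
    echo "drives"    # the network has headroom left over
  fi
}
```

With eight drives at a hypothetical 2000 MB/s each and dual 100 Gbps NICs, `node_bottleneck 8 2000 100 2` prints `network`. This illustrates the point above that on fast drives the bottleneck shifts to the network.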

CPU and Memory

MinIO is very CPU efficient, and features like TLS, content encryption, erasure coding and compression often have no significant impact on MinIO's performance. Most CPU usage goes toward I/O: network and, in particular, disk I/O.

In general, MinIO does not recommend purchasing multi-socket (SMP) systems unless you expect to run multiple MinIO instances on discrete CPUs. Because MinIO is capable of very high throughput, interconnect traffic latency and Non-Uniform Memory Access (NUMA) effects typically slow the system down so much that SMP provides little to no benefit.

Instead, MinIO benefits more from a single CPU with a higher core count, or from spending the difference on other hardware improvements.
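Before repurposing an existing server, you can check whether it is a multi-socket NUMA system. The sketch below counts the NUMA nodes that the Linux kernel exposes under `/sys/devices/system/node`; `numa_node_count` is a hypothetical name, and the fallback to 1 on systems without that sysfs directory is our assumption. `lscpu` and `numactl --hardware` report the same information.

```bash
#!/usr/bin/env bash
# Count NUMA nodes exposed by the Linux kernel under sysfs.
# A result greater than 1 usually means a multi-socket (SMP/NUMA) system.
# Assumption: fall back to 1 when sysfs does not expose NUMA information.
numa_node_count() {
  local count=0 dir
  for dir in /sys/devices/system/node/node[0-9]*; do
    # An unmatched glob stays literal, so test that each entry exists.
    [ -d "$dir" ] && count=$(( count + 1 ))
  done
  (( count == 0 )) && count=1
  echo "$count"
}
```

A result greater than 1 usually indicates multiple sockets, or a CPU configured to expose several NUMA domains.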

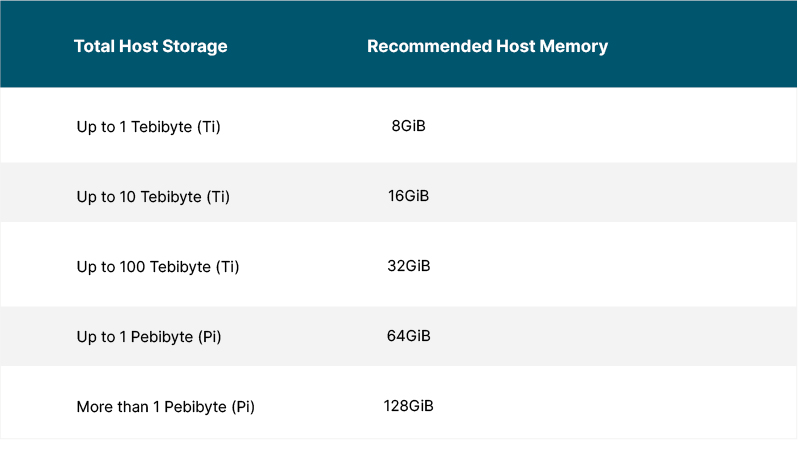

When sizing memory, one of the primary factors is the number of concurrent requests on the cluster. The total number of concurrent requests can be calculated as follows:

totalRam / ramPerRequest
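The formula above is plain integer division. In the sketch below, `max_concurrent_requests` is a hypothetical helper, and the per-request RAM figure is an input you would derive for your own drive count; the 100 MiB used in the example is illustrative only, not a MinIO figure.

```bash
#!/usr/bin/env bash
# Apply totalRam / ramPerRequest using integer MiB values.
# ram_per_request_mib is an input here; derive it for your own drive count.
# Usage: max_concurrent_requests <total_ram_mib> <ram_per_request_mib>
max_concurrent_requests() {
  local total_ram_mib=$1 ram_per_request_mib=$2
  if (( ram_per_request_mib <= 0 )); then
    echo "ram_per_request_mib must be positive" >&2
    return 1
  fi
  # Integer division rounds down: a partial request cannot be served.
  echo $(( total_ram_mib / ram_per_request_mib ))
}
```

With 128 GiB (131072 MiB) of RAM and a hypothetical 100 MiB per request, `max_concurrent_requests 131072 100` prints `1310`.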

To calculate the amount of RAM used per request, use the following calculation:

Let's look at a few examples of the maximum number of concurrent requests based on the number of drives and the amount of RAM:

Generally speaking, the amount of memory needed depends on the number of requests and the number of drives in the cluster. Here is a table that shows the amount of storage and the minimum recommended memory.

If you see performance degradation, such as a system running out of memory, you can either scale horizontally by adding more nodes to the cluster to spread the load, or add more memory to the existing nodes. For production workloads, we recommend 128GiB of RAM to ensure memory is never a bottleneck.
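A quick way to compare a node against the 128 GiB recommendation is to read `MemTotal` from `/proc/meminfo` on Linux. `MemTotal` excludes memory reserved by firmware and the kernel, so a 128 GiB server reports slightly less; the 5% allowance in this hypothetical sketch is an assumption, not a MinIO threshold.

```bash
#!/usr/bin/env bash
# Hypothetical check of a node's RAM against the 128 GiB recommendation.
# Input is MemTotal in kB, as reported in /proc/meminfo. MemTotal excludes
# firmware/kernel reservations, so we assume a 5% allowance.
# Usage: meets_ram_recommendation <memtotal_kb>
meets_ram_recommendation() {
  local memtotal_kb=$1
  local target_kb=$(( 128 * 1024 * 1024 ))        # 128 GiB in kB
  local threshold_kb=$(( target_kb * 95 / 100 ))  # assumed 5% allowance
  if (( memtotal_kb >= threshold_kb )); then
    echo "ok"
  else
    echo "below 128 GiB"
  fi
}

# Read MemTotal (kB) for the local machine on Linux.
local_memtotal_kb() {
  awk '/^MemTotal:/ {print $2}' /proc/meminfo
}
```

Run `meets_ram_recommendation "$(local_memtotal_kb)"` on each node.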

Rack Power

In addition to server failures, the racks and the components around them, such as power distribution units (PDUs), switches and routers, are subject to failure as well. Sometimes these also need to be taken offline for regular maintenance, and while every precaution is taken to ensure maintenance doesn’t take down the entire infrastructure, things do not always go as planned, leading to an outage at some unforeseen single point of failure (SPOF).

When designing power distribution for a MinIO deployment, it is paramount to ensure there are at least 2 Power Supply Units (PSUs) per server and that they connect to two different PDUs fed from different circuit breakers. This ensures redundancy at each of the following levels:

- Power source failure (circuit breaker tripping)

- PDU socket or strip failure

- PSU device or power cord failure

When connecting servers to a PDU, the general recommendation is not to exceed 80% of the circuit's rated amperage. For example, if you are provisioned with a 30 amp circuit, you should not load it beyond 24 amps, or you risk the circuit overheating and the breaker tripping. However, this is not always the case. Some data centers are wired so that you could potentially use the full 100% of the circuit’s rated capacity. These are generally far more efficient, as you can power more servers per rack than you could otherwise.
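The 80% guideline is easy to apply when planning rack power. `circuit_budget_amps` below is a hypothetical helper; pass a derating of 100 only for circuits your data center confirms are rated for full continuous load.

```bash
#!/usr/bin/env bash
# Continuous-load budget for a circuit, derated from its rated amperage.
# Defaults to the 80% guideline; pass 100 for circuits wired for full use.
# Usage: circuit_budget_amps <rated_amps> [derate_percent]
circuit_budget_amps() {
  local rated=$1 derate=${2:-80}
  echo $(( rated * derate / 100 ))
}
```

`circuit_budget_amps 30` prints `24`, matching the example above.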

But what about when there are 2 circuits for redundancy? How much power should each one carry? If you can only use 24 amps on a single 30 amp circuit, then with 2 circuits you can only use 12 amps on each. The reason is that when one circuit suffers a complete outage, the other circuit must take over its load. If both circuits were loaded to 80%, then during failover the surviving circuit would end up carrying 24 + 24 = 48 amps and would trip the “good” circuit as well. For this reason, when using 2 circuits for redundancy, it is recommended to use only 50% of each circuit's usable (80%) capacity.
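The failover reasoning can be expressed as two small checks: the per-circuit budget for a redundant pair, and whether the combined load of both circuits would fit on the survivor. Both functions are hypothetical sketches that assume the 80% derating above and a simple A/B pair where either circuit must carry the full load alone.

```bash
#!/usr/bin/env bash
# Per-circuit budget when two circuits back each other up (A/B feeds).
# Either circuit must absorb the full load alone, so each gets half of a
# single circuit's derated budget.
# Usage: redundant_circuit_budget_amps <rated_amps> [derate_percent]
redundant_circuit_budget_amps() {
  local rated=$1 derate=${2:-80}
  echo $(( rated * derate / 100 / 2 ))
}

# Succeeds if both circuits' combined draw fits on one derated circuit.
# Usage: failover_ok <rated_amps> <load_a_amps> <load_b_amps> [derate_percent]
failover_ok() {
  local rated=$1 load_a=$2 load_b=$3 derate=${4:-80}
  (( load_a + load_b <= rated * derate / 100 ))
}
```

`redundant_circuit_budget_amps 30` prints `12`, and `failover_ok 30 24 24` fails because 48 amps exceeds the 24-amp budget of the surviving circuit.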

Benchmarking Tools

With any production environment, you want to performance-test and stress-test the infrastructure. This lets you work out any bottlenecks or edge cases in the setup before production data is placed on the nodes. We offer a number of benchmarking tools to both our open source community and our customers.

Perf test: Integrated into the `mc` admin tool, the perf test lets you run a quick performance assessment of your MinIO cluster. You can use the results to track performance over time or to spot specific pitfalls you might encounter. Run the command as follows, replacing alias with the `mc` alias of your cluster:

mc support perf alias

WARP: Developed in-house by MinIO and open-sourced as a separate binary, WARP thoroughly benchmarks MinIO or any S3-compatible storage by performing mixed read and write tests across all drives used by the MinIO cluster. For instance, this is how you would launch a warp mixed benchmark:

WARP_ACCESS_KEY=minioadmin WARP_SECRET_KEY=minioadmin ./warp mixed --host host{1...4}:9000 --duration 120s --obj.size 64M --concurrent 64

DD: dd is a standard operating system tool for testing drive performance. Test each drive independently and compare the results to ensure all drives deliver the same performance. To measure actual drive performance with consistent I/O, test each drive during write operations:

dd if=/dev/zero of=/mnt/driveN/testfile bs=128k count=80000 oflag=direct conv=fdatasync 2> dd-write-driveN.txt

Also test it during read operations:

dd if=/mnt/driveN/testfile of=/dev/null bs=128k iflag=direct 2> dd-read-driveN.txt

When running dd, make sure the test uses file sizes similar to those you expect in production so the results are accurate. For example, if you expect to handle many (several million) small files, test with a comparable number and size of files to ensure the drives will perform well in production.
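Testing every drive and comparing the results can be scripted. This sketch assumes drives mounted at `/mnt/drive1` through `/mnt/driveN` (a hypothetical layout) and GNU dd, which prints its transfer summary on stderr with the throughput as the last comma-separated field; `dd_rate` and `benchmark_drives` are our own names.

```bash
#!/usr/bin/env bash
# Extract the throughput (e.g. "849 MB/s") from a file holding GNU dd's
# stderr. The last line looks like:
#   "<bytes> bytes (<size>, <size>) copied, <secs> s, <rate> <unit>"
dd_rate() {
  tail -n 1 "$1" | awk -F', ' '{print $NF}'
}

# Hypothetical layout: one mount per drive at /mnt/drive1 ... /mnt/driveN.
# Writes and then reads ~10 GB per drive, one drive at a time.
benchmark_drives() {
  local mnt name
  for mnt in /mnt/drive*; do
    [ -d "$mnt" ] || continue
    name=$(basename "$mnt")
    dd if=/dev/zero of="$mnt/testfile" bs=128k count=80000 \
      oflag=direct conv=fdatasync 2> "dd-write-$name.txt"
    dd if="$mnt/testfile" of=/dev/null bs=128k iflag=direct 2> "dd-read-$name.txt"
    rm -f "$mnt/testfile"
    echo "$name write=$(dd_rate "dd-write-$name.txt") read=$(dd_rate "dd-read-$name.txt")"
  done
}
```

Run `benchmark_drives` as a user with write access to each mount, then look for drives whose rates stand out from the rest.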

IO Controller Test: IOzone tests the read and write performance of the controller and all the drives on a node in the cluster. Below is an example command that you would run:

iozone -s 1g -r 4m -i 0 -i 1 -i 2 -I -t 160 -F /mnt/sdb1/tmpfile.{1..16} /mnt/sdc1/tmpfile.{1..16} /mnt/sdd1/tmpfile.{1..16} /mnt/sde1/tmpfile.{1..16} /mnt/sdf1/tmpfile.{1..16} /mnt/sdg1/tmpfile.{1..16} /mnt/sdh1/tmpfile.{1..16} /mnt/sdi1/tmpfile.{1..16} /mnt/sdj1/tmpfile.{1..16} /mnt/sdk1/tmpfile.{1..16} > iozone.txt

SUBNET: In addition to the open source tools mentioned above, our standard and enterprise customers also have access to Performance and Healthcheck tools via the SUBNET portal. These add further insight into performance data, with the added benefit that our engineers can guide you on the architecture and performance of your infrastructure.

SUBNET not only goes deep into the performance checks of the cluster, but also has a number of added benefits, such as Diagnostics, Logs and Cluster Inspection, among others. Most important of all, you will have direct support from the engineers who write the MinIO codebase. You do not need to open a ticket and explain the same thing several times before it is escalated. Our SUBNET portal is designed with user experience in mind and gives a chat-like experience, not unlike Slack, where you can write your messages as your thoughts flow while our engineers help you troubleshoot your issue. A few of our customers have told us that SUBNET is magical: there is no other software company in existence where the engineers who wrote the code are a click away.

Build Confidence with Chaos

Chaos Monkey is a tool originally developed by Netflix to intentionally degrade otherwise fine infrastructure in order to understand and anticipate the different failure scenarios. The tool does a number of things, but one example is taking a certain number of servers offline under load to see how gracefully the other servers take over the additional load.

Similarly, with MinIO we can get inventive and experiment with simulated failures. Take a couple of drives or servers offline to verify that the cluster behaves as the Erasure Code calculator predicts for the settings you chose. For instance, if your settings call for tolerating up to 4 servers being down at any given time, actually shut down 4 servers at random to see if the cluster is still operational. This is a great way to build confidence within your team when managing MinIO, so that in the future, when failure happens or maintenance needs to be done, everyone knows exactly what to expect from the cluster.
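To decide what to take offline, it helps to know what tolerance your erasure-coding settings imply. The sketch below is a simplified model, not MinIO's Erasure Code calculator: for an erasure set of N drives with EC:M parity, reads need N - M shards, and writes need the same except when M is exactly N/2, in which case one extra shard is required. The server estimate assumes the set's drives are spread evenly across servers. All function names are hypothetical.

```bash
#!/usr/bin/env bash
# Simplified erasure-coding tolerance model for ONE erasure set (a sketch,
# not MinIO's official calculator). N = drives in the set, M = parity (EC:M).
# Reads need K = N - M shards; writes need K, or K + 1 when M == N / 2.

# Drives that may be lost while writes still succeed.
ec_write_tolerance() {
  local stripe=$1 parity=$2
  local write_quorum=$(( stripe - parity ))
  (( parity * 2 == stripe )) && write_quorum=$(( write_quorum + 1 ))
  echo $(( stripe - write_quorum ))
}

# Usage: ec_drive_tolerance <stripe_size> <parity>
ec_drive_tolerance() {
  echo "read=$2 write=$(ec_write_tolerance "$1" "$2")"
}

# Servers that may go offline, assuming <drives_per_server> drives of this
# erasure set sit on each server.
# Usage: ec_server_tolerance <stripe_size> <parity> <drives_per_server>
ec_server_tolerance() {
  local per_server=$3 write_drives
  write_drives=$(ec_write_tolerance "$1" "$2")
  echo "read=$(( $2 / per_server )) write=$(( write_drives / per_server ))"
}
```

For a hypothetical 16-drive erasure set with EC:4 and one drive per server, `ec_server_tolerance 16 4 1` prints `read=4 write=4`, which corresponds to the four-servers-down test described above.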

You can also try taking individual network devices, such as switches, or entire racks offline, since MinIO can be made aware of not only drive- and server-level redundancy but also rack-level redundancy, as follows:

minio server http://rack{1...n}.host{1...n}/drive{1...n}
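MinIO expands `{x...y}` ranges (three dots) into the full set of endpoints. Bash brace expansion uses two dots but produces the same combinations, so you can preview a layout before starting the server. The 2-rack, 2-host, 4-drive layout here is hypothetical.

```bash
#!/usr/bin/env bash
# Preview the endpoints a MinIO-style pattern expands to, using bash brace
# expansion ({1..2} in bash corresponds to {1...2} in MinIO's syntax).
# Hypothetical layout: 2 racks x 2 hosts per rack x 4 drives per host.
list_endpoints() {
  printf '%s\n' http://rack{1..2}.host{1..2}/drive{1..4}
}
```

`list_endpoints | wc -l` prints `16`, the total number of drives MinIO would see for this layout.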

What is the Ideal Hardware Configuration?

The truth is that there is no single optimal hardware configuration for MinIO. Our customers select hardware based on their use case and requirements. If there were a single optimal hardware configuration, we would sell MinIO as an appliance, but this would deprive you of the freedom to choose the best hardware for the job and lock you into a specific form factor. Instead, we’ve chosen to empower you to select the best hardware or instances to meet your goals.

Rest assured that whichever hardware you select, MinIO is designed to extract the most performance out of it.

In the 32-node benchmark mentioned above, we did not use any performance-tuned hardware; instead, we relied on generic NVMe SSDs in commodity servers. The setup was as follows:

- 32 nodes, each with:
  - 96 CPU cores
  - 768 GB memory
  - 8 x 7500 GB NVMe SSDs
  - 100 Gbps network with dual NICs

We also provide a handy hardware reference guide and checklist that shows some MinIO-recommended hardware configurations along with the reasoning and calculations behind them.

Before deploying a configuration into production, be sure to stress-test the infrastructure using the tools mentioned above to establish a performance baseline. Perform Chaos Monkey-style operations to ensure the infrastructure is as resilient as possible and that your team is prepared for unexpected failures.

Before finalizing your hardware, be sure to reach out to our engineering team to discuss the optimal configuration for your cluster based on your application requirements. We’re here to help! Reach out to us on SUBNET or Slack, or email us at hello@min.io.