AIStor Tables: Native Iceberg V3 for On-Premises Object Storage

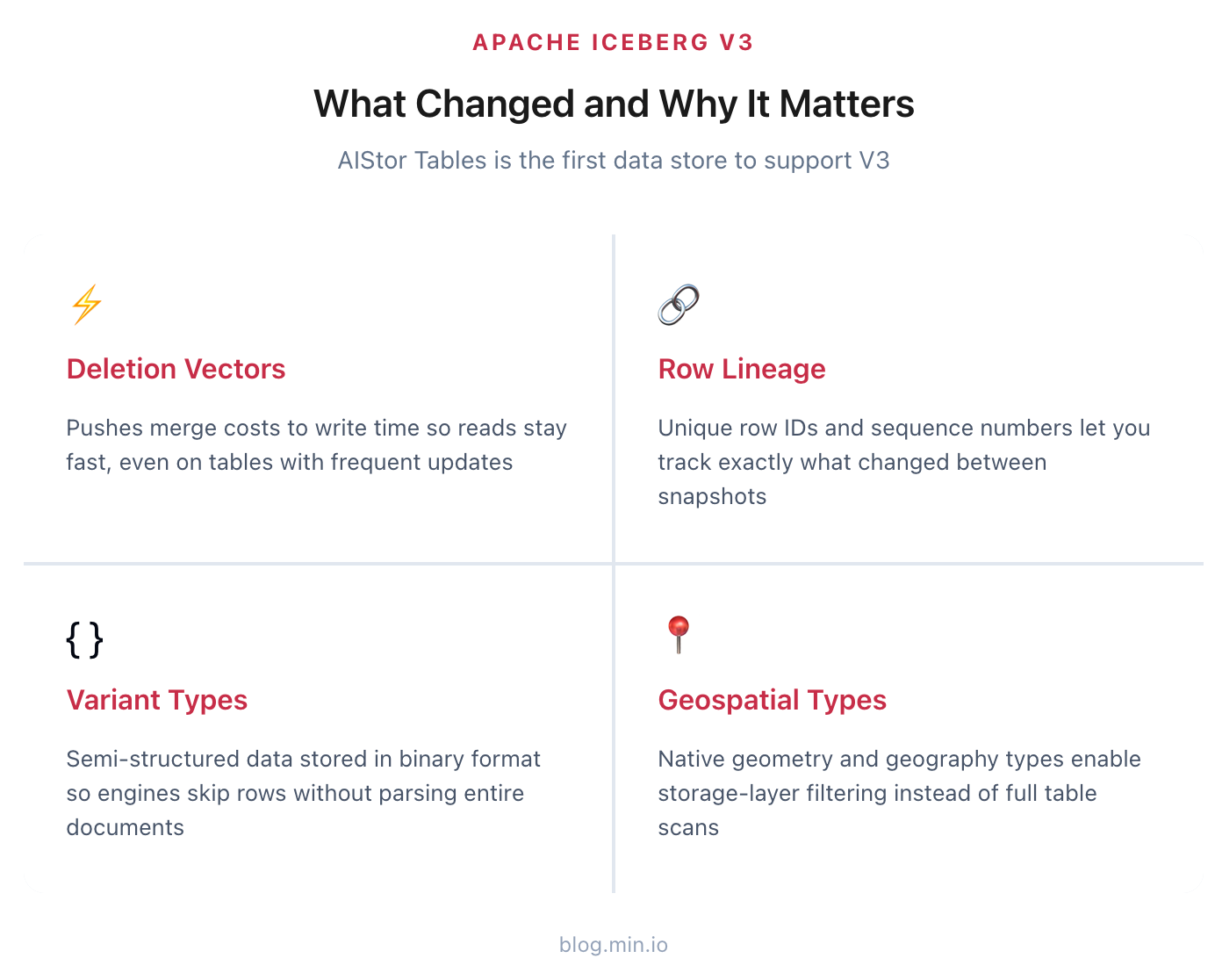

Apache Iceberg Format Version 3 represents a significant evolution in how table formats handle row-level operations, data lineage, and complex data types. AIStor Tables is the first data store in the industry to support V3, embedding the Iceberg Catalog REST API directly into MinIO AIStor object storage. This post explains what V3 changes at a technical level and why those changes matter for teams building data platforms.

How Iceberg Versioning Works

Iceberg format versions define what features a table can use. V1 established the foundation: immutable Parquet/Avro/ORC files, manifest-based file tracking, snapshot isolation, and schema evolution through column IDs rather than names. V2 added row-level delete support through position delete files (which reference file paths and row positions) and equality delete files (which specify column values to match).
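Here is how a V2 reader applies both kinds of delete file to a single data file, as a minimal Python sketch. `PositionDelete`, `EqualityDelete`, and `live_rows` are hypothetical in-memory stand-ins, not Iceberg APIs. The sketch also skips the sequence-number rules that decide which delete files apply to which data files.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PositionDelete:
    file_path: str  # data file the delete targets
    pos: int        # 0-based row position within that file

@dataclass(frozen=True)
class EqualityDelete:
    column: str     # column to compare
    value: object   # any row whose column equals this value is deleted

def live_rows(file_path, rows, position_deletes, equality_deletes):
    """Return the rows a reader would see after applying both delete kinds."""
    # Position deletes: keep only the positions that target this data file.
    deleted_pos = {d.pos for d in position_deletes if d.file_path == file_path}
    result = []
    for pos, row in enumerate(rows):
        if pos in deleted_pos:
            continue
        # Equality deletes: every row is checked against every delete entry.
        if any(row.get(d.column) == d.value for d in equality_deletes):
            continue
        result.append(row)
    return result
```

Equality deletes cost the most at read time because every row has to be compared against every entry, and that cost grows as delete files pile up.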

V3 builds on this with deletion vectors, row lineage, and new variant, geometry, and geography types. These changes affect both the write and read paths.

Faster Reads by Moving Delete Costs to Write Time

V2's approach to row-level deletes created operational problems. Position delete files reference specific row numbers within data files. Equality delete files list column values that readers must compare against every row in the affected data files. Neither approach required writers to consolidate deletes at write time, so tables accumulated many small delete files that readers had to merge on every query.

V3 introduces deletion vectors: compact binary bitmaps that mark deleted rows within a data file. The format uses Roaring Bitmaps encoded in Puffin files, which compress well for both sparse and dense delete patterns.
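Roaring Bitmaps compress well across both patterns because they adapt their container type to density. The simplified Python sketch below shows the idea. It splits each 32-bit row position into a high 16-bit container key and a low 16-bit value, then stores each container as a sorted array when it holds at most 4,096 values and as a fixed 8 KiB bitmap otherwise. Run containers and the 64-bit extension that Iceberg's deletion vectors use are deliberately left out, and `container_layout` is a hypothetical helper, not a Roaring library call.

```python
from collections import defaultdict

ARRAY_MAX = 4096             # at or below this cardinality, an array container is used
BITMAP_BYTES = 65536 // 8    # a bitmap container covers 2^16 values: 8 KiB

def container_layout(positions):
    """Group 32-bit row positions into Roaring-style containers.

    Returns {high16_key: (kind, approx_bytes)} where kind is "array" or "bitmap".
    """
    buckets = defaultdict(set)
    for p in positions:
        if not 0 <= p < 2**32:
            raise ValueError("this sketch only handles 32-bit positions")
        buckets[p >> 16].add(p & 0xFFFF)  # high 16 bits select the container
    layout = {}
    for key, lows in sorted(buckets.items()):
        if len(lows) <= ARRAY_MAX:
            layout[key] = ("array", 2 * len(lows))  # one 16-bit entry per deleted row
        else:
            layout[key] = ("bitmap", BITMAP_BYTES)  # fixed size however dense
    return layout
```

Sparse deletes cost about two bytes per deleted row, and dense deletes are capped at 8 KiB per 65,536 rows. The 4,096 threshold is the break-even point, since 4,096 two-byte entries occupy exactly 8 KiB.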

The critical change is behavioral, not just structural. V3 requires writers to maintain at most one deletion vector per data file. When a write operation deletes rows from a file that already has a deletion vector, the writer must merge the new deletes with the existing vector and produce a single updated vector. This pushes merge costs to write time rather than read time.
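The write-time merge can be sketched in a few lines of Python. `DeletionVectorIndex` is a hypothetical class, and a Python `frozenset` stands in for the Roaring bitmap stored in a Puffin file. The point it shows is that a delete replaces the file's vector with a merged one instead of adding a second delete file, so readers do one membership check per row.

```python
class DeletionVectorIndex:
    """Hypothetical writer-side view: at most one deletion vector per data file."""

    def __init__(self):
        self._vectors = {}  # data file path -> frozenset of deleted row positions

    def delete(self, file_path, positions):
        """Merge new deletes into the file's existing vector (the write-time cost)."""
        existing = self._vectors.get(file_path, frozenset())
        merged = existing | frozenset(positions)
        # Replace rather than append, so readers never see two vectors for one file.
        self._vectors[file_path] = merged
        return merged

    def vector_for(self, file_path):
        return self._vectors.get(file_path, frozenset())

    def is_deleted(self, file_path, pos):
        # Read path: a single lookup, with no delete files to merge.
        return pos in self.vector_for(file_path)
```

In a real table the replaced vector is also dropped from the new snapshot's metadata. This sketch tracks only the current state.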

For workloads with frequent updates, like change data capture pipelines or event correction workflows, this eliminates the accumulation of delete files that previously degraded read performance over time.

Row Lineage Enables Incremental Processing

Tracking which rows changed between snapshots has historically required scanning and comparing data. V3 adds row-level metadata to solve this: each row carries a unique row ID and a last-modified sequence number.

The row ID persists across compaction and file rewrites. When a row moves from one data file to another during maintenance operations, its ID stays the same. The sequence number updates only when the row's actual content changes, not when it relocates.
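The V3 spec exposes this lineage as the `_row_id` and `_last_updated_sequence_number` metadata fields. The minimal Python sketch below shows the two rules in this paragraph: compaction carries both fields over unchanged, and a content update keeps the row ID but stamps a new sequence number. The `Row` class and the `compact` and `update` helpers are hypothetical, and the spec's rules for assigning IDs to newly written rows are not modeled.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Row:
    row_id: int            # stable identity, like V3's _row_id
    last_updated_seq: int  # like V3's _last_updated_sequence_number
    data: dict

def compact(files):
    """Merge several small files' rows into one; lineage fields are copied as-is."""
    return [row for rows in files for row in rows]

def update(row, new_data, commit_seq):
    """Content change: keep the row ID, record the committing snapshot's sequence number."""
    return replace(row, data=new_data, last_updated_seq=commit_seq)
```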

This distinction matters for incremental processing. A system consuming changes from an Iceberg table can now identify exactly which rows were inserted, updated, or deleted between two snapshots by comparing row IDs and sequence numbers. Previously, file-level tracking could not distinguish between a row that was modified and a row that was simply rewritten into a new file during compaction.
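Given each snapshot as a map from row ID to last-updated sequence number, classifying changes reduces to set arithmetic. This is a hypothetical helper under that simplifying assumption. A real consumer would read the lineage columns from the table rather than build full maps.

```python
def classify_changes(before, after):
    """Compare two snapshots given as {row_id: last_updated_seq} maps.

    Returns (inserted, updated, deleted) sets of row IDs. A row whose sequence
    number did not change was at most relocated (e.g. by compaction) and is skipped.
    """
    inserted = set(after.keys() - before.keys())
    deleted = set(before.keys() - after.keys())
    updated = {rid for rid in before.keys() & after.keys() if after[rid] != before[rid]}
    return inserted, updated, deleted
```

With file-level tracking alone, a row rewritten during compaction would look like a delete plus an insert. Here it drops out because both its ID and its sequence number are unchanged.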

A Variant Type for Fast Semi-Structured Data Analysis

Storing JSON in string columns forces query engines to parse text at read time. They cannot push predicates into the storage layer because the structure is opaque until parsed. Every query reads every row.

The variant type encodes both the schema and the data in a binary format that engines can navigate without full parsing. A query filtering on a nested field can skip rows where that field does not match, without deserializing the entire JSON structure.

The encoding stores metadata about the variant's structure separately from the values. This allows engines to read only the portions of the variant they need. For wide JSON documents where queries typically access a small subset of fields, this reduces I/O significantly.

The variant type also handles schema inconsistency. Unlike struct types that require a fixed schema, variant columns can contain documents with different shapes in different rows. This fits use cases like event logs, API responses, and IoT telemetry where schemas evolve or vary by source.
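The toy Python encoding below illustrates the mechanism. It is explicitly not the real variant binary format. It copies only the core idea: a shared dictionary of field names, plus per-object field IDs and offset tables that let a reader jump straight to one nested field without decoding its siblings. The type tags, layout, and function names are invented for illustration.

```python
import struct

# Toy layout, NOT the variant spec. Objects are:
#   tag(1) | count n(2) | n field IDs(2 each) | n+1 offsets(4 each) | child values
T_INT, T_STR, T_OBJ = 0, 1, 2

def _collect_keys(doc, names):
    if isinstance(doc, dict):
        for key, value in doc.items():
            names.add(key)
            _collect_keys(value, names)

def build_metadata(docs):
    """Field-name dictionary shared by all rows: name -> field ID."""
    names = set()
    for doc in docs:
        _collect_keys(doc, names)
    return {name: i for i, name in enumerate(sorted(names))}

def encode(doc, ids):
    """Serialize ints, strings, and nested objects into the toy binary layout."""
    if isinstance(doc, int):
        return struct.pack("<Bq", T_INT, doc)
    if isinstance(doc, str):
        raw = doc.encode("utf-8")
        return struct.pack("<BI", T_STR, len(raw)) + raw
    if isinstance(doc, dict):
        items = sorted(doc.items(), key=lambda kv: ids[kv[0]])
        children = [encode(value, ids) for _, value in items]
        out = struct.pack("<BH", T_OBJ, len(items))
        out += b"".join(struct.pack("<H", ids[key]) for key, _ in items)
        offset = 0
        for child in children:  # start offset of each child in the value area
            out += struct.pack("<I", offset)
            offset += len(child)
        out += struct.pack("<I", offset)  # end offset
        return out + b"".join(children)
    raise TypeError(f"unsupported type: {type(doc).__name__}")

def get_path(buf, ids, path):
    """Walk a key path using headers and offsets only; decode just the leaf."""
    start = 0
    for key in path:
        if buf[start] != T_OBJ or key not in ids:
            return None
        (n,) = struct.unpack_from("<H", buf, start + 1)
        field_ids = struct.unpack_from(f"<{n}H", buf, start + 3)
        if ids[key] not in field_ids:
            return None  # this row's document simply lacks the field
        i = field_ids.index(ids[key])
        offsets_at = start + 3 + 2 * n
        (child,) = struct.unpack_from("<I", buf, offsets_at + 4 * i)
        start = offsets_at + 4 * (n + 1) + child
    tag = buf[start]
    if tag == T_INT:
        return struct.unpack_from("<q", buf, start + 1)[0]
    if tag == T_STR:
        (length,) = struct.unpack_from("<I", buf, start + 1)
        return buf[start + 5:start + 5 + length].decode("utf-8")
    return None  # leaf is an object; not decoded in this sketch
```

Rows with different shapes share one dictionary. For example, `[{"device": {"id": 42}, "event": "reboot"}, {"event": "ping", "rssi": -70}]` can both be encoded with `build_metadata` over the two rows, and `get_path` returns `None` for fields a row lacks instead of failing.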

Skip Full Scans for Geospatial Queries

Before V3, teams stored coordinates as binary columns (WKB) or text columns (GeoJSON strings). Iceberg treated these as opaque data. A query for all delivery stops within a city boundary had to read every row in the table, deserialize each geometry, and then apply the spatial filter. The storage layer couldn't help because it had no understanding of what the binary data represented.

This created two problems. First, query performance scaled with table size rather than result size. A table with billions of location records took the same time to scan whether you wanted points in a small neighborhood or across an entire continent. Second, partition strategies couldn't help much. You could partition by region or geohash, but irregular query boundaries rarely aligned cleanly with partition boundaries, so you still scanned more data than necessary.
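The pre-V3 read path described above can be sketched in Python for point data stored as WKB. The encoding follows the standard WKB point layout: a byte-order flag (1 for little-endian, 0 for big-endian), a 32-bit geometry type (1 for Point), then x and y as 64-bit floats. `scan_opaque_column` is a hypothetical name for what an engine must do when the column is opaque, which is decode every row before filtering.

```python
import struct

WKB_POINT = 1  # OGC geometry type code for Point

def point_to_wkb(x, y):
    """Encode a 2D point as little-endian WKB (21 bytes)."""
    return struct.pack("<BIdd", 1, WKB_POINT, x, y)

def wkb_to_point(buf):
    """Decode a 2D WKB point, honoring the byte-order flag."""
    order = "<" if buf[0] == 1 else ">"
    (geom_type,) = struct.unpack_from(order + "I", buf, 1)
    if geom_type != WKB_POINT:
        raise ValueError(f"this sketch only decodes Points, got type {geom_type}")
    return struct.unpack_from(order + "dd", buf, 5)

def scan_opaque_column(wkb_values, xmin, ymin, xmax, ymax):
    """Pre-V3 style: deserialize every row's geometry, then filter in memory."""
    hits = []
    for i, buf in enumerate(wkb_values):
        x, y = wkb_to_point(buf)  # full decode for every row, matching or not
        if xmin <= x <= xmax and ymin <= y <= ymax:
            hits.append(i)
    return hits
```

The loop touches every row regardless of how small the query region is. That is why cost scales with table size rather than result size.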

V3 adds geometry (planar coordinates) and geography (spherical coordinates accounting for Earth's curvature) as native types. With native types, Iceberg can store bounding box metadata in manifest files. A query for objects within a geographic region can skip entire data files whose bounding boxes do not intersect the query region. This is the same file-level pruning Iceberg already performs with min/max statistics on numeric and date columns, extended to spatial data.
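File skipping with bounding boxes can be sketched in Python as below. `BBox` and `prune_files` are hypothetical stand-ins for per-file bounds read from manifests. The sketch assumes planar coordinates and ignores the antimeridian wrap-around that spherical geography bounds must handle.

```python
from typing import NamedTuple

class BBox(NamedTuple):
    """Planar bounding box, standing in for per-file bounds kept in manifests."""
    xmin: float
    ymin: float
    xmax: float
    ymax: float

    def intersects(self, other: "BBox") -> bool:
        # Two boxes overlap unless one lies entirely to one side of the other.
        return not (self.xmax < other.xmin or other.xmax < self.xmin
                    or self.ymax < other.ymin or other.ymax < self.ymin)

def prune_files(file_bounds: dict[str, BBox], query: BBox) -> list[str]:
    """Return only the data files a spatial query still needs to open."""
    return [path for path, bbox in file_bounds.items() if bbox.intersects(query)]
```

Files that survive pruning still need a row-level spatial check, but files outside the query region are never opened.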

The types support standard representations (WKB, GeoJSON) and integrate with spatial libraries in query engines. Teams working with location data, logistics routing, or any application involving coordinates can now filter at the storage layer instead of reading everything and filtering in memory.

What This Means for Your Architecture

Iceberg V3's changes address specific pain points: delete file accumulation hurting read performance, difficulty tracking row-level changes for incremental processing, and inefficient handling of semi-structured and geospatial data.

Because AIStor Tables embeds the Iceberg REST Catalog API directly into MinIO AIStor object storage, it removes the external catalog service from your architecture. Teams running on-premises or hybrid deployments get V3 capabilities with one less component to manage while keeping full compatibility with the Iceberg ecosystem. Any engine that supports the Iceberg REST Catalog, including Spark, Trino, Dremio, Starburst, and Flink, can connect without modification.
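As an illustration of connecting an engine, the Python sketch below builds the standard Iceberg Spark properties for a REST catalog and a `CREATE TABLE` statement that requests format version 3 through the `format-version` table property. The catalog name, endpoint URI, warehouse name, and table schema are placeholders, not documented AIStor values. Authentication settings are omitted, and the sketch assumes the Spark and Iceberg runtime versions in use support V3. Consult the AIStor Tables documentation for the actual endpoint and credentials.

```python
def iceberg_rest_spark_conf(catalog, uri, warehouse):
    """Spark properties that register an Iceberg REST catalog under `catalog`."""
    prefix = f"spark.sql.catalog.{catalog}"
    return {
        "spark.sql.extensions":
            "org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions",
        prefix: "org.apache.iceberg.spark.SparkCatalog",
        f"{prefix}.type": "rest",
        f"{prefix}.uri": uri,              # placeholder: your catalog endpoint
        f"{prefix}.warehouse": warehouse,  # placeholder: your warehouse name
    }

def create_v3_table_sql(catalog, table):
    """DDL requesting format version 3 via the standard Iceberg table property."""
    return (f"CREATE TABLE {catalog}.{table} (id BIGINT, payload STRING) "
            "USING iceberg TBLPROPERTIES ('format-version'='3')")
```

Each key/value pair can be passed to `SparkSession.builder.config(key, value)` before calling `getOrCreate()`, and the generated statement can then be run with `spark.sql(...)`.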

To learn more, see AIStor Tables: Technical Deep Dive and the AIStor Tables documentation.

"Apache Iceberg, Iceberg, Apache, the Apache feather logo, and the Apache Iceberg project logo are either registered trademarks or trademarks of The Apache Software Foundation. Copyright © 2025 The Apache Software Foundation, Licensed under the Apache License, Version 2.0."