The Architect’s Guide to Thinking About the Hybrid/Multi Cloud

We were recently asked by a journalist to help frame the challenges and complexity of the hybrid cloud for technology leaders. While we suspect many technologists have given this a fair amount of thought, we also know from first-hand discussions with customers and community members that this is still an area of significant inquiry. We wanted to summarize that thinking into something practical, expanding where appropriate and becoming prescriptive where necessary.

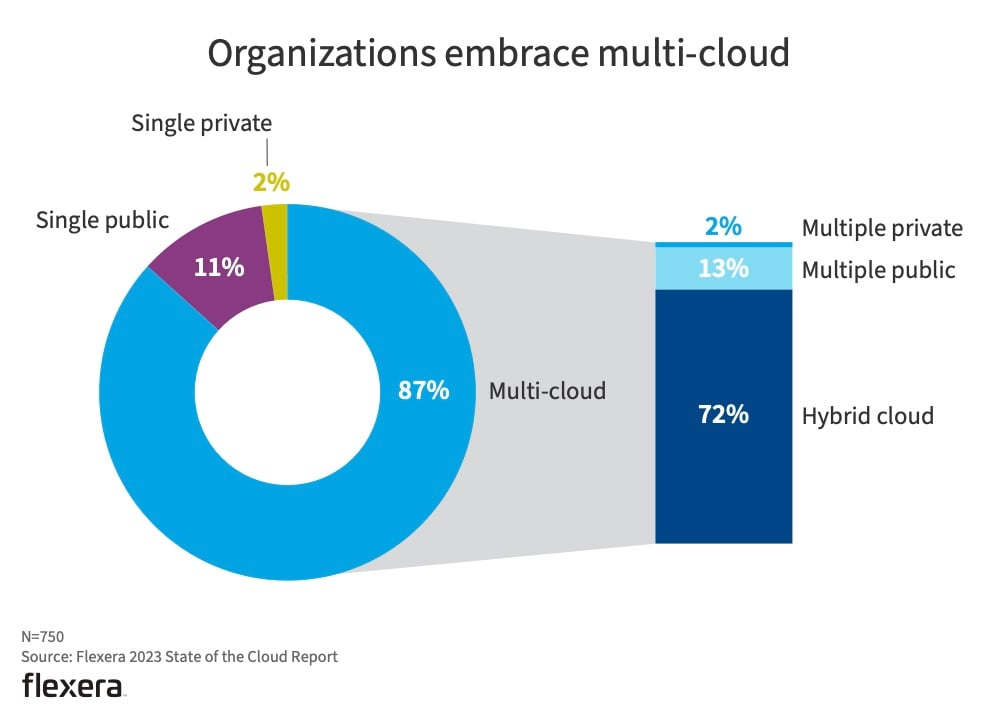

Let’s start by saying that the concepts of the hybrid cloud and the multicloud are difficult to unbundle. If you have a single on-premises private cloud and a single public cloud provider, doesn’t that qualify you as a multicloud? Not that anyone really has just two. Flexera researches the subject every year, and its report finds that 87% of enterprises consider themselves multicloud, with 3.4 public clouds and 3.9 private clouds on average, although those numbers are actually down slightly from the previous year’s report.

There is a legitimate question in there: Can you have too many clouds?

The answer is yes. If you don’t architect things correctly, you can find yourself in the dreaded “n-body problem” state. The term comes from physics and was co-opted for software development. In the context of multiple public clouds, the “n-body problem” refers to the complexity of managing, integrating and coordinating those clouds. In an “n-cloud environment,” each cloud service (AWS, Azure, Google Cloud, etc.) can be seen as a “body.” Each of these bodies has its own attributes, such as APIs, services, pricing models, data management tools and security protocols. The “n-body problem” in this scenario is to effectively manage and coordinate these diverse and often complex elements across multiple clouds, including interoperability, security and compliance, performance management, governance and access control, and data management.

As you add more clouds (or bodies) to the system, the problem becomes disproportionately more complex: the differences between the clouds do not add up linearly, and the behavior of the whole cannot be extrapolated from pairwise interactions.
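One way to see why the integration burden outgrows the cloud count is to count just the pairwise relationships that must be reconciled, such as a replication path or an identity federation between two clouds. This is a deliberate simplification (it ignores the higher-order interactions the n-body analogy points at, and the function name is our own), yet even this lower bound grows quadratically, as n(n - 1)/2:

```python
from itertools import combinations


def pairwise_relationships(clouds: list[str]) -> list[tuple[str, str]]:
    """Return every unordered pair of clouds that would need its own integration."""
    return list(combinations(clouds, 2))


# Illustrative estate; the pair count for n clouds is n * (n - 1) / 2.
estate = ["aws", "azure", "gcp", "ibm", "private"]
for n in range(1, len(estate) + 1):
    pairs = pairwise_relationships(estate[:n])
    print(f"{n} cloud(s) -> {len(pairs)} pairwise integrations")
```

Going from three clouds to five more than triples the number of pairs to reconcile (from 3 to 10), before counting any interactions that involve three or more clouds at once.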

Overcoming the "n-body problem" in a multicloud environment requires thoughtful architecture, particularly around the data storage layers. Choosing cloud-native and, more importantly, cloud-portable technologies can unlock the power of multicloud without incurring significant costs.

On the other hand, can there be too few clouds? If too few equals one, then probably. More than one, and you are thinking about the problem in the right way. It turns out that too few clouds, or multiple clouds with a single purpose (computer vision or straight backup, for example), deliver the same outcome: lock-in and increased business risk.

Lock-in reduces optionality, increases cost and diminishes the firm’s control over its technology stack, no matter how much choice exists within that one cloud (AWS, for example, has 200+ services and 20+ database services). Too few clouds also create business risk. AWS and other clouds go down, several times a year, and those outages can bring a business to a standstill.

Enterprises need to build a resilient, interchangeable cloud architecture. This means application portability and data replication, such that when a cloud goes down, the application can fail over to another cloud seamlessly. Again, you will find dozens of databases on every public and private cloud; in fact, some of them aren’t even available outside of the public cloud (see Databricks). That is not where the problem lies in the “too few clouds” challenge.
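The failover behavior described above can be sketched as an ordered list of deployments of the same application, each backed by replicated data, with traffic routed to the first one that reports healthy. The deployment names and the health-check callback are hypothetical placeholders; a production system would typically rely on load balancer or DNS health checks rather than an application-level loop:

```python
from typing import Callable


def choose_active_cloud(
    deployments: list[str],
    is_healthy: Callable[[str], bool],
) -> str:
    """Return the first healthy deployment in priority order.

    Assumes every deployment runs the same application against replicated
    data, so any healthy one can take over from the primary.
    """
    for name in deployments:
        if is_healthy(name):
            return name
    raise RuntimeError("no healthy cloud available")


# Hypothetical health status: the primary cloud is in the middle of an outage.
status = {"aws-us-east": False, "gcp-us-central": True, "on-prem": True}
active = choose_active_cloud(
    ["aws-us-east", "gcp-us-central", "on-prem"],
    lambda name: status.get(name, False),
)
print(active)  # gcp-us-central
```

The selection logic is trivial by design; the hard part, as the text notes, is making sure the data is already replicated to the clouds further down the list.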

The data layer is more difficult. You won’t find many storage options running on AWS, GCP, Azure, IBM, Alibaba, Tencent and the private cloud. That is the domain of true cloud-native storage players: those that are object stores, software-defined and Kubernetes-native. In an ideal world, AWS, GCP and Azure would all support the same API (S3), but they don’t. Applications that depend on data running on one of these clouds will need to be rearchitected to run on another. This is the lock-in problem.

The key takeaway is to be flexible in your cloud deployment models. Even the most famous “mono-cloud” players, like Capital One, have significant on-premises deployments (on MinIO, in fact). No large enterprise can “lock onto” one cloud. The sheer rigidity of that would keep it from acquiring companies that run on other clouds. That is the equivalent of cutting off one’s nose to spite one’s face.

Enterprises must be built for optionality in the cloud operating model. It is the key to success. The cloud operating model is about RESTful APIs, monitoring and observability, CI/CD, Kubernetes, containerization, open source and Infrastructure as Code. These requirements are not at odds with flexibility. On the contrary, adhering to these principles provides flexibility.

So What Is The Magic Number?

Well, it is not 42. It could, however, be three. Provided the enterprise has made wise architectural decisions (cloud-native, portable, embracing Kubernetes), the answer will be between three and five clouds, with provisions for regulatory requirements that dictate more.

Again, assuming the correct architecture, that range should provide optionality, which in turn provides leverage on cost. It should also provide resilience in the event of an outage and a deep catalog of the services you need, while keeping the n-body problem manageable.
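To put a rough number on the resilience argument, assume each cloud is available a fraction a of the time and that outages are independent. The chance that all n clouds are down at once is then (1 - a)^n. The 99.9% availability below is an illustrative assumption, not any provider’s SLA, and real outages can be correlated (shared DNS, shared upstream dependencies), so treat the result as a best case:

```python
def probability_all_down(availability: float, n_clouds: int) -> float:
    """Probability that every cloud is down at the same time.

    Assumes each cloud fails independently with the same availability.
    """
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    return (1.0 - availability) ** n_clouds


# Illustrative assumption: each cloud is available 99.9% of the time.
for n in (1, 2, 3):
    print(f"{n} cloud(s): P(all down) = {probability_all_down(0.999, n):.1e}")
```

Each additional cloud multiplies the chance of a total outage by (1 - a), which is why even a second or third cloud matters so much, provided the application and its data can actually fail over.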

What About Manageability?

While most people will tell you complexity is the hardest thing to manage in a multicloud environment, the truth is that consistency is the primary challenge. Having software that can run across clouds (public, private, edge) provides the consistency to manage complexity. Take object storage. If you have a single object store that can run on AWS, GCP, Azure, IBM, Equinix or your private cloud, your architecture becomes materially simpler. Consistent storage and its features (replication, encryption, etc.) enable the enterprise to focus on the application layer.
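The consistency argument can be sketched in code: the application depends only on a small object-storage interface, and each deployment supplies a backend for its cloud. Everything here is hypothetical. `ObjectStore` and `InMemoryStore` are illustrative stand-ins whose method names echo the S3 PutObject and GetObject operations; in practice the backend would be an S3-compatible client pointed at a different endpoint in each cloud, not an in-memory dictionary:

```python
from typing import Protocol


class ObjectStore(Protocol):
    """The minimal S3-style surface the application is allowed to use."""

    def put_object(self, bucket: str, key: str, body: bytes) -> None: ...

    def get_object(self, bucket: str, key: str) -> bytes: ...


class InMemoryStore:
    """Stand-in backend; a real deployment would wrap an S3-compatible client."""

    def __init__(self, endpoint: str) -> None:
        self.endpoint = endpoint  # hypothetical per-cloud endpoint URL
        self._objects: dict[tuple[str, str], bytes] = {}

    def put_object(self, bucket: str, key: str, body: bytes) -> None:
        self._objects[(bucket, key)] = body

    def get_object(self, bucket: str, key: str) -> bytes:
        return self._objects[(bucket, key)]


def archive_report(store: ObjectStore, report_id: str, data: bytes) -> bytes:
    """Application code: identical no matter which cloud the store runs in."""
    store.put_object("reports", f"{report_id}.json", data)
    return store.get_object("reports", f"{report_id}.json")


# The same application function runs unchanged against stores in different clouds.
for endpoint in ("https://s3.aws.example", "https://s3.gcp.example", "https://s3.onprem.example"):
    store = InMemoryStore(endpoint)
    print(endpoint, archive_report(store, "q3", b'{"status": "ok"}'))
```

Because the application only ever sees the interface, moving it between clouds becomes a configuration change (a different endpoint) rather than a rewrite, which is the opposite of the lock-in problem described earlier.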

Consistency creates optionality and optionality creates leverage. Reducing complexity can’t come at some unsustainable cost. By selecting software that runs across clouds (public, private, edge) you reduce complexity and you increase optionality. If it’s cheaper to run that workload on GCP, move it there. If it’s cheaper to run that database on AWS, run it there. If it’s cheaper to store your data on-premises and use external tables, do that.
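Once a workload can run anywhere, the “if it’s cheaper there, run it there” rule reduces to a simple selection. The cloud names and hourly prices below are made-up placeholders, and a real placement decision would also weigh egress charges, data gravity and compliance constraints:

```python
from typing import Optional


def cheapest_cloud(hourly_cost: dict[str, float], allowed: Optional[set[str]] = None) -> str:
    """Pick the lowest-cost cloud for a portable workload.

    `allowed` optionally restricts the choice, e.g. for data-residency rules.
    """
    candidates = {
        cloud: price
        for cloud, price in hourly_cost.items()
        if allowed is None or cloud in allowed
    }
    if not candidates:
        raise ValueError("no eligible cloud")
    return min(candidates, key=candidates.get)


# Hypothetical per-hour prices for the same workload.
prices = {"aws": 4.10, "gcp": 3.85, "azure": 4.00, "on-prem": 3.20}
print(cheapest_cloud(prices))                          # on-prem
print(cheapest_cloud(prices, allowed={"aws", "gcp"}))  # gcp
```

The function is only meaningful because the storage layer is consistent; if the data were tied to one provider’s API, acting on the cheaper price would first require a migration.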

Choose software that provides a consistent experience for the application and the developer, and you will achieve optionality and gain leverage over cost. Make sure that experience is based on open standards (S3 APIs, open table formats).

Bespoke cloud integrations turn out to be a terrible idea. As noted, each additional native integration adds disproportionately more complexity. Think of it this way: if you invest in dedicated teams, you are investing $5 million to $10 million per platform, per year, in engineers. That doesn’t account for the cost of the tribal knowledge for each cloud. In the end, it results in buggy and unmaintainable application code. Software-defined, Kubernetes-centric software can solve these problems. Make that investment, not the one in bespoke cloud integrations.
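The staffing figure compounds quickly. Taking the $5 million to $10 million per platform, per year range at face value, a short calculation gives the annual cost of dedicated integration teams. The platform counts are illustrative, and, as in the estimate itself, the cost of tribal knowledge is left out:

```python
def bespoke_team_cost(platforms: int, low: float = 5e6, high: float = 10e6) -> tuple[float, float]:
    """Annual engineering spend range for dedicated per-platform integration teams."""
    return platforms * low, platforms * high


for n in (3, 4, 5):
    low, high = bespoke_team_cost(n)
    print(f"{n} platforms: ${low / 1e6:.0f}M to ${high / 1e6:.0f}M per year")
```

At the three-to-five-cloud range suggested earlier, that is $15 million to $50 million a year spent on integration teams alone.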

What We Fail to See…

As IT leaders, we often deal with what is in front of us: shadow IT or new M&A integrations. Because of this, we often fail to create the framework or first principles for the overall strategy. Nowhere is this more evident than in the cloud operating model. Enterprises need to embrace first principles for containerization, orchestration, RESTful APIs like S3, automation and the like. Those first principles create the foundation for consistency.

IT leaders who mandate Cloud A over Cloud B because they get a bunch of upfront credits, or benefits for other applications in the portfolio, are suckers in the game of lock-in that companies like Oracle pioneered and the big three have mastered.

Going multicloud should not be an excuse for a ballooning IT budget and an inability to hit milestones. It should be the vehicle to manage costs and accelerate the roadmap. Establishing first principles for the cloud operating model, and adhering to that framework, provides the context to analyze almost any situation.

Cloud First or Architecture First?

We had a client ask us the other day if we recommended dispersing clouds among multiple services. It took us a moment to understand the question because it was the wrong one. The question should have been, “Should I deploy multiple services across multiple clouds?”

The answer to that is yes.

Snowflake runs on multiple clouds. HashiCorp Vault runs on multiple clouds. MongoDB runs on multiple clouds. Spark, Presto, Flink, Arrow and Drill run on multiple clouds. MinIO runs on multiple clouds.

Pick an architecture stack that is cloud-native and you will likely get one that is cloud-portable. This is the way to think about the hybrid/multicloud.