Boosting Snowflake with External Tables

As a Snowflake user, you're likely familiar with its strengths in managing data in collaborative environments. The user experience of Snowflake is often praised for its intuitive interface and seamless integration capabilities. Snowflake's ability to handle diverse data workloads, from simple queries to complex data processing tasks, makes it an indispensable tool for many data professionals. There has, however, been a gap historically in data not in Snowflake, for regulatory, logistical or economic reasons.

Frank Slootman emphasized in Snowflake’s Q2 FY23 earnings report that Snowflake’s next frontier of innovation is aimed at transforming how cloud applications are built, deployed, sold, and transacted, further underscoring the company’s strategic pivot towards connecting to data wherever it’s stored and created. Examining these principles is the foundation of the cloud operating model; which views the cloud not as a physical location, but as a design principle and goes a long way towards reflecting on those gaps in data afflicting many Snowflake users. The Q1 FY23 report also reflects this focus, with significant investments in enabling every single workload type needing data access.

Along with these trends, more recent earnings reports indicate that Snowflake is shifting its focus from storage to compute, driven by the increasing demand for AI-capable data products. This ravenous demand is evident in Snowflake’s product revenue, which reached $698.5 million in Q3 FY24, a 34% year-over-year increase. This growth is attributed to the rising need for computational power for AI and advanced analytics rather than pure storage.

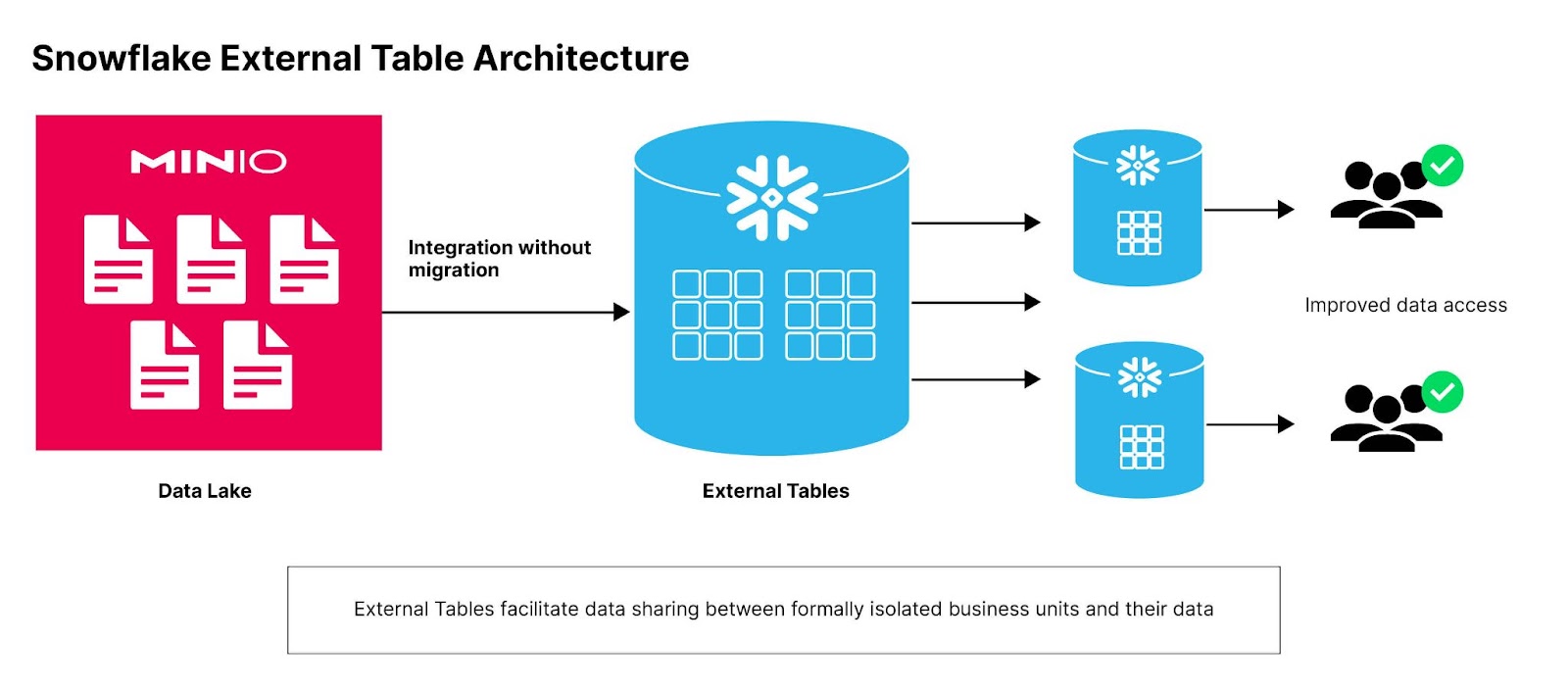

To address the gaps in data accessibility and continue its growth trajectory, Snowflake has been investing heavily in external tables. These features allow users to query and operate on data stored in external object storage in Snowflake. This strategic approach enables Snowflake to delegate storage responsibilities to specialized providers while focusing on enhancing its compute capabilities, which are crucial for AI and advanced analytics.

Integrating external data sources into Snowflake’s platform is particularly powerful. By supporting external tables, Snowflake ensures that users can access and analyze data regardless of where it is stored. This not only bridges the historical gaps in data accessibility but also leverages the strengths of object storage solutions; which in the case of MinIO include performance, availability, and scalability. Read on for more details on implementing this architecture in your Snowflake environment.

Simple to Use

Like most things in Snowflake, connecting to your data stored on MinIO is relatively simple. Querying data stored in MinIO buckets can be as simple as Select * from Bucket1.

Setting up Snowflake

It’s also important to note that while the basic functionality of external tables is available across various editions of Snowflake, certain advanced features, such as materialized views on external tables, require the commercial version.

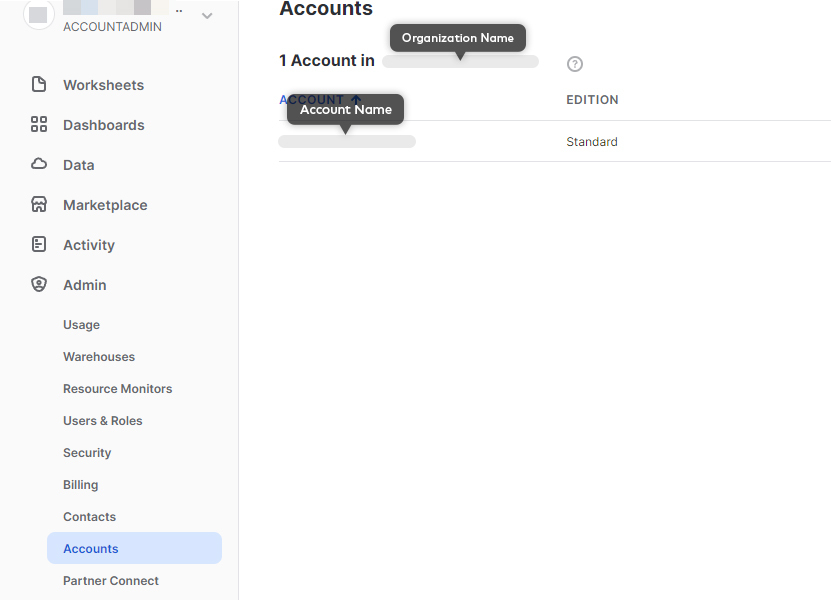

You will most likely need to reach out to your Snowflake rep to whitelist your MinIO endpoint. For this request, you will need to gather your account identifier, Snowflake cloud and Snowflake region.

Setting up MinIO

MinIO is an object storage solution that offers an Amazon Web Services S3-compatible API, supporting all core S3 features. Designed to view the cloud as an operating model rather than a physical location, MinIO can be deployed across various environments including public and private clouds, bare metal infrastructure, orchestrated environments, and edge locations.

There are only a few requirements on the MinIO setup to get it to work with Snowflake.

- MinIO has to be set up to allow for DNS style access, and the bucket must be publicly available. For example, if your MinIO server is at https://play.min.io, and the bucket is named snowflake, the bucket should be accessible at https://snowflake.play.min.io.

- Currently, the region has to be set to NULL or the same as the region of the Snowflake instance (e.g.

us-west-2). You will get an error message from the Snowflake query so you will know which region it is expecting.

In the example below, the bucket snowflake has 4 objects in it and another object in the folder/sub-prefix sn1. Snowflake will fetch all of these objects.

The sample files are available at https://docs.snowflake.com/en/user-guide/getting-started-tutorial-prerequisites.html#sample-data-files-for-loading

Getting Started

The first step to create an external table is to create an external stage. An external stage in Snowflake is used to reference an external data location (such as object storage) where files are stored. It acts as a pointer to that data location. They are primarily used for data loading and unloading operations and facilitate the process of staging files for bulk data operations as well as to support external tables.

In the past, external stages were all inside Snowflake. This often resulted in duplicated data, with this architecture allowing the same data in object storage, the stage in Snowflake, and in Snowflake tables. However, enterprises can set up high-performant object storage like MinIO as an external stage directly.

This does the following

- Simplifies the flow of data into Snowflake

- Allows for data to be available faster for analysis to meet business needs

- Ensures that there are no unnecessary copies of the data which helps in both governance and cost.

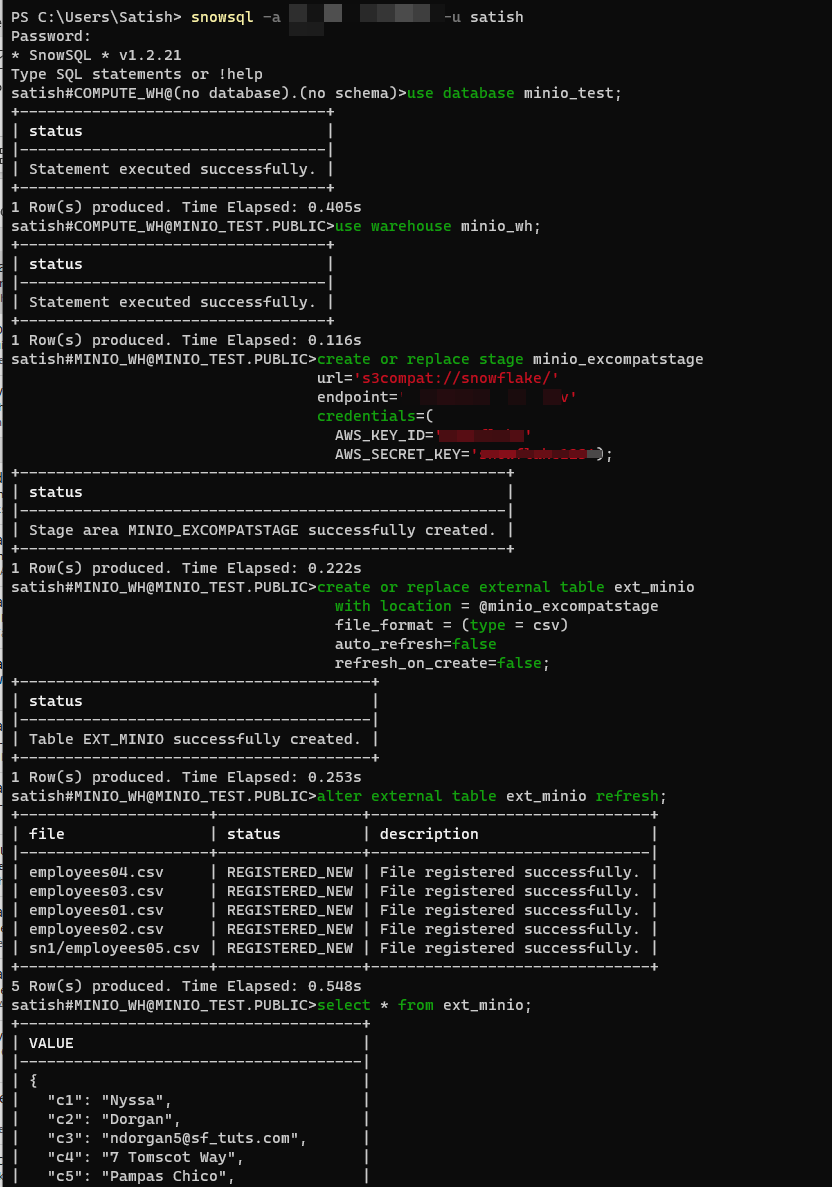

Below is a sample SQL command you can execute in the Snowflake Console to set up a MinIO external stage. Replace the url with your own bucket and endpoint with your own endpoint.

create or replace stage minio_excompstage

url='s3compat://snowflake/'

endpoint='play.min.io'

credentials=(

AWS_KEY_ID='********'

AWS_SECRET_KEY='********'

);MinIO for External Tables

Queries in Snowflake can be performed directly on external tables, allowing enterprises to access data in MinIO buckets without the need for data migration. This architecture enables users to have read-only access to data in MinIO inside their Snowflake environment and to avoid the costs and delays associated with moving data into Snowflake. External tables function just like any other read-only table in Snowflake and can be used in JOINs, SELECT queries, and included in materialized views.

The time taken by the initial query will depend on the amount of data that is being transferred, but reads are cached and subsequent queries may be faster till the next refresh.

The benefits of this approach are huge:

Here is example SQL to implement an external table in your own Snowflake:

create or replace external table ext_minio

with location = @minio_excompstage

file_format = (type = csv)

auto_refresh=false

refresh_on_create=false;

alter external table ext_minio refresh;You are now able to query your external table and join it to other tables in your Snowflake.

select * from ext_minio;Using Snowflake CLI (SnowSQL)

The same commands above for creating can be performed using the Snowflake CLI (SnowSQL).

You can find the instructions on how to install SnowSQL on your platform here: https://docs.snowflake.com/en/user-guide/snowsql-install-config.html

The only difference is that you have to start with the command

$ snowsql -a <account_identifier> -u <user_name>The account-identifier is of the format <organization-name>-<account-name>. You can find this from the Admin page in the Snowflake console.

Summary

If you are a MinIO customer looking to add Snowflake capabilities or if you are a Snowflake customer looking to extend its capabilities to data stored outside Snowflake, give it a try. Either way, with external tables you get to extract more out of your current infrastructure investment. Reach out to us at hello@min.io or our Slack channel with any questions.