Building a High-Performance, On-Prem Data Pipeline with Materialize and MinIO AIStor

Materialize is a software platform designed for real-time data integration and transformation. It lets you create up-to-date views of any aspect of your business just by using SQL. Materialize is built on top of Timely Dataflow, a distributed data-parallel compute engine, and focuses on high throughput, incremental updates to queries as new data arrives. For these workloads, where performance is critical, running on-prem is a cost-effective and performant choice.

Materialize’s primary storage is object storage. While other object storage vendors are on the market, the combination of Materialize and MinIO AIStor far exceeds the standard performance achievable with other object storage solutions. AIStor fully saturates the network, and the only factors limiting its performance are your networking capacity and hardware. If performance is a priority when processing your data, combining AIStor and Materialize could be the ideal solution.

This tutorial will help you get started with AIStor and Materialize locally.

Why Build On-Prem

Deploying your data infrastructure on-prem offers several advantages, particularly when performance and control are paramount, as is the case for real-time analytics on sensitive data. You’ll get a performance boost by positioning your object store near your data sources, dashboards, and applications, because lower latency means faster query responses. However, latency is only one part of performance; the other is throughput. You can achieve high throughput by building your data platform on MinIO AIStor. This architecture enables Materialize to take advantage of the fastest object storage on the market, even faster than Amazon’s S3 when deployed to AWS. If performance matters, this combination could be key to your infrastructure.

Another benefit of running Materialize and AIStor on-prem is that it provides greater control over your data, which is crucial for organizations with stringent security and compliance requirements. By maintaining data within your own infrastructure, you can implement tailored security measures and ensure compliance with industry-specific regulations.

On-prem deployments can also save costs by eliminating recurring cloud fees, such as egress charges and per-request fees for GETs and PUTs against object storage. MinIO AIStor runs on commodity hardware, further driving down costs while delivering high performance.

Data is the foundation of AI

AI is only as good as the data it learns from. High-quality, well-structured, and accessible data is the backbone of every successful AI initiative. Even the most advanced models will struggle to deliver meaningful insights without clean, well-managed data.

Fresh data is critical for AI-driven workloads. Materialize can help ensure that data lakes are updated in real time and that data is available when needed.

Performance is more than a vanity metric

True performance isn’t just about headline speeds—it’s about delivering that performance consistently and efficiently at scale. MinIO AIStor supports single namespace deployments that scale to hundreds of petabytes and deliver over 3 TiB/s of read and write throughput. This is a key differentiator. While some competitors can saturate a single GPU server, doing so typically requires more hardware, cost, and complexity—especially when managing multiple clusters or namespaces. With AIStor, you get maximum throughput per GPU server (up to 53 GiB/s over 400GbE) while keeping the storage architecture simple and manageable. This means fewer mountpoints for IT teams to maintain and fewer buckets for developers to worry about. The result? Faster time to insight, lower total cost of ownership, and better price/performance—at scale.

Local Materialize and MinIO AIStor Deployment Tutorial

Prerequisites

Before starting, ensure you have the following installed:

- Kind: Install Kind.

- Docker: Install Docker.

- Helm 3.2.0+: If not installed, follow the Helm documentation.

- kubectl: Install kubectl.
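
The prerequisite list above can be checked from a terminal before going further. The following is a minimal sketch, not part of the official instructions: the helper names `missing_tools` and `version_at_least` are made up for illustration, and the version comparison assumes a `sort` that supports `-V` (GNU coreutils and recent macOS versions do).

```shell
#!/bin/sh
# Print the name of every command in the argument list that is not on PATH.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

# Succeed when version $1 is greater than or equal to version $2.
# Assumes `sort -V` (version sort) is available.
version_at_least() {
  lowest=$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)
  [ "$lowest" = "$2" ]
}

missing=$(missing_tools kind docker helm kubectl)
if [ -n "$missing" ]; then
  # Unquoted on purpose so the names print on one line.
  echo "Missing prerequisites:" $missing
elif version_at_least "$(helm version --template '{{.Version}}' | sed 's/^v//')" 3.2.0; then
  echo "All prerequisites found."
else
  echo "Helm is older than 3.2.0."
fi
```

The check only confirms that each command exists and that Helm reports a new enough version; it does not verify that Docker is actually running.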

Docker Resource Requirements

For this local deployment, ensure Docker has:

- 3 CPUs

- 10GB memory
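
One way to confirm these limits is to ask Docker what it sees. This is a rough sketch under stated assumptions: `meets_requirements` is a hypothetical helper, and treating "10GB" as 10 × 1000³ bytes is a guess chosen because Docker typically reports a little less memory than the configured VM size.

```shell
#!/bin/sh
# Thresholds taken from the requirements above. The byte value for "10GB"
# is an assumption (decimal gigabytes), not an official figure.
MIN_CPUS=3
MIN_MEM_BYTES=10000000000

# Succeed when the given CPU count and memory size (in bytes) meet both thresholds.
meets_requirements() {
  [ "$1" -ge "$MIN_CPUS" ] && [ "$2" -ge "$MIN_MEM_BYTES" ]
}

# NCPU and MemTotal are fields reported by `docker info`; MemTotal is in bytes.
if info=$(docker info --format '{{.NCPU}} {{.MemTotal}}' 2>/dev/null) && [ -n "$info" ]; then
  cpus=${info%% *}
  mem=${info##* }
  if meets_requirements "$cpus" "$mem"; then
    echo "Docker resources look sufficient: $cpus CPUs, $mem bytes of memory."
  else
    echo "Docker has $cpus CPUs and $mem bytes of memory; consider raising its limits."
  fi
else
  echo "Could not query Docker; is the daemon running?"
fi
```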

Installation Steps

- Start Docker: Ensure Docker is running.

- Open a Terminal: Create a working directory and navigate to it:

mkdir my-local-mz

cd my-local-mz

- Create a Kind Cluster:

kind create cluster

- Label the Kind Node:

MYNODE=$(kubectl get nodes --no-headers | awk '{print $1}')

Apply labels:

kubectl label node $MYNODE materialize.cloud/disk=true

kubectl label node $MYNODE workload=materialize-instance

Verify labels:

kubectl get nodes --show-labels

- Download Sample Configuration Files:

Set the Materialize version:

mz_version=v0.130.4

Download files:

curl -o sample-values.yaml https://raw.githubusercontent.com/MaterializeInc/materialize/refs/tags/$mz_version/misc/helm-charts/operator/values.yaml

curl -o sample-postgres.yaml https://raw.githubusercontent.com/MaterializeInc/materialize/refs/tags/$mz_version/misc/helm-charts/testing/postgres.yaml

curl -o sample-minio.yaml https://raw.githubusercontent.com/MaterializeInc/materialize/refs/tags/$mz_version/misc/helm-charts/testing/minio.yaml

curl -o sample-materialize.yaml https://raw.githubusercontent.com/MaterializeInc/materialize/refs/tags/$mz_version/misc/helm-charts/testing/materialize.yaml

File Descriptions:

sample-values.yaml: Configures the Materialize Operator.

sample-postgres.yaml: Configures PostgreSQL as the metadata database.

sample-minio.yaml: Configures MinIO.

sample-materialize.yaml: Configures the Materialize instance.
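
The four downloads share one URL pattern, so they can be driven by a small loop. This is a sketch rather than the recommended procedure: the function names `mz_sample_url` and `download_samples` are hypothetical, while the base URL and file paths are copied from the curl commands above. It adds curl's `-f` flag so that an HTTP error fails the step instead of saving an error page as YAML.

```shell
#!/bin/sh
# Base URL copied from the curl commands in this step.
MZ_RAW_BASE="https://raw.githubusercontent.com/MaterializeInc/materialize/refs/tags"

# Build the raw GitHub URL for a chart file ($2) at a given release tag ($1).
mz_sample_url() {
  printf '%s/%s/misc/helm-charts/%s\n' "$MZ_RAW_BASE" "$1" "$2"
}

# Download all four sample files for one version, stopping at the first failure.
download_samples() {
  version=$1
  for pair in \
    "sample-values.yaml operator/values.yaml" \
    "sample-postgres.yaml testing/postgres.yaml" \
    "sample-minio.yaml testing/minio.yaml" \
    "sample-materialize.yaml testing/materialize.yaml"
  do
    out=${pair%% *}    # local file name (text before the space)
    path=${pair#* }    # path inside the repository (text after the space)
    curl -fsSL -o "$out" "$(mz_sample_url "$version" "$path")" || return 1
  done
}

# Example: download_samples v0.130.4
```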

- Install the Materialize Helm Chart:

Add the Helm repository:

helm repo add materialize https://materializeinc.github.io/materialize

Update the repository:

helm repo update materialize

Install the Materialize Operator:

helm install my-materialize-operator materialize/materialize-operator \
  --namespace=materialize --create-namespace \
  --version v25.1.2 \
  --set observability.podMetrics.enabled=true \
  -f sample-values.yaml

Verify installation:

kubectl get all -n materialize

Wait for all components to be in the Running state.

- Install PostgreSQL and MinIO AIStor:

Install PostgreSQL:

kubectl apply -f sample-postgres.yaml

Install AIStor:

kubectl apply -f sample-minio.yaml

Verify installation:

kubectl get all -n materialize

Wait for all components to be in the Running state.

- Install the Metrics Server:

Add the metrics server repository:

helm repo add metrics-server https://kubernetes-sigs.github.io/metrics-server/

Update the repository:

helm repo update metrics-server

Install the metrics server:

helm install metrics-server metrics-server/metrics-server \
  --namespace kube-system \
  --set args="{--kubelet-insecure-tls,--kubelet-preferred-address-types=InternalIP\,Hostname\,ExternalIP}"

Verify installation:

kubectl get pods -n kube-system -l app.kubernetes.io/instance=metrics-server

Wait for the metrics-server pod to be in the Running state.

- Install Materialize:

Use the sample-materialize.yaml file to create the materialize-environment namespace and install Materialize:

kubectl apply -f sample-materialize.yaml

Verify installation:

kubectl get all -n materialize-environment

Wait for all components to be in the Running state.
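
Rather than re-running the verification command by hand, the wait can be scripted. The sketch below is illustrative only: `pods_not_ready` and `wait_for_pods` are hypothetical helpers, the 60 × 5 second budget is arbitrary, and the parsing assumes the default column order of `kubectl get pods --no-headers` (NAME, READY, STATUS, ...). Pods from finished jobs show as Completed and are treated as done.

```shell
#!/bin/sh
# Read `kubectl get pods --no-headers` output on stdin and print the name of
# every pod whose STATUS column (field 3) is neither Running nor Completed.
pods_not_ready() {
  awk '$3 != "Running" && $3 != "Completed" { print $1 }'
}

# Poll a namespace until no pods are pending, or give up after about 5 minutes.
# Note: an empty namespace also counts as "nothing pending".
wait_for_pods() {
  namespace=$1
  tries=0
  while [ "$tries" -lt 60 ]; do
    pending=$(kubectl get pods -n "$namespace" --no-headers 2>/dev/null | pods_not_ready)
    [ -z "$pending" ] && return 0
    tries=$((tries + 1))
    sleep 5
  done
  echo "Still waiting on: $pending" >&2
  return 1
}

# Example: wait_for_pods materialize-environment
```

The same helper can be pointed at the materialize namespace after the operator, PostgreSQL, and AIStor steps. Keep in mind that a Running status does not guarantee that every container has passed its readiness probe.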

- Access the Materialize Console:

Find the console service name:

MZ_SVC_CONSOLE=$(kubectl -n materialize-environment get svc \
  -o custom-columns="NAME:.metadata.name" --no-headers | grep console)

echo $MZ_SVC_CONSOLE

Port forward the console service:

(
  while true; do
    kubectl port-forward svc/$MZ_SVC_CONSOLE 8080:8080 -n materialize-environment 2>&1 | tee /dev/stderr |
    grep -q "portforward.go" && echo "Restarting port forwarding due to an error." || break;
  done;
) &

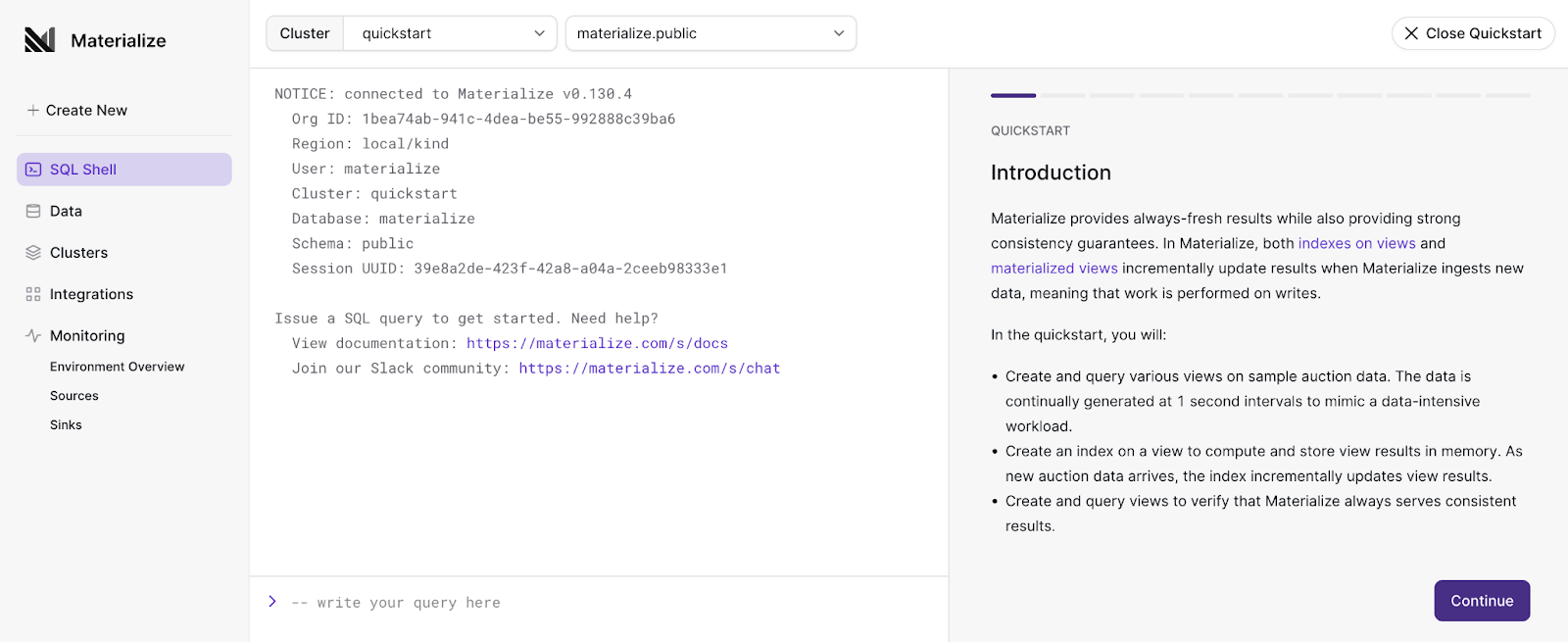

Open your browser and navigate to http://localhost:8080.
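
If the page does not load right away, the port forward may still be starting. A small retry loop can confirm that the port answers before you open the browser. This is a generic sketch: `retry` is a hypothetical helper, and it assumes the console responds to a plain HTTP GET on the forwarded port with a non-error status.

```shell
#!/bin/sh
# Run a command up to $1 times, sleeping $2 seconds between attempts.
# Succeeds as soon as the command does; fails if every attempt fails.
retry() {
  attempts=$1
  delay=$2
  shift 2
  n=1
  while [ "$n" -le "$attempts" ]; do
    "$@" && return 0
    n=$((n + 1))
    # Only sleep when another attempt is coming.
    if [ "$n" -le "$attempts" ]; then sleep "$delay"; fi
  done
  return 1
}

# Example: wait up to about a minute for the forwarded console port to answer.
# retry 30 2 curl -fsS -o /dev/null http://localhost:8080
```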

You can now run the quickstart to get started with Materialize on top of MinIO.

Ready for Production?

When you’re ready to build Materialize on-prem, contact Materialize’s solution architects. MinIO AIStor has an entire suite of enterprise-ready software, including Observability, a tool purpose-built to address the challenges of managing large-scale data infrastructure by providing comprehensive insights into system performance and health. S3 over RDMA (Remote Direct Memory Access*) allows data to bypass the CPU in favor of the GPU, significantly accelerating data transfers for compute-intensive, metrics-heavy workloads. It is something to leverage when you’re ready to use the data you have accumulated with this stack.

Whether you're optimizing for speed, security, or scalability, this combination provides the foundation for a truly production-ready deployment. Feel free to contact us at hello@min.io or on our Slack to ask for any help with implementation.

*Available to customers via private preview.