Chat With Your Objects Using the Prompt API

TL;DR: GET, PUT, PROMPT. It’s now possible to summarize, talk with, and ask questions about an object stored on MinIO using just natural language, thanks to the new PromptObject API. In this post, we explore a few use cases for this new API, along with code examples.

Motivation:

The ubiquity of object storage and the S3 API can be attributed, above all, to their unwavering simplicity. Simplicity scales. When the S3 API was introduced in 2006, it forever changed the way developers and their applications interacted with their data. Storage was reduced to two words: GET and PUT. With a simple REST API, developers could suddenly harness the exploding volume of data over the coming decades.

Eighteen years later, we face a new and interesting challenge. Users and applications need to interact with an explosion of multimodal data, increasingly through natural language alone. What if this new type of interaction were as simple as storing and retrieving the objects themselves? Enter PromptObject.

This capability is exposed as a simple function that can be called from the client SDK of your choice: for example, minio_client.prompt_object(bucket, object, prompt, **kwargs) in Python or minioClient.PromptObject(bucket, object, prompt, options) in Go.
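One way to picture the Python signature is that the prompt and any extra keyword arguments (such as supporting_objects, used later in this post) travel together. The sketch below models that idea with a hypothetical build_prompt_payload helper that merges them into one JSON document. The helper name and payload layout are assumptions for illustration only, not a description of how the MinIO SDK actually encodes its request.

```python
import json
from typing import Any


def build_prompt_payload(prompt: str, **kwargs: Any) -> str:
    """Hypothetical helper (not part of the MinIO SDK): bundle a prompt and
    any extra keyword arguments into a single JSON document."""
    payload = {"prompt": prompt}
    # Extra keyword arguments ride along next to the prompt, unchanged.
    payload.update(kwargs)
    return json.dumps(payload)


body = build_prompt_payload(
    "Summarize this document in three bullet points.",
    supporting_objects=["Some plain-text context."],
)
print(body)
```

The point of the illustration is only that **kwargs lets new options reach the server without changing the function's core shape of bucket, object, and prompt.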

For a deeper look at the API and its benefits, check out the introductory blog post. The rest of this post covers real-world examples of what becomes possible when you can prompt objects programmatically.

PromptObject in the Wild:

PromptObject was motivated largely by real-world use cases and by the kinds of applications that would be simpler to build with such an API. Here are a few:

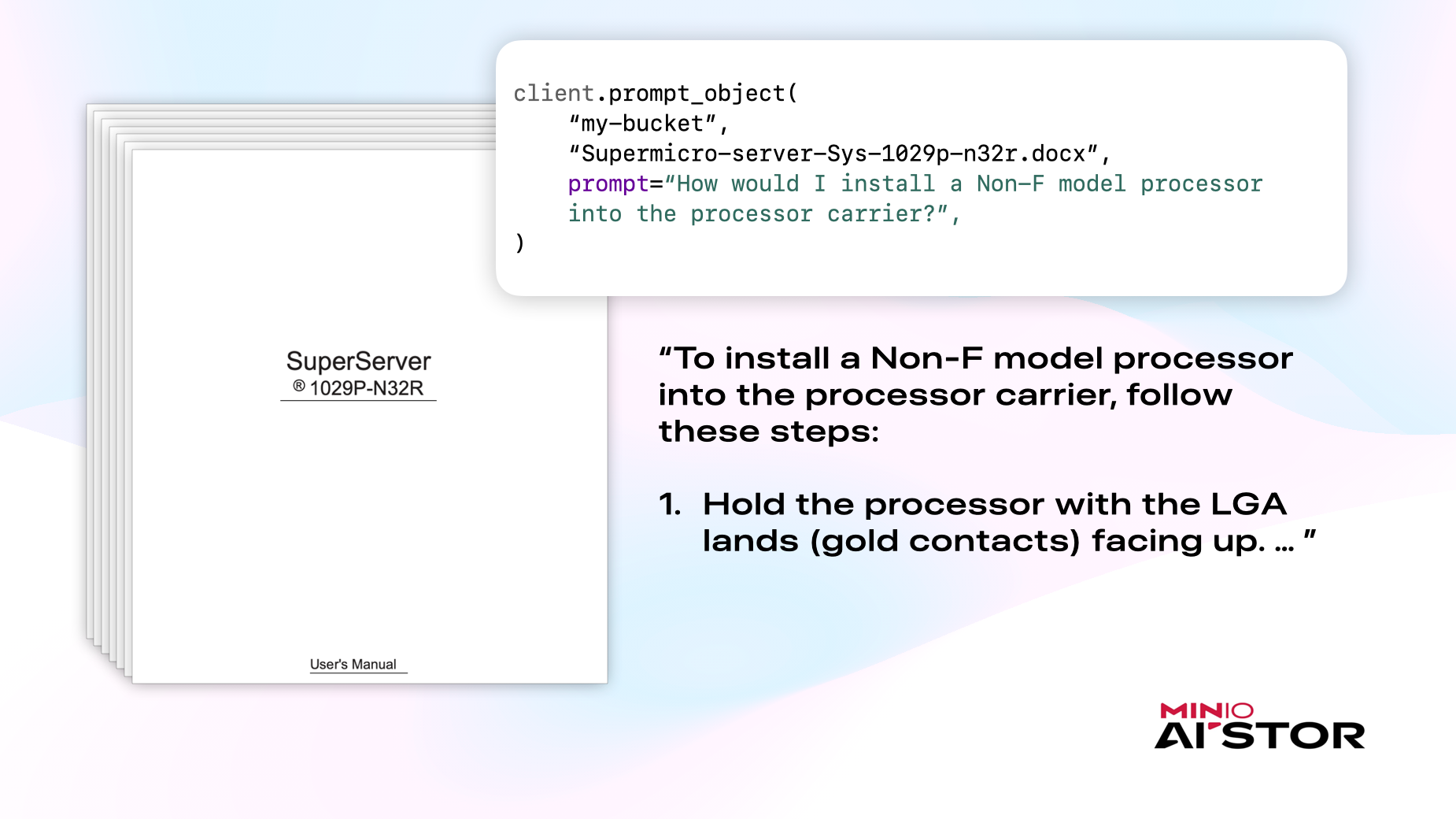

Document Question Answering

Object storage is home to unstructured data such as long PDFs, documents, and slideshows. It’s now possible to ask questions about a long, complex document using a single client SDK function. For example, a chatbot that answers questions about user-uploaded documents could simply call PromptObject with the user’s question and the document, then return the answer. No custom OCR, in-memory RAG, or LLM message construction is needed. Just initialize a MinIO client, specify an object as you would for GetObject or PutObject, and provide a prompt:

```python
from minio import Minio

client = Minio(
    "<MINIO_HOST>",  # host[:port], without a scheme
    access_key="<ACCESS_KEY>",
    secret_key="<SECRET_KEY>",
    secure=True,  # set to False for plain HTTP
)

answer = client.prompt_object(
    "my-bucket",
    "Supermicro-server-Sys-1029p-n32r.docx",
    prompt="How would I install a Non-F model processor into the processor carrier?",
)
print(answer)
```

Question Answering against Multiple, Arbitrary Objects

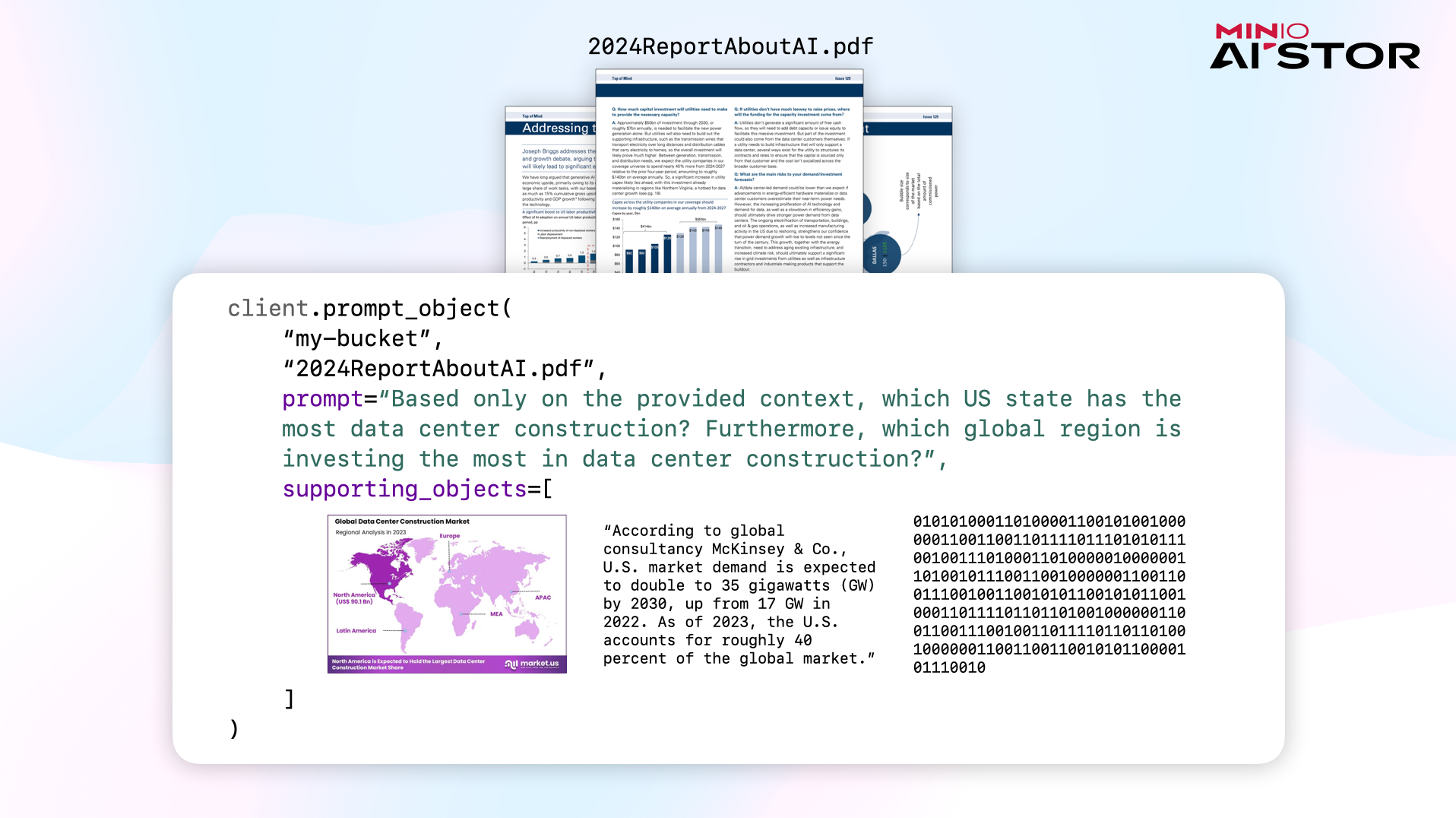

PromptObject exposes a parameter called supporting_objects, which accepts a list of arbitrary objects, URLs, text, and binary data (!) as additional context for the call. This is particularly useful for automating tasks that require synthesizing information from many different sources. Building on the previous example, imagine an AI executive research assistant that can agentically search the web, retrieve relevant objects from the object store, and pull content from the user’s notes. Once the right sources have been collected, the application must be able to answer questions across all of them. Instead of building custom tooling to support arbitrary file types and, most critically, downloading all of these files into application memory for extensive pre-processing, you can send the “pointers” (keys or URLs, along with actual object data if needed) to PromptObject for understanding and question answering. Here’s how that might look:

```python
import base64

# Question Answering with Supporting Objects

# Read a local file to send as raw bytes ("some-notes.pdf" is a placeholder path).
with open("some-notes.pdf", "rb") as some_pdf_file:
    pdf_b64 = base64.b64encode(some_pdf_file.read()).decode("utf-8")

client.prompt_object(
    bucket_name="my-bucket",
    object_name="2024ReportAboutAI.pdf",
    prompt=(
        "Based only on the provided context, which US state has the most "
        "data center construction? Furthermore, which global region is "
        "investing the most in data center construction?"
    ),
    supporting_objects=[
        "https://market.us/wp-content/uploads/2022/12/Data-Center-Construction-Market-Region.jpg",
        client.presigned_get_object("my-bucket", "LastYearsReportAboutAI.pdf"),
        "According to global consultancy McKinsey & Co., U.S. market demand is "
        "expected to double to 35 gigawatts (GW) by 2030, up from 17 GW in 2022. "
        "As of 2023, the U.S. accounts for roughly 40 percent of the global market.",
        pdf_b64,  # bytes as base64
    ],
)
```

Notice the variety of supporting object sources used: (1) a URL on the web, (2) a presigned URL of another object on MinIO, (3) plain text, and (4) a file’s bytes as a base64 string.
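An application that gathers context from several places may end up holding a mix of URLs, text snippets, and raw file bytes. A small normalization step keeps the supporting_objects list in the string forms used in the example above: URLs (including presigned URLs, which are already strings) and plain text pass through, while raw bytes are base64-encoded. The helper to_supporting_objects and the sample values are hypothetical, and this is a minimal sketch that handles only str and bytes.

```python
import base64
from typing import Iterable, List, Union

SupportingItem = Union[str, bytes]


def to_supporting_objects(items: Iterable[SupportingItem]) -> List[str]:
    """Hypothetical helper: normalize mixed context into strings.

    URLs and plain text pass through unchanged; raw bytes are
    base64-encoded so they can travel as text."""
    normalized: List[str] = []
    for item in items:
        if isinstance(item, bytes):
            # Binary data (e.g. a PDF read from disk) is sent as base64 text.
            normalized.append(base64.b64encode(item).decode("utf-8"))
        elif isinstance(item, str):
            normalized.append(item)
        else:
            raise TypeError(f"unsupported supporting object: {type(item).__name__}")
    return normalized


# Placeholder inputs: a URL, a text snippet, and some bytes.
context = to_supporting_objects([
    "https://example.com/chart.jpg",
    "U.S. demand is expected to double by 2030.",
    b"%PDF-1.7 placeholder bytes",
])
```

The resulting list can then be passed as supporting_objects=context in a prompt_object call.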

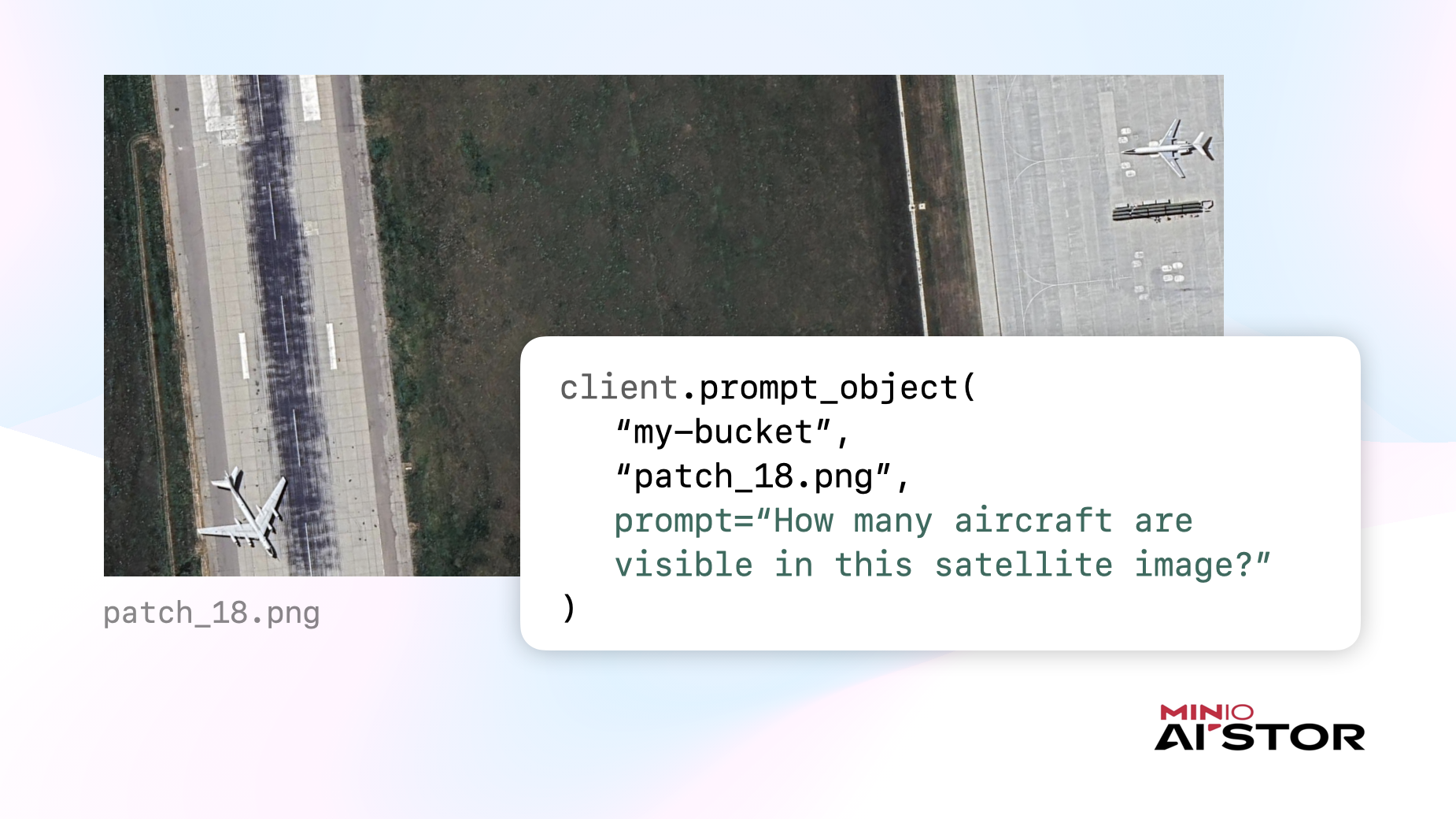

Satellite Imagery Analysis

Images are often stored for analytical tasks such as identification, classification, or other kinds of parsing. For example, imagine an application that automates aircraft detection across large volumes of satellite imagery. Instead of burdening your application with downloading these objects and constructing inference requests, you can issue a natural language prompt such as “How many aircraft are visible in this satellite image?” against the “pointer” (bucket and object name) of the desired image and simply consume the answer:

```python
# Satellite Image Analysis
client.prompt_object(
    bucket_name="my-bucket",
    object_name="patch_18.png",
    prompt="How many aircraft are visible in this satellite image?",
)
```

Now let’s try a slightly more advanced example that makes use of the supporting_objects parameter we learned about earlier:

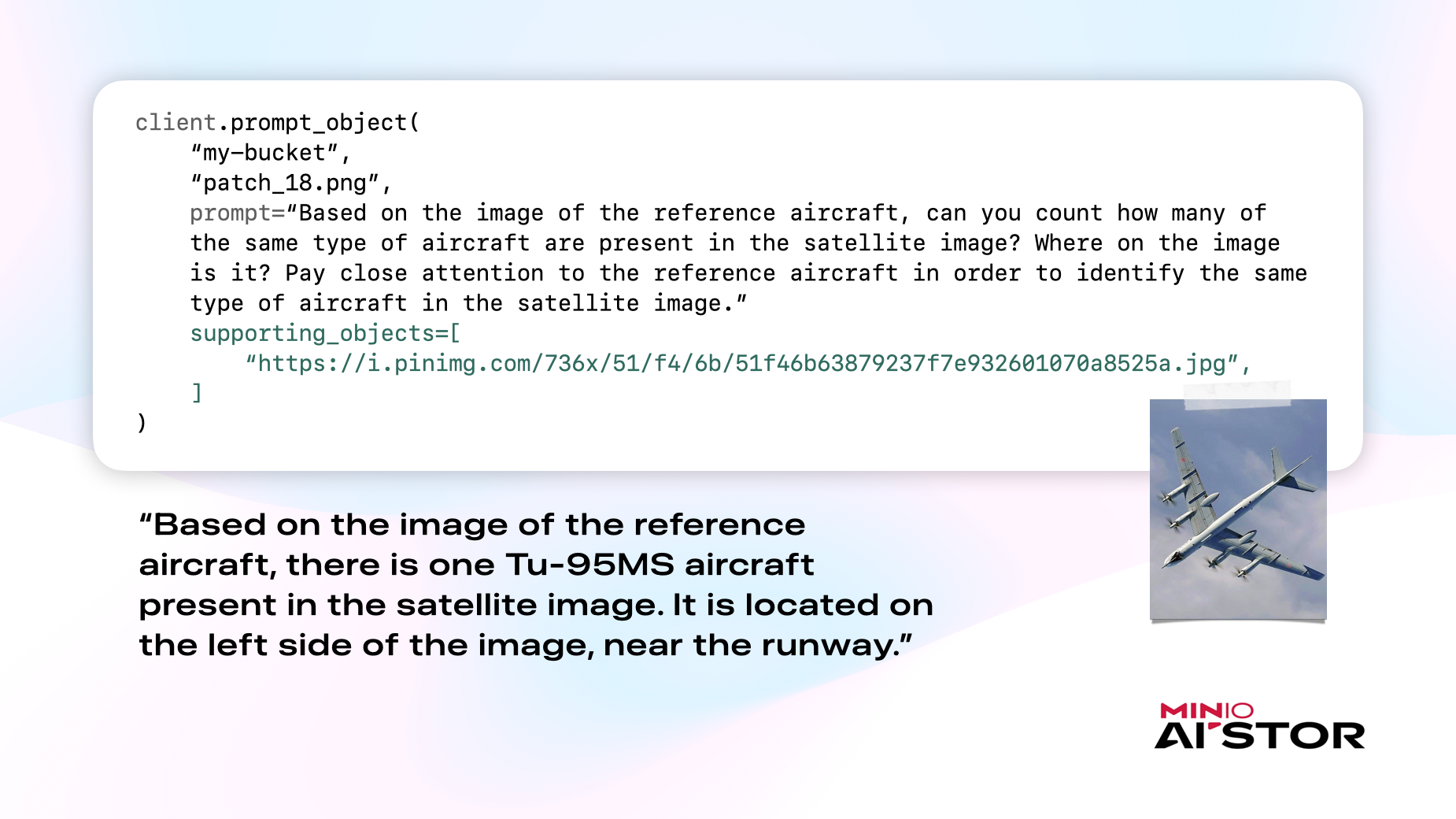

Earlier, we saw how to add context to a PromptObject call using supporting_objects. Suppose users of a satellite imagery analysis application want to provide a reference image of the specific aircraft they are interested in, rather than detecting any and all aircraft. With supporting_objects and PromptObject, this takes only a few lines of code. We provide an image of the aircraft of interest and adjust the prompt accordingly, so the model looks for that specific aircraft when analyzing the same satellite image from before:

```python
# Advanced Satellite Image Analysis
client.prompt_object(
    bucket_name="my-bucket",
    object_name="patch_18.png",
    prompt=(
        "Based on the image of the reference aircraft, can you count how many "
        "of the same type of aircraft are present in the satellite image? "
        "Where in the image are they? Pay close attention to the reference "
        "aircraft in order to identify the same type of aircraft in the satellite image."
    ),
    supporting_objects=[
        # Reference image of the aircraft type of interest
        "https://i.pinimg.com/736x/51/f4/6b/51f46b63879237f7e932601070a8525a.jpg",
    ],
)
```

Structured Answers from Unstructured Data

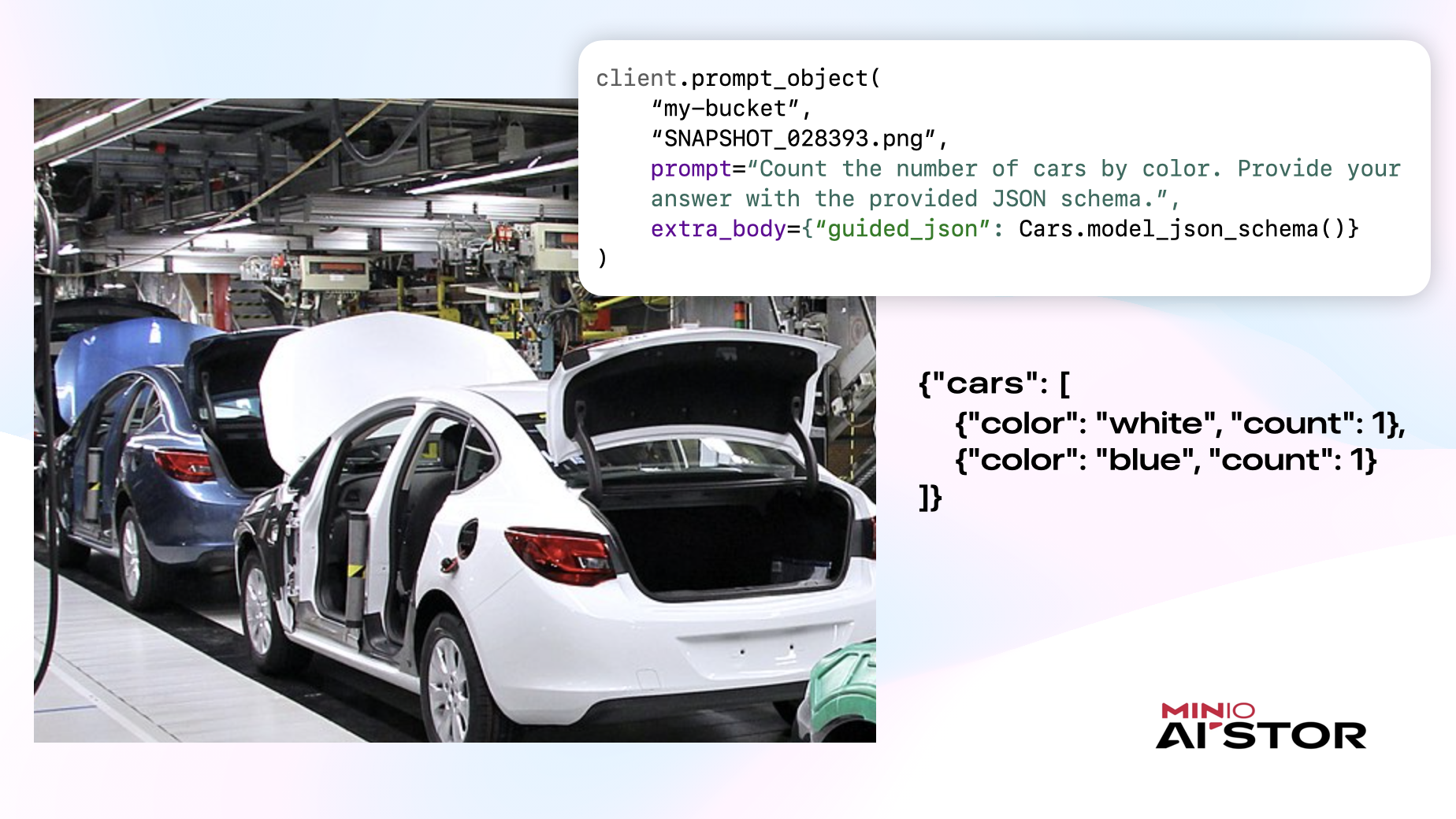

My personal favorite capability is getting structured answers from unstructured data such as images. If your application depends on PromptObject returning an answer that follows a particular schema, you can enforce that too. For example, an application might identify the distinct cars in a factory floor image and then pass the result on to another service. In this situation, an answer that follows a known schema is preferable to one written in natural language. The best way to do this is to pass a JSON schema to the PromptObject call. In Python, to make things even easier, we can use Pydantic to define a model class for the result and generate the JSON schema from it, as shown here:

```python
from typing import List

from pydantic import BaseModel, Field

# Answer Structured Query on Factory Floor Image Capture


class Car(BaseModel):
    """The structured description of a car."""

    color: str = Field(..., description="Color of the car.")
    count: int = Field(..., description="Number of cars of this color.")


class Cars(BaseModel):
    cars: List[Car] = Field(..., description="List of distinct cars in the image and their counts.")


client.prompt_object(
    "my-bucket",
    "SNAPSHOT_028393.png",
    prompt="Count the number of cars by color. Provide your answer using the provided JSON schema.",
    extra_body={"guided_json": Cars.model_json_schema()},
)
```

In this example, we pass the desired JSON schema through extra_body under the guided_json key. extra_body is a kwarg that is forwarded to the language model inference server behind PromptObject. The exact key depends on the inference server you use (guided_json, for instance, is the key understood by vLLM’s OpenAI-compatible server), and because extra_body is forwarded as-is, PromptObject can accommodate whichever key your server expects.
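Before handing the result to another service, it is worth validating the answer against the same Pydantic model that produced the schema. This minimal sketch assumes the response has already been obtained as a JSON string; sample_answer is made-up data standing in for a real model response, and parse_cars is a hypothetical helper. It relies on Pydantic v2’s model_validate_json, which raises ValidationError when the JSON does not match the schema.

```python
from typing import List

from pydantic import BaseModel, Field, ValidationError


class Car(BaseModel):
    """The structured description of a car."""

    color: str = Field(..., description="Color of the car.")
    count: int = Field(..., description="Number of cars of this color.")


class Cars(BaseModel):
    cars: List[Car] = Field(..., description="List of distinct cars in the image and their counts.")


def parse_cars(answer: str) -> Cars:
    """Hypothetical helper: validate a JSON answer against the Cars schema.

    Raises pydantic.ValidationError if the answer does not match."""
    return Cars.model_validate_json(answer)


# Made-up answer text; real output depends on the model and the image.
sample_answer = '{"cars": [{"color": "red", "count": 2}, {"color": "blue", "count": 1}]}'
result = parse_cars(sample_answer)
total = sum(car.count for car in result.cars)
print(total)  # 3
```

Validating at this boundary means a malformed or incomplete answer fails loudly in your application instead of propagating to downstream services.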

Closing Thoughts

The Prompt API is fundamentally about making it easier than ever for your applications, agents, and users to interact with data. What you can build with PromptObject is limited only by your imagination. In this post, we explored just a handful of use cases that will hopefully leave you thinking about ways to leverage this API in your own awesome AI applications and projects. We look forward to seeing what you build!

If you want to go deeper or see a demo, we can arrange that as well. As always, if you have any questions, join our Slack channel or drop us a note at hello@min.io.