Build a CI/CD Pipeline for your AI/ML infrastructure

So far, in our CI/CD series, we’ve talked about some of the high-level concepts and terminology. In this installment, we’ll go into the practical phase of the build pipeline to show how it looks in implementation.

We’ll go deeper into baking and frying to show how it looks in the different stages of the MinIO distributed cluster build process. Along the way, we’ll use Infrastructure as Code to ensure everything is version-controlled and automated using Packer and Jenkins.

In order to make it easy for developers to use our distributed MinIO setup without launching resources in the cloud, we’ll test it using Vagrant. Most of our laptops are powerful enough to try something out, yet we’re so accustomed to the cloud that we tend to look there first. But we’ll change that perception and show you just how easy it is to develop in a cloud-like environment locally, complete with MinIO object storage, just on your laptop.

MinIO Image

This is the baking phase. In order to automate as much of this as possible, we’ll use build tools to create an L2 image of MinIO that could be used to launch any type of MinIO configuration. This will not only allow us to follow Infrastructure as Code best practices but also provide version control for any future changes.

MinIO build template

We’ll use Packer to build machine images in a consistent manner. Packer allows you to source from multiple inputs such as ISO, Docker Image, and AMI, among others, and post-process the built images by uploading them to Amazon Elastic Container Registry (ECR), Google Container Registry (GCR), Docker Hub, Vagrant Cloud, and several other outputs, or even saving them locally to disk.

Installing is pretty straightforward; you can either use brew or, if you are looking for other distributions, head over to the Packer downloads page.
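Before installing anything, it can help to see which tools are already on your machine. The helper below is a minimal sketch: `missing_tools` is a hypothetical name of our own, and the Homebrew commands in the comment assume the HashiCorp tap is the install route you want.

```shell
# Print the name of every tool from the argument list that is not on PATH.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

# Check the three tools this walkthrough relies on.
# A missing Packer could then be installed with Homebrew, for example:
#   brew tap hashicorp/tap && brew install hashicorp/tap/packer
missing_tools packer vagrant VBoxManage
```

An empty result means all three tools were found.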

When Packer was first introduced, it used only JSON as the template language, but as you may know, JSON is not the most human-writable language. It’s easy for machines to use JSON, but if you have to write a huge JSON blob, the process is error-prone. Even omitting a single comma can cause an error, not to mention that you cannot add comments in JSON.

Due to the aforementioned concerns, Packer transitioned towards HashiCorp Configuration Language, or HCL (which is also used by Terraform), as the template language of choice. We wrote an HCL Packer template that builds a MinIO image. Let’s go through it in detail.

For all our code samples, we’ll paste the crux of the code here, and we have the entire end-to-end working example available for download on GitHub. The primary template will go in main.pkr.hcl and the variables (anything that starts with var) will go in variables.pkr.hcl.

Git clone the repo to your local setup, where you’ll run the Packer build

We’ll use VirtualBox to build the MinIO image. Install VirtualBox

then define it as a source in main.pkr.hcl as the first line

Note: Throughout the post, we’ll link to the exact lines in the GitHub repo where you can see the code in its entirety. You can click on these links to go to the exact line in the code.

Let’s set some base parameters for the MinIO image that will be built. We’ll define the name, CPU, disk, memory, and a couple of other parameters related to the image configuration.

A source needs to be given in order to build our custom image. The source can be local relative to the path where you run the packer command or a URL online.

The sources need to be given in the order that Packer will try to use them. In the below example, we are giving a local source and an online source; if the local source is available, it will skip the online source. In order to speed up the build, I’ve already pre-downloaded the ISO and added it to the `${var.iso_path}` directory.
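The "local first, online second" ordering can be illustrated outside of Packer with a few lines of shell. This is only a sketch of the idea, not Packer's implementation: `first_available_iso` is a hypothetical helper, the paths and URL are placeholders, and it simply treats any remote URL as usable, whereas Packer would move on to the next entry if a download failed.

```shell
# Return the first usable ISO source from an ordered list:
# a local path counts only if the file exists, a URL is accepted as-is.
first_available_iso() {
  for src in "$@"; do
    case "$src" in
      http://*|https://*)
        printf '%s\n' "$src"
        return 0
        ;;
      *)
        if [ -f "$src" ]; then
          printf '%s\n' "$src"
          return 0
        fi
        ;;
    esac
  done
  return 1
}

# Placeholder sources: the local file is tried before the remote URL.
first_available_iso "./iso/linux.iso" "https://example.com/linux.iso"
```

Listing the pre-downloaded file first is what lets a repeat build skip the download entirely.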

The image we create needs a few defaults like hostname, SSH user, and password set during the provisioning process. These commands are executed as part of the preseed.cfg kickstart process, which is served via an HTTP server.

The image will be customized using a few scripts we wrote located in the scripts/ directory. We’ll use the shell provisioner to run these scripts.

Next, let's flex some concepts we learned in the previous blog post, specifically baking and frying the image. In this tutorial, we’ll bake the MinIO binary and the service dependencies, such as the username and group required for MinIO. We are baking this as opposed to frying because these will remain the same no matter how we configure and launch our image.

We’ll set the MinIO version to install along with the user and group the service will run under.

Most of the install steps are tucked away in dedicated bash scripts that are separate from the template, but they will be called from the template. This keeps the overall template clean and simple, making it easier to manage. Below is the list of scripts we are going to use

I’m not going to go into much more detail about what each of these scripts does because most of them are basic boilerplate code that is required to set up the base Linux image – think of this as the L1 phase of our install.

The file I do want to talk about is minio.sh, where we are baking the MinIO binary.

The MinIO binary is downloaded from upstream. The `MINIO_VERSION` variable was set a couple of steps ago as an environment variable that is available during install time.

Once the binary is installed, it needs a user and group, which we create separately, for the MinIO service to run as
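To make the baking steps concrete, here is a rough sketch of what such a script could do. It only prints the commands rather than running them, since they need root inside the image. Several things here are assumptions rather than the contents of the actual minio.sh: the helper names, the placeholder version string, the download URL layout (check it against the MinIO download page before relying on it), and the `minio-user` defaults.

```shell
# Assumed inputs; during the Packer build these would arrive as environment variables.
MINIO_VERSION="${MINIO_VERSION:-20230101000000.0.0}"      # placeholder, not a real release
MINIO_SERVICE_USER="${MINIO_SERVICE_USER:-minio-user}"
MINIO_SERVICE_GROUP="${MINIO_SERVICE_GROUP:-minio-user}"

# Build the package URL for a given version (URL layout is an assumption).
minio_download_url() {
  printf 'https://dl.min.io/server/minio/release/linux-amd64/archive/minio_%s_amd64.deb\n' "$1"
}

# Print the bake steps in order: fetch, install, then create the service group and user.
print_minio_bake_steps() {
  printf 'wget -O minio.deb %s\n' "$(minio_download_url "$MINIO_VERSION")"
  printf 'dpkg -i minio.deb\n'
  printf 'groupadd -r %s\n' "$MINIO_SERVICE_GROUP"
  printf 'useradd -M -r -g %s %s\n' "$MINIO_SERVICE_GROUP" "$MINIO_SERVICE_USER"
}

print_minio_bake_steps
```

Nothing in these steps depends on how many nodes or drives the cluster will have, which is why they belong in the baked image.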

Generally, for every new version of MinIO, we should build a new image, but in a pinch, you can upgrade the binary without upgrading the entire image. I’ll show you how to do that in the frying stage.

Once the image is built, we must tell Packer what to do with it. This is where post-processors come into play. There are several of them that you can define, but we’ll go over three of them here:

- The `vagrant` post-processor builds an image that can be used by Vagrant. It creates the Vagrant VirtualBox box, which can be launched using a Vagrantfile (more on the Vagrantfile later). The `.box` file is stored in the location defined in `output`, from which it can be imported locally.

- The `shell-local` post-processor allows us to run shell commands locally, on the machine where the `packer` command is run, to do post-cleanup and other operations. In this case, we are removing the output directory so it doesn’t conflict with the next Packer build run.

- Having the image locally is great, but what if you want to share your awesome MinIO image with the rest of your team or even developers outside your organization? You can manually share the box image generated before, but that could be cumbersome. Instead, we’ll upload the image to the Vagrant Cloud registry, where it can be pulled by anyone who wants to use your MinIO image.

Another advantage of uploading the image to the registry is that you can version your images so even if you upgrade it in the future, folks can pin it to a specific version and upgrade at their leisure.

In order to upload your MinIO image to Vagrant Cloud, we will need an access token and the username of the account used to create the Vagrant Cloud account. We’ll show you how to get this info in the next steps.

Registry for MinIO image

Go to https://app.vagrantup.com to create a Vagrant Cloud account. Make sure to follow the instructions below.

In order for us to use Vagrant Cloud, we need to create a repo beforehand with the same name as the vm_name in our Packer configuration.

Follow the steps below to create the repo where our image will be uploaded.

One more thing we need to create in Vagrant Cloud is the token we need to authenticate prior to uploading the built image. Follow the instructions below.

Build the MinIO image

At this point, you should have a Packer template to build the custom MinIO image and a registry to upload the image. These are all the prerequisites that are required before we actually start the build.

Go into the directory where the main.pkr.hcl template file is located, and you will see a bunch of other files we’ve discussed in the previous steps.

The build needs valid values for the variables to be passed in during the build process, or it will fail when it tries to use the default values. Specifically, in this case, we need to pass the Vagrant Cloud token and username. You can edit these values directly in variables.pkr.hcl for now, but do not commit the file with these values to the repo for security reasons. Later, during the automation phase, we’ll show you another way to set these variables that doesn’t involve editing any files and is a safer alternative.

The values for these variables were previously created as part of the step when we created the Vagrant Cloud account. You can add those values to the default key.

As you build your images, you also need to bump up the version of the image, so each time you build your image, there will be a unique version. We use a versioning scheme called SemVer, which lets us set the MAJOR.MINOR.PATCH numbers based on the type of release we are making. To begin with, we always start with 0.1.0, which needs to be incremented for every release.
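If you would rather not bump the version by hand, a small helper can do the arithmetic. This is a minimal sketch; `bump_version` is a hypothetical function of our own, and it assumes a plain MAJOR.MINOR.PATCH string with no pre-release suffix.

```shell
# Increment one part of a MAJOR.MINOR.PATCH version and reset the lower parts.
bump_version() {
  version="$1"
  part="$2"
  major="${version%%.*}"     # text before the first dot
  rest="${version#*.}"       # text after the first dot
  minor="${rest%%.*}"
  patch="${rest#*.}"
  case "$part" in
    major) major=$((major + 1)); minor=0; patch=0 ;;
    minor) minor=$((minor + 1)); patch=0 ;;
    patch) patch=$((patch + 1)) ;;
    *) echo "usage: bump_version MAJOR.MINOR.PATCH major|minor|patch" >&2; return 1 ;;
  esac
  printf '%s.%s.%s\n' "$major" "$minor" "$patch"
}

bump_version 0.1.0 patch   # prints 0.1.1
```

A bug-fix rebuild would use `patch`, while a change that alters how the image is consumed would warrant `minor` or `major`.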

After setting valid values for the variables and other settings, let's ensure our templates are inspected and validated to work properly.

Once everything is confirmed to be correct, run the build command in Packer
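The inspect, validate, and build steps can be chained so the build only starts when the earlier checks pass. The wrapper below is a sketch under our own naming (`packer_pipeline` and `DRY_RUN` are not part of Packer); with `DRY_RUN=1` it only prints the commands, which is a handy way to preview what will run.

```shell
# Run the Packer steps in order and stop at the first failure.
# With DRY_RUN=1 the commands are printed instead of executed.
packer_pipeline() {
  template_dir="${1:-.}"
  for step in inspect validate build; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      printf 'packer %s %s\n' "$step" "$template_dir"
    else
      packer "$step" "$template_dir" || return 1
    fi
  done
}

DRY_RUN=1            # preview only; set to 0 to really run Packer
packer_pipeline .
```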

This will start the build process, which can take anywhere from 15-20 minutes, depending on your machine and internet speed, to build and upload the entire image.

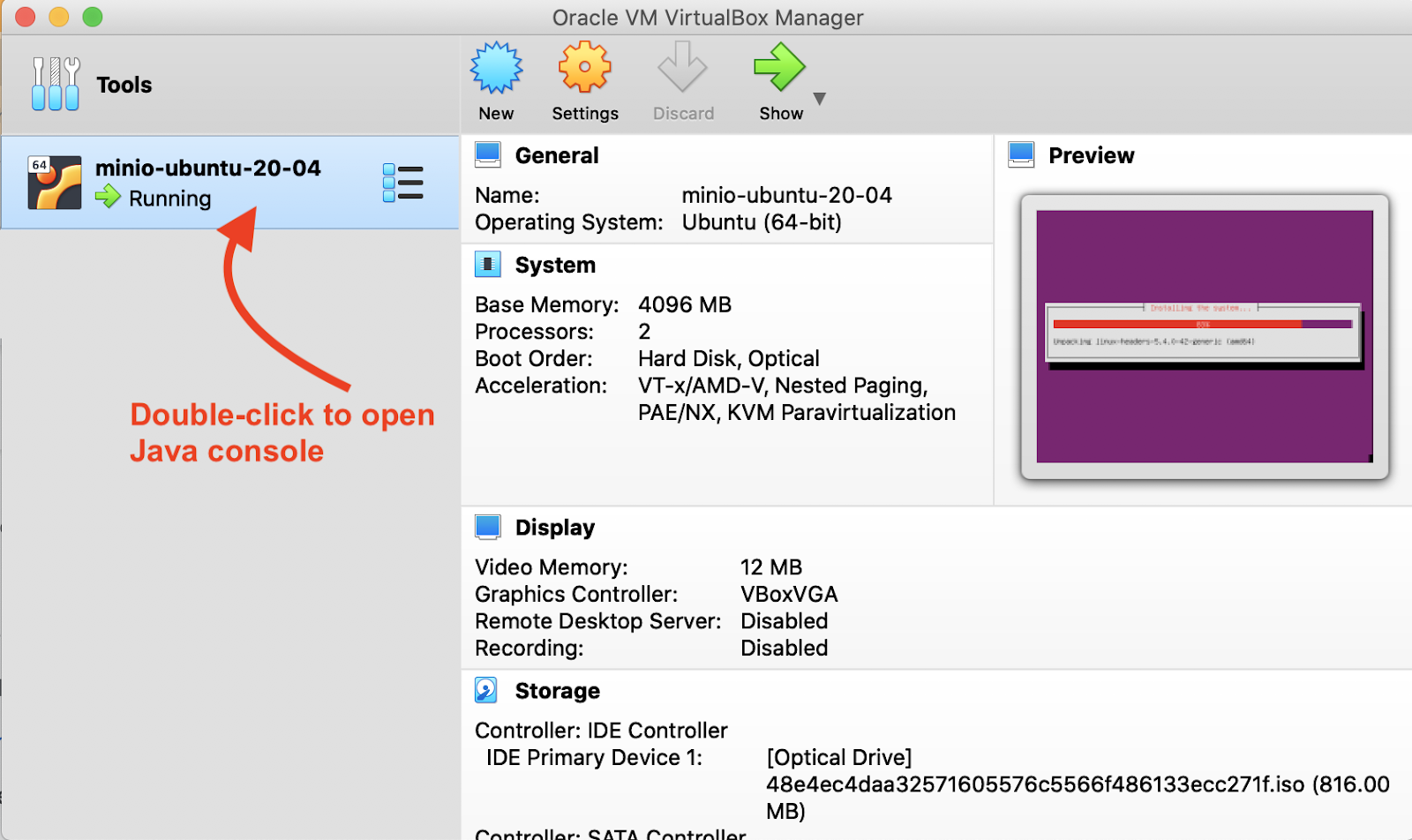

Open the VirtualBox Manager to see the VM launched by Packer. It will have the name that was assigned to the variable vm_name. Double-click on the VM to open the Java console with a live version of the preview screenshot where you can see the OS install process.

Below is a snippet of the beginning and end of the build output, with some MinIO bits sprinkled in between.

Head on over to the https://app.vagrantup.com dashboard to see the newly uploaded image

MinIO distributed cluster

This is the frying phase. We have now published an image that can be consumed by our team and anyone who wants to run MinIO. But how do we actually use this published image? We’ll use Vagrant to launch VMs locally, similar to how we use Terraform for cloud instances.

MinIO Vagrantfile

The good news is we’ve written the Vagrantfile for you, and we’ll go through it step-by-step so you can understand how to deploy MinIO in distributed mode. You can use these same steps in a production environment on bare metal, Kubernetes, Docker and others. The only difference is that you would probably want to use something like Terraform or CDK to launch these because they manage more than just VMs or containers: they also handle DNS, CDN, and managed services, among other cloud resources.

Go into the vagrant directory inside the same ci-cd-build directory where packer is located. If you are still in the packer directory, you can run the following command

Install Vagrant using the following command, along with the vagrant-hosts plugin, which will allow us to communicate between VMs using their hostnames.

The way DNS works is pretty rudimentary, as the plugin basically edits the /etc/hosts file on each VM with the details of the other VMs launched in the Vagrantfile. This can be done manually, but why would we want to do that when we can automate it? Please note all VMs must be launched using the same Vagrantfile because `vagrant-hosts` does not keep track of hosts between two discrete Vagrantfiles.
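To picture what ends up in each VM's /etc/hosts, here is a small sketch that generates the kind of entries the plugin maintains. The `hosts_entries` helper and the 192.168.56.x addresses are assumptions for illustration; the real addresses come from whatever the Vagrantfile assigns.

```shell
# Print one /etc/hosts line per node in the form "<ip> <hostname>".
hosts_entries() {
  prefix="$1"
  subnet="$2"
  first_octet="$3"
  count="$4"
  i=1
  while [ "$i" -le "$count" ]; do
    printf '%s.%s %s-%s\n' "$subnet" "$((first_octet + i))" "$prefix" "$i"
    i=$((i + 1))
  done
}

# Four nodes named minio-1 .. minio-4 on a placeholder private network.
hosts_entries minio 192.168.56 10 4
```

Every VM receives the same list, which is why all of them must be defined in one Vagrantfile.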

You have to be a little familiar with Ruby in order to understand the Vagrantfile, but if you’re not, that’s OK – it's very similar to Python, so we’ll walk you through every part of it. Below you can see how we define variables for some of the basic parameters needed to bring the MinIO cluster up.

Most of these can remain the default setting, but I would recommend paying attention to BOX_IMAGE. The box image username is currently set to minioaj and should be updated with the username you chose when you built the Packer image.

You don’t need to edit anything else besides the above variables. They are pretty self-explanatory and follow the MinIO distributed setup guide to the letter. However, let’s go through the entire file anyway, so we have a better understanding of the concepts we’ve learned so far.

The following loop will create as many VMs as we specify in MINIO_SERVER_COUNT. In this case, it will loop 4 times while defining the VM settings, such as hostname and IP for 4 VMs, along with the image they will be using.

Next, we have to define the number of drives per server using MINIO_DRIVE_COUNT. You may have noticed that below we are not only looping numerically but also tracking a drive_letter that starts at b and increments (c, d, e...) with every loop. The reason for this is that drives in Linux are named with letters like sda, sdb, sdc, and so on. We are starting from sdb because sda is taken by the / root disk where the operating system is installed.

In this case, each of the 4 servers will have 4 disks, for a total of 16 disks across all of them. In the future, if you change the settings, the easiest way to know the total number of disks is to multiply MINIO_SERVER_COUNT by MINIO_DRIVE_COUNT.
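The nested loop and the drive-letter bookkeeping are written in Ruby in the Vagrantfile, but the same logic can be sketched in shell to check the numbers. The function names here are our own, and the `minio-N` hostnames follow the naming used for the nodes in this post.

```shell
MINIO_SERVER_COUNT=4
MINIO_DRIVE_COUNT=4

# Map a 1-based drive index to its device: 1 -> /dev/sdb, 2 -> /dev/sdc, ...
# (sda is skipped because it holds the operating system.)
drive_device() {
  letters="bcdefghijklmnopqrstuvwxyz"
  letter="$(printf '%s' "$letters" | cut -c "$1")"
  printf '/dev/sd%s\n' "$letter"
}

# Print every "<hostname> <device>" pair in the cluster.
list_cluster_drives() {
  s=1
  while [ "$s" -le "$MINIO_SERVER_COUNT" ]; do
    d=1
    while [ "$d" -le "$MINIO_DRIVE_COUNT" ]; do
      printf 'minio-%s %s\n' "$s" "$(drive_device "$d")"
      d=$((d + 1))
    done
    s=$((s + 1))
  done
}

list_cluster_drives
echo "total drives: $((MINIO_SERVER_COUNT * MINIO_DRIVE_COUNT))"
```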

The previous step only creates a virtual drive, as if we added a physical drive to a bare metal machine. You still need to configure the disk partitions and mount them so Linux can use them.

Use parted to create an ext4 /dev/sd*1 partition for each of the 4 disks (b, c, d, e).

Format the created partitions and add them to /etc/fstab so each time the VM is rebooted the 4 disks get mounted automatically.
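The partition, format, and fstab steps follow the same pattern for every disk, so they are easy to generate. The sketch below prints the commands instead of running them, because they are destructive and need root. The mount points under /mnt/minio and the fstab options are assumptions, not necessarily what the Vagrantfile uses.

```shell
# Print the commands that would prepare one disk:
# label it, create a single partition, format it, and register the mount.
print_disk_setup() {
  device="$1"
  mount_point="$2"
  printf 'parted -s %s mklabel gpt\n' "$device"
  printf 'parted -s -a optimal %s mkpart primary ext4 0%% 100%%\n' "$device"
  printf 'mkfs.ext4 %s1\n' "$device"
  printf 'mkdir -p %s\n' "$mount_point"
  printf 'echo "%s1 %s ext4 defaults 0 2" >> /etc/fstab\n' "$device" "$mount_point"
}

# Walk the four data disks (b, c, d, e) and number their mount points.
n=1
for letter in b c d e; do
  print_disk_setup "/dev/sd$letter" "/mnt/minio/disk$n"
  n=$((n + 1))
done
```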

This is when we mount the disks we created and set the proper permissions needed for the MinIO service to start. We add the volume and credentials settings to /etc/default/minio after all the disk components have been configured. We’ll enable MinIO but not start it yet. After the VMs are up, we’ll start all of them at the same time to avoid an error condition that occurs when MinIO times out if it cannot find the other nodes within a certain period of time.
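For reference, the environment file for a distributed setup could be rendered along these lines. This is a sketch: `render_minio_env` is a hypothetical helper, the mount path and console port are assumptions, and the credentials are placeholders you would replace at provisioning time. The `{1...4}` notation (three dots) is MinIO's own expansion syntax, not shell brace expansion.

```shell
# Print the contents of /etc/default/minio for a given cluster shape.
render_minio_env() {
  servers="$1"
  drives="$2"
  user="$3"
  password="$4"
  printf 'MINIO_VOLUMES="http://minio-{1...%s}:9000/mnt/minio/disk{1...%s}"\n' "$servers" "$drives"
  printf 'MINIO_OPTS="--console-address :9001"\n'
  printf 'MINIO_ROOT_USER="%s"\n' "$user"
  printf 'MINIO_ROOT_PASSWORD="%s"\n' "$password"
}

# 4 servers with 4 drives each; inside a VM the output would be
# redirected to /etc/default/minio, followed by "systemctl enable minio"
# (enable only, so the service does not start before its peers exist).
render_minio_env 4 4 "change-me-user" "change-me-password"
```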

We set a couple more settings related to the VM, but most importantly, the last line in this snippet makes use of the vagrant-hosts plugin to sync the /etc/hosts file across all VMs.

By now, you must be wondering why we did not just bake all these shell commands in the Packer build process in the minio.sh script. Why did we instead fry these settings as part of the Vagrantfile provisioning process?

That is an excellent question. The reason is that we will want to modify the disk settings based on each use case – sometimes, you’d want more nodes in the MinIO cluster, and other times you might not want 4 disks per node. You don’t want to create a unique image for each drive configuration as you could end up with thousands of similar images, with the only difference being the drive configuration.

You also don’t want to use the default root username and password for MinIO, and we’ve built a process that allows you to modify that at provisioning time.

What if you wanted to upgrade the MinIO binary version but don’t want to build a new Packer image each time? This is as simple as adding a shell block with commands to download and install the MinIO binary, just as we did for Packer in the minio.sh script. Below is the sample pseudo code to get you started
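As a starting point, the body of such a shell block could look roughly like the commands printed here. Treat it as a sketch: the helper name, the placeholder version, and the download URL layout are assumptions to verify against the MinIO download page. The service is deliberately not restarted, since at provisioning time it has only been enabled and will pick up the new binary when it is started later.

```shell
# Print the commands a provisioning step could run to install another MinIO release.
print_minio_upgrade_steps() {
  new_version="$1"
  url="https://dl.min.io/server/minio/release/linux-amd64/archive/minio_${new_version}_amd64.deb"
  printf 'wget -O /tmp/minio.deb %s\n' "$url"
  printf 'dpkg -i /tmp/minio.deb\n'
}

print_minio_upgrade_steps "20230101000000.0.0"   # placeholder, not a real release
```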

This is where you, as a DevOps engineer, need to use your best judgment to find the right balance between baking and frying. You can either bake all the configurations during the Packer build process or have the flexibility to fry the configuration during the provisioning process. We wanted to leave some food for thought as you develop your own setup.

Launch MinIO cluster

By now, we have a very good understanding of the internals of how the cluster will be deployed. Now all that is left is deploying the cluster on Virtualbox using Vagrant.

Set the following environment variable as it’s needed to get our disks automagically detected by the VMs
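In a shell session this comes down to a single export. The small check that follows it is our own addition (`disks_feature_enabled` is a hypothetical helper); it assumes the variable holds a comma-separated list of feature names, which is how Vagrant's experimental flag accepts multiple features.

```shell
# Enable Vagrant's experimental disks feature for this shell session.
export VAGRANT_EXPERIMENTAL="disks"

# Succeed only if "disks" appears in the comma-separated feature list.
disks_feature_enabled() {
  case ",${VAGRANT_EXPERIMENTAL:-}," in
    *,disks,*) return 0 ;;
    *) return 1 ;;
  esac
}

disks_feature_enabled && echo "disks feature flag is set"
```

The export only lasts for the current shell, so it needs to be set again in any new terminal before running Vagrant.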

Be sure you are in the same location as Vagrantfile and run the following command

Your output should look like the above, with the states set to not created. It makes sense because we haven’t provisioned the nodes yet.

Finally the pièce de résistance! Provision the 4 nodes in our MinIO cluster

Be sure you see the output `==> vagrant: Features: disks`, as this verifies that the environment variable `VAGRANT_EXPERIMENTAL=disks` has been set properly. As each VM comes up, its output will be prefixed with the hostname. If there are any issues during the provisioning process, look at this hostname prefix to see which VM the message was from.

Once the command is done executing, run vagrant status again to verify all the nodes are running

All the nodes should be in the running state. This means the nodes are running, and if you don’t see any errors in the output earlier from the provisioning process, then all our shell commands in Vagrantfile ran successfully.

Last but not least, let’s bring up the actual MinIO service. If you recall, we enabled the service, but we didn’t start it because we wanted all nodes to start first. Now we will bring all the services up at almost the same time using the vagrant ssh command, which will SSH into all 4 nodes using this bash loop.
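A loop of this kind could look like the sketch below. It prints one `vagrant ssh` command per node so you can review them first; the function name is our own, and the `minio-N` machine names are assumed to match the ones defined in the Vagrantfile.

```shell
MINIO_SERVER_COUNT=4

# Print one "start the service" command per node.
start_minio_commands() {
  i=1
  while [ "$i" -le "$MINIO_SERVER_COUNT" ]; do
    printf 'vagrant ssh minio-%s -c "sudo systemctl start minio"\n' "$i"
    i=$((i + 1))
  done
}

start_minio_commands            # preview the commands
# start_minio_commands | sh     # run them from the Vagrantfile directory
```

Running the commands back to back keeps the nodes' start times close enough that each one can find its peers before timing out.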

Confirm using the journalctl command to see the logs and verify that the MinIO service on all 4 nodes started properly

You should see an output similar to the example below from minio-4. The 16 Online means all 16 of our drives across the 4 nodes are online.

In the future, if you ever want to bring your own custom MinIO cluster up you now have all the necessary tools to do so.

Automate MinIO build

Technically you could stop here and go on your merry way. But we know you always want more MinIO, so we’ll take this one step further to show you how to automate this process, so you don’t have to manually run the Packer build commands every time.

Install Jenkins

Install and start Jenkins using the following commands

Once Jenkins is started, it will ask you to set a couple of credentials which should be pretty straightforward. After you have the credentials set up, you need to ensure the git plugin is installed using the following steps.

Configure MinIO build

Let’s create a build configuration to automate our Packer build process of the MinIO image. But before you get started, recall that we mentioned earlier there are other ways to set the values for Packer variables. The values in variables.pkr.hcl can be overridden with environment variables named PKR_VAR_ followed by the variable name (PKR_VAR_varname). Instead of editing the file directly, we’ll use environment variables to override some of the variables in the next step.
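In a build step, the override boils down to a few exports before the Packer command runs. The variable names below (`vagrant_cloud_token`, `vagrant_cloud_username`, `box_version`) are hypothetical; use the names that are actually declared in variables.pkr.hcl. The `VAGRANT_CLOUD_*` source variables are likewise assumed to be injected by your CI system's secret store.

```shell
# Turn a Packer variable name into the environment variable Packer reads.
pkr_env_name() {
  printf 'PKR_VAR_%s\n' "$1"
}

# Hypothetical variable names; secrets come from the environment, never from the repo.
export PKR_VAR_vagrant_cloud_token="${VAGRANT_CLOUD_TOKEN:-replace-me}"
export PKR_VAR_vagrant_cloud_username="${VAGRANT_CLOUD_USERNAME:-replace-me}"
export PKR_VAR_box_version="0.1.1"

pkr_env_name box_version   # prints PKR_VAR_box_version
```

Because nothing is written to disk, there is no edited variables file that could be committed by accident.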

Follow the steps below to configure the build.

Under the “Build Steps” section, we added some commands. The text for that is below so you can copy and paste it into your configuration.

Execute MinIO build

Once we have the job configured, we can execute the build. Follow the steps below.

Once the build is successful, head over to https://app.vagrantup.com to verify the image has, in fact, been successfully uploaded.


Final Thoughts

In this blog post, we showed you how to take the MinIO S3-compatible object store in distributed mode all the way from building to provisioning on your laptop. This will help you and your fellow developers get up and running quickly with a production-grade MinIO cluster locally without compromising on using the entire feature set. MinIO is S3-compatible, lightweight, and can be installed and deployed on almost any platform, such as Bare Metal, VMs, Kubernetes, and Edge IoT, among others, in a cloud-native fashion.

Let’s briefly recap what we did:

- We created a Packer template to create a MinIO image from scratch.

- We used Vagrant as the provisioner so we could develop locally using the image.

- We leveraged Jenkins to automate building the image and uploading it to Vagrant Cloud for future builds.

We demonstrated that you must achieve a balance between baking and frying. Each organization’s requirements are different, so we didn’t want to draw a line in the sand to say where baking should stop and frying should start. You, as a DevOps engineer, will need to evaluate your requirements and determine the best course for each application. We built MinIO to provide the flexibility and extensibility you need to run your own object storage.

If you implement this on your own or have added additional features to any of our processes, please let us know on Slack!