Data and Drive parity on AIStor

We often talk about how good, fast and reliable access to data is paramount if you want the upper hand in AI/ML. While replication strategies let you distribute your data across multiple nodes or sites, you also need to think about data integrity and resiliency at the drive level.

Why? Because hardware fails at different levels. Some outages take down an entire site. Others take down a portion of the nodes in a cluster. But there are also more granular failures, at the level of individual drives and even bits, that need a type of resilience replication alone cannot provide.

This is where erasure coding comes into play. Erasure coding splits data into shards, adds parity shards, and spreads them across multiple drives, so the data can still be read back even when a few of the drives are unavailable.
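To make the idea concrete, here is a toy sketch of the simplest possible erasure code: one XOR parity shard computed over k data shards. It is an illustration only, not how AIStor encodes data. Reed-Solomon generalizes the idea so that P parity shards can stand in for any P lost shards, whereas a single XOR shard can replace exactly one. The function names are hypothetical.

```python
from functools import reduce


def split_into_shards(data: bytes, k: int) -> list:
    """Split data into k equal-length data shards, zero-padding the tail."""
    shard_len = -(-len(data) // k)  # ceiling division
    padded = data.ljust(shard_len * k, b"\0")
    return [padded[i * shard_len:(i + 1) * shard_len] for i in range(k)]


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> list:
    """Return k data shards followed by one XOR parity shard."""
    shards = split_into_shards(data, k)
    return shards + [reduce(xor_bytes, shards)]


def recover(shards: list, original_len: int) -> bytes:
    """Rebuild the original bytes when at most one shard is missing (None)."""
    missing = [i for i, s in enumerate(shards) if s is None]
    if len(missing) > 1:
        raise ValueError("a single XOR parity shard can repair only one loss")
    if missing:
        # XOR of all shards (data + parity) is zero, so the missing shard
        # equals the XOR of every shard that survived.
        shards = list(shards)
        shards[missing[0]] = reduce(xor_bytes, [s for s in shards if s is not None])
    return b"".join(shards[:-1])[:original_len]  # drop parity and padding


if __name__ == "__main__":
    data = b"erasure coding keeps objects readable"
    shards = encode(data, k=4)  # 4 data shards + 1 parity shard = 5 "drives"
    shards[2] = None            # simulate losing one drive
    print(recover(shards, len(data)) == data)
```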

AIStor uses Reed-Solomon erasure coding to ensure data redundancy in multi-drive deployments. Until recently, the number of drives used for data and parity in MinIO's erasure coding was fixed at N/2 each, where N is the total number of drives used by the MinIO server. This is the highest redundancy MinIO's erasure coding offers.

You can lose up to half of the drives and still be guaranteed that no data is lost.

This still remains our recommended configuration.

However, high redundancy also means higher storage usage. In certain cases such a high parity count, and the storage usage that comes with it, may not be desirable. For example:

- Users may have deployed specialized storage hardware with built-in fault tolerance, so they do not expect frequent failures.

- The data being stored is less critical and worth less than the storage needed to protect it.

- The data in MinIO is already being backed up to other sites.

To address such use cases, AIStor supports storage classes. There are two, Standard and Reduced Redundancy, each with a configurable number of data and parity drives.

Before we get into what each class implies and how to use it, let me explain the various combinations of data and parity drives and the corresponding drive space usage.

Drive space utilization

To see how various combinations of data and parity drives affect storage usage, take the example of a 100 MiB file stored on a 16-drive MinIO deployment.

If you use 8 data and 8 parity drives, the file uses exactly twice its size, i.e. the 100 MiB file takes 200 MiB of space. If you use 10 data and 6 parity drives, the same file takes around 160 MiB. With 14 data and 2 parity drives, it consumes only around 114 MiB.

But it is important to note that as you reduce the number of parity drives, you make data less redundant. For example, with 14 data and 2 parity drives, an object can withstand the loss of only 2 drives; if you lose a third, you lose the data. So MinIO does not recommend this configuration except for non-critical data.

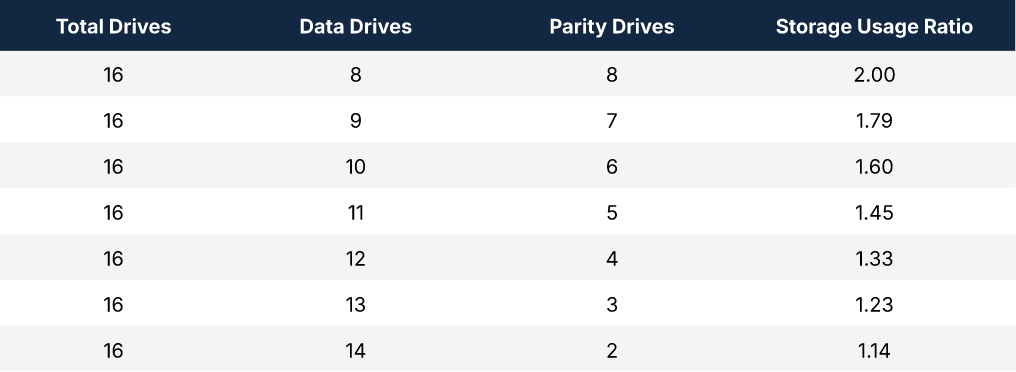

This table summarizes the data/parity drive combinations and the corresponding storage space. The storage usage ratio column is simply the drive space used by the file after erasure encoding, divided by the actual file size.

You can calculate the approximate storage usage ratio with the formula: total drives (N) / data drives (D).
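The formula is easy to check against the 100 MiB example above. This is a minimal sketch: it applies N / D directly and ignores any small per-object overhead, which is why the results are approximate. The 12 data / 4 parity row is included only as an extra data point.

```python
TOTAL_DRIVES = 16
FILE_SIZE_MIB = 100


def storage_usage_ratio(total_drives: int, data_drives: int) -> float:
    """Approximate space used after erasure encoding, relative to the file size (N / D)."""
    return total_drives / data_drives


for data_drives in (8, 10, 12, 14):
    parity_drives = TOTAL_DRIVES - data_drives
    ratio = storage_usage_ratio(TOTAL_DRIVES, data_drives)
    # An object survives the loss of up to `parity_drives` drives.
    print(
        f"{data_drives:>2} data + {parity_drives} parity: ratio {ratio:.2f}, "
        f"{FILE_SIZE_MIB} MiB -> {FILE_SIZE_MIB * ratio:.0f} MiB, "
        f"tolerates {parity_drives} drive failures"
    )
```

Each extra parity drive buys one more tolerated failure at the cost of a higher ratio, which is exactly the tradeoff storage classes let you tune.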

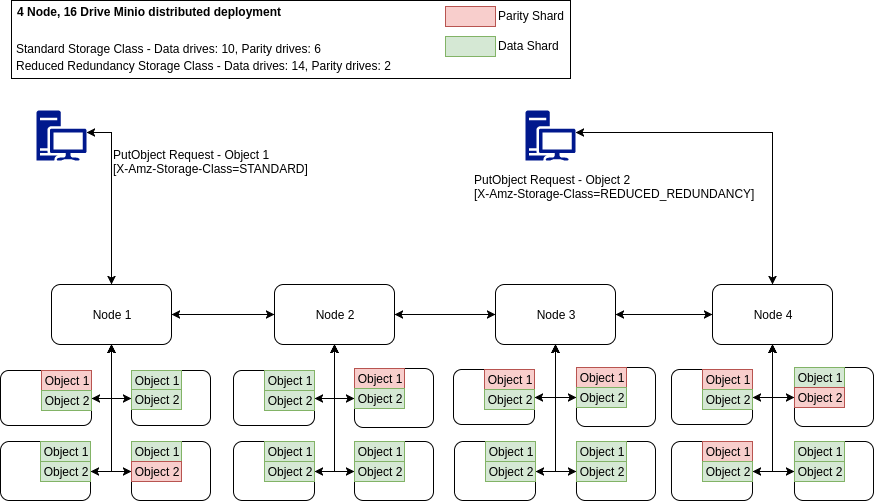

The diagram below shows a sample distribution of data and parity shards on a 4-node deployment with 4 drives per node, with both storage classes enabled.

16-drive distributed deployment with both storage classes

Getting started with MinIO storage classes

Two storage classes are currently supported: Standard and Reduced Redundancy. You can configure them using either of the following:

- Environment variables: set MINIO_STORAGE_CLASS_STANDARD and MINIO_STORAGE_CLASS_RRS to values in the format "EC:Parity", e.g. MINIO_STORAGE_CLASS_STANDARD="EC:5".

- MinIO config file: set the storageclass field, e.g. "storageclass": { "standard": "EC:5", "rrs": "EC:3" }

Then start the MinIO server in erasure code mode. The startup output reports the configured storage classes:

Storage Class:
Objects with STANDARD class can withstand [5] drive failure(s).
Objects with REDUCED_REDUNDANCY class can withstand [3] drive failure(s).
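Each "EC:Parity" value fixes the parity count, and the data count is whatever remains of the N drives. The sketch below shows that mapping with a hypothetical `parse_storage_class` helper; it is not MinIO's own parser. Its defaults and bounds follow what this post describes (Standard defaults to N/2 parity and cannot exceed it; Reduced Redundancy defaults to 2 and cannot go lower), and applying the N/2 cap to Reduced Redundancy as well is an assumption.

```python
import os

DEFAULT_RRS_PARITY = 2  # Reduced Redundancy default (and minimum) parity


def parse_storage_class(value, total_drives, rrs=False):
    """Convert an "EC:<parity>" setting into (data_drives, parity_drives).

    Hypothetical helper for illustration only. A missing or empty value falls
    back to the class default: N/2 parity for Standard, 2 for Reduced Redundancy.
    """
    if not value:
        parity = DEFAULT_RRS_PARITY if rrs else total_drives // 2
    else:
        prefix, sep, number = value.partition(":")
        if prefix != "EC" or not sep or not number.isdigit():
            raise ValueError(f"expected 'EC:<parity>', got {value!r}")
        parity = int(number)
    # Bounds as described in this post; capping RRS at N/2 is an assumption.
    if parity > total_drives // 2:
        raise ValueError(f"parity {parity} exceeds N/2 = {total_drives // 2}")
    if rrs and parity < DEFAULT_RRS_PARITY:
        raise ValueError(f"RRS parity must be at least {DEFAULT_RRS_PARITY}")
    return total_drives - parity, parity


if __name__ == "__main__":
    drives = 16  # assumed deployment size
    std = parse_storage_class(os.environ.get("MINIO_STORAGE_CLASS_STANDARD"), drives)
    rrs = parse_storage_class(os.environ.get("MINIO_STORAGE_CLASS_RRS"), drives, rrs=True)
    print(f"STANDARD: {std[0]} data + {std[1]} parity")
    print(f"REDUCED_REDUNDANCY: {rrs[0]} data + {rrs[1]} parity")
```

With the settings shown above on 16 drives, "EC:5" yields 11 data and 5 parity drives, and "EC:3" yields 13 data and 3 parity drives.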

For further details, refer to the storage class documents here: https://github.com/minio/minio/tree/master/docs/erasure/storage-class

Standard storage class

The Standard storage class is the default storage class of your deployment. Once it is set, all PutObject requests by default adhere to the data/parity configuration defined for the Standard class.

For example, in a 10-drive MinIO deployment with the Standard storage class set to 6 data and 4 parity drives (EC:4), every PutObject request sent to this deployment stores the object using 6 data and 4 parity drives.
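The request-side logic can be sketched as follows: a PutObject with no storage class header falls back to the Standard layout. This is an illustrative sketch, not MinIO source code; `layout_for_put` is a hypothetical name, and rejecting unknown classes is an assumption of the sketch.

```python
STANDARD = "STANDARD"
REDUCED_REDUNDANCY = "REDUCED_REDUNDANCY"


def layout_for_put(headers, total_drives, standard_parity, rrs_parity):
    """Choose (data_drives, parity_drives) for a PutObject request (hypothetical)."""
    # HTTP header names are case-insensitive, so normalize before looking up.
    normalized = {name.lower(): value for name, value in headers.items()}
    # No header means the deployment default: the Standard class.
    storage_class = normalized.get("x-amz-storage-class", STANDARD)
    if storage_class == STANDARD:
        parity = standard_parity
    elif storage_class == REDUCED_REDUNDANCY:
        parity = rrs_parity
    else:
        # Assumption of this sketch: unknown classes are rejected.
        raise ValueError(f"unsupported storage class: {storage_class!r}")
    return total_drives - parity, parity
```

For the 10-drive example, `layout_for_put({}, 10, standard_parity=4, rrs_parity=2)` returns `(6, 4)`. The `rrs_parity=2` value is just an assumed setting for illustration.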

Here are some other notable aspects of the Standard storage class:

- By default, the Standard storage class uses N/2 data and N/2 parity drives, and parity cannot be set any higher than N/2.

- You can optionally set X-Amz-Storage-Class: STANDARD in the object metadata to apply the Standard class to an object explicitly.

- The MinIO server does not return a storage class in the metadata field if the class is STANDARD. This complies with AWS S3 PutObject behavior.

Reduced redundancy storage class

The Reduced Redundancy storage class can be applied to less critical objects that need less redundancy. To apply it, set X-Amz-Storage-Class: REDUCED_REDUNDANCY in the object metadata of a PutObject (or multipart upload) request. This tells the MinIO server to store the object with the data and parity configuration defined for the Reduced Redundancy class.
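From an S3 client, this usually means passing the storage class on the upload call. Below is a minimal sketch that assumes a boto3 S3 client, whose `put_object` accepts a `StorageClass` argument and sends it as the X-Amz-Storage-Class header. The helper name is hypothetical, and the client is passed in so the function works with anything exposing the same `put_object` signature.

```python
def put_reduced_redundancy(s3_client, bucket, key, body):
    """Upload an object with the REDUCED_REDUNDANCY storage class (hypothetical helper).

    `s3_client` is assumed to be a boto3 S3 client pointed at the AIStor endpoint.
    """
    # boto3 maps StorageClass to the X-Amz-Storage-Class request header.
    return s3_client.put_object(
        Bucket=bucket,
        Key=key,
        Body=body,
        StorageClass="REDUCED_REDUNDANCY",
    )
```

For example, with `client = boto3.client("s3", endpoint_url="http://localhost:9000")`, calling `put_reduced_redundancy(client, "scratch", "tmp/cache.bin", data)` stores the object with the Reduced Redundancy layout. The endpoint, bucket, and key are placeholders.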

Here are some other notable aspects of the Reduced Redundancy storage class:

- By default, Reduced Redundancy parity is set to 2 drives, and it cannot be set any lower than that.

- You must set X-Amz-Storage-Class: REDUCED_REDUNDANCY in the object metadata for server-side reduced redundancy to take effect.

- The MinIO server returns a storage class in the metadata field when the class is REDUCED_REDUNDANCY.
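On the client side, this asymmetry means a missing storage class should be read as STANDARD. A minimal sketch, assuming a boto3-style `head_object` response dict, where the X-Amz-Storage-Class response header appears as the `StorageClass` key only when it was returned. The function name is hypothetical.

```python
def effective_storage_class(head_response: dict) -> str:
    """Interpret a boto3-style HeadObject response as a storage class (hypothetical helper).

    The server omits the storage class for STANDARD objects, so an absent key
    means STANDARD; REDUCED_REDUNDANCY objects report it explicitly.
    """
    return head_response.get("StorageClass", "STANDARD")
```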

With storage class support, the MinIO server offers fine-grained control over drive usage and redundancy. You can make the tradeoff best suited to your use case, optimizing for either higher redundancy or lower storage usage by setting the storage class values in your MinIO deployment accordingly.

While you're at it, help us understand your use case and how we can serve you better. Reach out to us at hello@min.io to learn more about how data and parity work in AIStor.