Running Hyper-Scale High-Performance Object Storage on VMware vSphere 7.0: A Technical Deep Dive

Bringing Together IT, Administrators and Applications

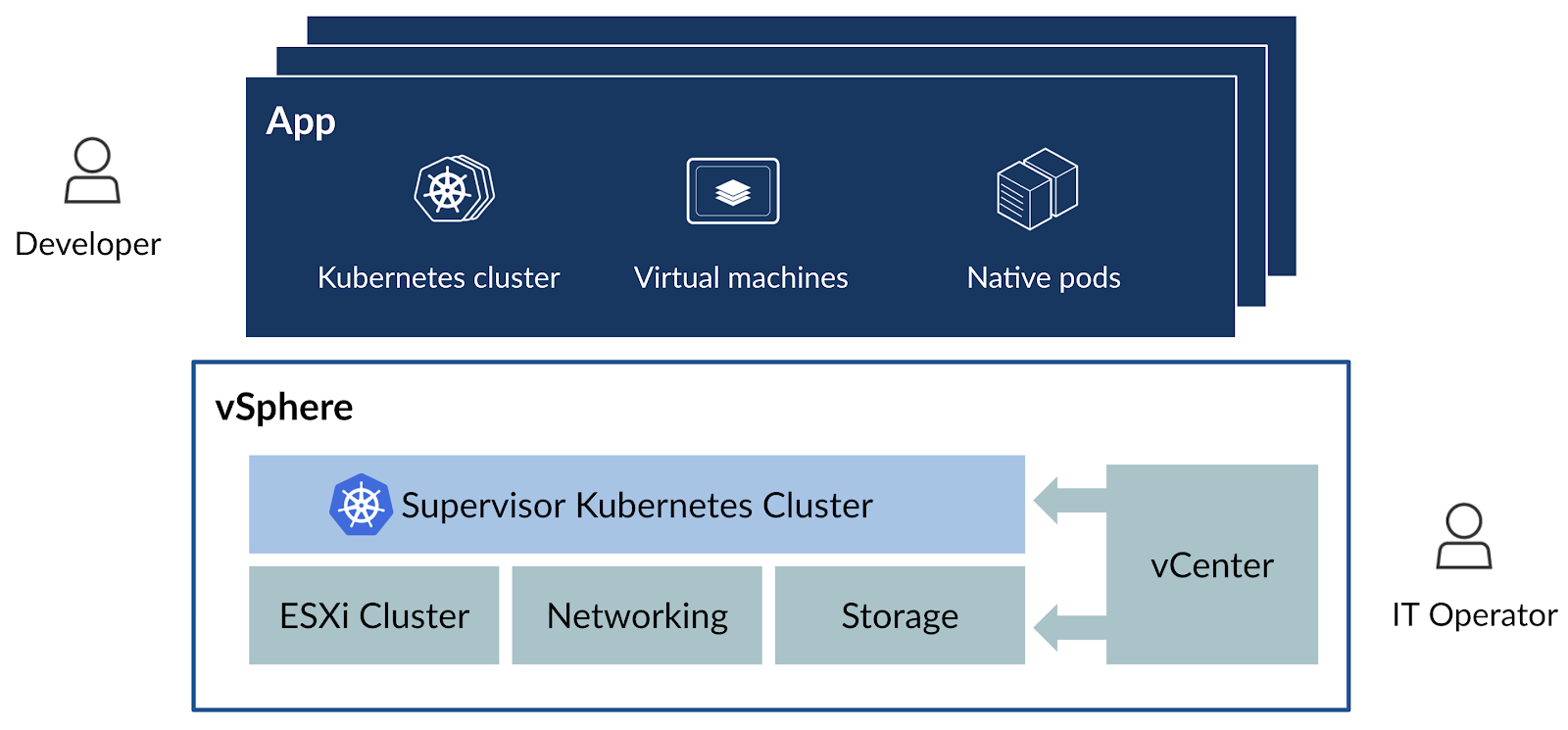

The release of VMware's vSphere 7.0 introduced some exciting new features. One of them is Supervisor Services, which lets you install databases, message brokers, and hyperscale, high-performance object storage directly from the vSphere UI. With the addition of Kubernetes automation, VMware has created a single platform that covers containerization, orchestration, virtualization, storage with vSAN, networking and security.

With vSphere 7.0, IT administrators can provision physical and virtual infrastructure, opening the floodgates for organizations to quickly provision private clouds with the trust and certainty that vSphere will take care of security, automation, reliability and scale. Only VMware can achieve this with its unique role serving IT administrators, application administrators and developers.

With this in mind, let's dive into how to provision high-performance, hyperscale object storage on vSphere 7.0.


Under the Hood and Deploying MinIO

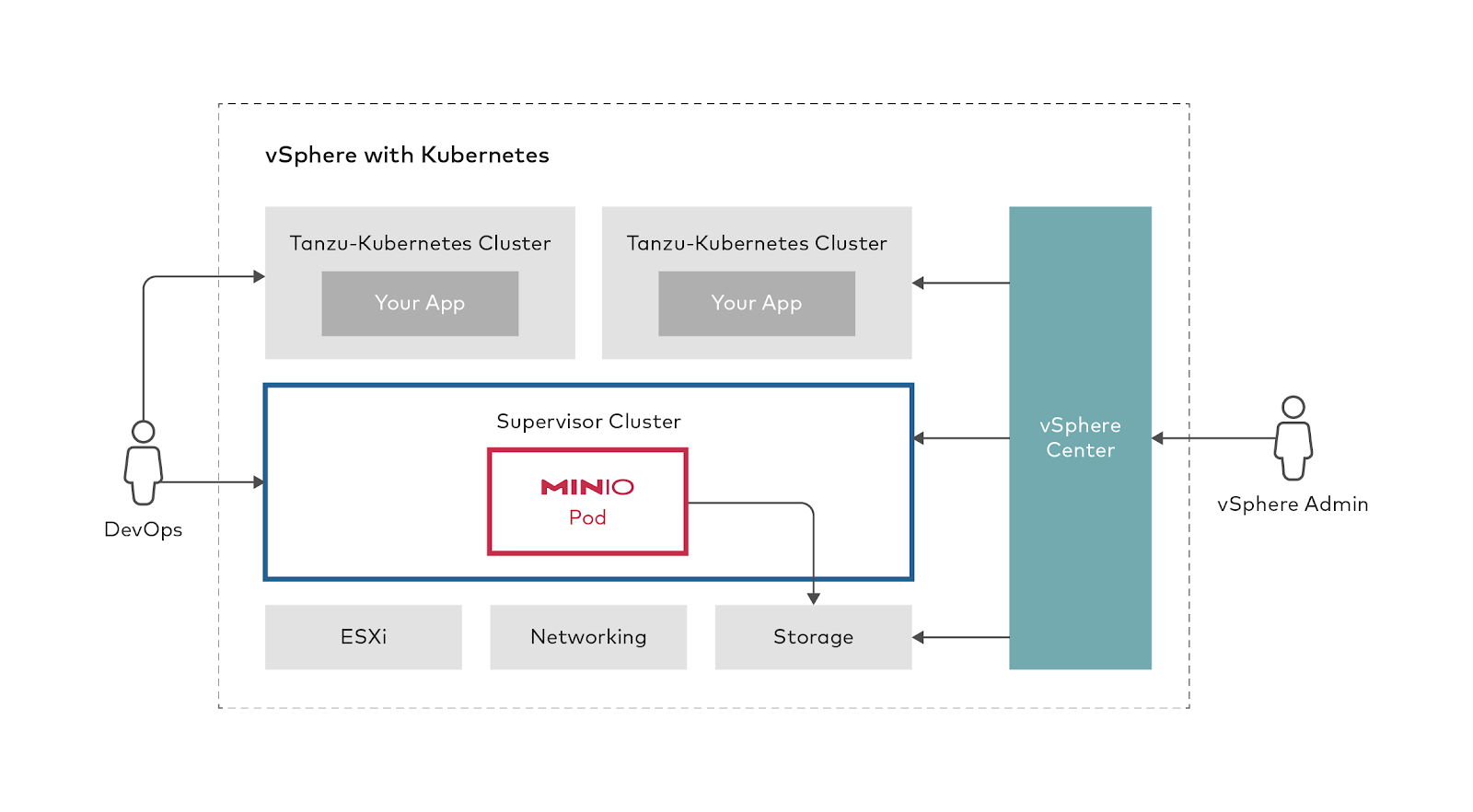

vSphere 7.0 introduces a new, deep integration of Kubernetes into the core of the platform. The integration is powered by what’s called a supervisor cluster. The supervisor cluster orchestrates networking, ESXi and storage, and it is where the persistent services live. It is also where guest Kubernetes clusters with Tanzu, virtual machines, and native pods run.

MinIO is deployed inside the Supervisor Cluster where it has direct access to the ESXi machines, the networking and the storage.

Persistent services have a unique opportunity to access the underlying storage with the introduction of vSAN Direct, a technology that gives persistent services direct access to the underlying disks. This enables IT administrators to provision high-speed, petabyte-scale infrastructure thanks to a simplified data path that relies on data locality.

For applications that benefit from direct access to the drives and from operational automation, vSphere provides critical vSAN Direct functionality. For MinIO in particular, living in the supervisor cluster and sitting on top of vSAN Direct makes it possible for organizations to create extremely large-scale object storage clusters with minimal friction.

MinIO can build very large object storage deployments thanks to its simplicity, statelessness, data protection and data distribution capabilities.

We employ a mathematical technique called erasure coding, which splits every object into a number of data blocks and parity blocks. The parity blocks protect the data blocks against corruption and data loss. Each block is then stored on a different disk, spread across multiple nodes, so that losing a disk or a node does not mean losing the object.
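To make the data-block and parity-block idea concrete, here is a minimal sketch that uses a single XOR parity block. This is a deliberately simplified stand-in: production erasure codes can tolerate the loss of several blocks at once, while XOR parity can repair only one. The function names are ours and purely illustrative.

```python
from functools import reduce
from typing import List, Optional


def split_into_shards(data: bytes, data_shards: int) -> List[bytes]:
    """Split data into equally sized shards, zero-padding the last one."""
    shard_len = -(-len(data) // data_shards)  # ceiling division
    padded = data.ljust(shard_len * data_shards, b"\x00")
    return [padded[i * shard_len:(i + 1) * shard_len] for i in range(data_shards)]


def xor_parity(shards: List[bytes]) -> bytes:
    """Compute one parity shard as the byte-wise XOR of all given shards."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*shards))


def recover_missing(shards: List[Optional[bytes]], parity: bytes) -> List[bytes]:
    """Rebuild at most one missing data shard (marked None) from the parity."""
    missing = [i for i, shard in enumerate(shards) if shard is None]
    if len(missing) > 1:
        raise ValueError("single XOR parity can repair only one lost shard")
    repaired = list(shards)
    if missing:
        survivors = [shard for shard in shards if shard is not None]
        # XOR of the surviving data shards and the parity yields the lost shard.
        repaired[missing[0]] = xor_parity(survivors + [parity])
    return repaired
```

Splitting an object into four shards, computing the parity, replacing one shard with `None` to simulate a failed disk, and calling `recover_missing` returns the original four shards.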

Because MinIO doesn't rely on a centralized metadata store (the metadata lives next to the object), any node in the cluster can serve any request and reconstruct any object, thus achieving a high degree of resiliency. By combining this with a powerful NSX networking layer and the reliability of vSAN, one can easily build a world-class object storage solution for the private cloud that will match any public cloud offering.
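One way to picture why no central metadata lookup is needed: if the location of an object is derived from its name alone, every node can compute the same answer independently. The sketch below illustrates that idea with SHA-256 as a stand-in hash. It is not MinIO's actual placement algorithm, and the drive names are hypothetical.

```python
import hashlib
from typing import List


def drives_for_object(object_key: str, drive_sets: List[List[str]]) -> List[str]:
    """Pick the drive set for an object purely from its key.

    Any node that knows the same list of drive sets computes the same
    result, so no shared lookup table is needed. SHA-256 is used here
    only as an illustrative, deterministic hash.
    """
    digest = hashlib.sha256(object_key.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(drive_sets)
    return drive_sets[index]


# Hypothetical layout: two sets of drives spread over two nodes.
DRIVE_SETS = [
    ["node1/disk1", "node2/disk1"],
    ["node1/disk2", "node2/disk2"],
]
```

Calling `drives_for_object("logs/2021/app.log", DRIVE_SETS)` on any node returns the same drive set, which is what lets every node serve every request.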

All this can be achieved from the vSphere UI.

Let's get started and deploy MinIO using Supervisor Services on vSphere 7.0. To begin, you will need some storage capacity on your vSAN layer. If you want to try vSAN Direct, you should configure each disk on every machine as a separate storage pool. There is also a new storage policy called vSAN SNA (Shared Nothing Architecture) that works just like vSAN but keeps the underlying store local to each node in the cluster.

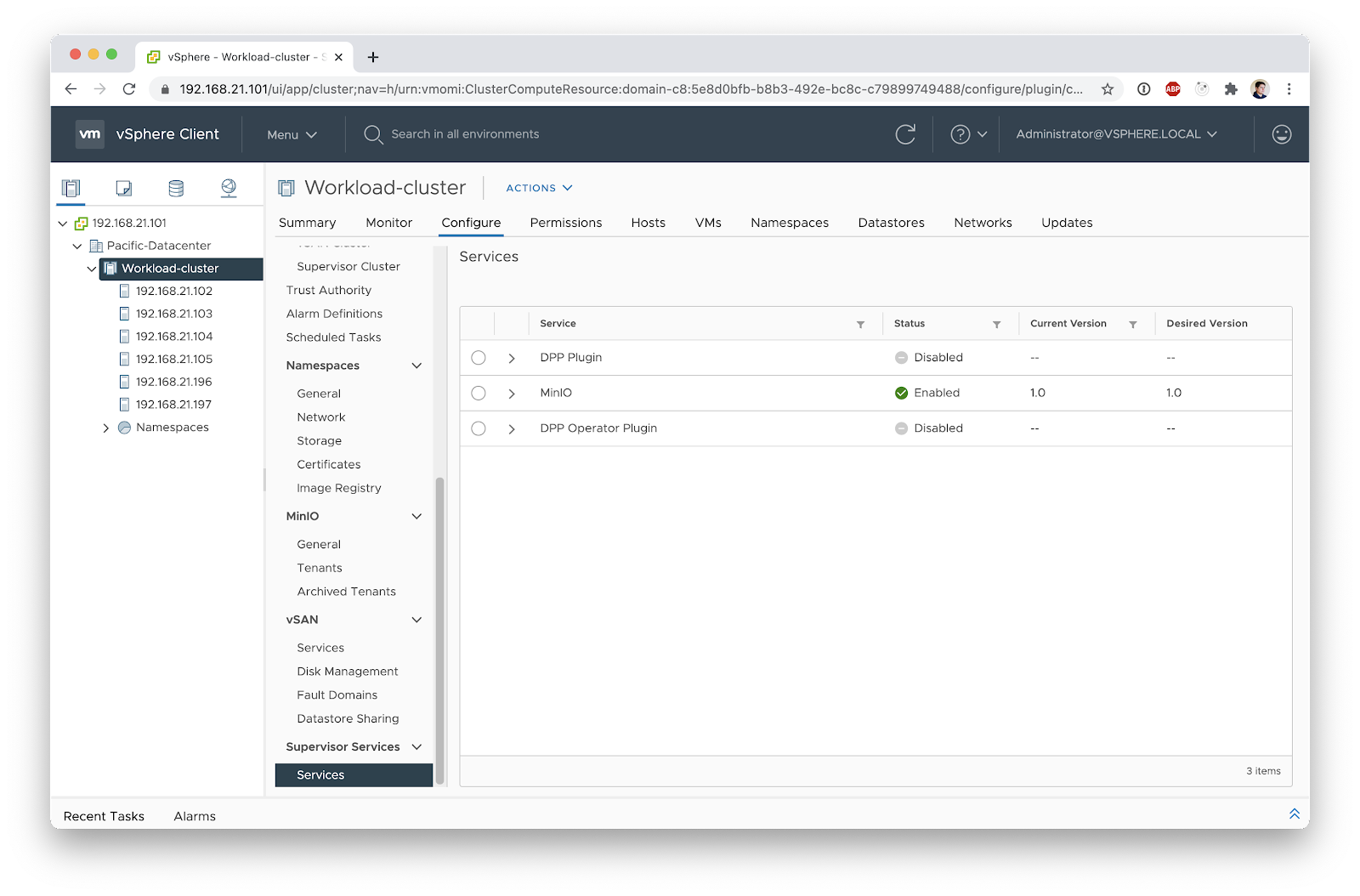

To get started, navigate in the vSphere UI to the cluster to which you want to deploy your first object storage and then go into Configure. From there, scroll to the bottom to find Supervisor Services.

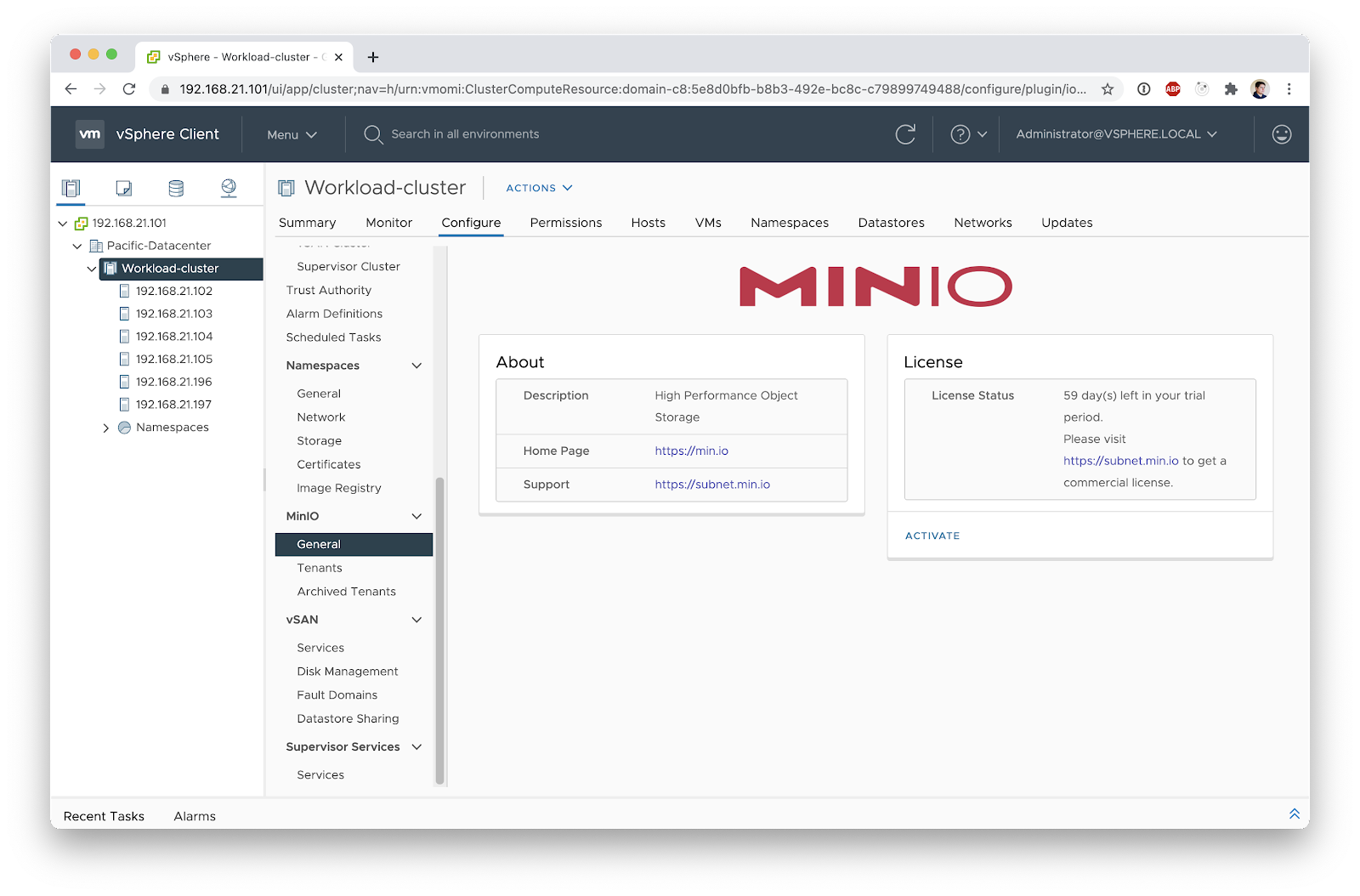

Now select MinIO and click Enable. You will be presented with the option to configure a private registry, which is useful for air-gapped deployments. On the next screen you'll see our EULA. Finish activating the Supervisor Service and you'll be greeted with a message indicating that MinIO has been installed and that you should refresh your browser.

Your cluster's Configure section now includes a MinIO menu.

With the introduction of namespaces in vSphere 7.0 you can now more easily delegate access to a set of resources to another administrator. MinIO embraces this model and enforces that only one MinIO Tenant exists per namespace. Let’s create a new namespace and attach some storage policies to it.

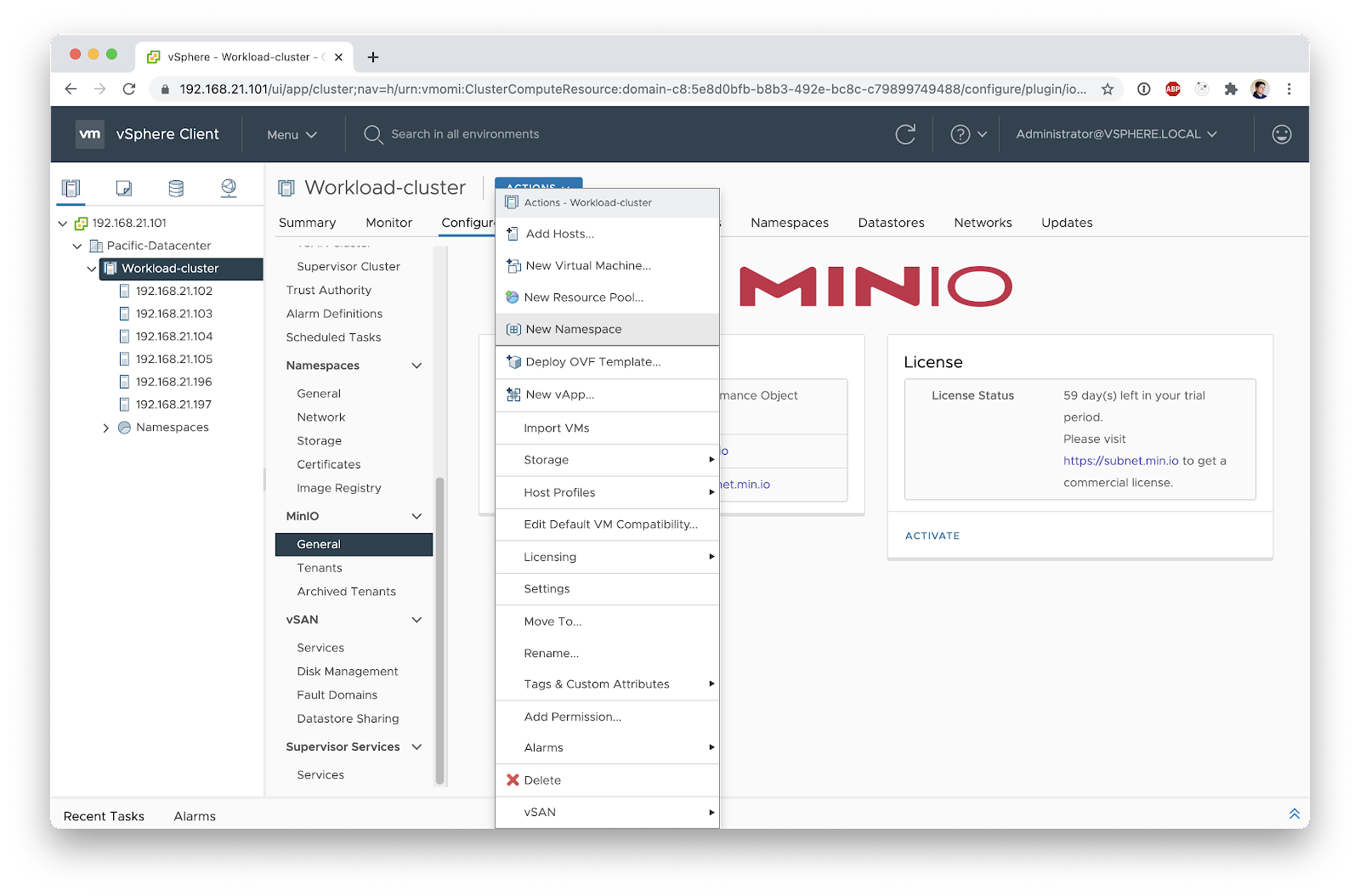

First, create a namespace by going to your cluster Actions and selecting New Namespace.

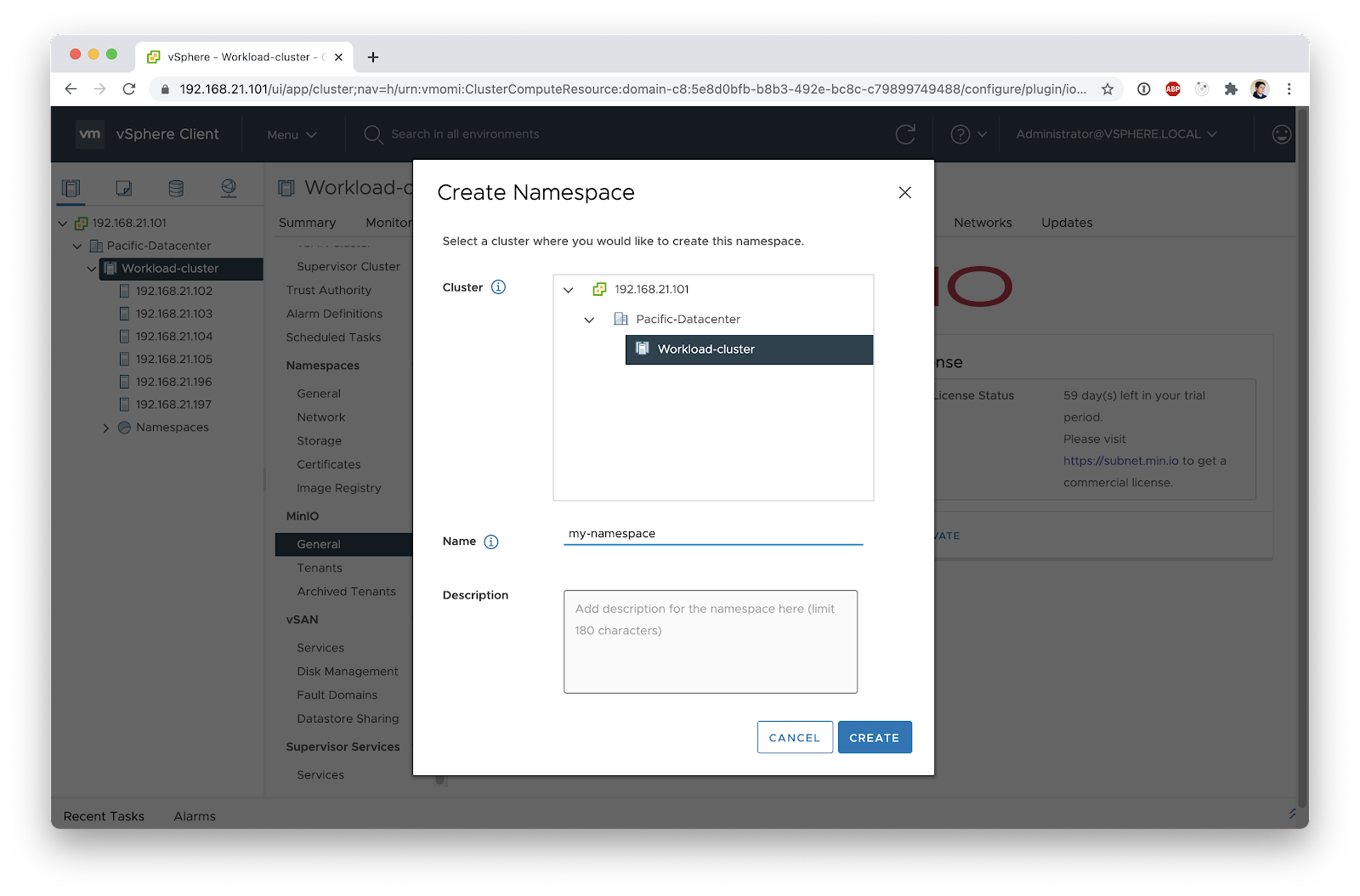

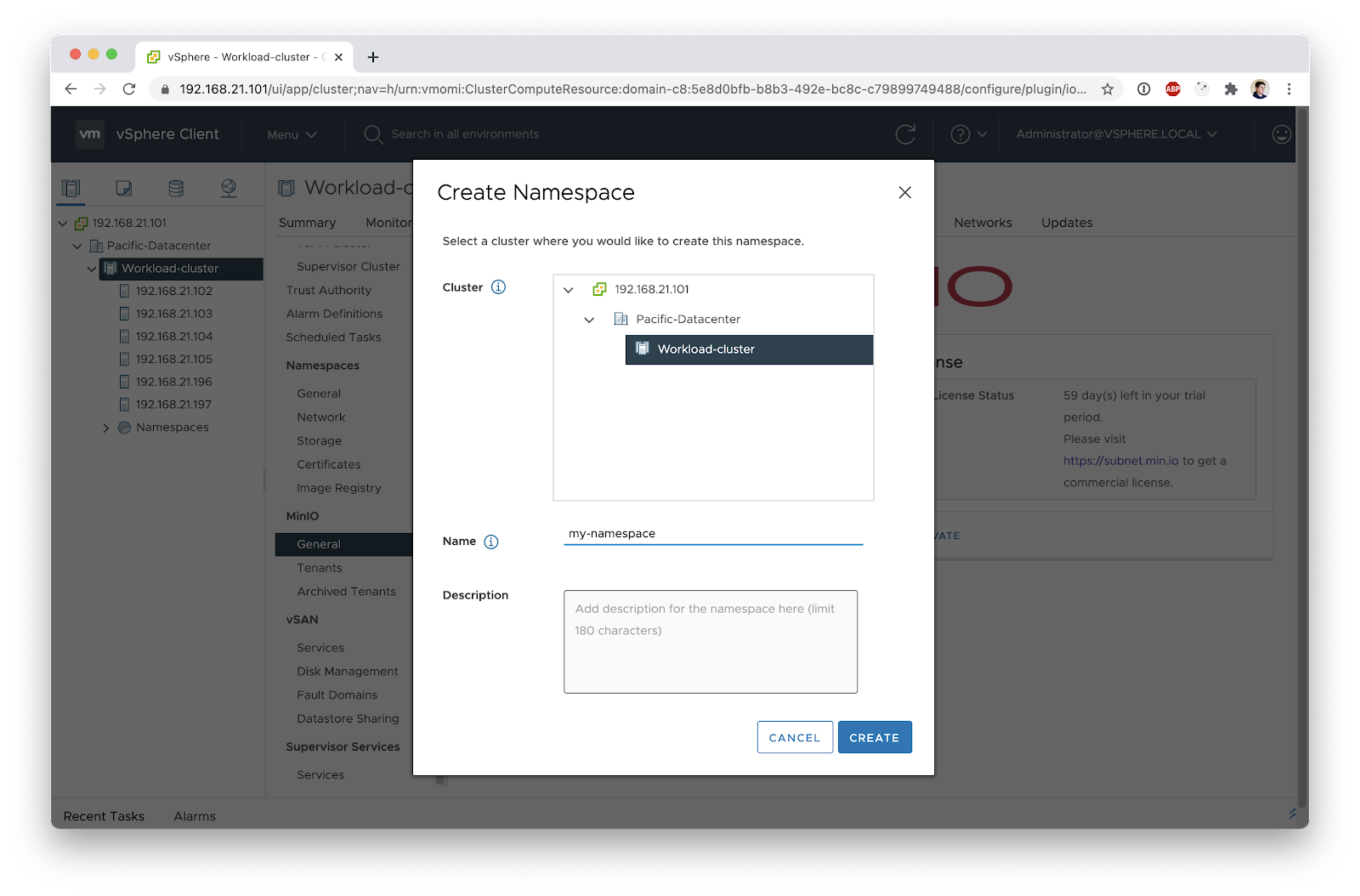

Name your Namespace

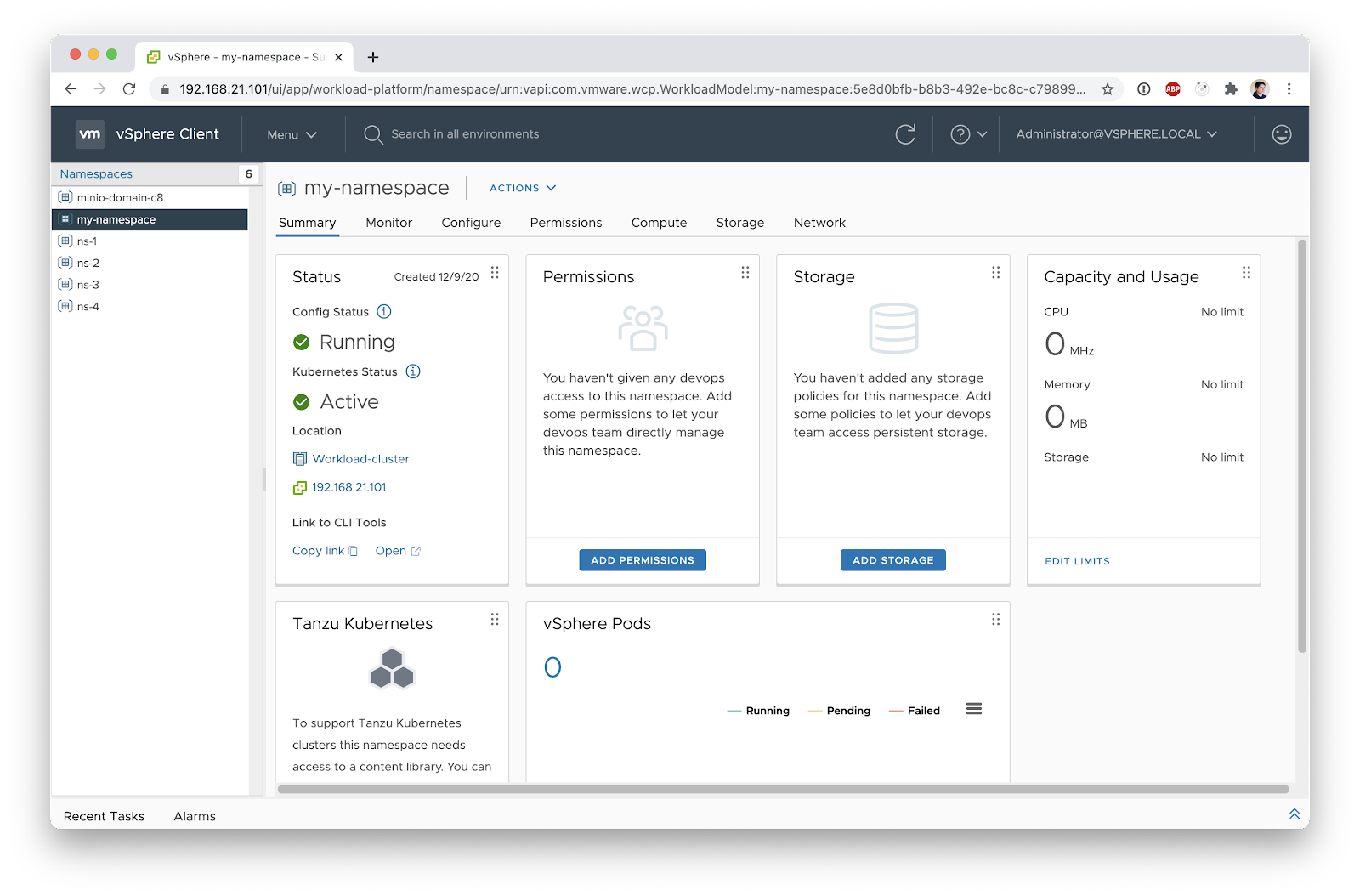

On your new namespace screen, attach some storage policies to your namespace. The MinIO Tenant can only use disks associated with the storage policies attached to the namespace. You'll notice that after installing the MinIO Supervisor Service, two new storage policies were configured: one for vSAN Direct and one for vSAN SNA. While both vSAN options are appropriate for MinIO Tenants, MinIO strongly recommends vSAN Direct for best performance.

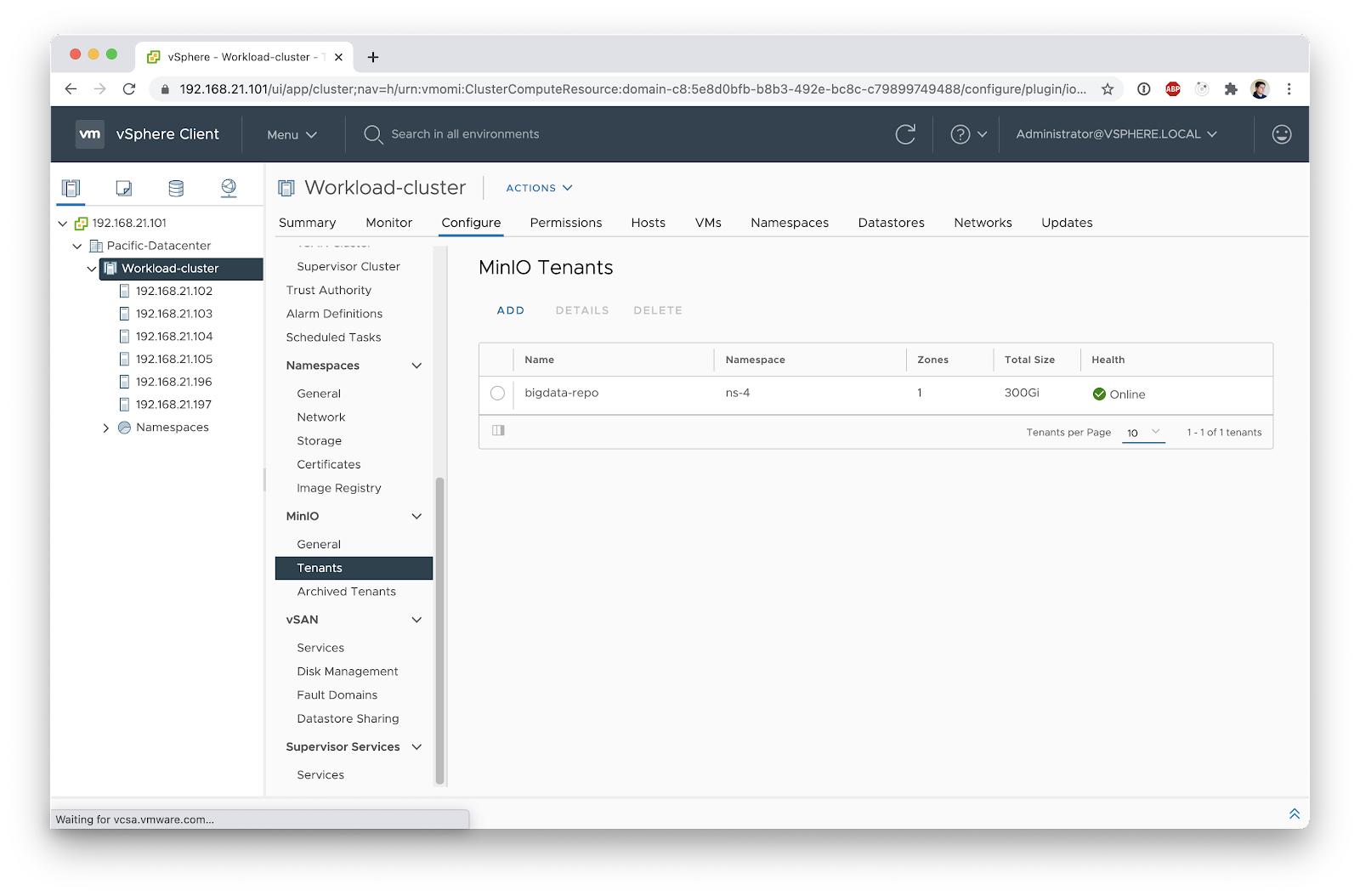

Now we are ready to create our first Tenant. Go back to your Cluster and navigate to Configure > MinIO > Tenants. This screen shows all the tenants in your cluster and which namespace they reside in. Hit the Add button to start configuring your first tenant.

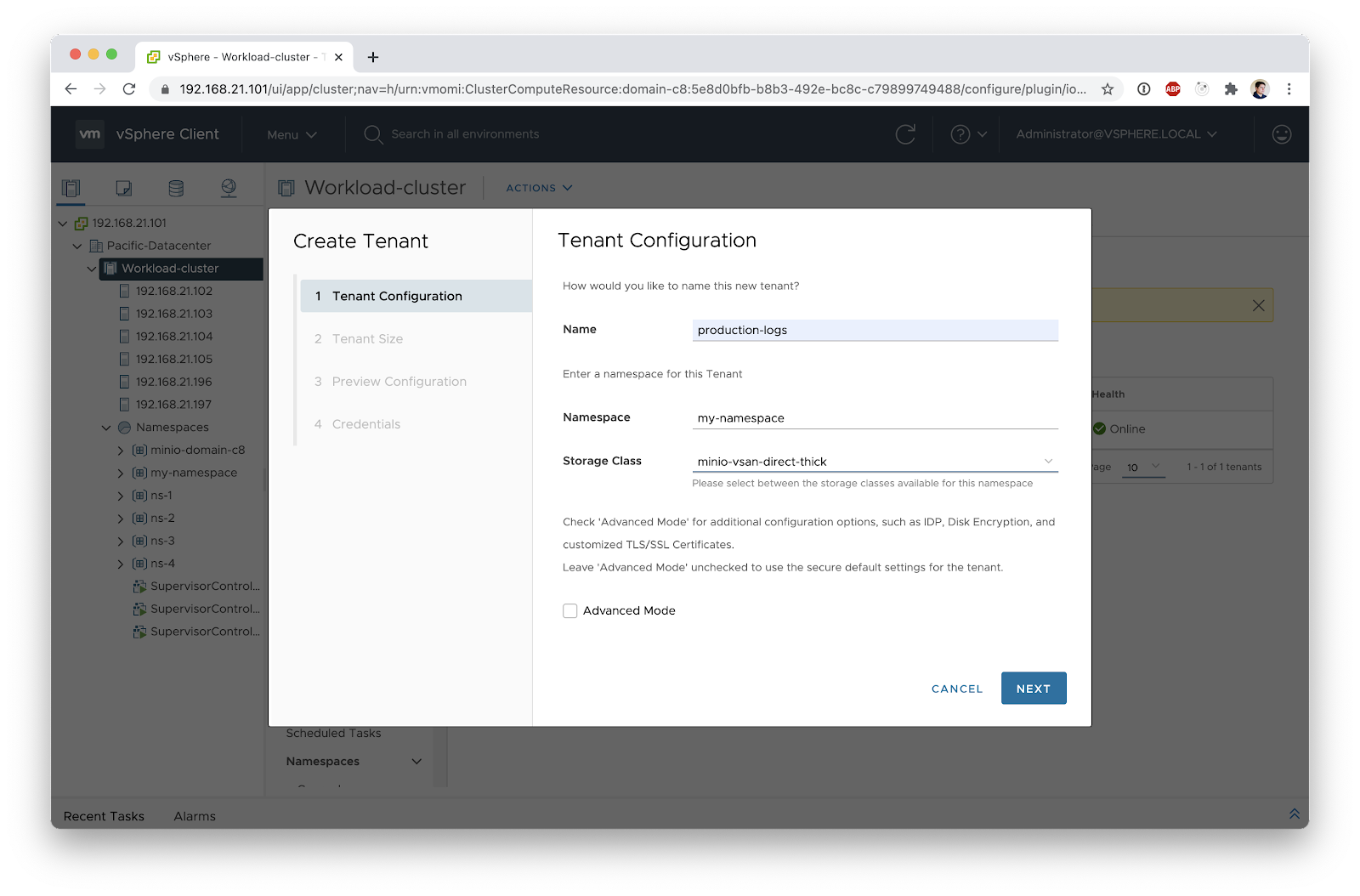

The Add screen will guide you through the process of setting up a new Tenant. The first thing to choose is the name of your new tenant. We will call ours bigdata-logs. You will notice an option for Advanced Mode. Use this option when you want to customize your MinIO images or configure encryption, TLS settings and IDPs (OpenID and LDAP are supported).

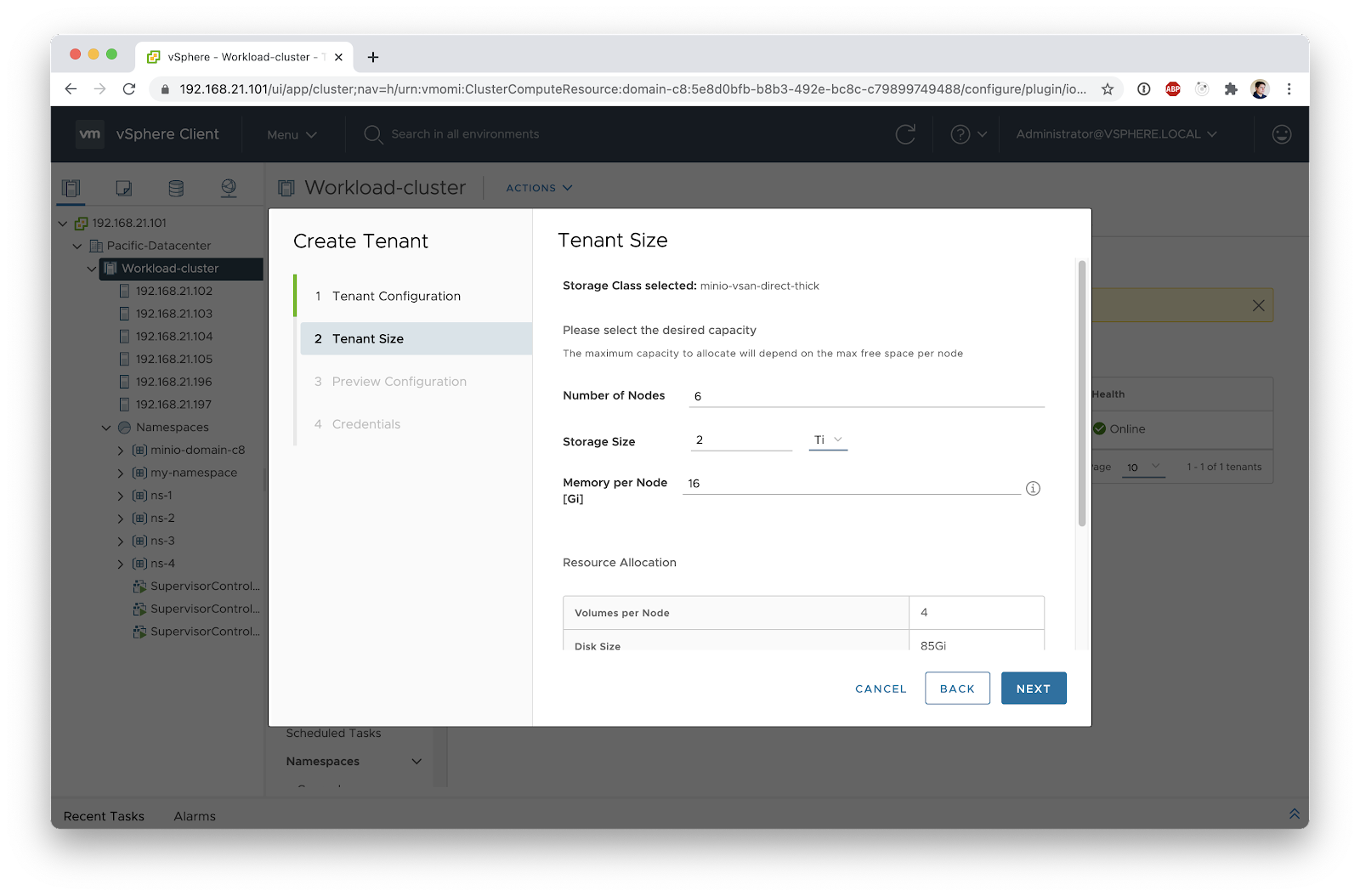

Click Next to size your tenant. This is where the distributed nature of MinIO comes into play. By default, we recommend you spread your object-storage cluster across as many nodes as possible, so that your data stays available even if some nodes go down.
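A quick back-of-the-envelope calculation shows why a wider spread helps. The sketch below assumes that the blocks of one object are distributed as evenly as possible across the nodes, and that an object stays readable as long as no more blocks are lost than there are parity blocks. The numbers used are illustrative, not MinIO defaults.

```python
import math


def node_failures_tolerated(blocks_per_object: int, parity_blocks: int, nodes: int) -> int:
    """Estimate how many whole nodes can fail while objects stay readable.

    Assumes each object's blocks are spread evenly over the nodes, so a
    failed node takes out ceil(blocks_per_object / nodes) blocks at worst.
    """
    blocks_per_node = math.ceil(blocks_per_object / nodes)
    return parity_blocks // blocks_per_node
```

With 16 blocks per object, 4 of them parity, the same data tolerates one node failure on 4 nodes, two on 8 nodes, and four on 16 nodes.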

Finish Setup

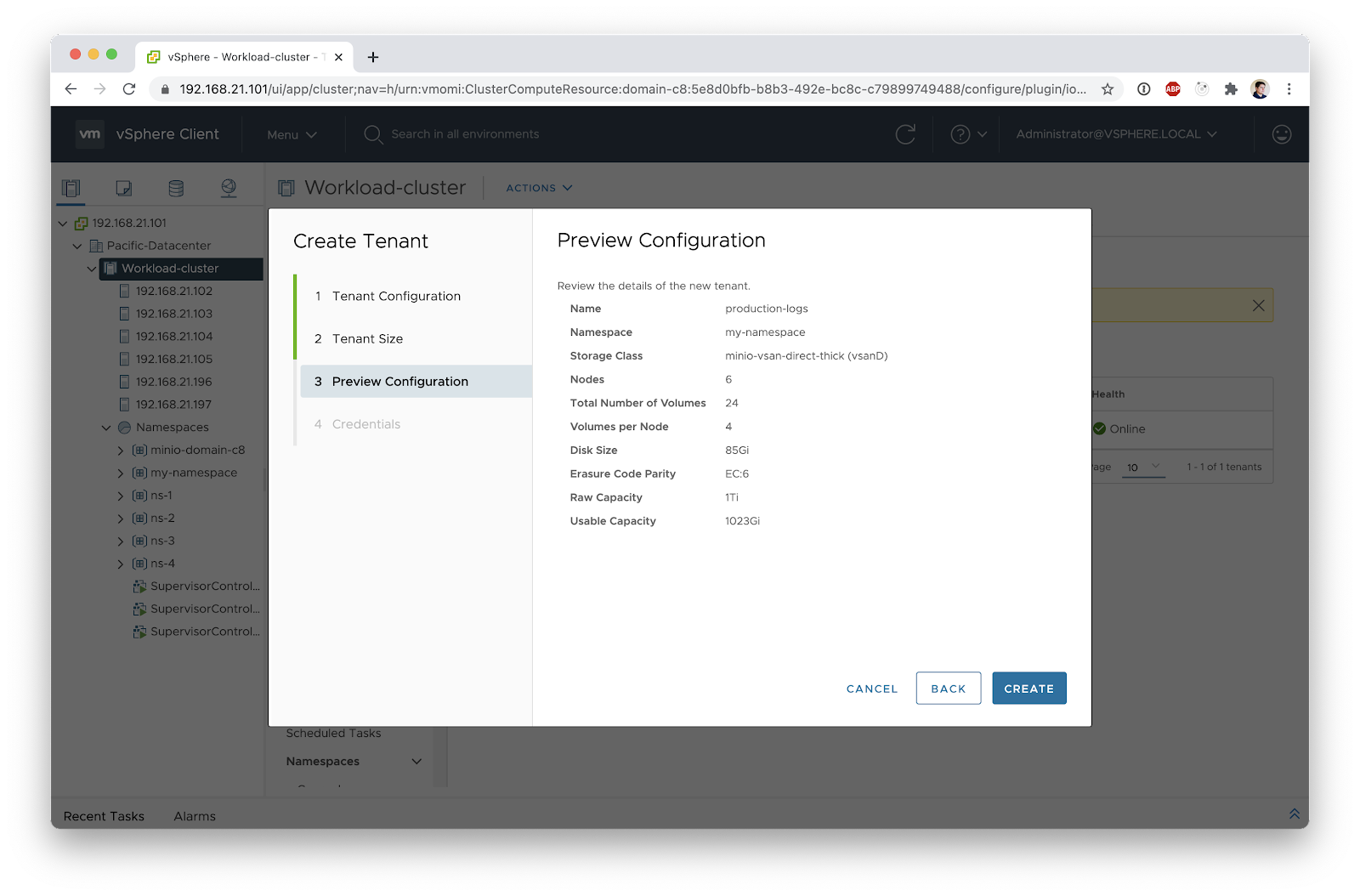

Finally, let's review what's going to happen.

We are going to create a 2Ti tenant across 6 nodes, each one using 16Gi of memory. MinIO automatically calculates the number of volumes and the capacity to request per volume based on the desired total storage and number of nodes.
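The arithmetic behind this kind of sizing can be sketched as follows. The wizard's real algorithm is not described here, so treat this as an assumption: the number of volumes per node is a hypothetical input (4 in the example), and the requested capacity is simply divided evenly and rounded up.

```python
from dataclasses import dataclass

TIB = 1024 ** 4  # bytes in one tebibyte (the "Ti" unit)


@dataclass(frozen=True)
class TenantLayout:
    nodes: int
    volumes_per_node: int
    bytes_per_volume: int

    @property
    def total_volumes(self) -> int:
        return self.nodes * self.volumes_per_node


def plan_layout(total_bytes: int, nodes: int, volumes_per_node: int) -> TenantLayout:
    """Divide the requested capacity evenly across all volumes, rounding up."""
    total_volumes = nodes * volumes_per_node
    bytes_per_volume = -(-total_bytes // total_volumes)  # ceiling division
    return TenantLayout(nodes, volumes_per_node, bytes_per_volume)


# Example from the text: 2Ti across 6 nodes; 4 volumes per node is assumed.
layout = plan_layout(2 * TIB, nodes=6, volumes_per_node=4)
```

Here `layout.total_volumes` is 24, and `layout.bytes_per_volume` is the per-volume request that, multiplied by 24, covers the full 2Ti.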

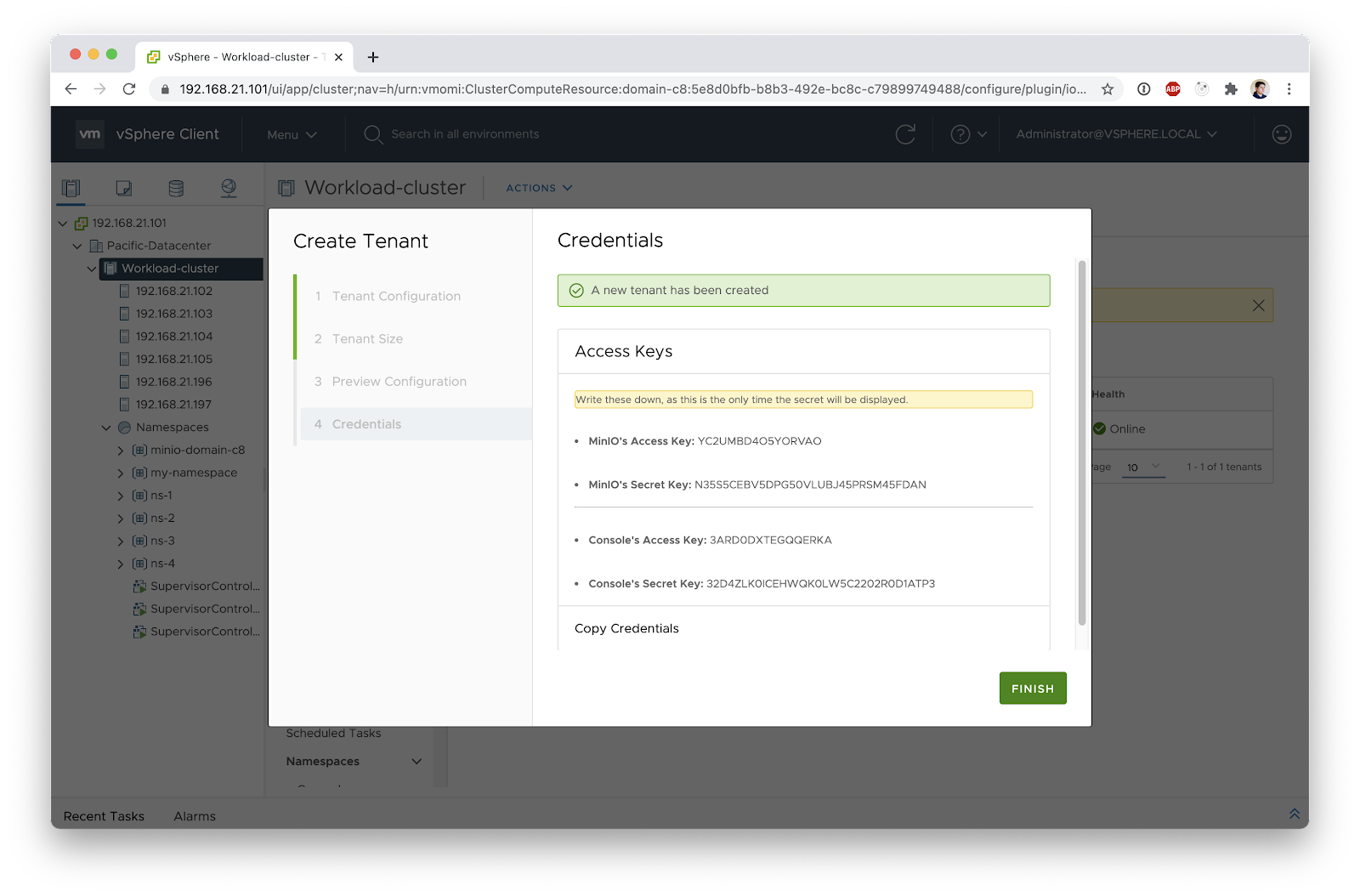

After you hit Create, you'll receive a set of auto-generated credentials for managing MinIO and accessing the Console UI. MinIO only shows these credentials once, so make sure you save them securely before clicking Finish.

It’s that simple.

After a few minutes, a full-fledged object-storage cluster will be created. If you activate Advanced Mode on the first screen when creating the tenant, you are guided through configuring additional features such as:

- Identity providers

- TLS Management

- Encryption at rest

- Custom images, useful for air-gapped environments

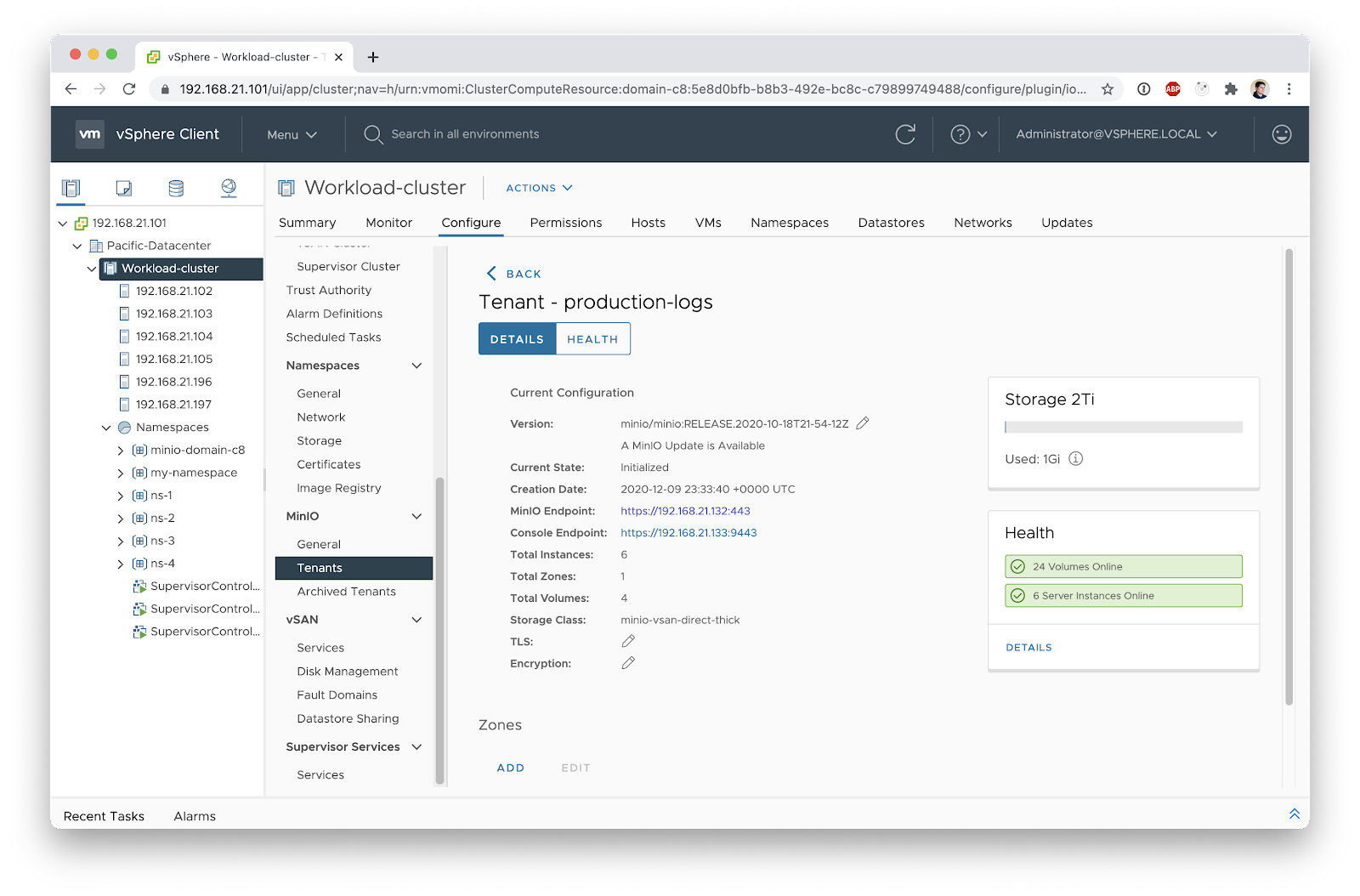

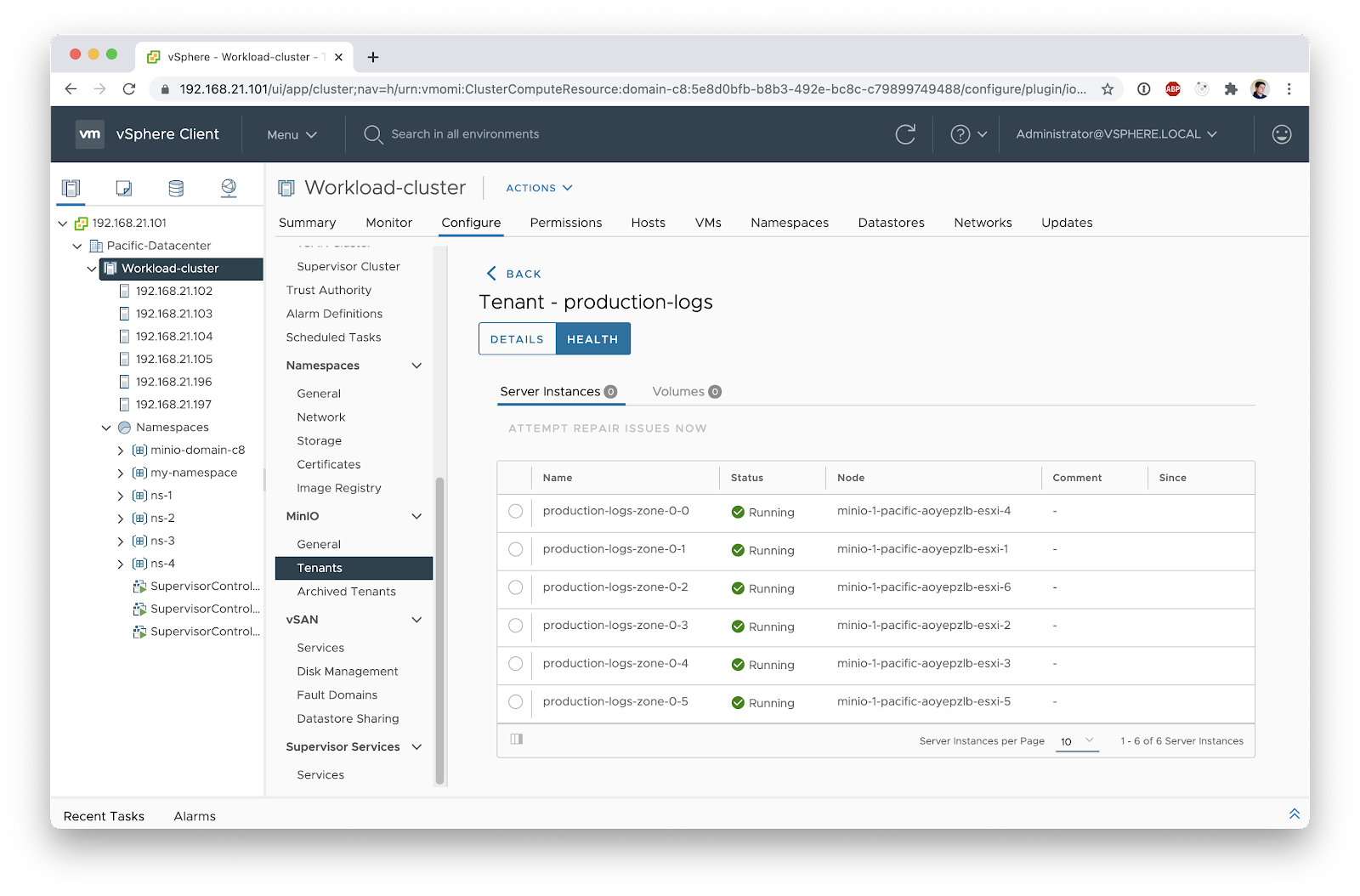

You can go into the tenant details screen to see how the tenant is doing. This screen gives you insight into the tenant's usage and shows any available MinIO updates. It also lists the endpoints you can use to start consuming your new object storage cluster. From here you can monitor the health of your cluster and review your options for expansion.
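Once you have an endpoint, a simple first check from a client machine is to call MinIO's unauthenticated liveness path, `/minio/health/live`. The sketch below uses only the Python standard library. The hostname in the usage note is hypothetical, and a tenant that uses a certificate your machine does not trust will show up as not live with this minimal check.

```python
import urllib.error
import urllib.request


def liveness_url(endpoint: str) -> str:
    """Build the liveness-check URL for a tenant endpoint."""
    return endpoint.rstrip("/") + "/minio/health/live"


def is_live(endpoint: str, timeout: float = 5.0) -> bool:
    """Return True if the endpoint answers the liveness check with HTTP 200."""
    try:
        with urllib.request.urlopen(liveness_url(endpoint), timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, DNS failure, TLS error, timeout or non-2xx status.
        return False
```

For example, `is_live("https://minio.bigdata-logs.example.internal")` would report whether a tenant behind that (made-up) address is responding.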

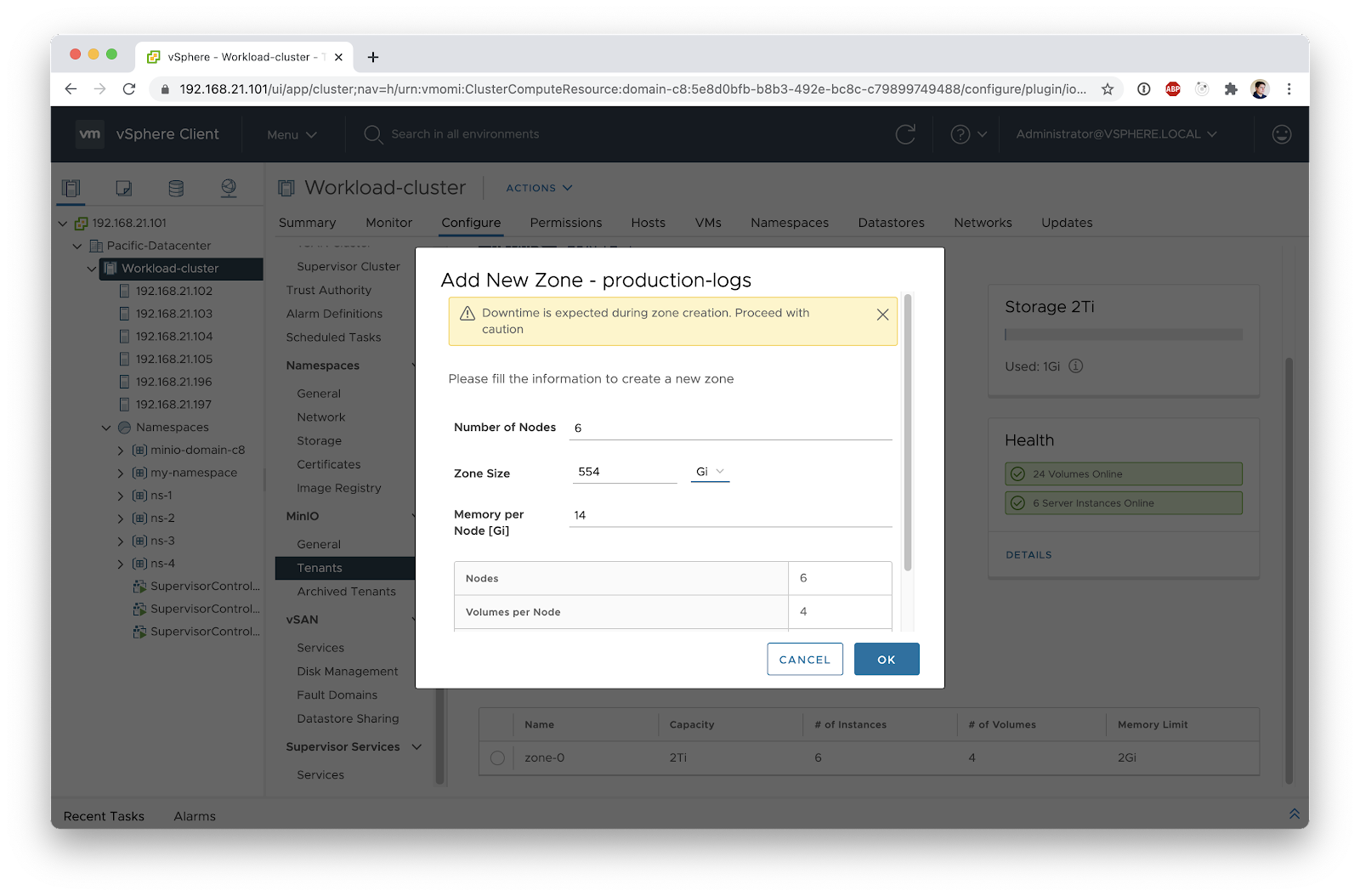

If you need more capacity later on, select the Add Zone option to expand the cluster.

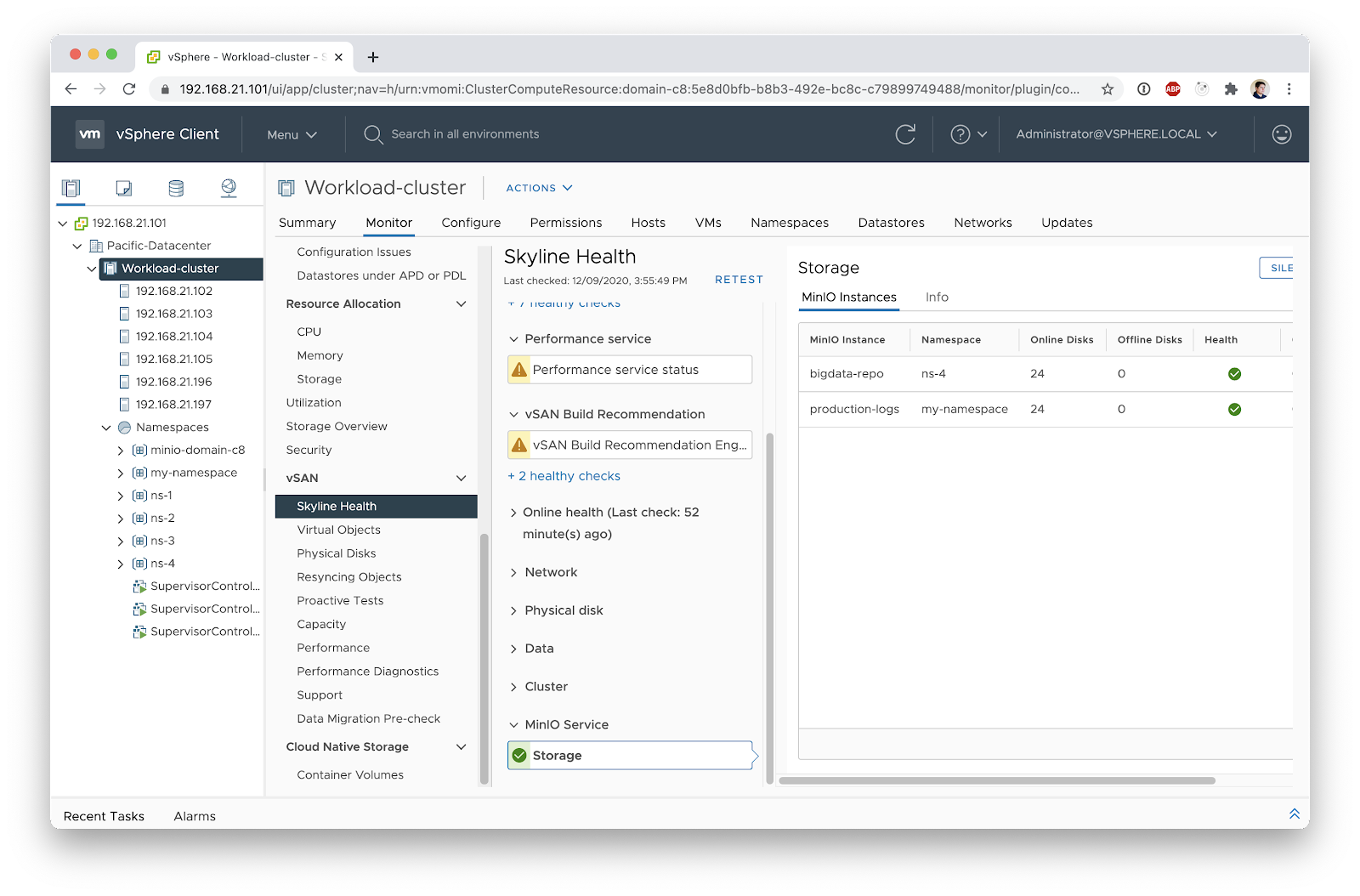

MinIO also integrates with vCenter’s monitoring and operational model. You can see the state of your object-storage clusters by going to your cluster's Monitor > vSAN > Skyline Health.

Closing Remarks

MinIO is pleased to be an integral part of VMware’s Kubernetes architecture. While the vision is expansive, MinIO is able to bring distinct capabilities and functionality to the vSAN Data Persistence platform ecosystem. The result is performant, persistent storage and a host of cloud-native applications that will be turnkey for VMware’s partners and customers.

While simple, this is a very powerful combination that covers almost anything a developer, IT admin or DevOps engineer would need. To go deeper on the integration, feel free to visit our dedicated VMware page or our Slack channel, or use Ask the Expert to engage with one of our engineers.