Object Storage Optimized Databases: Trends & Industry Leaders

Databases have long been the bread and butter workload of SAN-based block storage and NAS-based file storage. The rise of modern object storage has disrupted this seemingly static domain and the expectation is that the entire OLAP database sector will turn to an object storage-first positioning over the next few years, leaving only a handful of increasingly niche workloads for the SAN/NAS vendors (in the OLAP space, OLTP is a different story).

The reason is simple - the scale of data accessed by databases is simply too large to fit in memory and database vendors aren’t particularly interested in becoming storage vendors themselves. The intense competitiveness of the database industry centers around high-speed and advanced query, aggregation, and map reduce functionalities. The natural partnership to support the scale of storage lies with the cloud-native data storage providers (MinIO, AWS S3, Azure Blob, GCP).

In this model, organizations store large volumes of data from a variety of sources in object storage and then access this data through their preferred database with queries. By using this architecture, these organizations opt for a simplified and truly modern approach to data management. They query, join, and analyze their data wherever it happens to be rather than leaving behind valuable data or building complex, costly and often fragile data pipelines to move it to their central databases.

Databases generally interact with object storage as primary storage through external tables. External tables are database objects that allow users to access and query data residing outside the database. Users can define the structure and location of the external data through external table definitions and then perform SQL queries as if the data were within the database, making external data appear and behave like regular database tables.

This strategy aligns with the overall data architecture design of disaggregating storage and compute, where organizations can allocate and scale these resources independently and in doing so optimize performance for both. The most valuable data is unfortunately often the data you chose to leave behind. Disaggregation releases storage pressure on databases and empowers organizations to gather and store all their precious data rather than be forced to pick and choose based on arbitrary thresholds to keep their queries performant.

This phenomenon is occurring at both ends. Not only are databases making this move of their own volition, enterprises are accelerating the transition by actively pushing and/or selecting vendors with the capability to read directly from an object store without ingesting. This makes sense as those same enterprises are architecting data lakes using modern object stores and modern open table formats like Iceberg.

Evidence for Object Storage as Primary Storage

We have covered the object storage as primary storage movement from its earliest days and have the scars to prove it. What was once considered blasphemy is now considered accepted fact (feel free to ask Stephen Foskett of the great Field Day series). Nonetheless, for those less familiar here are the reasons that modern object storage is primary storage:

- Cloud-native object storage is specifically designed for scalability, making it a perfect choice for organizations with databases managing extensive workloads. Software-defined MinIO scales quickly and easily using Server Pools.

- Cost-effectiveness is another factor in the decision to switch to future-facing object storage. Rather than spend time and money moving around data, organizations are instead saving time and money by accessing their data wherever it lives.

- Control, with the subtext of security, is central to the decision to move to object storage as primary storage. With this architecture, your data never moves or replicates outside of your security protocols. You get all the extensibility benefits of a complete data integration with none of the security pitfalls that can arise when you begin to move data around. MinIO aligns with the industry standard of encryption at rest and supports connecting to on-prem and cloud KMS providers like Hashicorp Vault or Amazon Web Services KMS.

- Portability is paramount to data architects interested in shopping around between cloud providers and on-prem solutions for the best deal. Here, the different reasons for supporting object storage begin to intertwine as this mercenary approach can have cost saving effects. MinIO can be deployed in the public clouds, private cloud, baremetal infrastructure, orchestrated environments, and on the edge. All doors are open to object storage that does not care where your data lives.

- Data accessibility is essential, and the simplicity of accessing your data without the additional steps involved in data migration is remarkably empowering. The simplest design is often the most robust.

- Performance is perhaps the most important component of any storage system running data-intensive workloads. As the foundation of your application stack, object storage performance can dictate the success or failure of application adoption. MinIO is the fastest object storage available.

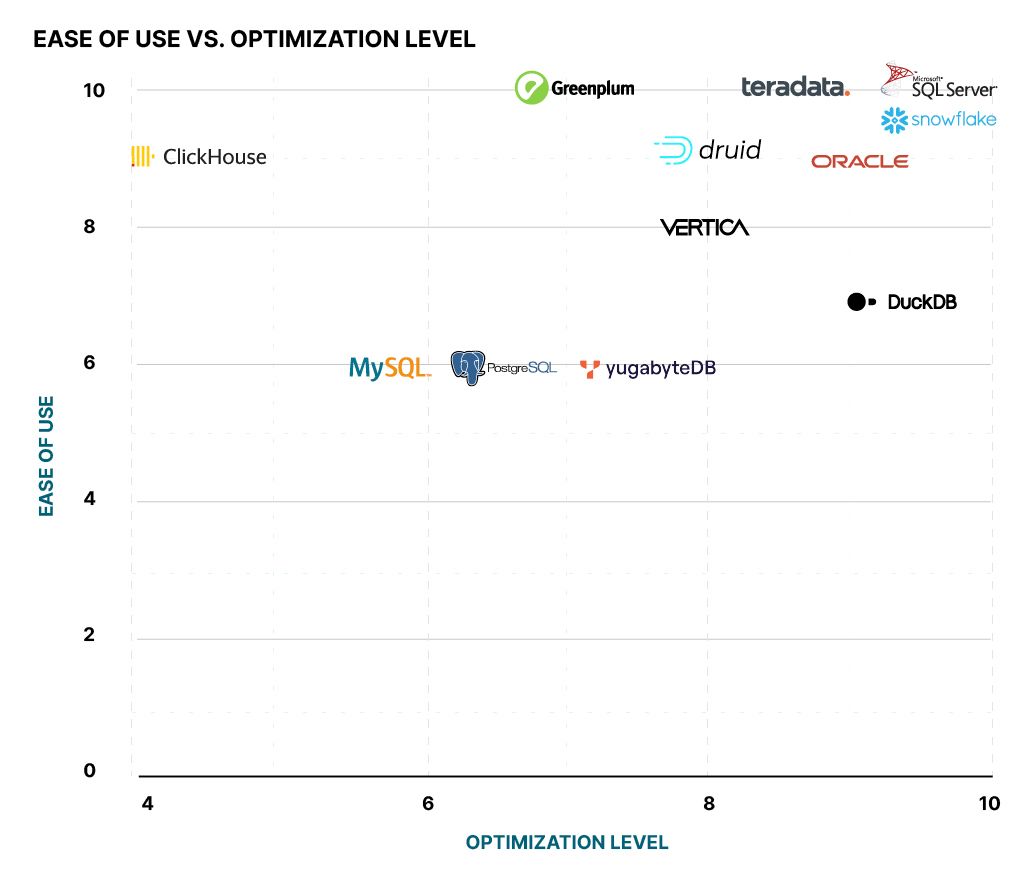

A Survey of Leading Object Storage Optimized Databases

Every movement starts with some bold player making a move. In the technology space, they are generally the upstarts. In the database space - the opposite is true. It is the behemoths in the space that have pivoted to object storage. This is partly because those behemoths target the largest of the large enterprises and they in turn have the data at volume challenges that have required them to adopt modern object storage. It is partly because the behemoth database players are simply more attuned to where the world is heading - and less about where it has been. Either way - here is a survey of the leading databases that are supporting object storage as a first class citizen.

Snowflake

Snowflake was a pioneer in the external tables movement, introducing the capability in 2021. This integration not only simplifies data flows and accelerates data availability but also offers cost savings and the ability to analyze real-time data without data movement. Both automatic and manual partitioning of external tables is supported by Snowflake. It should be noted that this was an easy move for Snowflake - they have been reselling S3 storage since inception. They, more than anyone else, appreciate the power and scale of object storage.

Microsoft SQL Server

Microsoft SQL Server 2022 brought two valuable, MinIO-centric features to the market with the 2022 release: S3 API support for cloud backup and expanded external tables for flexible data querying without movement. This integration allows seamless access to diverse data sources, reduces data replication efforts, and enhances disaster recovery. Plus, SQL Server 2022's security and compliance features pair well with MinIO's tiering capabilities, making it easier to process both relational and non-relational data efficiently.

Demo Using SQL Server 2022 to Store and Query Data on MinIO

Teradata

Teradata interacts with object storage with Native Object Storage (NOS). NOS support for object storage was announced in 2021. NOS leverages SQL to interact with data stored in S3 buckets, enabling their Vantage NewSQL engine to read data directly from MinIO. Current support includes key formats like JSON, CSV, and Parquet, with plans to incorporate AVRO, ORC, and TXT in the future.

Getting Started: Query data stored in object storage

How Teradata Vantage with Native Object Store Decreases Costs

Oracle

Oracle introduced DBMS_CLOUD package first for Autonomous Database in 2019 and then most recently for on-prem installations in 2022. DBMS_CLOUD provides support for working with data in S3-compatible object storage. DBMS_CLOUD can be used both to copy data, but also to create and manage external tables. Note that testing was done against AWS S3.

DuckDB

DuckDB is a free and open source SQL OLAP database. DuckDB operates as an in-memory process and won't persist any data. In order to persist data, you must provide a path or attach database files while the service is running. This super lightweight design makes DuckDB highly optimized for object storage as such has always offered support for the S3 API. DuckDB’s calls the extension for this super straightforward and simple support: httpfs. The httpfs extension supports reading/writing/globbing files.

Apache Druid

Apache Druid is a high performance database that has from its inception been S3-compatible. Druid interacts with object storage in a unique way. Users can ingest from MinIO and while working with the data use the local file system. The data sets users aren’t actively using can be pushed to deep storage in MinIO for storage efficiency.

How to Run Apache Druid and Apache Superset with MinIO

Vertica

Vertica began supporting querying directly from object storage instead of loading data into Vertica in 2022. These external tables can be configured to query various data formats, including Parquet, ORC, plain text, and delimited file formats. External Tables in Vertica have been tested against S3.

Browsing S3 Data Using External Tables

PostgreSQL

PostgreSQL’s concept of foreign data allows users to access data outside PostgreSQL with queries inside PostgreSQL. This data can be accessed through well loved libraries called foreign data wrappers (FDW). While FDW are not officially supported by PostgreSQL, they’ve been around for a long time, first introduced in 2013. As might be expected the use cases for FDW extend well past object storage, but there are wrappers supporting S3-compatible storage access for PostgreSQL. Notably, in 2022 a FDW was developed for Parquet files in s3-compatible storage. This FDW supports MinIO access instead of Amazon S3. PostgreSQL can also be used as an endpoint to publish MinIO bucket notifications.

Internal Docs on Foreign Data Wrappers

Setting up a Development Machine with MLFlow and MinIO

GitHub ParquetS3 Foreign Data Wrapper for PostgresSQL

Greenplum

Greenplum is an open-source data warehousing project built upon PostgreSQL. In 2019 Greenplum began supporting interacting with MinIO through external tables. The process is quite simple, involving granting permissions, defining a path and setting up the external table. There is something to be said about how brief the tutorial is.

Tutorial using Greenplum to Access Minio

YugaByteDB

YugaByteDB is distributed PostgreSQL implementation and has included the PostgreSQL foreign data wrapper extension since 2021. YugaByteDB uses the FDW API as a layer of encapsulation in the regular code-path. Backup and Restore is also a valid MinIO and Yugabtye use case.

Using MinIO for Backup and Restore

MySQL

MySQL external tables are supported by MySQL Server, but more robust support for object storage can be found with HeatWave. Slightly stretching the definition of a database and the category of MySQL instead of Oracle, HeatWave is Oracle’s fully managed MySQL service launched in 2020. HeatWave supports the creation of external tables from object stores manually or through the preferred automated method: HeatWave Lakehouse Auto Parallel Load Procedure. MySQL Server can also be used as an endpoint to publish MinIO bucket notifications.

Lakehouse External Table Syntax

ClickHouse

ClickHouse introduced S3-compatible object storage support using the S3 API in 2020. In addition to the basic import functionality, ClickHouse supports the use of object storage for their MergeTree table engine. MergeTree allows for data to be inserted into a table part by part which can be much more efficient. On the downside, this type of integration means fully replicating the data from object storage inside ClickHouse.

Integrating ClickHouse with MinIO

ClickHouse and S3 Compatible Object Storage

While this list represents a good start, if you are aware of one we missed or one that is in the works, let us know and we will continue to update this survey.

Limitations of Object-Storage Centric Strategies

There are some limitations to object-storage centric strategies. For example, external tables are usually read-only - you cannot perform any data manipulation language (DML) operations like INSERT, UPDATES, or DELETES on them. It usually isn’t possible to push down changes from the database into object storage. However, this design can actually offer benefits for both performance and data quality. It prevents burdening the data infrastructure with multiple copies of the same data and ensures changes are limited to views, avoiding confusion about the true source of truth.

In addition, the tables that use object storage will experience slower query performance than tables with data that is stored or cached natively within the database. The extent of the query latency is a function of many factors - that said, MinIO is remarkably performant, demonstrating its superiority over other object storage providers in certain benchmarks. For example, in a benchmark test that involved loading a dataset containing almost 200 million rows (142 GB) into a database, the MinIO bucket showed a performance improvement of nearly 40% over a competitor. Given that the duration of an initial query depends on the data transfer size, combining MinIO's performance with a database-native caching layer would allow subsequent queries to be quicker. Our experts can help architect a solution that maximizes performance for your infrastructure while making recommendations for future modifications to bring even more power to bear.

Other strategies can be employed against tables created through object storage to improve query performance including the creation of materialized views. More information about these strategies are best pursued in the documentation of the databases themselves.

Other Use Cases for Object Storage with Databases

Obviously, other use cases for object storage with databases include backup and restore. This traditional use case can greatly benefit from a modern approach to object storage. For example, MinIO Jumbo offers an efficient solution for accelerating the backup and restore process. By harnessing the power of parallelization, Jumbo optimizes the speed at which backups are written to object storage. It leverages the entirety of the available bandwidth to swiftly transfer backup files to MinIO. Considering that MinIO is renowned for being one of the fastest object storage solutions available, and Jumbo fully utilizes your network capacity, the only factor limiting the speed of your backups is the performance of your database. Leave the storage to the storage experts, and hold database vendors responsible for performance on their end. Some powerful use cases for MinIO Jumbo include Cassandra and MongoDB.

Another use case for object storage is as sinks for Change Data Capture (CDC). For example, CockroachDB supports storing emitted changefeed messages in object storage. With this use case, we begin to blur the line between database and data lakehouse and from here the use case list could go on and on.

Conclusion

The database isn’t going anywhere in the enterprise - in fact it is getting more and more important. Having said that, modern object storage is effectively enhancing the value of those databases by giving them scale they cannot accommodate natively.

Don’t take our word for it though. See for yourself by checking out the links above and downloading MinIO today. If you have any questions about using MinIO for your specific database, feel free to contact us at hello@min.io or join our supportive Slack community for help and discussions.