Debugging MinIO Installs

MinIO deployments come in all shapes and sizes. We support bare metal installs on any version of Linux, containerized installs on any version of Kubernetes (including Red Hat OpenShift), and installs just about anywhere you can deploy a small, lightweight single binary. But with flexibility comes the inevitability that edge-case issues will require debugging.

In this blog post, we’ll show you how to debug a MinIO install running in Kubernetes, along with some common issues you might encounter during a bare metal installation and how to fix them.

Kubernetes Debugger Pod

There are a few ways to access the MinIO API running inside a Kubernetes cluster. We can use kubectl port-forwarding or set up a Service listening on a NodePort. Both methods let you reach the service from outside the cluster network, but they come with one major downside: you can reach only the Service that the NodePort or port-forward references, and only on whatever port is available rather than the port the application normally uses. For example, with a NodePort you have to access the MinIO API, usually found on port 9000, via a randomly assigned 3xxxx port.

What if I told you there was a better way, and it's not novel? When debugging applications, you want full access to the native run-time environment so you can use various tools to troubleshoot and debug the cluster. One way to do that is to launch a “busybox” style pod that carries all the tools needed to debug the application.

First, launch a Pod into the same namespace as your MinIO install. To do this, create a YAML file called debugger-pod.yaml that defines the Pod.
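As a rough sketch of what such a manifest could look like, the snippet below writes debugger-pod.yaml from the shell. The Pod name `mc`, the label, and the seven-day sleep duration are our assumptions for illustration, and the example assumes the minio/mc image ships a `sleep` binary.

```shell
# Write a minimal debugger Pod manifest to debugger-pod.yaml.
# The Pod name, label, and sleep duration are illustrative assumptions.
cat > debugger-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: mc
  labels:
    app: mc
spec:
  containers:
    - name: mc
      image: minio/mc:latest
      imagePullPolicy: IfNotPresent
      # Override the image's default entrypoint so the container stays alive.
      command: ["sleep", "604800"]
  restartPolicy: Always
EOF
```

Because the manifest does not set a namespace, the Pod lands in whichever namespace you pass to kubectl (or your current default).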

The Pod configuration pulls the image for the MinIO mc utility. To ensure the pod doesn’t just launch and then exit, it runs a sleep command.

Once the YAML is saved, apply the configuration to the Kubernetes namespace where the MinIO cluster is running.

kubectl apply -f debugger-pod.yaml

Once the pod is up and running, open a shell inside it.
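One way to do that is with `kubectl exec`. The helper below is only a sketch: the function name is ours, and the default namespace (`default`) and Pod name (`mc`) are assumptions you should replace with your own values.

```shell
# Open an interactive shell in the debugger Pod.
# $1 = namespace (assumed default: "default"), $2 = Pod name (assumed default: "mc").
debugger_shell() {
  ns="${1:-default}"
  pod="${2:-mc}"
  kubectl exec -it -n "$ns" "$pod" -- sh
}

# Usage (hypothetical namespace):
#   debugger_shell minio-tenant mc
```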

Then, with mc, you can access the MinIO cluster.
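A minimal sketch of that step, run from inside the debugger pod, registers the cluster under an alias and asks it for status. The function name, alias, Service URL, and credentials in the usage line are placeholders we made up; substitute the values for your deployment.

```shell
# Register a MinIO endpoint under an alias, then print server information.
# $1 = alias, $2 = endpoint URL, $3 = access key, $4 = secret key (all placeholders).
connect_minio() {
  mc alias set "$1" "$2" "$3" "$4" && mc admin info "$1"
}

# Usage (hypothetical in-cluster Service name and credentials):
#   connect_minio myminio https://minio.default.svc.cluster.local ACCESS_KEY SECRET_KEY
```

Since the pod sits on the cluster network, the endpoint can be the Service's internal DNS name on the native MinIO port rather than a forwarded or NodePort address.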

Now that we have a debugger pod up and running, you can perform actions on the cluster directly from within the same network. For example, if replication broke because a site went offline or hardware failed, you can resync any objects still pending replication from here.
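As a hedged illustration, assuming the deployment uses site replication and both sites are already registered as mc aliases, a resync could be started and monitored roughly like this. The function names and the aliases in the usage lines are hypothetical; bucket-level replication uses a different command family (`mc replicate`), so check which applies to you.

```shell
# Start a site replication resync from one site to another.
# $1 = alias of the source site, $2 = alias of the site that fell behind.
resync_start() {
  mc admin replicate resync start "$1" "$2"
}

# Check on the progress of a resync between the same two sites.
resync_status() {
  mc admin replicate resync status "$1" "$2"
}

# Usage (hypothetical aliases):
#   resync_start minio1 minio2
#   resync_status minio1 minio2
```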

Another reason to run the debugger pod is to fix file system permissions or invalid group configurations in your pod; you can update them from the debugger pod.

The above debugging method can also be used in bare metal environments. For instance, you can launch a busybox or bastion node with mc installed and follow the same instructions as above.

Debugging Bare Metal

Bare metal Linux installs are the most straightforward. In fact, it takes just a few commands to get MinIO installed and running with SystemD. For details, please see Configuring MinIO with SystemD.

Once in a great while, bare metal installs go awry. Here are some of the (not-so-common) pitfalls that we are asked about in SUBNET or Slack. These pitfalls are not hardware or operating system specific, but they are useful to know in any kind of environment.

File Permission

One of the most common pitfalls is the file permissions of the MinIO binary and the configuration file. If this occurs, when you start MinIO using SystemD you will see the following message, accompanied by a full stack trace:

Assertion failed for MinIO.

This can have a number of causes, so let's go down the list and check each of them.

MinIO Binary: The binary, in this example located at /usr/local/bin/minio, needs to be owned by root:root (user and group, respectively).

MinIO Service User and Group: The MinIO service needs to run under a unique Linux user and group for security purposes. Never run it as the root user. By default we use minio-user for both the user and group names, and the User and Group settings in the SystemD service config file should reflect this.
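To confirm which identity the service runs as, you can pull the `User=` and `Group=` lines out of the unit file. This is a small sketch: the function name is ours and the default path is an assumption, since the unit file location varies by install method.

```shell
# Print the User= and Group= settings from a systemd unit file.
# $1 = path to the unit file (the default below is an assumption; adjust as needed).
service_identity() {
  unit="${1:-/etc/systemd/system/minio.service}"
  grep -E '^(User|Group)=' "$unit"
}

# Usage:
#   service_identity
#   service_identity /usr/lib/systemd/system/minio.service
```

With the defaults described here, both lines should read minio-user.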

MinIO Data Dir: The directory where MinIO data will be stored needs to be owned by minio-user:minio-user or whichever user you decide to run the MinIO service as above.

SystemD and MinIO config: Both config files should be owned by root:root (user and group).
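The ownership rules for the binary, the data directory, and the config files can all be checked the same way. The helper below is a sketch that assumes a `stat` supporting `-c` (GNU coreutils or BusyBox); the function name is ours, and every path in the usage lines except /usr/local/bin/minio is a hypothetical example.

```shell
# Compare a path's owner and group against an expected "user:group" pair.
# Prints the result and returns non-zero on a mismatch (2 if stat itself fails).
check_owner() {
  actual="$(stat -c '%U:%G' "$1")" || return 2
  if [ "$actual" = "$2" ]; then
    echo "OK       $1 ($actual)"
  else
    echo "MISMATCH $1 (found $actual, expected $2)"
    return 1
  fi
}

# Usage (paths other than the binary are assumptions):
#   check_owner /usr/local/bin/minio root:root
#   check_owner /etc/default/minio root:root
#   check_owner /mnt/data minio-user:minio-user
```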

Run as Root: The entire install process should be run as root. You can also try sudo if your user has permissions, but we recommend running as root because the install needs to place files in a number of locations that only the root user can access. Your bash prompt should end with a # and not a $.
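If you would rather not rely on the prompt character, a quick check of the effective user ID does the same job. This is a minimal sketch with a function name of our choosing.

```shell
# Succeed only when the current shell runs as root (effective UID 0).
require_root() {
  if [ "$(id -u)" -ne 0 ]; then
    echo "not running as root; switch to root before installing" >&2
    return 1
  fi
}

# Usage:
#   require_root && echo "ok to proceed with the install"
```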

If none of the above works, the best approach is to remove the app, directories and configs and start a fresh install as the root user.

Port conflict

Another common issue involves deleted files that are still held open by a process, which can cause port conflicts. Even when a service is not running, you may be unable to start a new service on the existing port, or the service that is running may misbehave (such as not allowing you to log in).

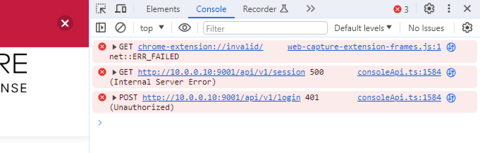

You might see errors such as those below on a MinIO install

- Login Failed

net::ERR_FAILED - 500 Internal Server Error

- 401 Unauthorized

The screenshot above shows an internal server error and an unauthorized error. On the surface it is unclear what caused them, but with a little Linux knowledge we can work out what to look for. Let's take a gander.

There are several ways to debug this issue. First, let's check whether multiple MinIO processes are running on the same node.

In this example, there are two MinIO processes running. Start by killing the process that is older or has been running the longest, in this case process ID 5048.
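A simple way to run that check is to list processes along with their elapsed run time and filter for MinIO. The function name below is ours; the `[m]inio` pattern is a common trick that keeps the grep process itself out of the results.

```shell
# List running MinIO processes with their PID and elapsed time.
# grep exits non-zero when nothing matches, which means no MinIO process was found.
list_minio_processes() {
  ps -eo pid,etime,args | grep -i '[m]inio'
}

# Usage:
#   list_minio_processes
```

The elapsed-time column makes it easy to spot which process has been running the longest.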

kill -9 5048

Sometimes, even after killing the process, the service still won't start or gets hung up because the operating system has reserved a process number and not released it. This can be caused by files that have been deleted but are still being tracked by the operating system. You can find the deleted files with lsof.

lsof -n | grep '(deleted)'
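Since the symptom here is a port conflict, it can also help to see what, if anything, is still listening on the MinIO port. The sketch below uses `ss` from iproute2; the function name is ours, port 9000 is the usual MinIO API port mentioned earlier, and seeing process names for other users' sockets generally requires root.

```shell
# Show TCP listeners on a given port, including the owning process.
# $1 = port number (defaults to 9000, the usual MinIO API port).
port_listeners() {
  ss -ltnp "sport = :${1:-9000}"
}

# Usage:
#   port_listeners
#   port_listeners 9001
```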

Finally, if there are no leftover deleted files or hung processes and everything looks absolutely clean, the last resort is to restart the node. This no-nonsense method shuts everything down and clears any pending files and processes so you can start a fresh install.

SUBNET to the rescue

Although rare, installation edge cases will always exist. MinIO customers know that they have nothing to worry about because they can quickly message our engineers – who have written the code – via the SUBNET portal. We've seen almost everything under the sun, so while the issue might look cryptic or mind boggling at first glance, we'll put our years of expertise debugging installations in many varied environments to work and help you in a jiffy.

If you have any questions on troubleshooting and debugging MinIO installs, be sure to reach out to us on Slack!