We Tried the Dell ObjectScale Community Edition, It Wasn’t Pretty

Dell has generally focused on the filer game, but it dabbles in object storage and has a very old offering, ECS. That makes sense: ECS was a step up from tape and wasn’t suited for dynamic workloads such as HDFS modernization or databases. Needless to say, AI was out of the question.

For a few years now, Dell has been teasing a new “modern” product called ObjectScale. Designed to look like MinIO, it has spent those years in the “coming soon” category. Customers looking to get their hands on it are mostly told to think about 2025, but there is a community edition available for download. We decided to take it for a spin. It didn’t work out well.

Initial hardware requirements

When evaluating any piece of software, it is paramount that the initial setup and installation are as user-friendly as possible. The software should be quick to set up, the available options and configuration should be self-explanatory, and in general you should be able to get up and running with a minimal default configuration.

This is not that.

It is a fairly heavy lift to get to hello world. ObjectScale has significant hardware requirements: four large nodes running OpenShift, each with the specs below. Expect to spend $10-15K on the setup:

- Red Hat OpenShift Container Platform (OCP) versions 4.13.x and 4.14.x

- Minimum 4 nodes with 192 GiB RAM per node

- Each node requires a minimum of:

  - 20 physical CPU cores (recommended).

  - 1 x 500 GB (~465 GiB) unused SSD.

  - 128 GiB RAM for ObjectScale.

  - 5 unused disks for data.
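To put those minimums in perspective, here is a quick back-of-the-envelope sketch that multiplies the per-node figures out to the whole cluster. The numbers are the ones quoted in the list above; the function and variable names are ours, purely for illustration.

```python
# Back-of-the-envelope totals for the minimum ObjectScale footprint.
# All inputs are the per-node minimums quoted in the list above.

NODES = 4
RAM_PER_NODE_GIB = 192
RAM_FOR_OBJECTSCALE_GIB = 128
CORES_PER_NODE = 20
DATA_DISKS_PER_NODE = 5
SSD_BYTES = 500 * 10**9  # "500 GB" in decimal (SI) bytes


def bytes_to_gib(n_bytes: int) -> float:
    """Convert a byte count to binary gibibytes (1 GiB = 2**30 bytes)."""
    return n_bytes / 2**30


def cluster_minimums() -> dict:
    """Multiply the per-node minimums out to the whole cluster."""
    return {
        "total_ram_gib": NODES * RAM_PER_NODE_GIB,
        "ram_reserved_for_objectscale_gib": NODES * RAM_FOR_OBJECTSCALE_GIB,
        "total_cores": NODES * CORES_PER_NODE,
        "total_data_disks": NODES * DATA_DISKS_PER_NODE,
        "ssd_gib_per_node": round(bytes_to_gib(SSD_BYTES), 1),
    }


if __name__ == "__main__":
    for key, value in cluster_minimums().items():
        print(f"{key}: {value}")
```

That works out to 768 GiB of RAM, 80 physical cores, and 20 data disks before a single object has been written.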

On top of the cluster itself, you need a host to download and extract the ObjectScale Software Bundle packages and the other resources required for installing the bundle.

Once the hardware is procured and configured, the following software versions must be installed:

- Helm v3.38 or greater.

- kubectl v1.26 or v1.27.

- Podman v4.3.1.

- PostgreSQL Server in clustered mode for redundancy.

PostgreSQL

PostgreSQL is great. It’s one of the best RDBMSs out there and has stood the test of time. Even today it can use advanced object stores such as MinIO as an external data store for tables. PostgreSQL can even store vector data and other AI/ML metadata so that it can be referenced and fetched later.

But it is a terrible solution for object metadata. Unfortunately, that is where ObjectScale chose to store its metadata. In this deployment, PostgreSQL will not scale past a few tens of petabytes. It becomes the bottleneck. Guess what: the world of AI is bigger than tens of petabytes. Much bigger. Here are just some of the issues:

- PostgreSQL must be fast and accessible at all times. It is an RDBMS that doesn’t have the same architecture as some of the newer databases. While PostgreSQL can scale very well compared to other RDBMSs such as MySQL, scaling it is still not as straightforward as adding a node. You need to think about where the data is stored and how it is sharded, among other requirements.

- Accessible at all times means the PostgreSQL cluster needs to be redundant in case of a node failure. This requires multiple PostgreSQL nodes in primary-secondary mode. If PostgreSQL goes down, the entire storage system goes offline, so the database becomes another point of failure and a piece of tech debt that can take the whole storage infrastructure offline just because of some secondary node lag.

- On top of those challenges, you have to manage it like a database. That means backing it up, doing regular maintenance, and then testing the backups to ensure the data is restorable in the event of a failure. Basically, it creates a giant tech debt where there doesn’t need to be one. All we are looking for is simple, secure, high-performance storage, right?
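To get a feel for why the metadata database becomes the pressure point, here is a rough sizing sketch. Every input is an illustrative assumption on our part (the average object size and the bytes of metadata per object in particular); it is not a measurement of ObjectScale’s actual schema or of PostgreSQL itself.

```python
# Rough sizing of an object-metadata table. Every input below is an
# illustrative assumption, not a measurement of ObjectScale or PostgreSQL.

PIB = 2**50  # bytes in one pebibyte
TIB = 2**40  # bytes in one tebibyte
MIB = 2**20  # bytes in one mebibyte


def metadata_estimate(capacity_pib: int, avg_object_bytes: int, row_bytes: int) -> dict:
    """Estimate object count and metadata volume for a given raw capacity."""
    object_count = (capacity_pib * PIB) // avg_object_bytes
    metadata_bytes = object_count * row_bytes
    return {
        "object_count": object_count,
        "metadata_tib": metadata_bytes / TIB,
    }


if __name__ == "__main__":
    # Hypothetical workload: 30 PiB of data, 1 MiB average object,
    # 512 bytes of metadata (row plus index overhead) per object.
    estimate = metadata_estimate(capacity_pib=30, avg_object_bytes=MIB, row_bytes=512)
    print(f"objects:  {estimate['object_count']:,}")
    print(f"metadata: {estimate['metadata_tib']:.1f} TiB")
```

Under those assumed numbers you are looking at roughly 32 billion rows and about 15 TiB of metadata that has to be sharded, replicated, and backed up like any other database. Smaller objects make it worse, since the row count grows while the capacity stays the same.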

Docker Hub

This is probably one of the most intrusive things I’ve seen as part of this setup. To install its images, ObjectScale needs access to Docker Hub, and full access at that, so it can write its image data. We don’t know why you would make this decision but can speculate:

- They bundle the Docker images that get installed on OpenShift along with the .zip file that was downloaded.

- Because of this, they need some sort of image registry to push these images to so that OpenShift can pull them.

Dell could solve this by hosting the images in its own registry or in one of the many public registries available. This linkage is odd and awkward. What if Docker Hub changes its terms of use?

Community License

When Dell issues new license entitlements to a customer based on a purchase, evaluation, or other event, the entitlements are associated with a unique License Activation Code (LAC). After you install ObjectScale, Dell sends a LAC letter to the customer-provided email address associated with the Dell account. This email contains the necessary information and steps to follow to activate the ObjectScale license.

While this is probably not a big deal in an enterprise environment, for a community edition it doesn’t make sense. It adds hours of waiting. It is almost as if they want you to lose interest (which could be the case ;)). It would be like buying a PS5 and waiting 2-3 hrs for it to update and then another 2 hrs for the game to download. That wouldn’t go well. Unfortunately, that is the experience.

Scalability and Operations

Even in a community edition, there must be some basic way to scale the cluster so you can understand how data behaves when parts of it are stored across multiple nodes. This helps you design your application so that it doesn’t have to handle that itself. But it still needs to be tested.

With Dell ObjectScale there are no clear-cut instructions on how to extend the existing 4-node instance of ObjectScale to more nodes with more drives. A lot of the documentation is behind their own paid support portal, which I believe is only available to Enterprise license customers.

That’s fine, but why have a community edition if you can’t do anything with it?

No Community Support

Speaking of support, there is little to no support for ObjectScale in general, either from Dell or from the community. This could be due to a number of reasons:

- ObjectScale as a product is relatively new, so it’s possible there haven’t been enough installations out there for community support to build up.

- But the most probable reason is that no one is really using the Community Edition. This effectively forces the customer to engage with the sales team at Dell, who can “handhold” any evaluation. That is not a criticism per se, it is just their business model.

- Finally, the community version would appear to be crippleware. For example, a lot of the replication features across multiple sites aren’t supported. They are documented, but not supported, meaning they are only in the Enterprise version. Again, we are not judging, but it raises the question of why even do the Community Edition? There is no community and there isn’t much of an edition either.

MinIO Community Edition

On the other hand, getting the MinIO community edition up and running is very simple. You can use a single VM, a Kubernetes/OpenShift cluster, or just bare metal. Developers can even use Docker to get MinIO working on their development machines. MinIO will run on them all with relative ease. There is no requirement that it be tested on OpenShift or Kubernetes to start with. You just download the binary and get started. Oh, and did we mention it is just a single binary? That is correct. Everything you want to do with the object store is bundled in a single lean binary that comes with a UI for the console as well as a CLI called mc. That is why it has been downloaded more than 7.3 billion times.

For example, most of our developers launch MinIO for the first time directly on their laptop, such as a MacBook Air with 8 GB of memory and 4 CPU cores. These days it’s very difficult to get resources allocated for VMs, let alone dedicated hardware, for running something in community edition. The beauty of MinIO is that rather than hardware being a blocker, you can run a proof of concept on your MacBook Air and later take the same configuration you tested and launch it in a dev environment in a datacenter.

Read that last part again because it is really important. You can go from your laptop to production without changing the code. If you are going to production, you will want AIStor (pricing here). It has additional features, 24/7/365 direct-to-engineer support, and a host of other capabilities. But you can go to production with the open source version (provided you adhere to the terms of the AGPL v3 license). There are some massive deployments that have.
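Here is a minimal sketch of what “without changing the code” can look like in practice, assuming the MinIO Python SDK (the `minio` package) is installed. The environment variable names and the bucket name are hypothetical, chosen only for this example; the defaults point at a MinIO server started locally with its out-of-the-box port and credentials.

```python
import io
import os


def settings_from_env(env=None):
    """Read connection settings; the defaults match a local MinIO on a laptop.

    The OBJECT_STORE_* variable names are hypothetical, picked for this sketch.
    """
    env = os.environ if env is None else env
    return {
        "endpoint": env.get("OBJECT_STORE_ENDPOINT", "localhost:9000"),
        "access_key": env.get("OBJECT_STORE_ACCESS_KEY", "minioadmin"),
        "secret_key": env.get("OBJECT_STORE_SECRET_KEY", "minioadmin"),
        "secure": env.get("OBJECT_STORE_TLS", "false").lower() == "true",
    }


def upload_hello(settings, bucket="poc-bucket"):
    """Create the bucket if needed and upload a small test object."""
    # Imported lazily so the settings helper works without the SDK installed.
    from minio import Minio

    client = Minio(
        endpoint=settings["endpoint"],
        access_key=settings["access_key"],
        secret_key=settings["secret_key"],
        secure=settings["secure"],
    )
    if not client.bucket_exists(bucket_name=bucket):
        client.make_bucket(bucket_name=bucket)

    payload = b"hello world"
    client.put_object(
        bucket_name=bucket,
        object_name="hello.txt",
        data=io.BytesIO(payload),
        length=len(payload),
    )
```

Calling `upload_hello(settings_from_env())` on a laptop talks to the local server. Pointing the same call at a datacenter deployment is a matter of setting the environment variables; the application code stays as it is.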

Overall Thoughts

The concept of ObjectScale is that it is “new” and works in the cloud native world with containers and orchestration. In reality it is “old”; someone on the team even used the term “archaic.” The method of installation certainly fits that description, as does the use of PostgreSQL to store metadata.

At the end of the day I could not get ObjectScale up and running. If you have read any of my other blogs you will know that this is likely not a function of technical limitations on my part. I debugged it for over a week, but the lack of any community support or support from Dell (to be expected) hindered a lot of the exploration. But don’t take our word for it; we encourage you to try for yourself 😄. When you are done you can check out MinIO.

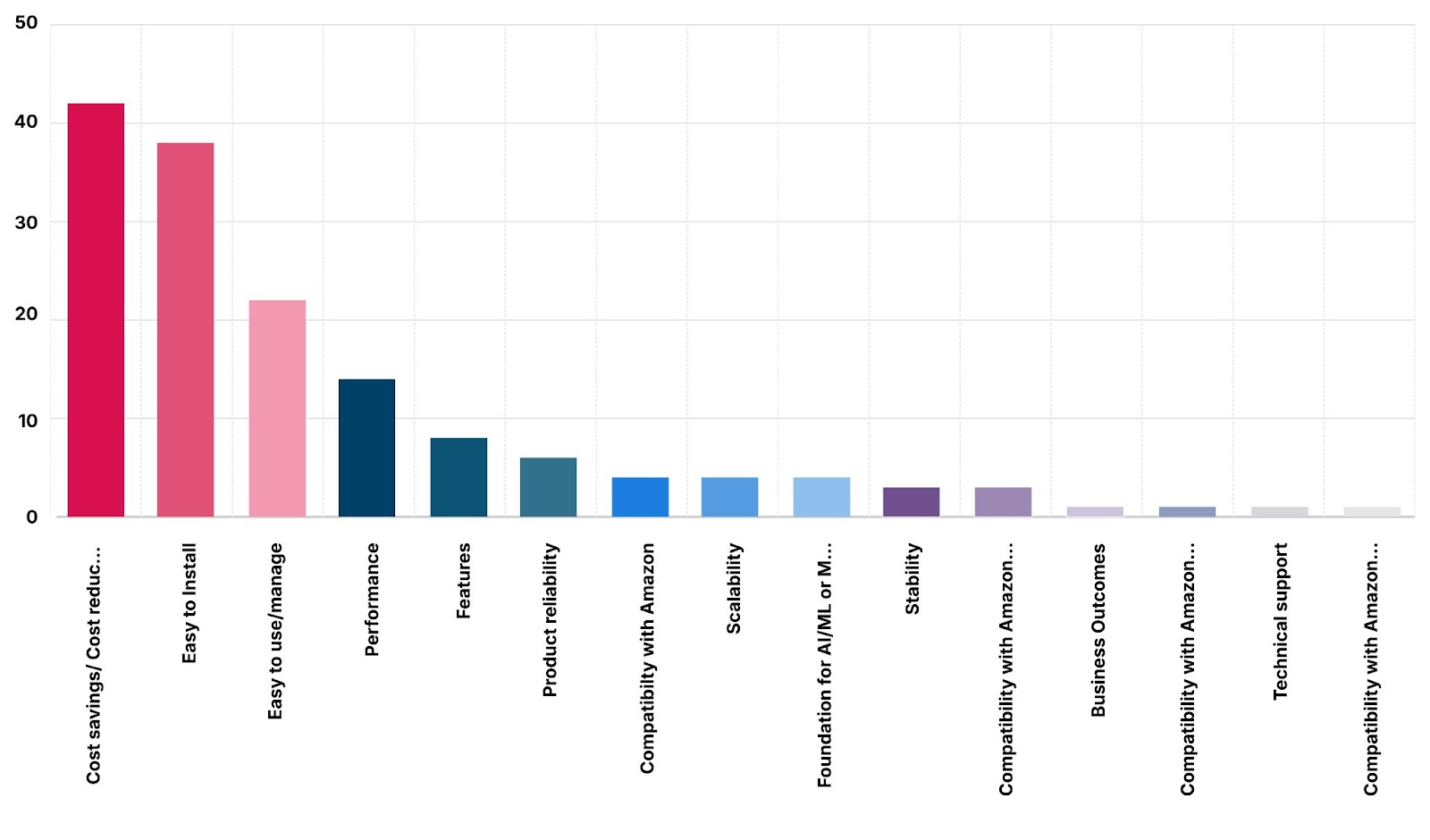

Take a look at this graph from KubeCon and see what folks love most about MinIO: the ease of install and manageability. ObjectScale was the literal opposite of that.

When you have put the two side by side, take advantage of the neat little feature that Dell built for you to migrate all of your data onto MinIO. We have a blog post on it here.

If you have any questions on how to migrate your data from Dell ECS to MinIO, be sure to reach out to us on Slack!