Deploying a MinIO Tenant and Lab Considerations

About two months ago we gave you a sneak peek at some of the projects the MinIO engineering team has been working on, to show what goes on in the background to build MinIO and support our customers. One of these projects is VM Broker. Today we’ll show you how we use our local lab to test some of the key features and functionality in MinIO. The goal of this post is not only to show how we test MinIO in our lab, but also to inspire you to improve the technology and processes in your own lab so that debugging any application becomes much easier.

Today we’ll cover two topics:

- Deploying a MinIO Tenant

- Lab Considerations

Running Operator and Deploying a Tenant

Be sure to check out the previous blog post about the basics of VM Broker. We’ll assume that you’ve read about the features covered in that post and build on them here. This walkthrough shows how to run deploy-tenant.sh, a script from the minio-operator repository.

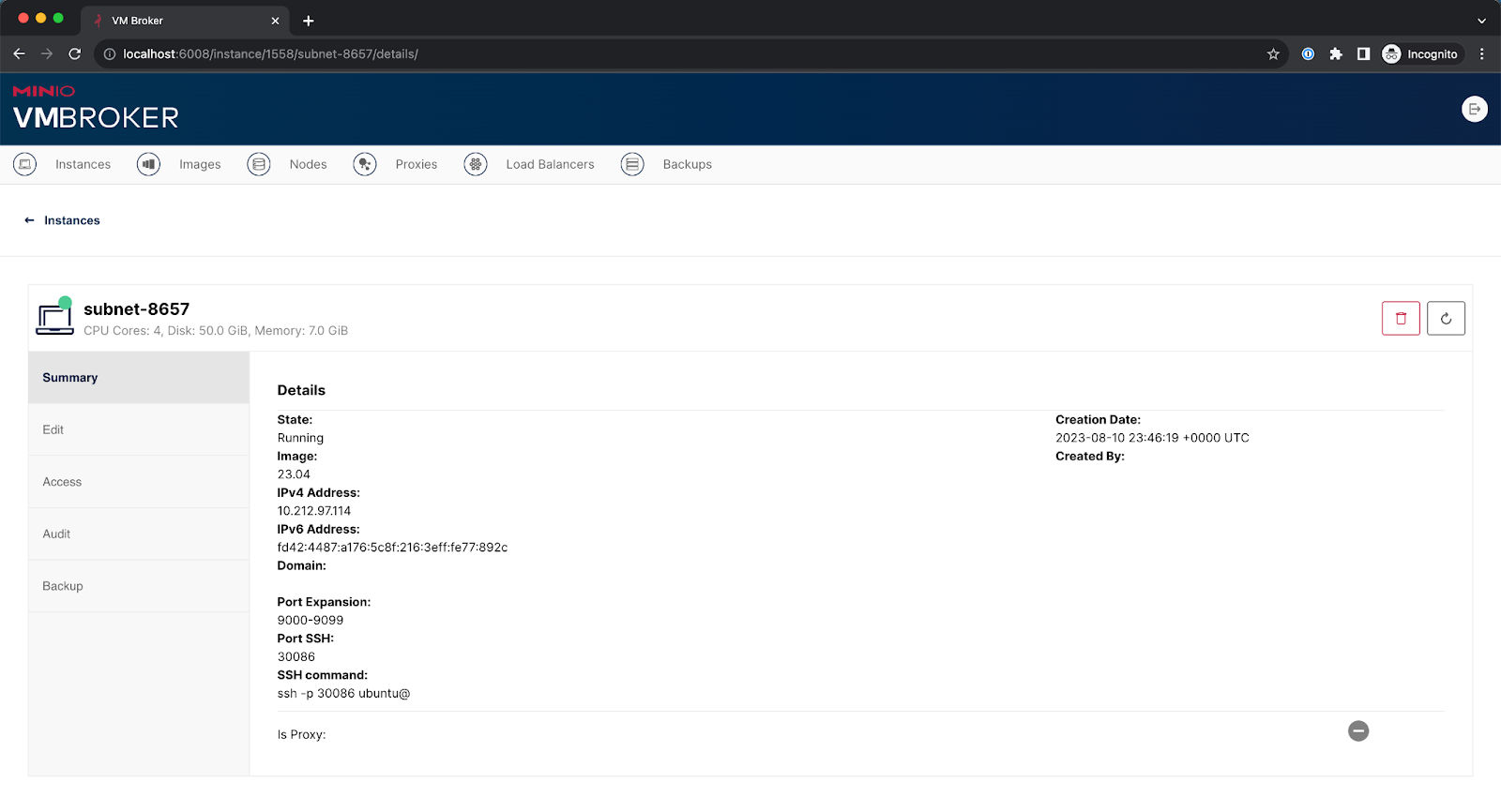

We launch the VM from either an existing backed-up instance or a fresh instance. Once the instance is up, we obtain the ssh command from the Instance > Summary page, access the instance, enable linger, and reboot the node. With linger enabled for a specific user, a user manager is spawned for that user at boot and kept around after logouts. This allows users who are not logged in to run long-running services such as Kubernetes.

ssh -p 30086 ubuntu@10.49.37.22

loginctl enable-linger ubuntu

sudo reboot -h now

Log in again to install and verify k3s.

ssh -p 30086 ubuntu@10.49.37.22
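After logging back in, it can be worth confirming that linger actually took effect before installing anything. The following is a minimal sketch, assuming the ubuntu user from the commands above; linger_enabled is a hypothetical helper name, not part of any MinIO tooling. It relies on loginctl show-user printing Linger=yes or Linger=no for the requested property.

```shell
# Sketch: check whether lingering is enabled for a user.
# linger_enabled is a hypothetical helper name used only for illustration.
linger_enabled() {
  user="$1"
  # Prints "Linger=yes" or "Linger=no"; errors are silenced and treated as "not enabled".
  state="$(loginctl show-user "$user" --property=Linger 2>/dev/null)"
  [ "$state" = "Linger=yes" ]
}
```

For example, running linger_enabled ubuntu && echo "linger is enabled" prints the message only when the setting survived the reboot.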

Install k3s

sudo touch /dev/kmsg

curl -sfL https://get.k3s.io | K3S_KUBECONFIG_MODE="644" sh -s - --snapshotter=fuse-overlayfs

sudo journalctl -u k3s.service

Install Docker / Podman

sudo apt-get update

sudo apt-get install -y podman

sudo apt-get install -y podman-docker

sudo apt-get install -y python3-pip

sudo sed -i "s/# unqualified-search-registries.*/unqualified-search-registries\ =\ [\"docker.io\"]/" /etc/containers/registries.conf

pip3 install podman-compose --user --break-system-packages

Install kind and Go. We install kind in addition to k3s because the deploy-tenant.sh script needs both kubectl and kind to run.

[ $(uname -m) = x86_64 ] && curl -Lo ./kind https://kind.sigs.k8s.io/dl/v0.20.0/kind-linux-amd64

chmod +x ./kind

sudo mv ./kind /usr/local/bin/kind

cd $HOME && mkdir go && cd go && wget https://go.dev/dl/go1.21.1.linux-amd64.tar.gz && sudo rm -rf /usr/local/go && sudo tar -C /usr/local -xzf go1.21.1.linux-amd64.tar.gz

export PATH=$PATH:/usr/local/go/bin

go version

Clone and make minio-operator

git clone https://github.com/minio/operator.git

cd $HOME/operator

make binary

Run deploy-tenant.sh

GITHUB_WORKSPACE=operator TAG=minio/operator:noop CI="true" SCRIPT_DIR=testing $HOME/operator/testing/deploy-tenant.sh

While minio-operator pods are being checked, open another terminal and run:

kubectl patch deployment -n minio-operator minio-operator -p '{"spec":{"template":{"spec":{"containers":[{"name": "minio-operator","image": "localhost/minio/operator:noop","resources":{"requests":{"ephemeral-storage": "0Mi"}}}]}}}}'

kubectl patch deployment -n minio-operator console -p '{"spec":{"template":{"spec":{"containers":[{"name": "console","image":"localhost/minio/operator:noop"}]}}}}'

Validate that the minio-operator pods are running:

kubectl -n minio-operator get pods

When the tenant pods (e.g. tenant myminio in namespace tenant-lite) are being checked, open a new terminal window and run:

kubectl patch tenant -n tenant-lite myminio --type='merge' -p '{"spec":{"env":[{"name": "MINIO_BROWSER_LOGIN_ANIMATION","value": "false"}, {"name": "MINIO_CI_CD","value": "true"}]}}'

Validate that the tenant pods (e.g. in namespace tenant-lite) are running:

kubectl -n tenant-lite get pods

There you have it! In just a few moments we’ve deployed a working Kubernetes cluster along with MinIO so we can quickly and easily test features and debug issues.
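The two validation steps above can also be scripted rather than checked by eye. This is a minimal sketch, not part of deploy-tenant.sh: wait_for_pods is a hypothetical helper, and the 300-second default timeout is an assumption. It uses kubectl wait, which blocks until every pod in the namespace reports the Ready condition; note that kubectl wait returns an error if the namespace has no pods yet.

```shell
# Sketch: block until all pods in a namespace are Ready.
# wait_for_pods is a hypothetical helper; the 300s default timeout is an assumption.
wait_for_pods() {
  ns="$1"
  timeout="${2:-300}"
  kubectl wait --namespace "$ns" --for=condition=Ready pods --all --timeout="${timeout}s"
}
```

For this walkthrough, that would be wait_for_pods minio-operator followed by wait_for_pods tenant-lite, the namespace targeted by the tenant patch command.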

Lab Considerations

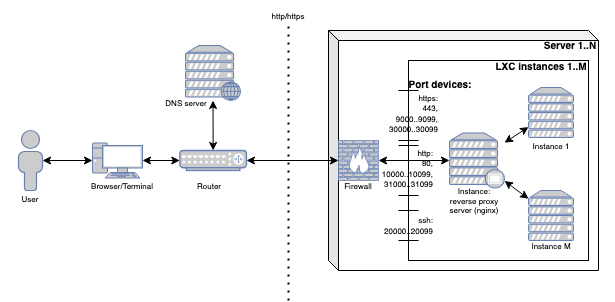

As you set up your MinIO test bed, keep a few considerations in mind. Ensure your TCP ports are labeled and the required ranges are accessible. In our case we ensure the following are usable:

https: 443, 9000..9099, 30000..30099

http: 80, 10000..10099, 31000..31099

NodePorts for Kubernetes over HTTP/HTTPS can be taken from the ranges 30000..30099 and 31000..31099.
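To keep port assignments consistent with this labeling, a small lookup can tell you which scheme a given port belongs to. The sketch below simply encodes the ranges listed above; port_scheme is a hypothetical helper name and expects a numeric port.

```shell
# Sketch: map a TCP port to its label using the lab ranges listed above.
# port_scheme is a hypothetical helper name used only for illustration.
port_scheme() {
  port="$1"
  if [ "$port" -eq 443 ] \
     || { [ "$port" -ge 9000 ] && [ "$port" -le 9099 ]; } \
     || { [ "$port" -ge 30000 ] && [ "$port" -le 30099 ]; }; then
    echo https
  elif [ "$port" -eq 80 ] \
     || { [ "$port" -ge 10000 ] && [ "$port" -le 10099 ]; } \
     || { [ "$port" -ge 31000 ] && [ "$port" -le 31099 ]; }; then
    echo http
  else
    # Outside every range the lab exposes.
    echo unassigned
  fi
}
```

For example, port_scheme 10050 prints http and port_scheme 8080 prints unassigned.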

Linger needs to be enabled so that user services persist across reboots and session logouts. This allows users who are not logged in to run long-running services such as Kubernetes.

loginctl enable-linger ubuntu

Podman will sometimes fail with the following error:

Error: creating container storage: creating read-write layer with ID "f018abe30704d95279f60d7ddf52bfeba241506974b498717b872c0fa1d1df41": Stat /home/ubuntu/.local/share/containers/storage/vfs/dir/a8dfe82502f73a5b521f8b09573c6cabfaedfd5f97384124150c5afd2eac1d3a: no such file or directory

To fix this, run the following command:

podman system prune --all --force && podman rmi --all

Then launch the container again:

docker run -itd \
  -p 10050:9000 \
  -p 10051:9090 \
  --name minio1 \
  -v /mnt/data/minio1:/data \
  -e "MINIO_ROOT_USER=minioadmin" \
  -e "MINIO_ROOT_PASSWORD=minioadmin" \
  quay.io/minio/minio server /data --console-address ":9090"

Another consideration is that podman-compose does not respect the network directive. This means that, for example in the IAM lab, the LDAP server in its container will not be able to communicate with the MinIO server process running in its own container.

To work around this, use the IP address of the host LXC container for communication between the LDAP server and the MinIO server. Before creating the LDAP container, obtain the private IP address of the LXC host:

echo $(ip -4 addr show eth0 | grep -oP '(?<=inet\s)\d+(\.\d+){3}')

Then change the value of the following configuration key so that it points to that IP address:

components."org.keycloak.storage.UserStorageProvider".config.connectionUrl
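As a rough sketch of how the new value might be assembled, the helpers below read the host's IPv4 address and format an LDAP URL from it. Both host_ipv4 and ldap_url are hypothetical names, and the ldap:// scheme with port 389 (the standard LDAP port) is an assumption; use whatever scheme and port your LDAP container actually exposes. The address lookup uses ip with awk and cut instead of grep -oP, which gives the same result for the common single-address case.

```shell
# Sketch: derive a connection URL from the host's private IPv4 address.
# host_ipv4 and ldap_url are hypothetical helper names.
host_ipv4() {
  iface="${1:-eth0}"
  # With -o, each address is on one line, e.g. "2: eth0    inet 10.0.0.5/24 ...";
  # field 4 is the CIDR address, and cut drops the prefix length.
  ip -4 -o addr show "$iface" | awk '{print $4}' | cut -d/ -f1 | head -n 1
}

ldap_url() {
  # Port 389 is an assumed default, not a value taken from the lab setup.
  echo "ldap://$1:${2:-389}"
}
```

Calling ldap_url "$(host_ipv4)" then prints a candidate value for connectionUrl, which you can paste into the configuration.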

Final Thoughts

This is just a sample of what is possible with a lab setup like this. We use these lab configurations to test new features and integrations, and also to help our customers in the SUBNET portal. A setup like this needs only a couple of nodes of commodity hardware. Every day we help folks set up their lab environments, because it is paramount that test clusters run in a sandbox. More importantly, our engineers guide customers hands-on through their production MinIO setups to ensure they are running a scalable, performant, supportable MinIO cluster.

If you would like to build cool labs yourself or have any questions about deploying MinIO to production systems, ask our experts using the live chat at the bottom right of the blog and learn more about our SUBNET experience by emailing us at hello@min.io.