Unbundling the Data Stack: the Disaggregation of Storage and Compute 2.0

There is an excellent post trending with the data and database crowd over on LinkedIn. Written by Theory VC partner Tomasz Tunguz, it speaks to a trend that we have discussed since 2019.

Databases are becoming high-speed query engines and are jettisoning storage. This doesn’t mean storage is unimportant. On the contrary, it is more important than ever. What it means is that high-speed query processing is a core capability, and it competes with storage. Databases want to focus on database stuff and they want storage to focus on storage stuff.

It is disaggregation 2.0. We saw the first wave when HDFS imploded under the weight of its own requirement to have a compute node (high-speed query processing) for every storage node.

Think of the monolithic approach to database management taken by Cloudera, Oracle and others. This approach served a purpose at a time when users were just starting to think about data at scale. Turns out it didn’t scale. Managed service data platforms with tightly coupled storage/compute were quickly recognized as untenable in a data-first world where the requirement for storage greatly outpaced the requirement for compute.

Let’s dig into disaggregation 2.0.

Unbundling the Database

Imagine a library where books (data) and reading desks (compute resources) are kept separate. Readers (queries) can access any book they need without being tied to a specific desk. This setup allows the library to adjust the number of desks based on demand, optimizing both space and resources. Similarly, disaggregating storage and compute in databases allows for flexible and efficient data processing, whereas the traditional tightly coupled design of storage and compute limited flexibility and locked users into specific vendor ecosystems.

The Rise of Open Data Formats and Demands for Modern Datalake Infrastructure

Users are demanding more control and flexibility. If you need first-hand evidence, listen to Snowflake's recent earnings call or read the transcript. This is a legendarily customer-focused organization, and they are racing to adopt open table formats (Iceberg in particular) and forgoing storage revenue in the process because this is what their users want. It cost them billions in short-term market cap. Not millions, billions. It is not just Snowflake; you see it everywhere: SQL Server, Teradata, ClickHouse, Greenplum and more. Enterprises with large volumes of data are increasingly pushing for, and accepting nothing less than:

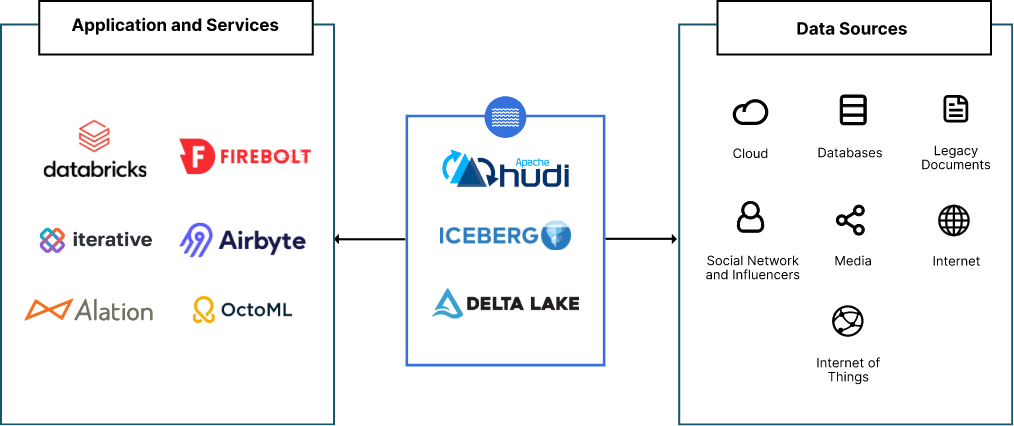

- Open table formats: Formats like Iceberg, Hudi and Delta Lake enable seamless data exchange between different systems. This interoperability empowers businesses to choose the best tools for specific tasks.

- Centralized data storage: Instead of replicating data for various purposes (analytics, AI, etc.) into siloed data marts, central storage solutions, or data lakes, are the architectural blueprint. These data lakes are required to house all of an organization’s data across many different use cases. Instead of data marts, different systems access and process this data as needed from one central location.
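The idea in the two points above can be sketched in a few lines. This is only a toy illustration under loud assumptions: a temporary local directory stands in for the central object store, a CSV file stands in for an open table format such as Iceberg, and the two "engines" (a plain Python scan and an in-memory SQLite query) are hypothetical stand-ins for independent query engines. All names and data are made up for the example.

```python
import csv
import sqlite3
import tempfile
from pathlib import Path

# Hypothetical stand-ins: a local directory plays the central object store,
# and a CSV file plays the role of an open table format.
ROWS = [
    ("2024-01-01", "eu", 120),
    ("2024-01-01", "us", 300),
    ("2024-01-02", "eu", 80),
]


def write_shared_table(store: Path) -> Path:
    """Write the table once to the shared location and return its path."""
    table = store / "orders.csv"
    with table.open("w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["day", "region", "amount"])
        writer.writerows(ROWS)
    return table


def engine_a_total_by_region(table: Path) -> dict[str, int]:
    """Engine A: a plain Python scan over the shared file."""
    totals: dict[str, int] = {}
    with table.open(newline="") as f:
        for row in csv.DictReader(f):
            totals[row["region"]] = totals.get(row["region"], 0) + int(row["amount"])
    return totals


def engine_b_total_by_region(table: Path) -> dict[str, int]:
    """Engine B: a SQL engine that reads the same file at query time."""
    con = sqlite3.connect(":memory:")
    con.execute("CREATE TABLE orders (day TEXT, region TEXT, amount INTEGER)")
    with table.open(newline="") as f:
        reader = csv.reader(f)
        next(reader)  # skip the header row
        con.executemany("INSERT INTO orders VALUES (?, ?, ?)", reader)
    result = dict(con.execute("SELECT region, SUM(amount) FROM orders GROUP BY region"))
    con.close()
    return result


def run_demo() -> tuple[dict[str, int], dict[str, int]]:
    """Write the data once, then let both engines query the same copy."""
    with tempfile.TemporaryDirectory() as tmp:
        table = write_shared_table(Path(tmp))
        return engine_a_total_by_region(table), engine_b_total_by_region(table)
```

Both engines return the same totals from a single stored copy of the data. Neither one owns the file, so either could be swapped out without a migration, which is the interoperability argument in miniature.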

Benefits of Disaggregation 2.0

In disaggregation 1.0, the drivers were primarily cost, simplicity and avoidance of lock-in:

- Cost savings: Separating storage from compute allows both to scale independently. Storage is typically a smaller expense compared to compute, and an architecture that naturally separates the two allows users to right-size both performance and cost.

- Simplified architectures: Disaggregated architectures are more modular and easier to manage. Businesses can select best-of-breed solutions for storage, compute, and various data processing tasks. There is really no need to be hampered by ailing and inappropriate technology with this modern method of managing a data stack.

- Avoiding vendor lock-in: With compute separated from storage, different vendors can compete on factors like price, performance, and features specific to each workload. This fosters a more dynamic and innovative data processing landscape.
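The cost argument comes down to simple sizing arithmetic, sketched below. Every number here is a hypothetical assumption chosen for illustration (node capacity, workload size), and the function names are ours, not any vendor's sizing tool. In the coupled model each node brings a fixed bundle of storage and compute, so the cluster is sized by whichever requirement is larger. In the disaggregated model each tier is sized on its own.

```python
import math
from dataclasses import dataclass

# Hypothetical node shape: each coupled node bundles this much of each resource.
NODE_STORAGE_TB = 10
NODE_COMPUTE_UNITS = 4


@dataclass(frozen=True)
class Workload:
    storage_tb: float      # data that must be stored
    compute_units: float   # query capacity actually needed


def coupled_nodes(w: Workload) -> int:
    """Coupled design: one node count must satisfy both requirements."""
    by_storage = math.ceil(w.storage_tb / NODE_STORAGE_TB)
    by_compute = math.ceil(w.compute_units / NODE_COMPUTE_UNITS)
    return max(by_storage, by_compute)


def disaggregated_nodes(w: Workload) -> tuple[int, int]:
    """Disaggregated design: storage and compute tiers are sized independently."""
    storage_nodes = math.ceil(w.storage_tb / NODE_STORAGE_TB)
    compute_nodes = math.ceil(w.compute_units / NODE_COMPUTE_UNITS)
    return storage_nodes, compute_nodes


def idle_compute_when_coupled(w: Workload) -> float:
    """Compute units bought but unused in the coupled design."""
    return coupled_nodes(w) * NODE_COMPUTE_UNITS - w.compute_units


# A storage-heavy workload: lots of data, modest query needs (made-up figures).
workload = Workload(storage_tb=500, compute_units=8)
```

With these made-up figures, the coupled cluster needs 50 nodes to hold the data and ends up with 192 compute units sitting idle, while the disaggregated design needs 50 storage nodes and only 2 compute nodes. The gap widens as storage requirements outpace compute requirements.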

In disaggregation 2.0, the benefits are performance, extensibility and optionality:

- Performance: For a database, the separation of storage and compute offers a chance to build best-of-breed architectures. For Snowflake, this means investing in becoming the fastest, most performant query engine on the market. There may not be a more competitive market in software than databases. This is a strategic imperative. It also allows their customers to make similar, performance-oriented best-of-breed decisions on storage. Those will obviously be object storage decisions, but which ones (AWS S3, Azure Blob Storage, Google Cloud Storage, MinIO) will depend on what the customer is trying to achieve. We don’t want to rathole on this, but we don’t understand the storage companies that are now claiming to be database companies and want to compete with Snowflake and Databricks under the guise of a data platform. There is an Icarus story developing there.

- Extensibility: We are entering uncharted territory on the AI front and this impacts even giants like Snowflake. They want, and frankly need, the ability to operate on more data and in more ways. Databases, not just Snowflake, need to become more extensible with regard to the value they are providing. That is what their customers want too. Snowflake is a utilization model: the more utilization, the more they get paid. When you separate storage from compute, it frees up a portfolio of options for Snowflake and its customers on the compute side, as they are not burdened by having to co-engineer storage solutions (which they have limited sway over, considering they have been reselling someone else’s object storage). Now Snowflake can push the envelope and push customers to adopt storage that can keep pace. This is good for everyone (and, as you might imagine, really good for the world’s fastest object store).

- Optionality: Finally, disaggregation 2.0 brings increased optionality for customers. Can they continue to use the model in place (Snowflake + cloud object storage)? Yes. Can they adopt new technologies where storage is separate from compute (via external tables)? Yes. This additional optionality is always a net positive and customers get more of it in disaggregation 2.0.

A New Era for Data Management

While data warehouse vendors are familiar with the concept of separation of storage and compute, past implementations were primarily focused on scaling within their own ecosystem. Users are just starting to realize the advantages of thinking like the hyperscalers in this regard. The current movement demands a deeper separation, for all users, where storage becomes a utility, independent of the compute layer.

This paradigm shift empowers businesses to unlock the true potential of their data. This is especially true in the case of AI and ML workloads which require vast amounts of clean, available data to be successful. Open data formats and disaggregated architectures allow businesses to leverage a wider range of tools and technologies to extract maximum value from their data assets.

The future belongs to open, flexible, and cost-effective data architectures. Disaggregation of storage and compute paves the way for a new era of data management, empowering businesses to harness the true potential of their information. Drop us a message to let us know what you build at hello@min.io or in our Slack.