Solving Scale in Security: MinIO Key Management Server

In the realm of robust and dependable storage solutions, MinIO stands out as a persistence layer, offering organizations secure, durable, and scalable storage options. Often entrusted with mission-critical data, MinIO plays a crucial role in ensuring high availability, sometimes on a global scale. The nature of the stored data, ranging from financial and healthcare records to intricate product details and cutting-edge AI/ML models, demands not only resilience but also stringent security measures to guard against unauthorized access and disclosure.

MinIO addresses these security concerns through its core features of fine-grained identity and access management, object locking, and end-to-end data encryption (at rest and on the wire). For safeguarding data at rest, MinIO integrates with various Key Management Systems (KMS), including well-known options like HashiCorp Vault, Thales CipherTrust Manager, AWS KMS, and AWS Secrets Manager, among others. These KMS solutions serve as guardians for encryption keys, storing them securely away from the data they protect.

While the KMS solutions supported by MinIO have proven their mettle and are widely used, some challenges have surfaced, especially in the context of large-scale storage infrastructures handling petabytes or even exabytes of data. The limitations observed over the years, mitigated in part by our KES project, shed light on the intricacies of integrating and maintaining separate KMS solutions.

Managing a general-purpose KMS is complex, as these are often intricate distributed systems. Balancing the demands of security, compliance, and performance is a non-trivial task. Conversely, a high-performance storage infrastructure imposes requirements, particularly around availability, response time, and overall performance, that generic KMS solutions struggle to meet. Even when they do meet them, they may become unreliable, consume excessive resources, or drive up costs.

Therefore, recognizing the unique challenges posed by large, highly available, and performant data infrastructures, we have dedicated efforts to develop a MinIO-specific KMS, tailored to address these specific demands. MinIO KMS is exclusively offered to our AIStor customers.

MinIO KMS is a highly available KMS implementation optimized for large storage infrastructures in general and MinIO specifically. It is engineered with the following key objectives:

- Predictable Behavior: MinIO KMS is designed to be easily managed, providing operators with the ability to comprehend its state intuitively. Due to its simpler design, MinIO KMS is significantly easier to operate than similar solutions that rely on more complex consensus algorithms like Raft or Paxos.

- High Availability and Fault Tolerance: In the dynamic landscape of large-scale systems, network or node outages are inevitable. Taking down a cluster for maintenance is rarely feasible. MinIO KMS ensures uninterrupted availability, even when faced with such disruptions, mitigating cascading effects that can take down the entire storage infrastructure. For common workloads, KMS provides the highest availability possible. Specifically, you could lose all but one node of a cluster and still handle any encryption, decryption or data key generation requests.

- Scalability: While the amount of data usually only increases, the load on a large-scale storage system may vary significantly from time to time. MinIO KMS supports dynamic cluster resizing and nodes can be added or removed at any point without downtime.

- Consistent and High Performance: On large-scale storage infrastructure, data is constantly written and read. The responsiveness of MinIO KMS directly influences the overall efficiency and speed of the storage system. KMS nodes don’t have to coordinate when handling such requests from the storage system. Therefore, the performance of a KMS cluster increases linearly with the number of nodes. Further, MinIO KMS supports request pipelining to handle hundreds of thousands of cryptographic operations per node per second.

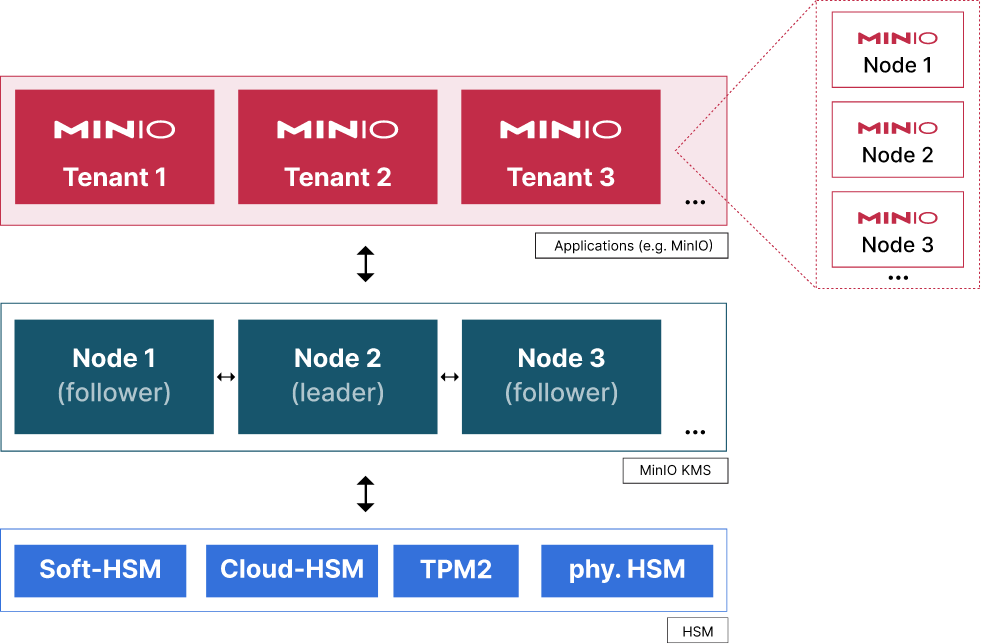

- Multi-Tenancy: Large-scale storage infrastructures are often used by many applications and teams across the entire organization. Isolating teams and groups into their own namespaces is a core requirement. MinIO KMS supports namespacing in the form of enclaves. Each tenant can be assigned its own enclave which is completely independent and isolated from all other enclaves on the KMS cluster.

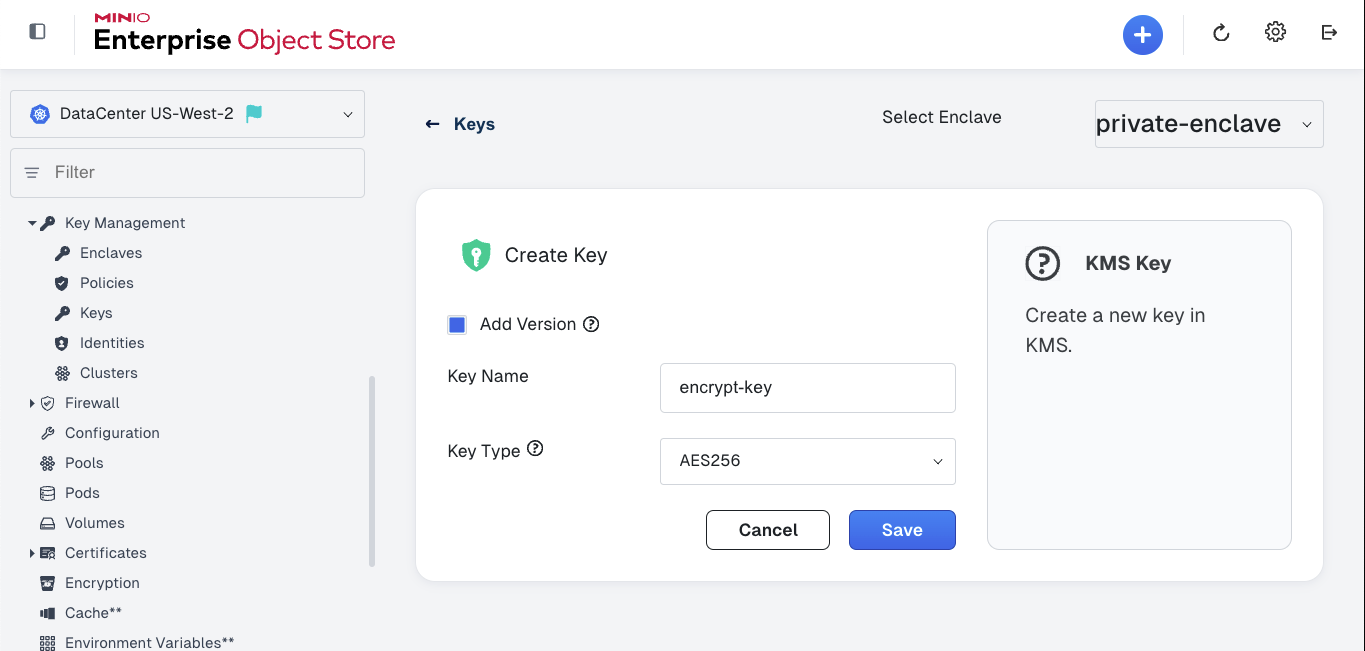

The KMS can be configured from the Global Console or the command line.

Now, let's delve into a high-level overview of how KMS operates, beginning with an examination of its fundamental security model, followed by insights into cluster architecture and management, and concluding with its seamless integration into MinIO.

Related: Enterprise Object Storage Encryption

Security Model

KMS establishes its foundational trust on a (hardware) security module (HSM) that assumes a pivotal role in sealing and unsealing the KMS root encryption key. The HSM's responsibility extends to safeguarding the integrity of KMS by allowing the unsealing of its encrypted on-disk state and facilitating communication among nodes within a KMS cluster.

However, the HSM is rather a concept and not necessarily an actual hardware device. For example, a cloud HSM, like AWS CloudHSM, or a software HSM may be used instead of a physical device. KMS implements its own software HSM, prioritizing user-friendly implementation and operational simplicity.

Setting a single environment variable on each MinIO KMS server is sufficient to get started. For example:

export MINIO_KMS_HSM_KEY=hsm:aes256:vZ0DeGvwlb/KHwIfi8+c7/8ZHjweHKVL0WNrRc3+FxQ=
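The example value looks like a fixed `hsm:aes256:` prefix followed by a base64-encoded 256-bit key. Under that assumption (it is inferred from the example's shape, not from the product documentation), a fresh value for a test setup could be sketched as follows. The variable names `raw_key` and `decoded_len` are illustrative only, and the product documentation remains the reference for the supported way to generate this key.

```shell
# Sketch: build a software HSM key string in the same shape as the example above.
# Assumption: the part after "hsm:aes256:" is a base64-encoded 32-byte (256-bit) key.
raw_key="$(head -c 32 /dev/urandom | base64)"   # 32 random bytes, base64-encoded
MINIO_KMS_HSM_KEY="hsm:aes256:${raw_key}"
export MINIO_KMS_HSM_KEY

# Sanity check: the payload should decode back to exactly 32 bytes.
decoded_len="$(printf '%s' "$raw_key" | base64 --decode | wc -c | tr -d ' ')"
echo "decoded key length: ${decoded_len} bytes"
```

Every server that is meant to join the same cluster needs the identical value, so generate it once and distribute it rather than running this on each node.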

On a single KMS server, all data resides either in memory or encrypted on disk. However, when running a distributed KMS cluster, nodes must exchange messages. To ensure secure inter-node communication, KMS uses mutual TLS, employing keys directly derived from the HSM. Consequently, only nodes with access to the identical HSM, exemplified by sharing the same software HSM key, can establish communication through the inter-node API. In this way, the HSM serves a dual purpose, not only as the guardian of trust for the overall system but also as the bedrock for establishing trust in inter-node communications.

Cluster Management

A KMS cluster is a distributed system that uses single-leader synchronous replication to propagate state changes to all cluster nodes and elects a leader based on votes. It’s conceptually very similar, but not identical, to the Raft consensus protocol. It is still strictly consistent and provides linearizability for all events.

However, it may be easier to understand how KMS works by looking at a few examples. Operating a KMS cluster does not require expertise in cryptography or distributed systems.

Let’s get a little more hands-on and get a feeling for how KMS clusters behave. In the simplest case, a KMS cluster consists of a single node. When a new KMS server starts, it automatically creates a new single-node cluster. For example:

export MINIO_KMS_HSM_KEY=hsm:aes256:vZ0DeGvwlb/KHwIfi8+c7/8ZHjweHKVL0WNrRc3+FxQ=

minkms server --addr :7373 /tmp/kms0

We can use the automatically generated API key to authenticate to the cluster as cluster admin and list all its nodes.

export MINIO_KMS_API_KEY=k1:ThyYZWXUjlSOL-l5hldSgO49oQPWZezVZFU4aiejVoU

minkms ls

Now that we have our first KMS cluster up and running, let’s create a new enclave for the master keys we want to use in the future. As mentioned earlier, enclaves implement namespacing. All keys within one enclave are completely isolated from all others residing in other enclaves.

minkms add-enclave enclave-1

Within the enclave, we can create our first master key and inspect its status:

minkms add-key --enclave enclave-1 key-1

minkms stat-key --enclave enclave-1 key-1
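The same two commands extend naturally to the multi-tenant case described earlier. The following is a minimal sketch that gives each tenant its own enclave and a first master key. The function name `provision_tenants`, the tenant names, and the `<tenant>-key-1` naming scheme are hypothetical, and the sketch assumes that `minkms` exits with a non-zero status when a command fails.

```shell
# Sketch: give each tenant its own enclave and a first master key.
# Tenant names and the "<tenant>-key-1" naming scheme are hypothetical.
# Assumes MINIO_KMS_API_KEY is already exported, as in the steps above,
# and that minkms returns a non-zero exit status on failure.
provision_tenants() {
  for tenant in "$@"; do
    minkms add-enclave "$tenant" || return 1
    minkms add-key --enclave "$tenant" "${tenant}-key-1" || return 1
  done
}

# Example call (uncomment to run against your cluster):
# provision_tenants team-analytics team-billing
```

Because enclaves are isolated from each other, a key name only has to be unique within its own enclave.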

So far we are only operating with a single KMS server. Now let’s expand our cluster from one to three nodes. To do so, we’ll start two more KMS servers, for simplicity on the same machine.

First, the 2nd server:

export MINIO_KMS_HSM_KEY=hsm:aes256:vZ0DeGvwlb/KHwIfi8+c7/8ZHjweHKVL0WNrRc3+FxQ=

minkms server --addr :7374 /tmp/kms1

and then the 3rd:

export MINIO_KMS_HSM_KEY=hsm:aes256:vZ0DeGvwlb/KHwIfi8+c7/8ZHjweHKVL0WNrRc3+FxQ=

minkms server --addr :7375 /tmp/kms2

Now, we can return to our initial single-node KMS cluster and expand it by adding the other two servers. To do this, we use each server’s IP address and port number (or DNS name and port). Here it’s 192.168.188.79, but this may be different on your machine. Use the IP and port your servers print on the console on startup.

minkms add 192.168.188.79:7374

minkms add 192.168.188.79:7375

When we query the status of our KMS cluster again, it will tell us that it consists of three nodes - the initial server and the two we just added.

minkms ls

Now that we’ve joined multiple nodes into one cluster, do all nodes have the same state? For example, is node 1 also able to give us status information about the key key-1 in enclave enclave-1 that we created before? We can find out by asking the node directly. This step again depends on the IP addresses used in your local network. Adjust accordingly.

MINIO_KMS_SERVER=192.168.188.79:7374 minkms stat-key --enclave enclave-1 key-1
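To repeat that check against every node rather than just one, the per-node query can be wrapped in a small loop. This is a sketch only: the function name `stat_key_on_nodes` is hypothetical, and the addresses in the example call are the ones used in this walkthrough.

```shell
# Sketch: ask every node directly for the status of the same key.
# Uses the MINIO_KMS_SERVER variable and stat-key command shown above.
stat_key_on_nodes() {  # usage: stat_key_on_nodes <enclave> <key> <node>...
  enclave="$1"; key="$2"; shift 2
  for node in "$@"; do
    echo "== ${node} =="
    MINIO_KMS_SERVER="$node" minkms stat-key --enclave "$enclave" "$key"
  done
}

# Example call (replace the addresses with the ones your servers print):
# stat_key_on_nodes enclave-1 key-1 192.168.188.79:7373 192.168.188.79:7374 192.168.188.79:7375
```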

When nodes join a cluster, they receive the entire KMS state and are only considered part of the cluster once they’re in sync. Hence, all nodes within a cluster are replicas of each other and hold the same data. There are no partial states and no silent synchronization in the background. This is one reason why KMS clusters are very predictable. They cannot go out of sync.

For example, we could take down two of our three KMS servers. Technically, we can take down any two, but here we stop nodes one and two, the two servers we just added. That way, we don’t have to adjust MINIO_KMS_SERVER to reach the one remaining node. Once done, we can list the cluster nodes again.

minkms ls

As expected, two out of three nodes are not available. However, we can still query the status of key-1 on the remaining node.

minkms stat-key --enclave enclave-1 key-1

You may not be surprised that the server responds with the expected output. However, other KMS implementations, e.g. ones that use Raft as the underlying consensus algorithm, will not be able to respond, at least not without tolerating staleness and giving up strong consistency. A three-node Raft cluster with two nodes being down is usually considered unavailable and cannot serve any request. The key difference is that KMS can remain available for all read requests without weakening its consistency guarantees. Luckily, most KMS operations used by large-scale storage systems don’t require a state change on the KMS cluster, and therefore, can be considered “read-only”. Hence, the KMS fault tolerance model matches what large-scale storage systems expect from a highly available and reliable KMS. This is not a coincidence.
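The difference can be made concrete with a little arithmetic. The sketch below compares the standard majority rule used by Raft-style systems with the read behavior described above, where a single healthy node is enough. The function names are illustrative, and the sketch deliberately says nothing about write operations.

```shell
# Sketch: compare availability rules for a cluster of n nodes with `up` healthy nodes.
# majority_quorum is the standard majority rule used by Raft-style systems.
majority_quorum() { echo $(( $1 / 2 + 1 )); }

# A majority-based cluster serves consistent requests only while a majority is up.
majority_available() {  # usage: majority_available <n> <up>
  [ "$2" -ge "$(majority_quorum "$1")" ]
}

# Per the description above, KMS "read-only" operations need only one healthy node.
kms_reads_available() {  # usage: kms_reads_available <up>
  [ "$1" -ge 1 ]
}

n=3; up=1
majority_available "$n" "$up" && echo "majority-based: available" || echo "majority-based: unavailable"
kms_reads_available "$up" && echo "KMS reads: available" || echo "KMS reads: unavailable"
```

For the three-node cluster with one node left, the majority rule requires two healthy nodes and reports unavailable, while the KMS read path remains available.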

However, KMS cannot and does not circumvent the CAP theorem. Trying to change the state of the remaining node, for example by creating a second key, will fail. The server cannot accept the write operation because its state could then diverge from the rest of the cluster. Therefore, we have to bring the two stopped nodes back, re-establishing write quorum within the cluster, before we can create a new master key.

Summary

This new feature, available to our AIStor customers, shrinks the attack surface while addressing the performance challenges associated with billions of cryptographic keys. MinIO KMS delivers predictable behavior, even at the scale of hundreds of thousands of cryptographic operations per node per second, along with high availability and fault tolerance. Furthermore, KMS supports multi-tenancy, enabling each tenant to be assigned its own enclave which is completely independent and isolated from all other enclaves on the KMS cluster.

If you want to go deeper on any of the AIStor features, drop us a note at hello@min.io.