Exposing MinIO Services in AWS EKS Using Elastic Load Balancers: Overview

MinIO can be deployed in a variety of scenarios to meet your organization’s object storage needs. MinIO provides a software-defined high-performance object storage system across all of the major Kubernetes platforms, including Tanzu, Azure, GCP, OpenShift, and SUSE Rancher.

For MinIO, Amazon Elastic Kubernetes Service (EKS) is a great fit for workloads running in the AWS public cloud. MinIO natively integrates with Amazon EKS, enabling you to operate your own large-scale, multi-tenant object storage as a service or a multi-cloud data lakehouse on AWS infrastructure. MinIO is a complete drop-in replacement for AWS S3 storage-as-a-service and runs inside AWS as well as on-premises and in other clouds (public, private, edge).

One of the challenges of running MinIO on EKS is exposing its Kubernetes services (the object storage API and UI) to users and applications outside the Kubernetes cluster, whether internally within AWS through a Virtual Private Cloud (VPC) or externally to the Internet.

In this guide, we describe the advantages and disadvantages of each method of exposing MinIO services: Classic Load Balancer, NGINX ingress, Application Load Balancer, and Network Load Balancer. A follow-up post will cover the process of exposing MinIO services in greater detail.

The goals of this guide are to describe how to expose MinIO services in EKS and to identify when to choose one kind of ingress or load balancer over another.

Kubernetes-native Ways to Expose Applications

The de facto way to expose applications and services running inside the Kubernetes cluster to both internal and external applications is by using services and ingress.

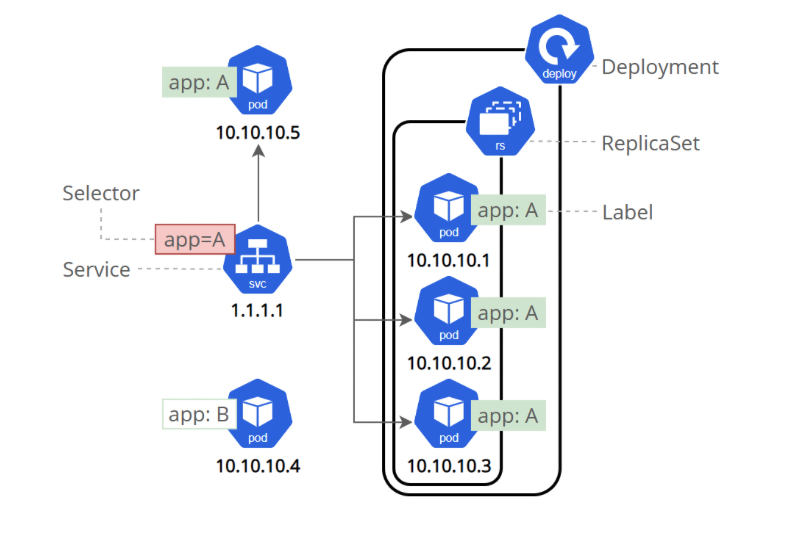

When a Service is created, we designate its type, and the type determines how traffic is routed to the application.

ClusterIP (Default)

The diagram above shows an example of this architecture. ClusterIP is the default Service type and is ideal when traffic stays internal to the Kubernetes cluster. The Service is assigned an internal IP address in Kubernetes (1.1.1.1 in the image above), and any traffic the Service's ClusterIP receives is forwarded to the backing pods on the 10.10.10.x network.
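To make the Service types concrete, here is a minimal sketch in Python that builds a ClusterIP Service manifest as a plain dictionary and prints it as JSON, which `kubectl apply -f` accepts as well as YAML. The Service name `minio`, namespace `tenant-ns`, selector `app: minio`, and ports 80 and 9000 are hypothetical values chosen for illustration. The MinIO Operator creates and manages its own Services for each tenant, so this only shows the shape of the type.

```python
import json


def cluster_ip_service(name: str, namespace: str, selector: dict[str, str],
                       port: int, target_port: int) -> dict:
    """Build a Service of type ClusterIP (the default Service type).

    Traffic sent to the Service's cluster-internal IP on `port` is forwarded
    to the pods matched by `selector` on `target_port`.
    """
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "type": "ClusterIP",  # could be omitted: ClusterIP is the default
            "selector": selector,
            "ports": [{"name": "http-minio", "port": port,
                       "targetPort": target_port, "protocol": "TCP"}],
        },
    }


if __name__ == "__main__":
    # Hypothetical names, labels and ports, for illustration only.
    svc = cluster_ip_service("minio", "tenant-ns", {"app": "minio"}, 80, 9000)
    print(json.dumps(svc, indent=2))
```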

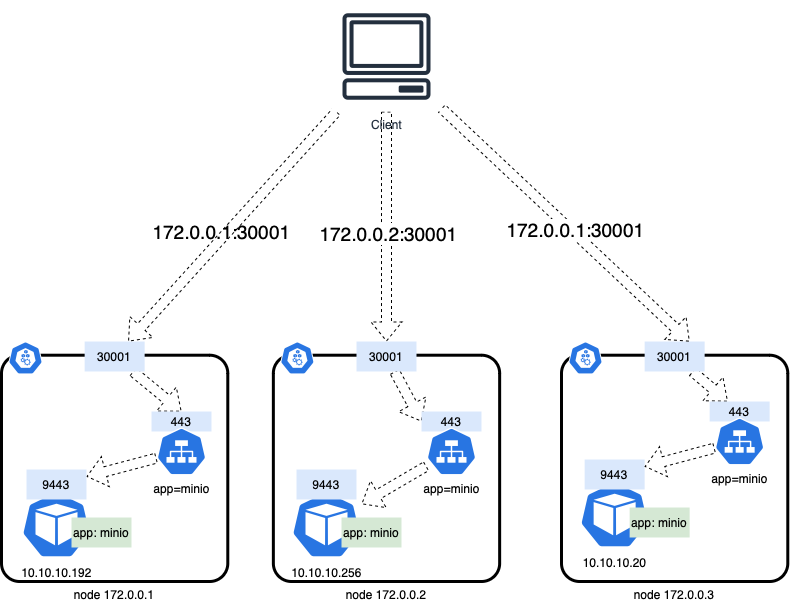

NodePort

With NodePort, the same port (by default in the range 30000-32767) is opened on every cluster node.

Any traffic a node receives on the designated port is forwarded to the service, making this the simplest way to expose services. The drawback is that each port can be used by only one service.

If a node's IP address or the NodePort configuration changes, clients must be updated to use the new address or port.
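A NodePort Service differs mainly in its type and the extra `nodePort` field. The sketch below, again with hypothetical names and labels, checks the port against the default 30000-32767 range (clusters can be configured with a different range) and shows that a client outside the cluster addresses a specific node IP and port, which is why a change to either one forces a client-side update. The node IP `192.0.2.10` is a documentation placeholder.

```python
import json

# Default --service-node-port-range of the Kubernetes API server; a cluster
# may be configured with a different range.
NODE_PORT_MIN, NODE_PORT_MAX = 30000, 32767


def node_port_service(name: str, namespace: str, selector: dict[str, str],
                      port: int, target_port: int, node_port: int) -> dict:
    """Build a NodePort Service, rejecting ports outside the default range."""
    if not NODE_PORT_MIN <= node_port <= NODE_PORT_MAX:
        raise ValueError(f"nodePort {node_port} is outside "
                         f"{NODE_PORT_MIN}-{NODE_PORT_MAX}")
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "type": "NodePort",
            "selector": selector,
            "ports": [{"port": port, "targetPort": target_port,
                       "nodePort": node_port, "protocol": "TCP"}],
        },
    }


def client_endpoint(node_ip: str, node_port: int, scheme: str = "https") -> str:
    """Address an external client must use to reach the Service.

    Both the node IP and the port are baked into the client configuration,
    so changing either one means updating every client.
    """
    return f"{scheme}://{node_ip}:{node_port}"


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    svc = node_port_service("minio-np", "tenant-ns", {"app": "minio"},
                            443, 9000, 30900)
    print(json.dumps(svc, indent=2))
    print(client_endpoint("192.0.2.10", 30900))
```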

LoadBalancer

This method creates a load balancer in the public cloud platform; on AWS EKS it is a Classic Load Balancer by default. LoadBalancer is not available out of the box in bare-metal clusters, but you can use MetalLB to provide it.

A separate load balancer is created for each service, and there are no options for smart routing such as path- or hostname-based routing.
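The sketch below builds Services of type LoadBalancer for a tenant's API and console, assuming hypothetical Service names, ports, and an `app: minio` selector. Because each such Service gets its own load balancer, exposing both endpoints this way means paying for two load balancers, with no path or hostname routing between them.

```python
from typing import Optional


def load_balancer_service(name: str, namespace: str, selector: dict[str, str],
                          port: int, target_port: int,
                          annotations: Optional[dict[str, str]] = None) -> dict:
    """Build a Service of type LoadBalancer.

    The cloud integration provisions a dedicated load balancer for every
    Service of this type (a Classic Load Balancer by default on EKS).
    """
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace,
                     "annotations": dict(annotations or {})},
        "spec": {
            "type": "LoadBalancer",
            "selector": selector,
            "ports": [{"port": port, "targetPort": target_port,
                       "protocol": "TCP"}],
        },
    }


# Hypothetical Services for the tenant's S3 API and console.
services = [
    load_balancer_service("minio", "tenant-ns", {"app": "minio"}, 443, 9000),
    load_balancer_service("my-tenant-console", "tenant-ns", {"app": "minio"},
                          9443, 9443),
]

# One load balancer per Service, so two load balancers here.
print(f"{len(services)} load balancers will be provisioned")
```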

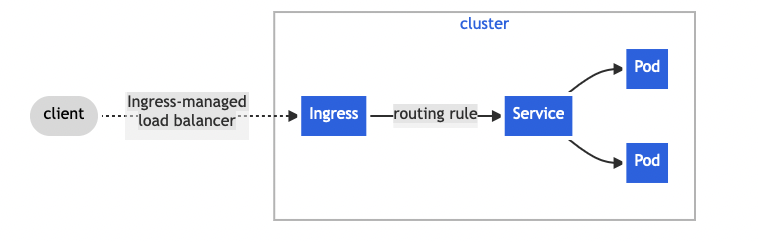

Ingress

Ingress is not a service type. It is a separate Kubernetes-native resource that forwards HTTP traffic from outside the Kubernetes cluster to internal services.

Ingress allows smart routing, which means that a single ingress load balancer can route traffic for multiple services using paths, host names, or a combination of both.

Another advantage of ingress is that we can do TLS termination in the ingress load balancer. However, ingress requires an ingress controller installed in the cluster. Here is a list of ingress controllers available in Kubernetes.

Ingress resources and the services they route to are namespace-scoped: an ingress for services in namespace a must be created in namespace a, and it can only route traffic to services inside namespace a, not to services in namespace b. The natural downside is that a single ingress resource cannot route traffic across namespaces, although the security-conscious might see this as an advantage because it isolates resources. See the ingress rules section of the Kubernetes documentation for more details.
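As a sketch of host-based routing, the function below builds a single `networking.k8s.io/v1` Ingress that sends one host name to the MinIO API Service and another to the tenant console Service, terminating TLS with a certificate stored in a Secret. The host names, Secret name `minio-tls`, tenant name `my-tenant`, ingress class, and ports are hypothetical. Note that an Ingress backend names a Service but has no namespace field, which is how the namespace restriction described above shows up in the manifest.

```python
import json


def minio_ingress(namespace: str, api_host: str, console_host: str,
                  tls_secret: str, ingress_class: str) -> dict:
    """One Ingress routing two host names to two Services.

    Backends are referenced by Service name only; there is no namespace
    field, so both Services must live in the Ingress's own namespace.
    """
    def rule(host: str, service: str, port: int) -> dict:
        return {
            "host": host,
            "http": {"paths": [{
                "path": "/",
                "pathType": "Prefix",
                "backend": {"service": {"name": service,
                                        "port": {"number": port}}},
            }]},
        }

    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": "minio", "namespace": namespace},
        "spec": {
            "ingressClassName": ingress_class,
            # TLS is terminated at the ingress using the cert in this Secret.
            "tls": [{"hosts": [api_host, console_host],
                     "secretName": tls_secret}],
            "rules": [
                rule(api_host, "minio", 443),               # S3 API
                rule(console_host, "my-tenant-console", 9443),  # console
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    ing = minio_ingress("tenant-ns", "minio.example.com",
                        "console.example.com", "minio-tls", "nginx")
    print(json.dumps(ing, indent=2))
```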

MinIO Services and Internal DNS

MinIO is a Kubernetes-native service. This means that applications running inside the Kubernetes cluster can communicate with MinIO seamlessly through the internal DNS names Kubernetes provides for services. Assuming the cluster DNS suffix is cluster.local, the DNS names are:

- minio.{tenant namespace}.svc.cluster.local for the MinIO API endpoint

- {tenant-name}-console.{tenant namespace}.svc.cluster.local for the tenant Console UI

- console.{operator namespace}.svc.cluster.local for the Operator Console UI

Expose MinIO Services Outside of Kubernetes

Access to MinIO services from outside the Kubernetes cluster requires setting up either load balancers or ingress to expose the services. There are several ways to accomplish this on AWS EKS:

- Nginx ingress

- Classic Load Balancer

- Application Load Balancer ingress

- Network Load Balancer

All of them allow access to the MinIO services from outside the Kubernetes cluster. However, the options differ in the features they offer and in their limitations, so you would choose one over another based on the needs of your systems and their architecture.

We’ve put together a handy comparison chart so you can understand your options. All of these will work; it’s up to you to decide which has the options you need for your environment. For a more comprehensive comparison of AWS load balancers and their capabilities, see the AWS Documentation.

The sections below go into greater detail for each option and include important considerations.

Operator Managed

MinIO Operator creates MinIO tenants and maintains their desired state without the need for additional controllers or permissions in your cluster. This is the simplest way to expose the services, and it is supported through the exposeServices and serviceMetadata fields of the Tenant CRD, both of which are described in detail later.
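A minimal sketch of those two Tenant CRD fields, built as a Python dictionary. It assumes the `minio.min.io/v2` Tenant API with an `exposeServices` object (`minio`, `console` booleans) and a `serviceMetadata` object containing `minioServiceAnnotations`; check these against the CRD reference for the Operator version you run. The output is only a fragment: a real Tenant also needs pools, storage, and other required fields. The example annotation `service.beta.kubernetes.io/aws-load-balancer-internal` asks the AWS cloud provider for an internal (VPC-only) load balancer; the tenant and namespace names are hypothetical.

```python
import json


def tenant_exposure_fragment(name: str, namespace: str, expose_api: bool,
                             expose_console: bool,
                             api_annotations: dict[str, str]) -> dict:
    """Service-exposure part of a MinIO Tenant (assumed field names).

    Merge this into a complete Tenant spec; on its own it is not a valid
    Tenant because pools and other required fields are missing.
    """
    return {
        "apiVersion": "minio.min.io/v2",
        "kind": "Tenant",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Ask the Operator to expose the API and/or console Services.
            "exposeServices": {"minio": expose_api, "console": expose_console},
            # Extra metadata the Operator applies to the API Service it creates.
            "serviceMetadata": {"minioServiceAnnotations": api_annotations},
        },
    }


if __name__ == "__main__":
    fragment = tenant_exposure_fragment(
        "my-tenant", "tenant-ns", expose_api=True, expose_console=False,
        # Request an internal load balancer reachable only inside the VPC.
        api_annotations={
            "service.beta.kubernetes.io/aws-load-balancer-internal": "true"},
    )
    print(json.dumps(fragment, indent=2))
```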

Deployable via Kubernetes Manifest

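This criterion indicates whether load balancers or ingress can be created through Kubernetes manifests. Defining infrastructure and service configuration in manifests that Kubernetes understands and manages simplifies operations and is a best practice.

Each way of exposing services relies on a different handler in Kubernetes: Classic Load Balancers are provisioned by the AWS cloud provider integration for Services of type LoadBalancer, Nginx ingress by the Nginx ingress controller, and Application Load Balancers by the AWS Load Balancer Controller, which can also provision Network Load Balancers.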

Support for HTTPS and TLS termination

The MinIO services are HTTP services, and as a best practice HTTPS should be used to encrypt data in transit. For this reason, you should run MinIO with TLS, and the load balancers used to expose the MinIO services should also have a TLS certificate to guarantee end-to-end encryption of data in transit.

All of the load balancers support this in some way. However, some approaches have nuances around self-signed certificates, private CA-issued certificates, and AWS ACM-issued certificates. We will provide details about specific load balancing and ingress options in follow-up posts.

Comparison of Load Balancer and Ingress Methods

Below, we’ve prepared a handy list of features and advantages of exposing MinIO services in one or another way.

Use Classic Load Balancer when you:

- Need the lowest possible dependency on additional resources.

- Plan to terminate TLS in the MinIO service itself. This is the simplest option when you have the private keys of your custom domains’ certificates to upload to MinIO.

- Want to terminate TLS only at the load balancer.

- Don’t need end-to-end encryption and can afford unencrypted HTTP traffic inside the Kubernetes cluster.

- See Expose MinIO Tenant Services on EKS for detailed instructions.
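The two TLS choices above map onto annotations that the in-tree AWS cloud provider reads when it creates a Classic Load Balancer. The sketch below returns either TCP pass-through, so MinIO terminates TLS itself, or TLS termination at the load balancer with plain HTTP to the pods. The certificate ARN in the usage lines is a placeholder; in a tenant, such annotations could be supplied through `serviceMetadata` rather than by editing the Service directly.

```python
from typing import Optional

# Annotation keys read by the in-tree AWS cloud provider for Classic LBs.
SSL_CERT = "service.beta.kubernetes.io/aws-load-balancer-ssl-cert"
SSL_PORTS = "service.beta.kubernetes.io/aws-load-balancer-ssl-ports"
BACKEND_PROTOCOL = "service.beta.kubernetes.io/aws-load-balancer-backend-protocol"


def classic_lb_annotations(cert_arn: Optional[str]) -> dict[str, str]:
    """Annotations for the two TLS options of a Classic Load Balancer.

    cert_arn is None: the load balancer forwards raw TCP and MinIO
    terminates TLS with its own certificate (end-to-end encryption).
    cert_arn is set: the load balancer terminates TLS on port 443 and sends
    plain HTTP into the cluster (no encryption inside the cluster).
    """
    if cert_arn is None:
        return {BACKEND_PROTOCOL: "tcp"}
    return {SSL_CERT: cert_arn, SSL_PORTS: "443", BACKEND_PROTOCOL: "http"}


if __name__ == "__main__":
    print(classic_lb_annotations(None))
    # Placeholder ARN for illustration only.
    print(classic_lb_annotations(
        "arn:aws:acm:us-east-1:123456789012:certificate/example"))
```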

Use Nginx Ingress when you:

- Want to terminate TLS in the Nginx ingress and have the private key of the custom domain certificate available to store in a Kubernetes Secret for Nginx to use.

- Want to set up complex routing rules.

- Want to hide, add or replace custom headers.

- Need some other Nginx features.

- Official documentation: Set up Nginx proxy with MinIO Server.

- Blog Post: How to Use Nginx, LetsEncrypt and Certbot for Secure Access to MinIO.
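For the Nginx option, the certificate and private key live in a Secret of type `kubernetes.io/tls`, whose `tls.crt` and `tls.key` values are base64-encoded; `kubectl create secret tls` produces the same kind of object. The sketch below builds that Secret and lists two ingress-nginx annotations that are often useful in front of MinIO: re-encrypting to an HTTPS backend for end-to-end TLS, and lifting the request body size limit so large uploads are not rejected. The Secret name, namespace, and placeholder PEM bytes are hypothetical.

```python
import base64


def tls_secret(name: str, namespace: str, cert_pem: bytes,
               key_pem: bytes) -> dict:
    """A kubernetes.io/tls Secret; values under `data` are base64-encoded."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "type": "kubernetes.io/tls",
        "metadata": {"name": name, "namespace": namespace},
        "data": {
            "tls.crt": base64.b64encode(cert_pem).decode("ascii"),
            "tls.key": base64.b64encode(key_pem).decode("ascii"),
        },
    }


# ingress-nginx annotations often set on an Ingress in front of MinIO.
NGINX_MINIO_ANNOTATIONS = {
    # Re-encrypt to MinIO, which itself runs with TLS (end-to-end TLS).
    "nginx.ingress.kubernetes.io/backend-protocol": "HTTPS",
    # "0" disables the request body size check so large uploads pass.
    "nginx.ingress.kubernetes.io/proxy-body-size": "0",
}


if __name__ == "__main__":
    # Placeholder bytes; use real PEM-encoded certificate and key files.
    secret = tls_secret("minio-tls", "tenant-ns", b"CERT", b"KEY")
    print(secret["metadata"]["name"], sorted(secret["data"]))
```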

Use Application Load Balancer Ingress when you:

- Want to use certificates issued by AWS Certificate Manager (ACM) and terminate TLS at the load balancer. Note that because ACM manages these certificates, you cannot download their private keys to, for example, install them in an Nginx ingress.

- Want to reuse load balancers to route to more than one service. AWS ALB can route to several services by path and host name, so you can use the same load balancer for more than one service (for example, the MinIO Console and API) and save on infrastructure costs without compromising performance.

- Want finer control over load balancer settings such as subnets, security groups, health checks, and more. ALB Ingress allows extensive customization through annotations.

- See Expose MinIO Tenant Services on EKS for detailed instructions.
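The ALB settings listed above are expressed as annotations that the AWS Load Balancer Controller reads from an Ingress whose `ingressClassName` is `alb`. The sketch below assembles a plausible set for MinIO: an ACM certificate for TLS termination, an HTTPS listener, HTTPS to the backends, IP targets, and an optional `group.name` so several Ingresses share one ALB. The ARN and group name are placeholders, and the annotation list should be checked against your controller version; subnet, security group, and health check settings use further `alb.ingress.kubernetes.io/*` annotations not shown here.

```python
import json
from typing import Optional


def alb_ingress_annotations(cert_arn: str, scheme: str = "internet-facing",
                            group_name: Optional[str] = None) -> dict[str, str]:
    """Ingress annotations for the AWS Load Balancer Controller (ALB)."""
    if scheme not in ("internet-facing", "internal"):
        raise ValueError("scheme must be 'internet-facing' or 'internal'")
    annotations = {
        "alb.ingress.kubernetes.io/scheme": scheme,
        # Register pod IPs directly as ALB targets.
        "alb.ingress.kubernetes.io/target-type": "ip",
        # ACM certificate used to terminate TLS at the ALB.
        "alb.ingress.kubernetes.io/certificate-arn": cert_arn,
        "alb.ingress.kubernetes.io/listen-ports": json.dumps([{"HTTPS": 443}]),
        # Re-encrypt from the ALB to MinIO, which also runs with TLS.
        "alb.ingress.kubernetes.io/backend-protocol": "HTTPS",
    }
    if group_name:
        # Ingresses that share a group name are merged into a single ALB.
        annotations["alb.ingress.kubernetes.io/group.name"] = group_name
    return annotations


if __name__ == "__main__":
    # Placeholder ARN and group name for illustration only.
    print(json.dumps(alb_ingress_annotations(
        "arn:aws:acm:us-east-1:123456789012:certificate/example",
        group_name="minio"), indent=2))
```

Because an ALB does not validate the certificates its targets present, MinIO can keep a self-signed or private CA certificate behind it while clients see the ACM certificate.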

Use Network Load Balancer when you need the highest possible performance:

- NLB is a Layer 4 proxy, which means that it does less processing and adds minimal latency before forwarding traffic to the backend services.

- NLB is designed to handle huge, sudden spikes of traffic without pre-warming. By comparison, ALB may need time to scale up, or you might need to contact AWS about an expected spike so that they can pre-warm the load balancer for you. Note that this applies to very large spikes, such as Super Bowl or Black Friday event traffic.

- If your solution needs a static IP address, NLB provides one static IP per Availability Zone and is compatible with Elastic IPs. This is not possible with ALB.

- NLB supports custom and advanced network topologies.

- See Expose MinIO Tenant Services on EKS for detailed instructions.
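For the NLB option, a Service of type LoadBalancer handled by the AWS Load Balancer Controller takes annotations such as the ones below. The sketch targets pod IPs, places the NLB in explicit subnets, and optionally pins one Elastic IP per subnet; the controller expects the number of allocation IDs to match the number of subnets and supports Elastic IPs only for internet-facing NLBs. Subnet and allocation IDs are placeholders. With plain TCP listeners the NLB passes TLS through, so MinIO terminates it and encryption stays end-to-end.

```python
from typing import Optional


def nlb_annotations(subnets: list[str],
                    eip_allocations: Optional[list[str]] = None) -> dict[str, str]:
    """Service annotations for an internet-facing NLB (AWS LB Controller)."""
    if not subnets:
        raise ValueError("at least one subnet is required")
    eips = eip_allocations or []
    if eips and len(eips) != len(subnets):
        # One Elastic IP per subnet, and therefore per Availability Zone.
        raise ValueError("need exactly one Elastic IP allocation per subnet")
    annotations = {
        # Hand the Service to the AWS Load Balancer Controller.
        "service.beta.kubernetes.io/aws-load-balancer-type": "external",
        # Register pod IPs directly as NLB targets.
        "service.beta.kubernetes.io/aws-load-balancer-nlb-target-type": "ip",
        "service.beta.kubernetes.io/aws-load-balancer-scheme": "internet-facing",
        "service.beta.kubernetes.io/aws-load-balancer-subnets": ",".join(subnets),
    }
    if eips:
        annotations[
            "service.beta.kubernetes.io/aws-load-balancer-eip-allocations"
        ] = ",".join(eips)
    return annotations


if __name__ == "__main__":
    # Placeholder subnet and Elastic IP allocation IDs.
    print(nlb_annotations(["subnet-aaa", "subnet-bbb"],
                          ["eipalloc-111", "eipalloc-222"]))
```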

Conclusion

MinIO is already running on millions of nodes across the public cloud, with AWS hosting the greatest number of deployments. We make it easy for you with our managed application in the AWS Marketplace, which can be deployed with a few clicks.

Whether you’re running on EKS or another flavor of Kubernetes, hosted or not, you are likely to want to expose MinIO to services and applications running outside of the Kubernetes cluster running MinIO. We’ve put together this two-part guide to exposing MinIO services using load balancers and ingress.

Questions or comments? Reach out to us on our Slack community.