Expose MinIO Tenant Services on EKS using Elastic Load Balancers: Instructions

This blog post is part two in a series about exposing MinIO tenant services to applications outside your Amazon EKS cluster. The first post, Exposing MinIO Services in AWS EKS Using Elastic Load Balancers, provided an overview and comparison of four methods for doing so.

Software-defined MinIO provides distributed high-performance object storage for bare metal and all major Kubernetes platforms, such as Tanzu, Azure, GCP, OpenShift, and SUSE Rancher. MinIO natively integrates with the Amazon EKS service, enabling you to leverage the most popular cloud with the world’s fastest object storage. MinIO is a complete drop-in replacement for AWS S3 that runs on EKS as well as on-premises and/or in other clouds. Customers use MinIO for a wide range of purposes, such as providing multi-tenant object storage as a service, building a multi-application data lakehouse, or running an AI/ML processing pipeline.

To make the most of MinIO on EKS, you’ll want to expose its Kubernetes services (UI and API) to users and applications outside the cluster. That can be internal to AWS, within a Virtual Private Cloud (VPC), or external, on the Internet.

This blog post will walk you through three options: Classic Load Balancer, Application Load Balancer and Network Load Balancer. We’ll show you how to deploy each one on EKS, including a discussion of its architecture and the pros and cons of each approach.

Exposing MinIO Services with Classic Load Balancer

To expose a service using a Classic Load Balancer on EKS, all you need to do is set the service type to LoadBalancer. The Tenant CRD provides the exposeServices property, which tells the Operator to create these services on your behalf.

First, we need an EKS cluster, the MinIO Operator and a MinIO tenant. Please follow the instructions in Deploy MinIO Through EKS.

Then, in the tenant manifest, we set the exposeServices fields as in the example below:
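Below is a minimal sketch of the relevant part of the tenant manifest. It assumes the minio.min.io/v2 Tenant API. The tenant name and namespace are hypothetical, and unrelated fields such as pools are omitted:

```yaml
# Sketch only: fields unrelated to service exposure are omitted.
apiVersion: minio.min.io/v2
kind: Tenant
metadata:
  name: tenant-1          # hypothetical tenant name
  namespace: tenant-1     # hypothetical namespace
spec:
  # Ask the MinIO Operator to create the MinIO (S3 API) service and the
  # MinIO Console service as type LoadBalancer instead of ClusterIP.
  exposeServices:
    minio: true
    console: true
  # pools, requestAutoCert, etc. omitted
```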

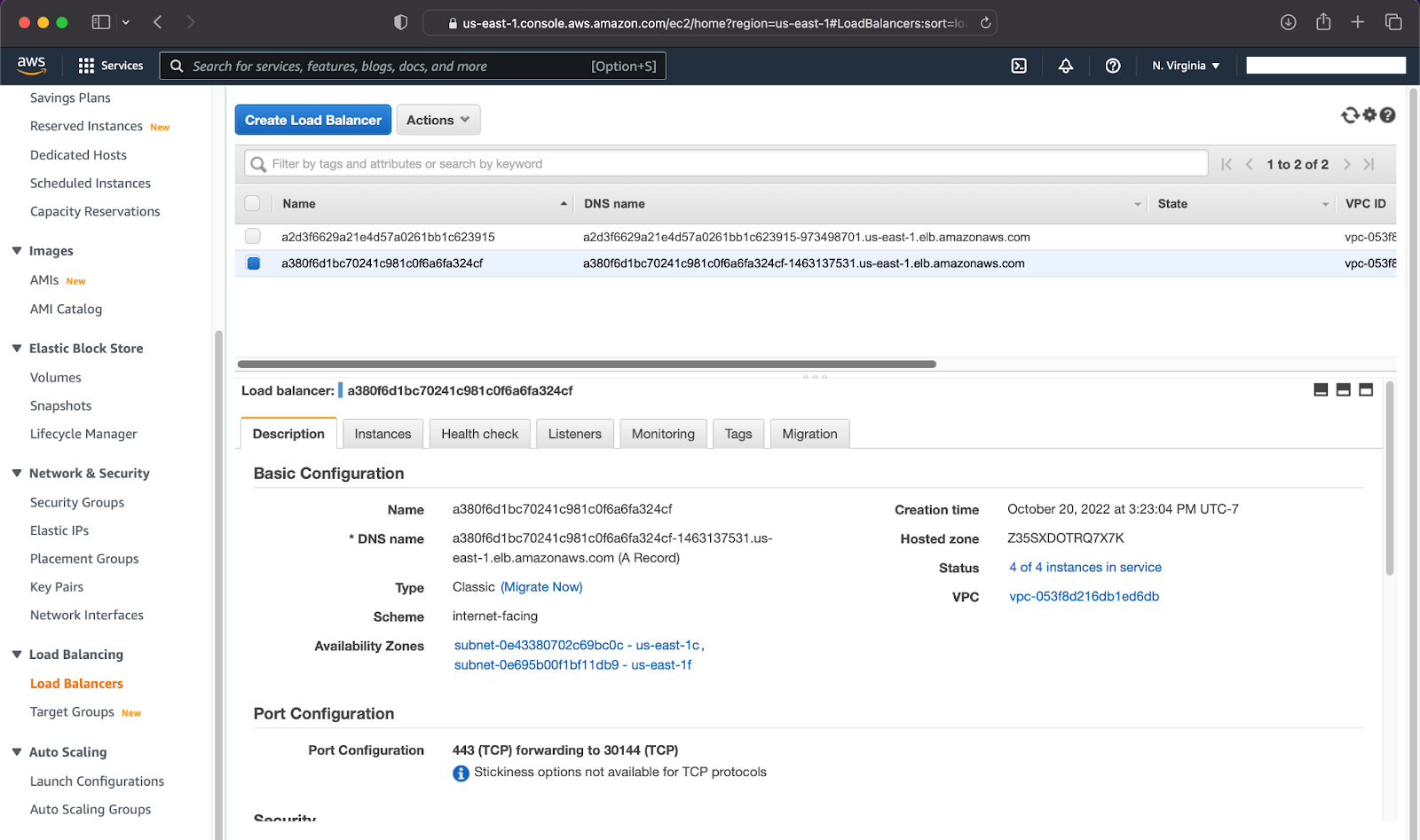

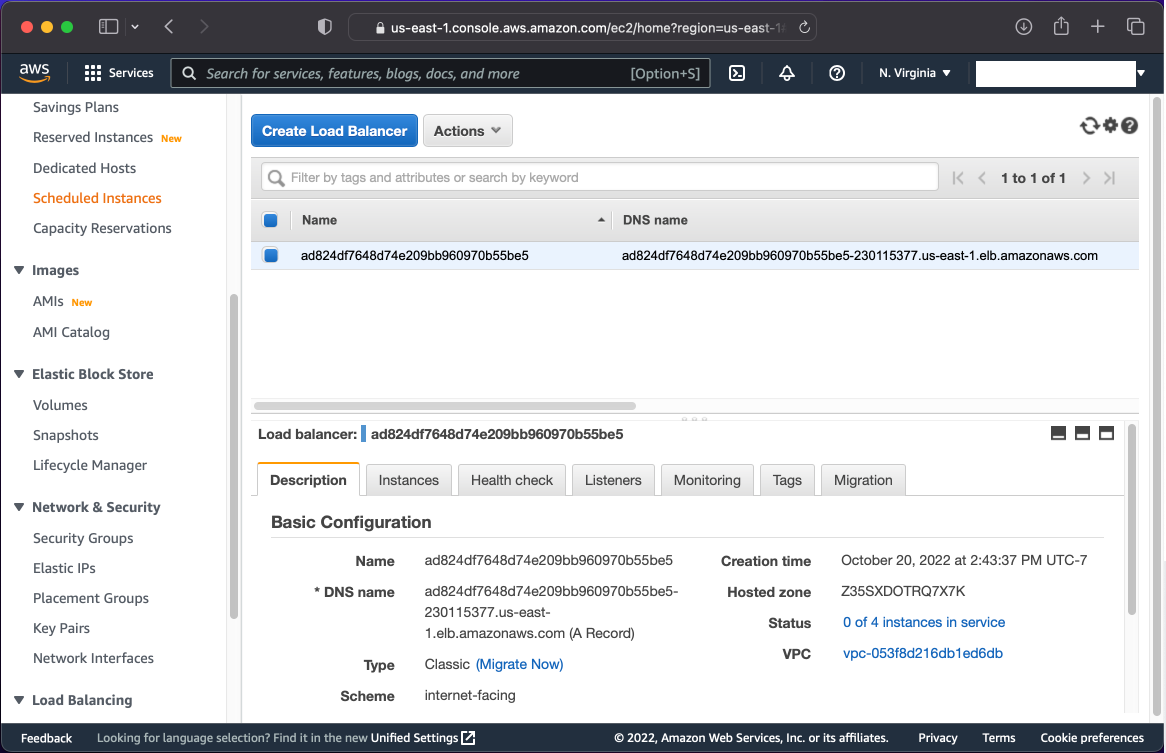

This sets the type of both the MinIO Console and MinIO services to LoadBalancer. EKS then creates two Classic Load Balancers, one for the MinIO Console service and another for the MinIO Server service, as shown in the image below:

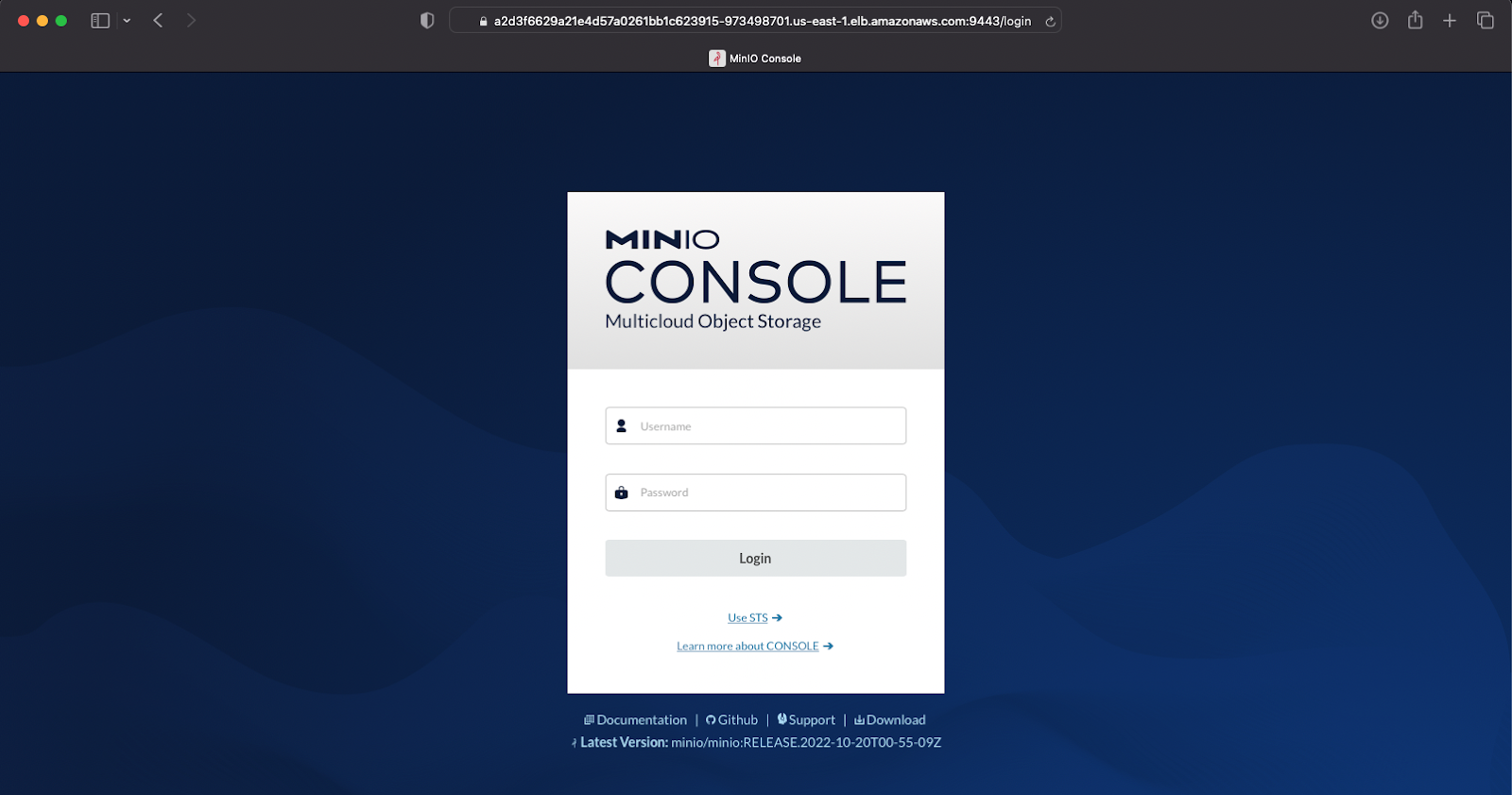

Now you can browse the MinIO Console on port 9443 (HTTPS) and access the MinIO API on port 443 (HTTPS):

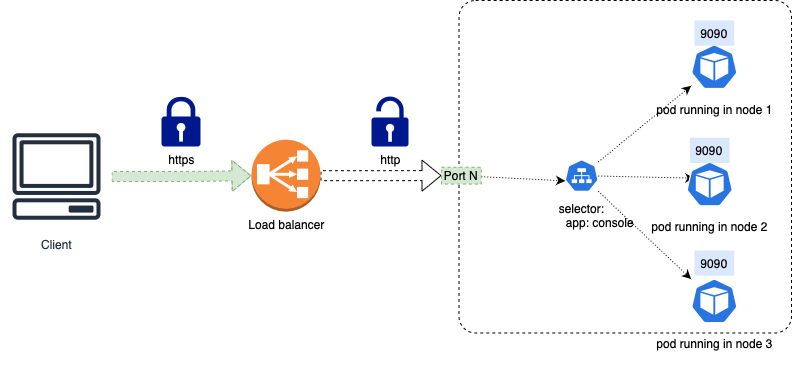

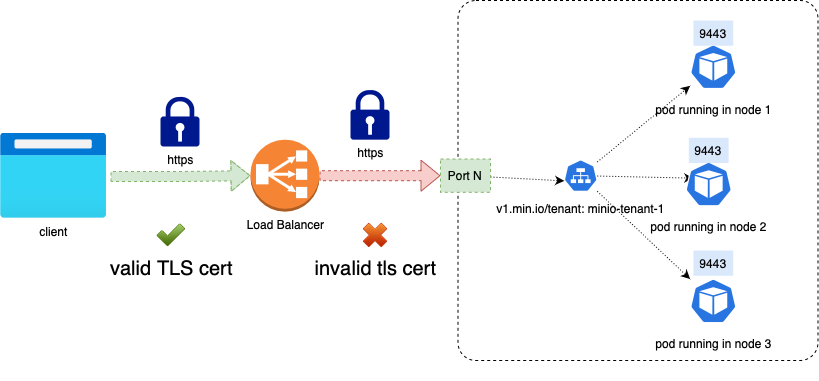

Traffic forwarding

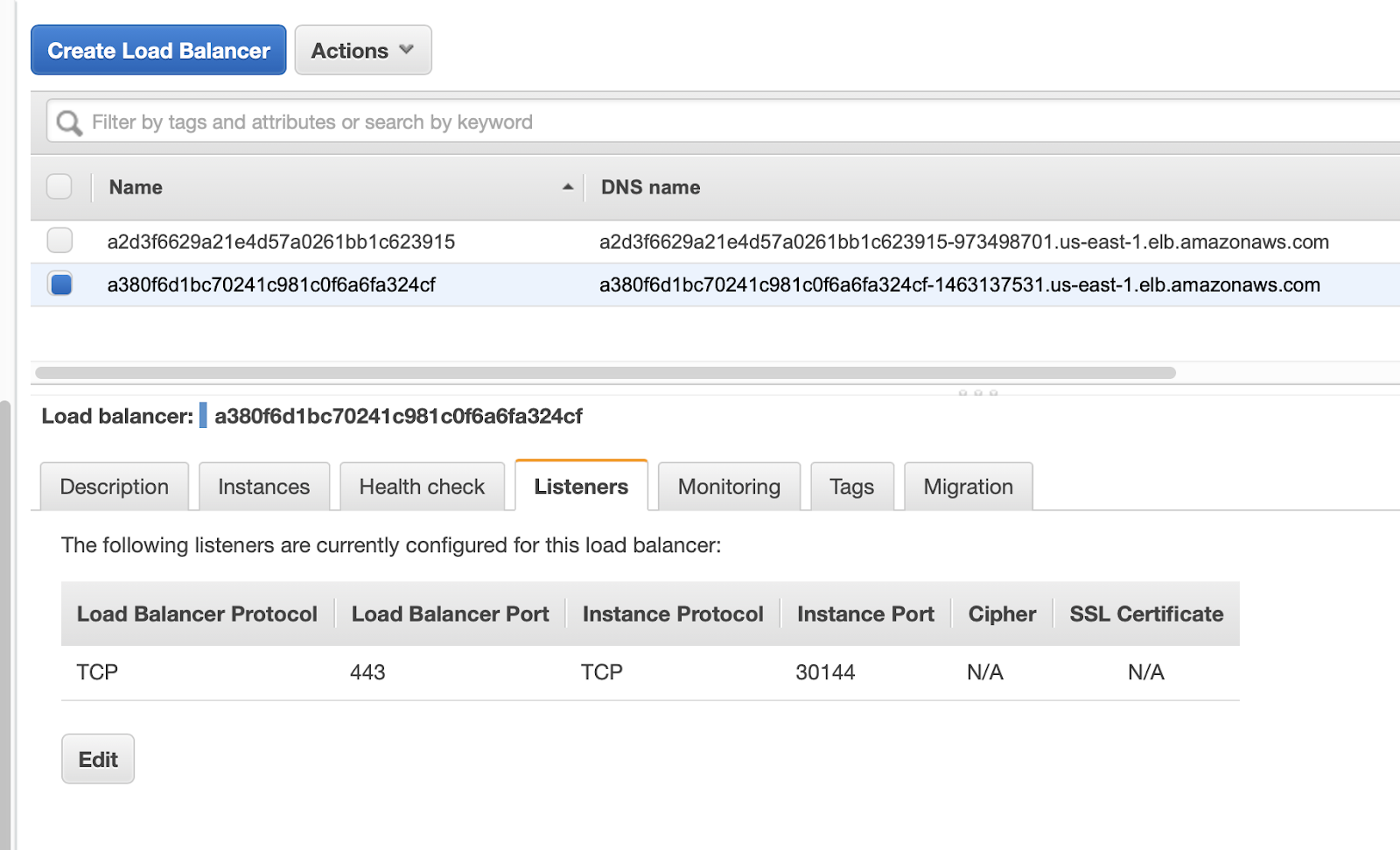

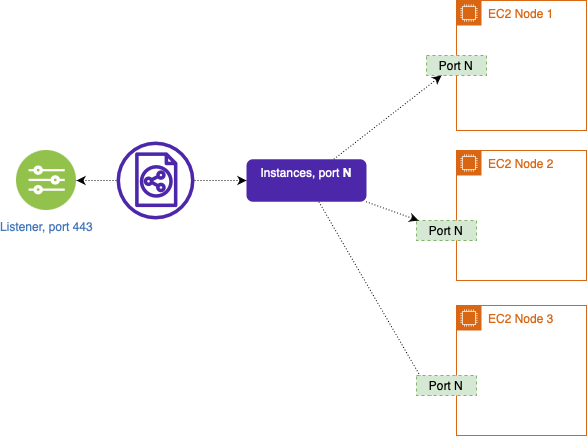

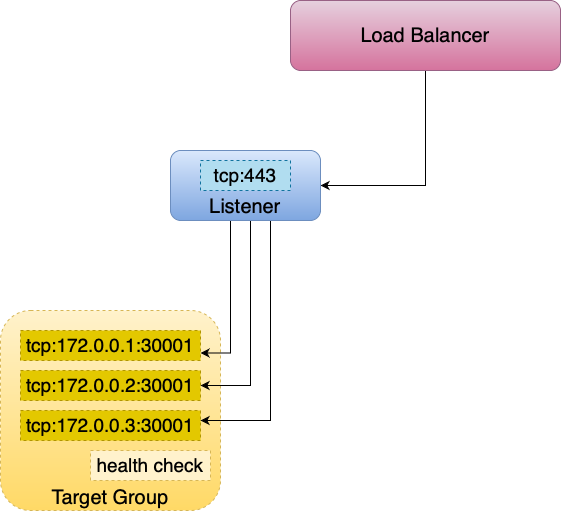

EKS creates the load balancers for us and adds a listener for each port that the services expose. For the MinIO service, EKS adds a listener on port 443, as you can see in the image below.

The listener forwards traffic to the service’s NodePort. That is the same port on every instance (node) in the cluster.

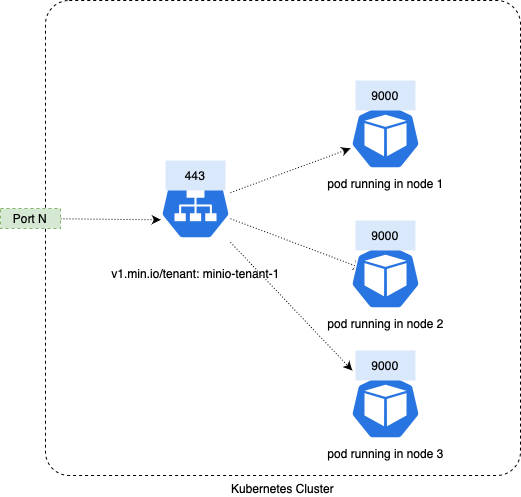

The service is exposed as a NodePort, meaning the same port is opened on every node in the Kubernetes cluster. In the previous example, this port is 30144.

The NodePort forwards the traffic it receives to the MinIO service. The service in turn forwards it to the pods that match its selector.
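Here is a trimmed sketch of what the generated MinIO service might look like, to make this chain concrete. The namespace and selector label are assumptions. The nodePort reuses 30144 from the example above, and 9000 is MinIO’s default API port. Kubernetes and the Operator assign the real values, so compare with the output of kubectl get service minio -o yaml in your tenant namespace.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: minio
  namespace: tenant-1              # hypothetical namespace
spec:
  type: LoadBalancer               # EKS provisions a Classic Load Balancer
  selector:
    v1.min.io/tenant: tenant-1     # assumed selector; matches the tenant's MinIO pods
  ports:
    - name: https-minio
      protocol: TCP
      port: 443                    # port of the ELB listener
      nodePort: 30144              # same port opened on every node
      targetPort: 9000             # port the MinIO container listens on
```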

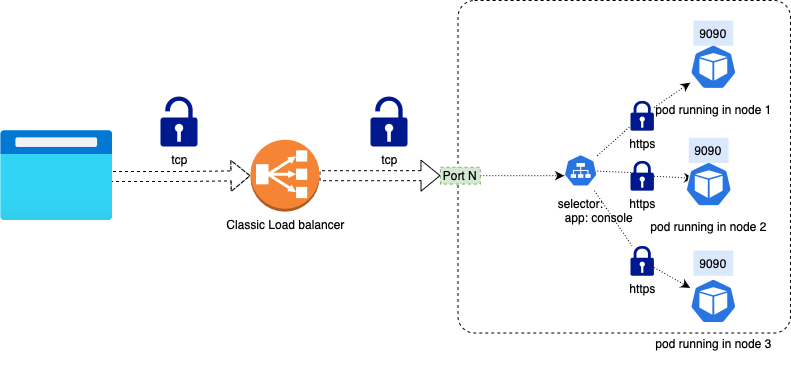

The Classic Load Balancer creates TCP (Layer 4) listeners and does not filter any traffic.

If you are using TLS, the MinIO service itself is responsible for TLS termination. Please refer to the Network Encryption TLS documentation to learn more about configuring TLS certificates in MinIO.

Expose Operator Console Using Classic Load Balancer

To allow access to the MinIO Operator Console from outside the Kubernetes cluster using a Classic Load Balancer, all that is needed is to change its service type from ClusterIP to LoadBalancer. One way to do this is to edit the service in place with kubectl edit.

The file contents will be something like this:
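The sketch below assumes the default Operator installation: a service named console in the minio-operator namespace. Names, labels and ports may differ in your version. The kubectl commands in the comments are one way to edit the manifest, or to save it and re-apply it.

```yaml
# Edit in place:          kubectl -n minio-operator edit service console
# Or save, edit, apply:   kubectl -n minio-operator get service console -o yaml > console-svc.yaml
#                         kubectl apply -f console-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: console                  # assumed default Operator Console service name
  namespace: minio-operator      # assumed default Operator namespace
spec:
  type: ClusterIP                # change this to LoadBalancer
  selector:
    app: console                 # assumed selector label
  ports:
    - name: http
      port: 9090
      targetPort: 9090
      protocol: TCP
    - name: https
      port: 9443
      targetPort: 9443
      protocol: TCP
```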

All we need to do is change the type field from ClusterIP to LoadBalancer.

And then apply the changes:

You will see a new load balancer in your AWS EC2 console:

The load balancer’s DNS hostname can also be obtained from the status of the service:
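The hostname appears under status.loadBalancer.ingress. The excerpt below assumes the same service name and namespace as the rest of this section, and the hostname is a made-up placeholder:

```yaml
# Excerpt of: kubectl -n minio-operator get service console -o yaml
status:
  loadBalancer:
    ingress:
      - hostname: a1b2c3d4e5f6-1234567890.us-east-1.elb.amazonaws.com   # placeholder
```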

Finally, to manage MinIO, open the MinIO Operator Console in a browser at the listed hostname on port 9090.

Additional Service Annotations for Classic Load Balancer

Although the Classic Load Balancer is the default load balancer on AWS EKS, sometimes we need to customize its behavior. We do this by adding annotations to the MinIO service and the MinIO Console service, as well as to the MinIO Operator Console service.

The Kubernetes Service documentation lists all the supported annotations for a Classic Load Balancer.

MinIO Tenant Console and MinIO services annotations

To add annotations to the MinIO Console and MinIO services, we add them in the serviceMetadata field within the tenant manifest.

Example: Classic Load Balancer internal

In this example, we use annotations to make the Classic Load Balancers for the MinIO service and the MinIO Console internal. An internal load balancer gets only private IP addresses and is not reachable from the Internet.
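Here is a sketch of the tenant manifest with these annotations, placed in the serviceMetadata sub-fields minioServiceAnnotations and consoleServiceAnnotations. The tenant name and namespace are hypothetical, and the rest of the spec is omitted:

```yaml
apiVersion: minio.min.io/v2
kind: Tenant
metadata:
  name: tenant-1            # hypothetical tenant name
  namespace: tenant-1       # hypothetical namespace
spec:
  exposeServices:
    minio: true
    console: true
  serviceMetadata:
    # Added to the MinIO (S3 API) service
    minioServiceAnnotations:
      service.beta.kubernetes.io/aws-load-balancer-internal: "true"
    # Added to the MinIO Console service
    consoleServiceAnnotations:
      service.beta.kubernetes.io/aws-load-balancer-internal: "true"
```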

Operator Console service annotations

The Operator Console service is not part of the tenant manifest, so we need to apply the annotations directly to the service.

Example: TLS Termination on Classic Load Balancer

Adding the following annotations enables TLS termination in the Classic Load Balancer with an AWS ACM-issued certificate on port 9090, with the backend traffic sent as unencrypted HTTP:

To do this, let’s first open the Operator Console service manifest.

After editing the annotations, the Operator Console service manifest will look like this:
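The following is a sketch, not the exact manifest from a live cluster. It assumes the default console service in the minio-operator namespace, serving plain HTTP on 9090, and uses a placeholder ACM certificate ARN. The ellipses mark omitted sections.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: console                      # assumed default service name
  namespace: minio-operator          # assumed default namespace
  annotations:
    # ACM certificate attached to the CLB listener (placeholder ARN)
    service.beta.kubernetes.io/aws-load-balancer-ssl-cert: arn:aws:acm:us-east-1:111122223333:certificate/EXAMPLE
    # Only port 9090 gets a TLS-terminating listener
    service.beta.kubernetes.io/aws-load-balancer-ssl-ports: "9090"
    # Traffic from the CLB to the nodes is sent as plain HTTP
    service.beta.kubernetes.io/aws-load-balancer-backend-protocol: http
  # ...
spec:
  type: LoadBalancer                 # changed from ClusterIP
  ports:
    - name: http
      port: 9090
      targetPort: 9090
      protocol: TCP
  # ...
```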

Note: To emphasize the important sections and save space in this post, some sections of the manifest have been edited and replaced with an ellipsis.

When you are finished editing, apply the changes:

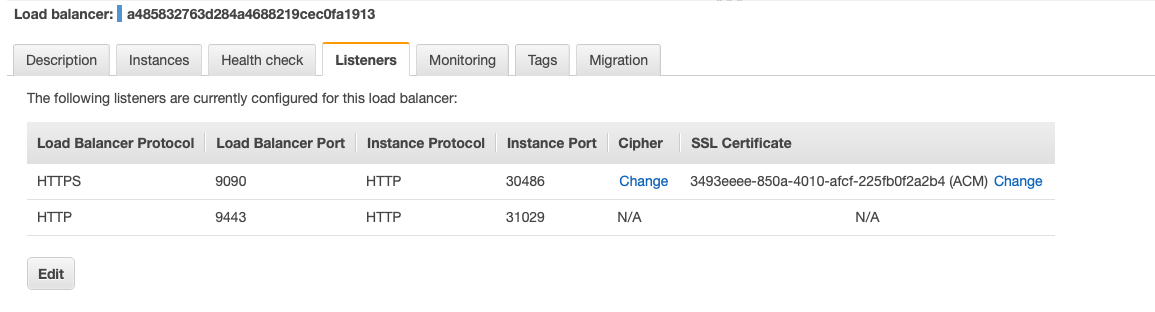

The listener for the Classic Load Balancer exposes port 9090 and assigns the certificate to that port as shown below:

Now we can navigate to the Operator Console UI using HTTPS with a valid public TLS Certificate:

Classic Load Balancer Annotations

Following are some of the most commonly used annotations with MinIO services.

Internal Classic Load Balancer

This annotation will make the load balancer internal only, instead of the default internet-facing:
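Here is a minimal sketch of the annotation on a service's metadata. For tenant services, the same key goes under minioServiceAnnotations or consoleServiceAnnotations instead:

```yaml
metadata:
  annotations:
    # Provision the Classic Load Balancer with private IPs only
    service.beta.kubernetes.io/aws-load-balancer-internal: "true"
```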

Change Cipher and TLS Protocol

This annotation changes the TLS policy. Use it when you require a specific group of ciphers or specific protocols such as TLS 1.0, TLS 1.1 or TLS 1.2 (here is the list of predefined security policies for Classic Load Balancer).
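For example, to restrict clients to TLS 1.2 you could reference one of the predefined Classic Load Balancer policies. ELBSecurityPolicy-TLS-1-2-2017-01 is used here only as an illustration, so pick the policy that matches your requirements:

```yaml
metadata:
  annotations:
    # Name of a predefined ELB security policy (illustrative choice)
    service.beta.kubernetes.io/aws-load-balancer-ssl-negotiation-policy: "ELBSecurityPolicy-TLS-1-2-2017-01"
```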

Enable Classic Load Balancer Access Logs

Together, these annotations store the ELB access logs in a target S3 bucket. Access logs are useful for identifying connectivity issues and provide granular, per-request details. See how to interpret access logs in the AWS documentation.

Make sure that the target S3 bucket and the ELB are in the same AWS Region, and that the bucket policy allows the ELB to write access logs to the bucket.
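Here is a sketch of the four access log annotations. The bucket name and prefix are hypothetical, and the emit interval is in minutes (Classic Load Balancers accept 5 or 60):

```yaml
metadata:
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-access-log-enabled: "true"
    # How often logs are published, in minutes (5 or 60)
    service.beta.kubernetes.io/aws-load-balancer-access-log-emit-interval: "5"
    service.beta.kubernetes.io/aws-load-balancer-access-log-s3-bucket-name: "my-elb-logs"          # hypothetical bucket
    service.beta.kubernetes.io/aws-load-balancer-access-log-s3-bucket-prefix: "minio/tenant-1"     # hypothetical prefix
```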

Security Groups for Classic Load Balancer

Sometimes you need custom settings for the security groups associated with the load balancers. This is especially true when a Kubernetes cluster was built manually or with specific security measures in place. The following two annotations help in these cases.

A list of existing security groups to be configured on the ELB created. Unlike the annotation service.beta.kubernetes.io/aws-load-balancer-extra-security-groups, this replaces all other security groups previously assigned to the ELB and also overrides the creation of a uniquely generated security group for this ELB.

The first security group ID on this list is used as a source to permit incoming traffic to target worker nodes (service traffic and health checks).

If multiple ELBs are configured with the same security group ID, only a single permit line is added to the worker node security groups. Deleting any one of those ELBs therefore removes that single permit line and blocks access for all the ELBs that share the security group ID.

This can cause a cross-service outage if not used properly.
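Here is a sketch of the replacing annotation, the first of the two, with hypothetical security group IDs:

```yaml
metadata:
  annotations:
    # Replaces ALL security groups on the ELB; no unique group is generated.
    # The first ID is the one used to permit traffic to the worker nodes.
    service.beta.kubernetes.io/aws-load-balancer-security-groups: "sg-0123456789abcdef0,sg-0fedcba9876543210"
```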

A list of additional security groups to be added to the created ELB.
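Here is the additive annotation, again with a hypothetical security group ID:

```yaml
metadata:
  annotations:
    # Attached in addition to the security groups the ELB already gets
    service.beta.kubernetes.io/aws-load-balancer-extra-security-groups: "sg-0123456789abcdef0"
```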

Classic Load Balancer Cross Zone Load Balancing

Cross-Zone Load Balancing distributes traffic evenly across all your back-end instances in all Availability Zones.
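To enable it, set the following annotation on the service. This is a minimal sketch:

```yaml
metadata:
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-cross-zone-load-balancing-enabled: "true"
```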

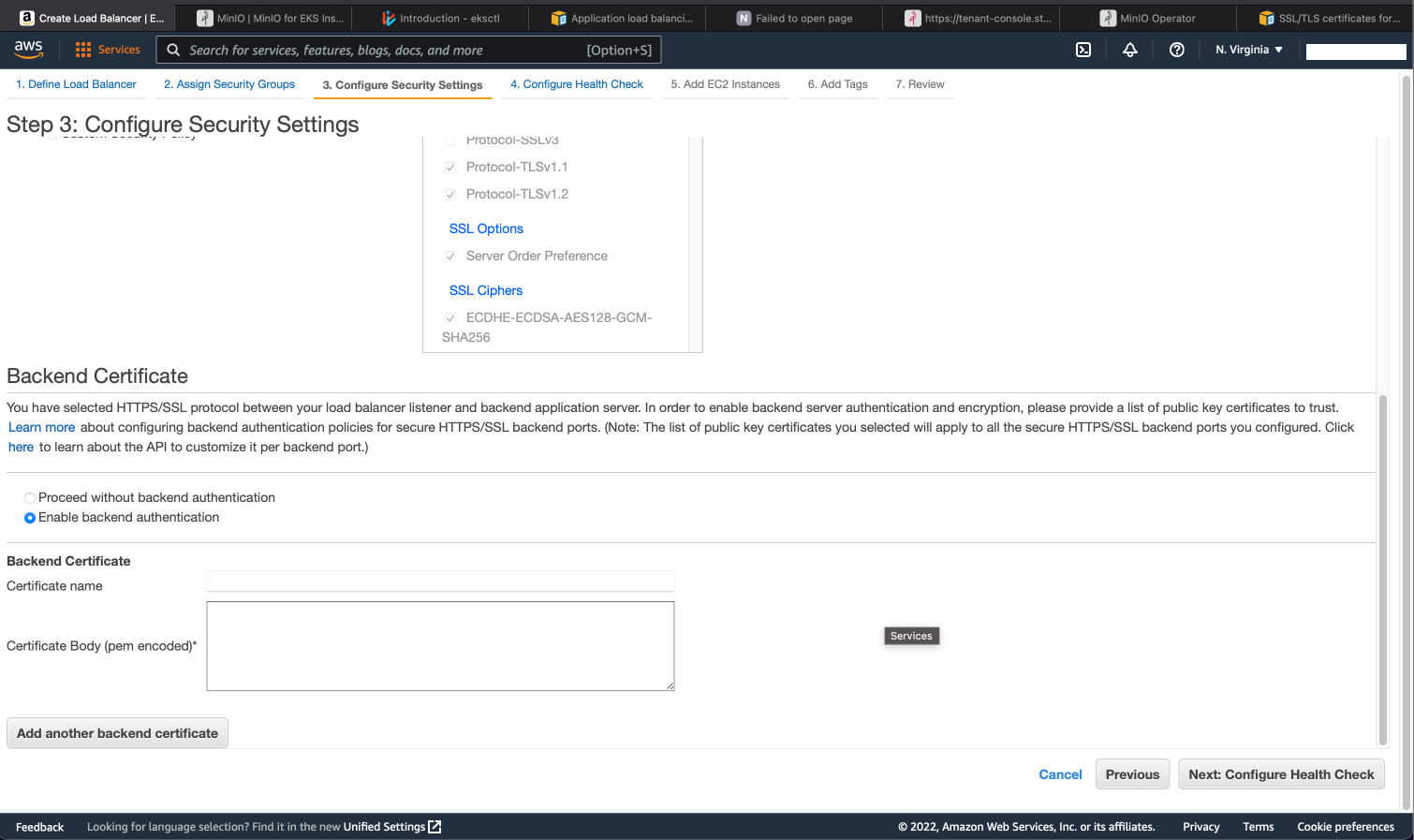

End-to-end TLS encryption in Classic Load Balancer

You can assign an AWS ACM-issued certificate to the Classic Load Balancer with the service.beta.kubernetes.io/aws-load-balancer-ssl-cert annotation and terminate TLS at the load balancer. However, to re-encrypt traffic to the backend service (MinIO), the Classic Load Balancer must trust that service. There are two ways to achieve this. Either the MinIO pods serve a valid certificate issued by a public CA on their HTTPS port, or the Kubernetes-issued certificate that MinIO serves is uploaded to the load balancer as a trusted “backend certificate”.

At the time of writing, there is no automated way to do this from inside Kubernetes on EKS through annotations. Instead, you can use the AWS Console UI to create and configure the Classic Load Balancer, as shown below.

Even if we add the MinIO service certificate to the load balancer, any manual change to the load balancer configuration may, and most likely will, be lost during the next reconciliation loop of the MinIO Operator. For that reason, we do not recommend terminating TLS on the Classic Load Balancer when MinIO uses self-signed or private CA-issued certificates. Instead, use an alternative such as Nginx Ingress, Application Load Balancer or Network Load Balancer for that purpose.

Expose MinIO Tenant services using Application Load Balancer

An Application Load Balancer works at the application, or seventh, layer of the OSI model. Listeners are configured to route requests to different target groups based on the content of the application traffic. The Application Load Balancer provides several key enhancements over the Classic Load Balancer, including support for registering Lambda functions as targets, as well as greater customization and improved performance.

First, we need an EKS cluster with the MinIO Operator and a tenant installed. Follow the instructions in Deploy MinIO Through EKS for that.

On the EKS cluster, we also need to install the AWS Load Balancer Controller (see the instructions here).

Then we create an Ingress manifest with the appropriate ALB annotations (see the full list of annotations here).

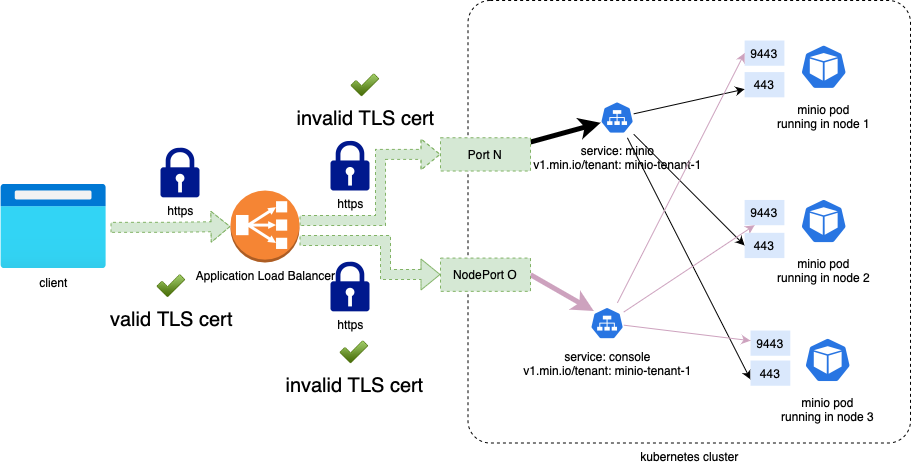

Example: Application Load Balancer end-to-end TLS encryption

Please note the following about this example:

- A single ALB is created, listening on port 443.

- Both tenant-console.exampledomain.com and minio.exampledomain.com resolve in DNS to the same ALB. The ALB uses the Host header to forward traffic to the right service: console or minio, respectively.

- HTTP status codes 200 and 403 are accepted as successful health check responses.

- TLS is terminated at the ALB for the public domains (tenant-console.exampledomain.com and minio.exampledomain.com) using an ACM-managed TLS certificate.

- Traffic from the Application Load Balancer to the services is also TLS encrypted, even when the backend certificates are not publicly trusted (self-signed or private CA-issued).

Edit the fields marked with the #replace comment in the example ingress manifest below to match the settings required for your environment.
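The manifest below is a sketch of such an Ingress, not a verbatim copy of the original example. It rests on several assumptions:

- A tenant named tenant-1 in namespace tenant-1, with TLS enabled, so its Console service tenant-1-console listens on 9443 and the minio service listens on 443.
- The alb IngressClass created by the AWS Load Balancer Controller.
- A placeholder ACM certificate ARN.

It uses IP targets, so the ALB sends traffic straight to the pods even though the tenant services are ClusterIP. Lines marked #replace are the ones to adapt.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: tenant-1-ingress                 # hypothetical name
  namespace: tenant-1                    #replace
  annotations:
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/target-type: ip
    # A single HTTPS listener on 443 for both hostnames
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS": 443}]'
    alb.ingress.kubernetes.io/certificate-arn: arn:aws:acm:us-east-1:111122223333:certificate/EXAMPLE #replace
    # Re-encrypt traffic to the pods; the ALB does not validate their certificates
    alb.ingress.kubernetes.io/backend-protocol: HTTPS
    # Health checks on "/": the Console answers 200, while MinIO typically
    # rejects the unauthenticated request with 403
    alb.ingress.kubernetes.io/success-codes: "200,403"
spec:
  ingressClassName: alb
  rules:
    - host: tenant-console.exampledomain.com   #replace
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: tenant-1-console         #replace
                port:
                  number: 9443
    - host: minio.exampledomain.com            #replace
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: minio
                port:
                  number: 443
```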

Finally, apply the manifest.

A notable property of ALBs is that they do not validate the backend TLS certificate, according to this AWS documentation:

“... you can use self-signed certificates or certificates that have expired. Because the load balancer is in a virtual private cloud (VPC), traffic between the load balancer and the targets is authenticated at the packet level, so it is not at risk of man-in-the-middle attacks or spoofing even if the certificates on the targets are not valid.”

This means that you can terminate TLS at the ALB with a valid TLS certificate and still keep traffic encrypted end to end. The MinIO service itself can use a self-signed, Kubernetes CA-issued or any other private CA-issued certificate.

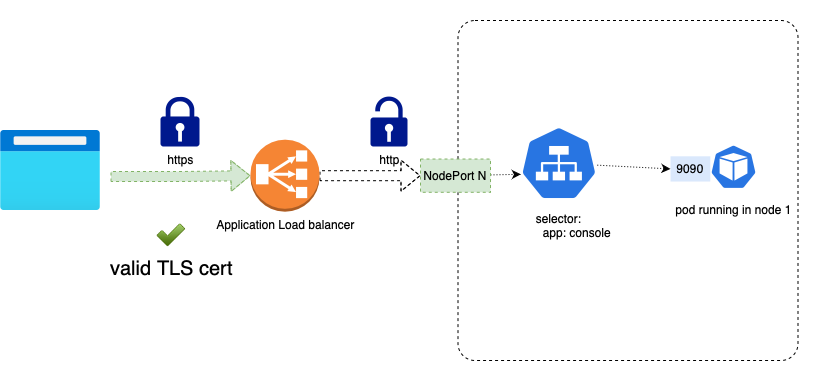

Expose MinIO Operator Console Using Application Load Balancer

Exposing the Operator Console with an ALB ingress works the same way as exposing the tenant’s MinIO and Console services: by creating an Ingress.

Example: Operator Console with TLS termination in the ALB

In this example we will:

- Route traffic received with the host header operator.exampledomain.com to the Operator Console service (replace the custom domain with your own).

- Set up TLS termination at the ALB.

- Forward HTTP traffic to port 9090 where the Operator Console is exposed.

Edit the fields marked with the #replace comment in the example Ingress manifest below to match your settings.
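The sketch below makes three assumptions: the default console service in the minio-operator namespace, the alb IngressClass created by the AWS Load Balancer Controller, and a placeholder ACM certificate ARN. Lines marked #replace are the ones to adapt.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: operator-console-ingress         # hypothetical name
  namespace: minio-operator              #replace
  annotations:
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/target-type: ip
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS": 443}]'
    alb.ingress.kubernetes.io/certificate-arn: arn:aws:acm:us-east-1:111122223333:certificate/EXAMPLE #replace
    # TLS ends at the ALB; forward plain HTTP to the Operator Console
    alb.ingress.kubernetes.io/backend-protocol: HTTP
spec:
  ingressClassName: alb
  rules:
    - host: operator.exampledomain.com   #replace
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: console            # assumed default Operator Console service name
                port:
                  number: 9090
```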

Then, apply the manifest.

Expose the MinIO Tenant services using Network Load Balancer

The final option for exposing MinIO outside of your EKS cluster is to use the Network Load Balancer.

As usual, we’ll start by creating an EKS cluster, installing the MinIO Operator and a tenant. You can follow the instructions in Deploy MinIO Through EKS for that.

On the EKS cluster, we also need to install the AWS Load Balancer Controller (see the instructions here).

Example: Expose MinIO service and MinIO Console service with Network Load Balancer

We again edit the tenant manifest to set exposeServices. We also add annotations requesting a Network Load Balancer; without them, Kubernetes provisions the default Classic Load Balancer instead.

In the serviceMetadata field, we specify the annotations for both the MinIO Console and MinIO services, as in this example:
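Below is a sketch of the relevant part of the tenant manifest. It assumes the AWS Load Balancer Controller is installed; the external type hands the service over to it. It uses instance targets. The internet-facing scheme is included because the controller creates internal NLBs by default. The tenant name and namespace are hypothetical.

```yaml
apiVersion: minio.min.io/v2
kind: Tenant
metadata:
  name: tenant-1            # hypothetical tenant name
  namespace: tenant-1       # hypothetical namespace
spec:
  exposeServices:
    minio: true
    console: true
  serviceMetadata:
    minioServiceAnnotations:
      # Let the AWS Load Balancer Controller provision an NLB
      service.beta.kubernetes.io/aws-load-balancer-type: "external"
      service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: "instance"
      # Omit for an internal NLB (the controller's default)
      service.beta.kubernetes.io/aws-load-balancer-scheme: "internet-facing"
    consoleServiceAnnotations:
      service.beta.kubernetes.io/aws-load-balancer-type: "external"
      service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: "instance"
      service.beta.kubernetes.io/aws-load-balancer-scheme: "internet-facing"
```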

This creates one Network Load Balancer for the MinIO service and another for the MinIO Console service.

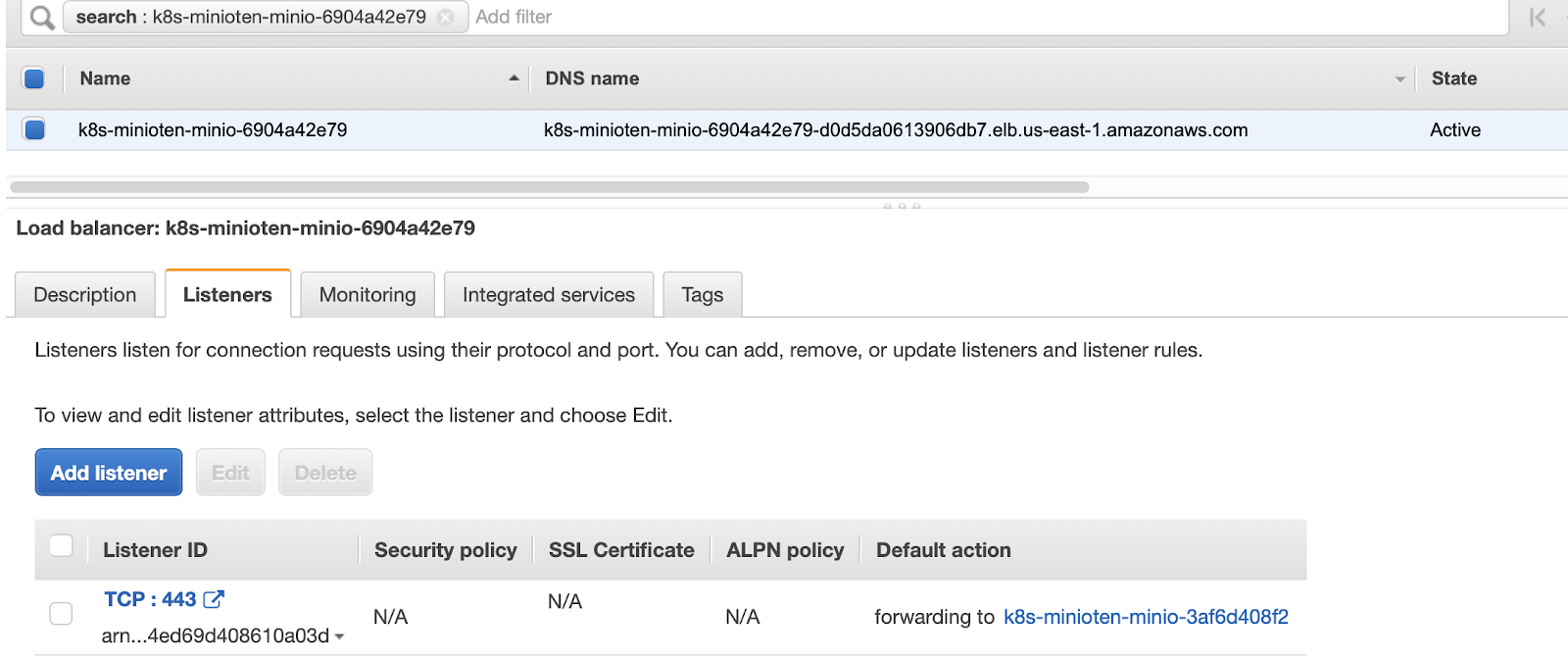

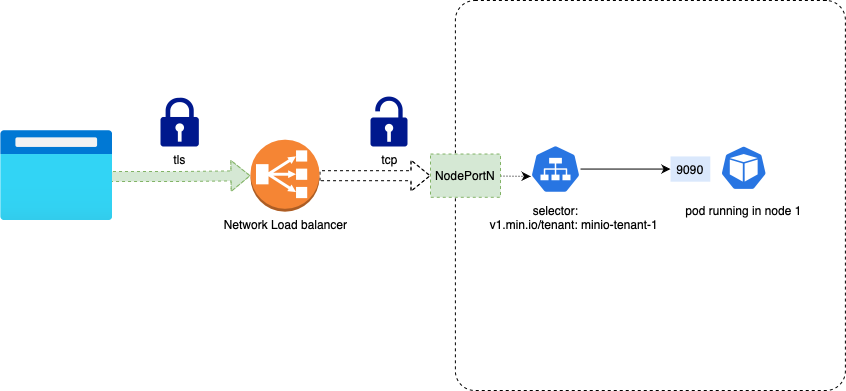

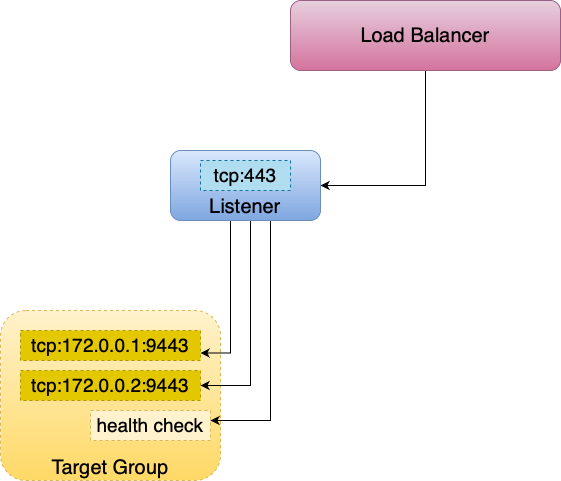

The Network Load Balancer adds a TCP listener on port 443 for MinIO, as shown below.

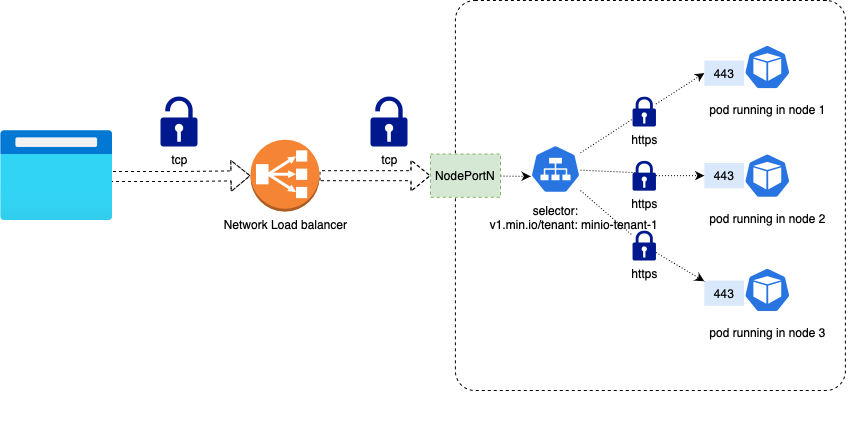

The Network Load Balancer handles TCP (Layer 4) traffic. As a result, it does not inspect or filter application-level traffic.

The MinIO service itself is therefore in charge of TLS termination. To learn how to configure TLS certificates in MinIO, refer to the Network Encryption TLS documentation.

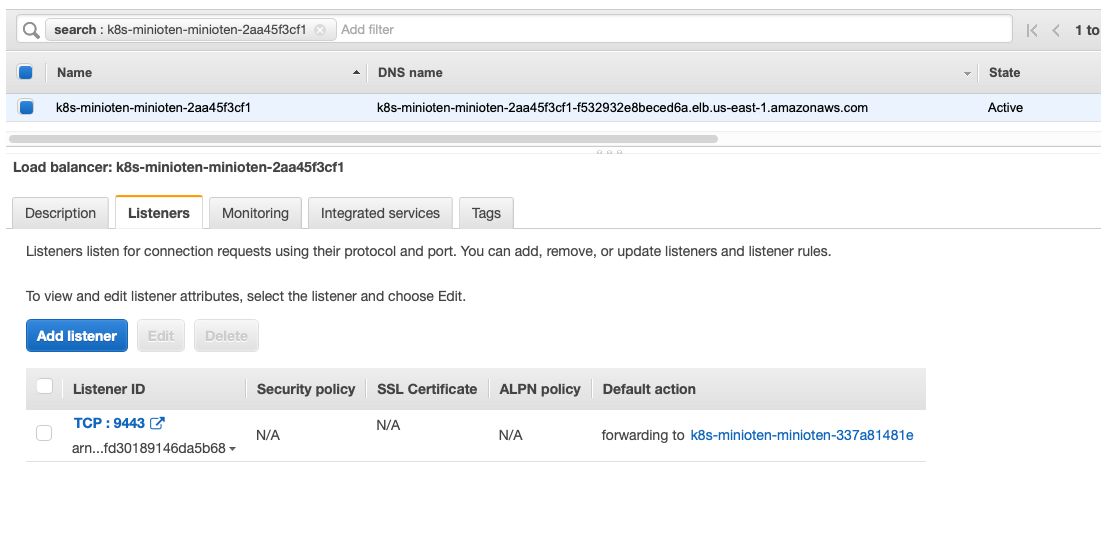

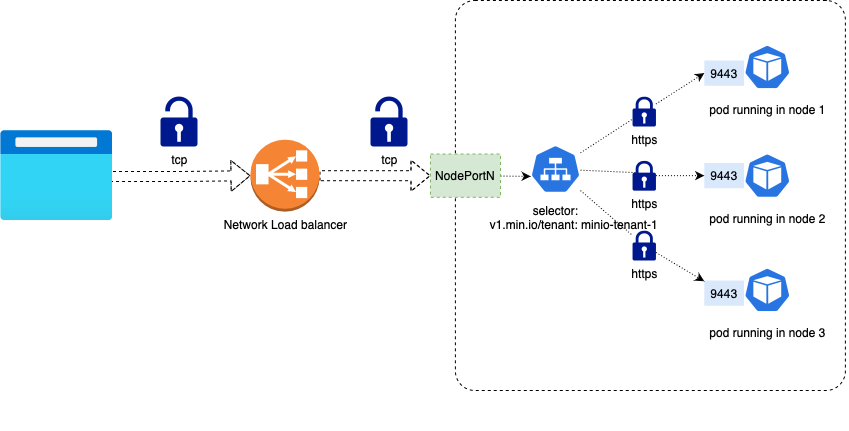

For the MinIO Console service, the Network Load Balancer adds a TCP listener on port 9443, as shown below.

If you would like the MinIO Console service itself to be in charge of TLS termination, refer to the Network Encryption TLS documentation to learn how to configure TLS certificates in MinIO.

Example: Expose MinIO Operator Console with TLS Termination at the Network Load Balancer

Adding the following annotations sets up TLS termination at the Network Load Balancer with an AWS ACM-issued certificate on port 9090, with the backend traffic sent as unencrypted HTTP:

For this example, we use the MinIO Operator Console service manifest.

Then, add the annotations and change the type to LoadBalancer. The resulting MinIO Operator Console service descriptor will look like this:
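The sketch below makes three assumptions: the default console service in the minio-operator namespace, a placeholder ACM certificate ARN, and an NLB managed by the AWS Load Balancer Controller. The ellipses mark omitted sections.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: console                      # assumed default service name
  namespace: minio-operator          # assumed default namespace
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-type: "external"
    service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: "ip"
    service.beta.kubernetes.io/aws-load-balancer-scheme: "internet-facing"
    # TLS listener on port 9090 using an ACM certificate (placeholder ARN)
    service.beta.kubernetes.io/aws-load-balancer-ssl-cert: arn:aws:acm:us-east-1:111122223333:certificate/EXAMPLE
    service.beta.kubernetes.io/aws-load-balancer-ssl-ports: "9090"
    # Plain TCP from the NLB to the pods, i.e. unencrypted HTTP
    service.beta.kubernetes.io/aws-load-balancer-backend-protocol: "tcp"
  # ...
spec:
  type: LoadBalancer                 # changed from ClusterIP
  ports:
    - name: http
      port: 9090
      targetPort: 9090
      protocol: TCP
  # ...
```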

Note: Some sections of the manifest are edited and replaced with an ellipsis to shorten the file and emphasize the relevant sections.

Next, apply the manifest.

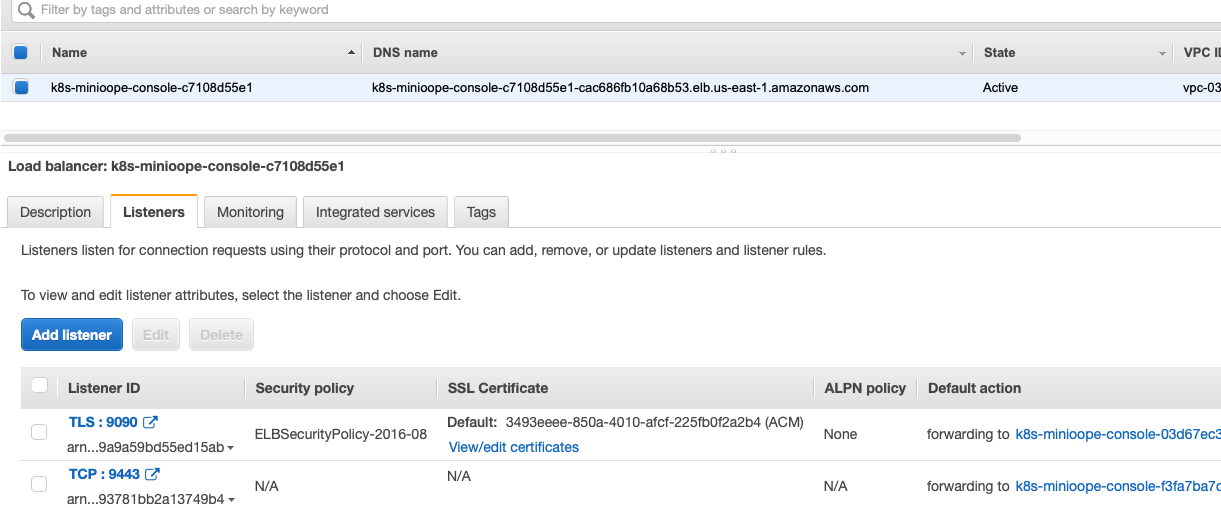

The listener of the Network Load Balancer exposes port 9090 and assigns the certificate to that port, as shown below.

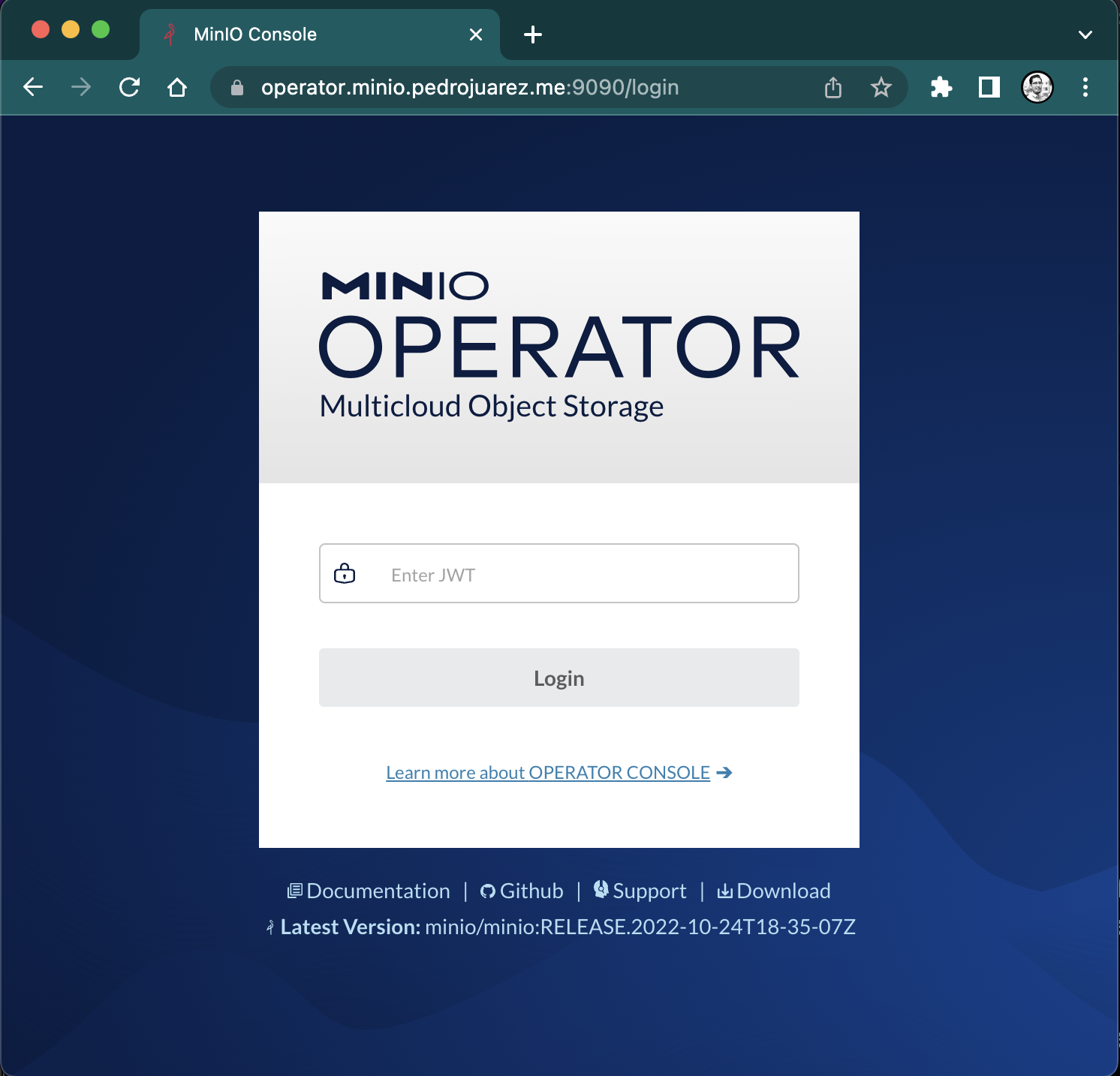

Next, open the MinIO Operator Console in a browser over HTTPS, now served with a valid public TLS certificate:

Additional Service Annotations for the Network Load Balancer

Below you will find some of the most common configurations using annotations for Network Load Balancer. For a complete reference of the AWS Network Load Balancer Service annotations, please consult the AWS Load Balancer Controller documentation.

Network Load Balancer Annotations

Internet Facing Network Load Balancer

This annotation makes the Network Load Balancer internet-facing instead of internal, which is the default.
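Here is a minimal sketch of the annotation, as read by the AWS Load Balancer Controller:

```yaml
metadata:
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-scheme: "internet-facing"
```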

Subnets

Use this annotation to specify the list of subnets to associate with the Network Load Balancer.
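Here is a sketch with hypothetical subnet IDs. The controller also accepts subnet Name tags in this comma-separated list.

```yaml
metadata:
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-subnets: "subnet-0123456789abcdef0,subnet-0fedcba9876543210"
```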

Access Logs

Use the aws-load-balancer-attributes annotation to configure access logs for the Network Load Balancer.
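Here is a sketch that sends access logs to a hypothetical S3 bucket and prefix. Note that AWS only produces NLB access logs for TLS listeners.

```yaml
metadata:
  annotations:
    # Comma-separated load balancer attributes (bucket and prefix are hypothetical)
    service.beta.kubernetes.io/aws-load-balancer-attributes: "access_logs.s3.enabled=true,access_logs.s3.bucket=my-nlb-logs,access_logs.s3.prefix=minio"
```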

Target Type

This annotation specifies the target type to configure for the Network Load Balancer. You can choose between instance and IP.

See the Networking in EKS Best Practices documentation for an extensive explanation of the different approaches and use cases.
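For example, this minimal sketch selects IP mode instead of instance mode. Both modes are described below.

```yaml
metadata:
  annotations:
    # "instance" routes via NodePorts; "ip" routes directly to pod IPs
    service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: "ip"
```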

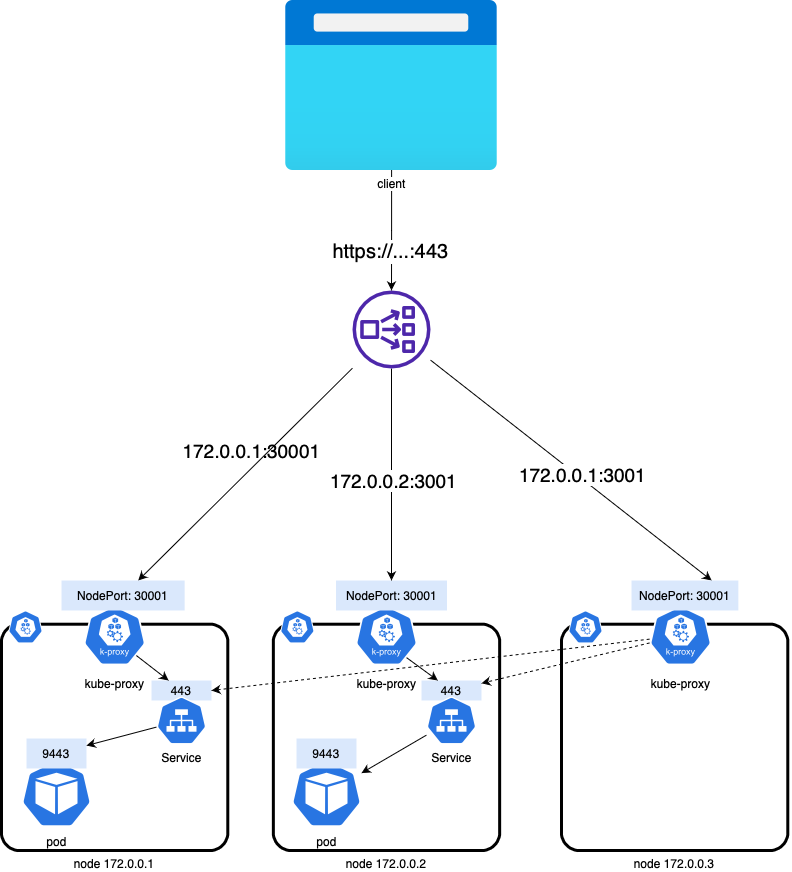

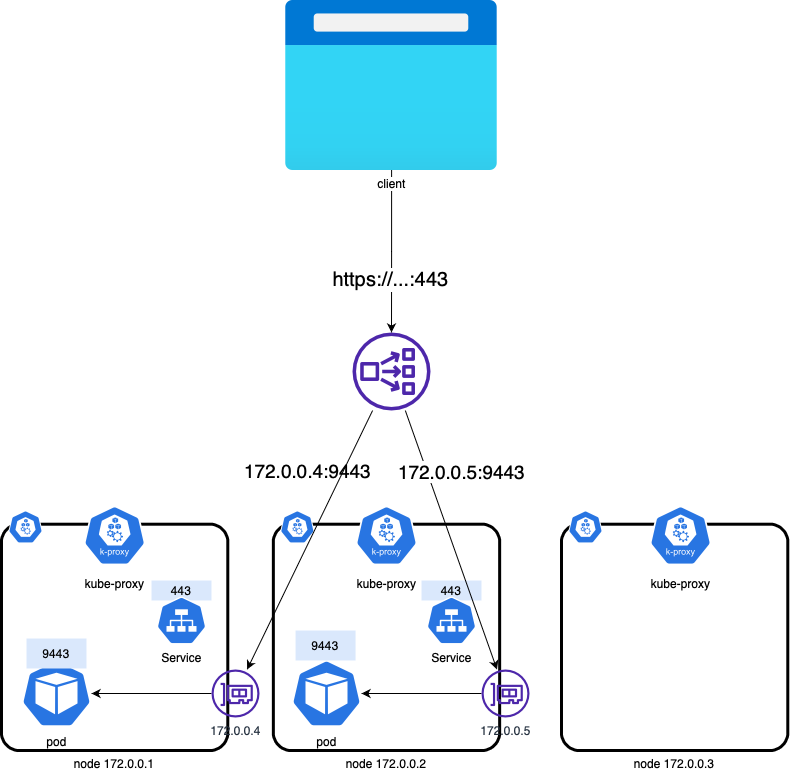

Instance Mode

This is the default and most common mode of exposing services on Kubernetes. It routes traffic to all EC2 instances in the cluster on the NodePort opened for your service.

In the example above, all three nodes are added to the target group.

The NLB routes traffic to all of the nodes, including the node with IP 172.0.0.3, even though it has no pods of the service running on it. Kube-proxy on the individual worker nodes sets up the forwarding of the traffic from the NodePort to the pods behind the service.

IP Mode

This mode routes traffic directly to the pod IP. AWS NLB sends traffic directly to the Kubernetes pods behind the service, eliminating the need for an extra network hop through the worker nodes in the Kubernetes cluster.

Each pod has an IP address reachable in the VPC, assigned through an ENI. The Network Load Balancer therefore does not need to route traffic through kube-proxy. It routes only to the pod-IP:port combinations in the target group, excluding node IPs that are not running pods of the service.

This mode requires the AWS VPC CNI plugin to be installed on the EKS cluster.

Use cases for this mode include, among others:

- Reduce the latency of a hop through kube-proxy

- IPv6 support

- Security groups per pod

- Custom networking

IPv6

If you're load balancing to IPv6 pods, add the service.beta.kubernetes.io/aws-load-balancer-ip-address-type: dualstack annotation. You can only load balance over IPv6 to IP targets, not instance targets. Without this annotation, load balancing is over IPv4.
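Here is a sketch that combines the two requirements, dual-stack addressing and IP targets:

```yaml
metadata:
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-ip-address-type: "dualstack"
    # IPv6 load balancing only works with IP targets
    service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: "ip"
```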

Make Magic with MinIO and Managed Kubernetes

This blog post provided detailed instructions for exposing MinIO Operator Console and tenant services to applications and users outside the Kubernetes cluster. Whether you’re running on EKS or another Kubernetes distribution, hosted or not, it is straightforward to deploy and manage Kubernetes-native MinIO.

Try out the MinIO managed application in the AWS marketplace or download MinIO and deploy it locally today.