GitLab and MinIO for DevOps at Scale

GitLab is a web-based Git repository manager that provides source code management (SCM), continuous integration/continuous deployment (CI/CD), and issue tracking. It can be used as a self-hosted platform or as a cloud-based platform.

The self-hosted version of GitLab is used by organizations that prefer to have full control over their source code management and deployment processes. They may also need to customize GitLab to meet specific needs, such as better protecting their IP and code base. Specific security, compliance, or regulatory requirements also play a role in choosing between the cloud version of GitLab and an on-prem deployment.

GitLab can use MinIO as its object storage backend to store large files such as artifacts, Docker images, and Git LFS files. Given the right underlying hardware, MinIO provides the performance and scale to support any modern workload, including GitLab. We previously wrote about MinIO and GitHub Enterprise, and provided a tutorial that showed you how to work with GitHub Actions and GitHub Packages using MinIO.

To use MinIO as the object storage backend for GitLab, you need to configure GitLab to use the MinIO endpoint as the object storage URL. This can be done in the GitLab configuration file or through the GitLab user interface. Once this is done, GitLab will store artifacts, Docker images, and Git LFS files in MinIO instead of the local file system.

Using MinIO delivers performance, high availability, scalability and superior economics across a number of different scenarios. For example, you may run GitLab in two different regions or data centers but need the same set of base assets to build the final release. MinIO handles the multi-site replication and ensures these assets are available at both sites. The same setup also works for disaster recovery: if one site goes down, site-to-site replication means you can simply point GitLab at the other site and get things back to normal. All of this data requires a sophisticated, cloud-native storage system. That system is ideally S3 compatible, performant, seamlessly scalable to hundreds of PBs, software-defined, multi-cloud, secure and resilient.

In other words, GitLab requires MinIO and MinIO enhances GitLab with the following:

- S3 Compatible — Developers use RESTful APIs today. That is what S3 is — RESTful. The S3 API for object storage is the de facto standard. MinIO is the most widely deployed alternative to Amazon S3 and has more than a billion Docker pulls to prove it. There is no S3 compatible object store that is run in more architectures, across more types of hardware, and against more use cases than MinIO. That is why it “just works” for hundreds of thousands of applications.

- Performant — MinIO is the world’s fastest object store with GET/PUT speeds in excess of 325 GB/s and 165 GB/s respectively. Storage and retrieval are fast, no matter what the object size. MinIO has also created a comprehensive blueprint for data infrastructure to support exascale AI and other large scale data lake workloads, called the MinIO DataPod, because exascale data is increasingly common in today's enterprise.

- Multi-cloud — GitLab runs everywhere; its storage solution needs to as well. MinIO runs on every public cloud, private cloud, and at the edge.

- Secure — GitLab is responsible for the enterprise’s most important software assets, so it requires storage that takes security as seriously as it does. MinIO’s encryption schemes support granular object-level encryption using modern, industry-standard encryption algorithms, such as AES-256-GCM, ChaCha20-Poly1305, and AES-CBC. MinIO is fully compatible with S3 encryption semantics, and also extends S3 by including support for non-AWS key management services such as HashiCorp Vault, Gemalto KeySecure, and Google Secret Manager. MinIO remains highly performant when encryption is turned on.

- Seamlessly scalable — MinIO scales through a concept called server pools that is designed for hardware heterogeneity. GitLab instances are fast growing, get really large and are long-lived. MinIO is well suited for these use cases.

- Integrated — MinIO supports the use of external key management systems (KMS). If a client requests SSE-S3, or auto-encryption is enabled, the MinIO server encrypts each object with a unique object key, which is protected by a master key managed by the KMS.

- Resilient — Protecting data goes beyond encryption. Immutability is at the heart of the object storage framework and MinIO supports a complete object locking / retention framework offering both Legal Hold and Retention (with Governance and Compliance modes).

In addition, MinIO has some critical capabilities that matter to GitLab.

- MinIO supports both synchronous and asynchronous replication. If the link between the two clusters goes down, even synchronous replication automatically falls back to asynchronous replication until the link returns. This allows architects to design a system that can withstand the failure of a complete cloud. This is meaningful because with a two-cluster HA setup, with replication enabled and a load balancer in front, GitLab continues to function if one cluster fails completely by sending all requests to the other cluster. That means no downtime for developers. MinIO also provides mechanisms for managing centralized updates and upgrades. Lifecycle management is part of the cluster console and policies are managed centrally.

- Cluster-level identity management is performed with AD/LDAP or OpenID Single Sign-On (SSO). Users and groups are constrained by policy-based access controls that dictate which actions can be taken on which resources. Policy authoring is enabled in the console, and admins can create policies for users, groups and service accounts. Clusters, pools, policies and buckets are all managed centrally.

- Management is simple and the joint solution can be automated with technologies like Ansible. Managing both MinIO and GitLab together — whether bare metal or Kubernetes — is a clear plus.

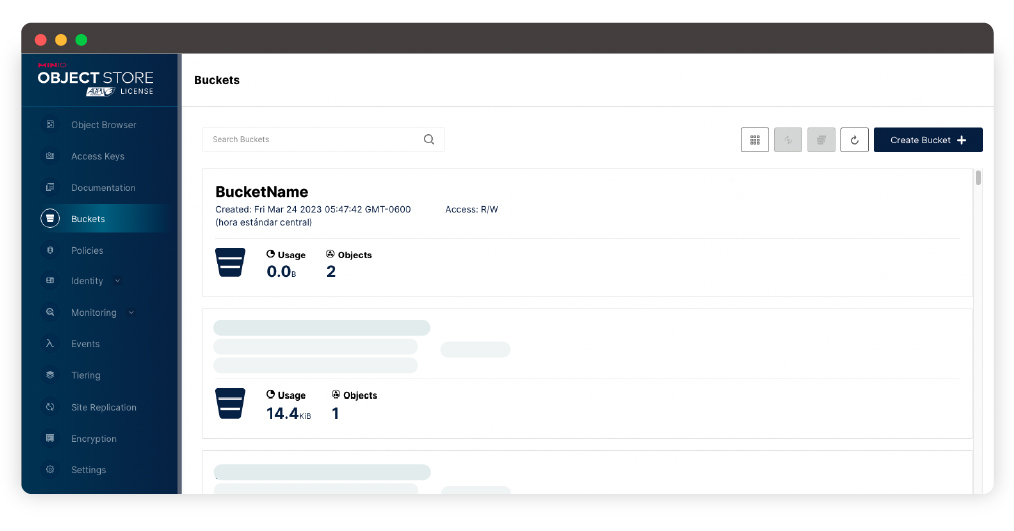

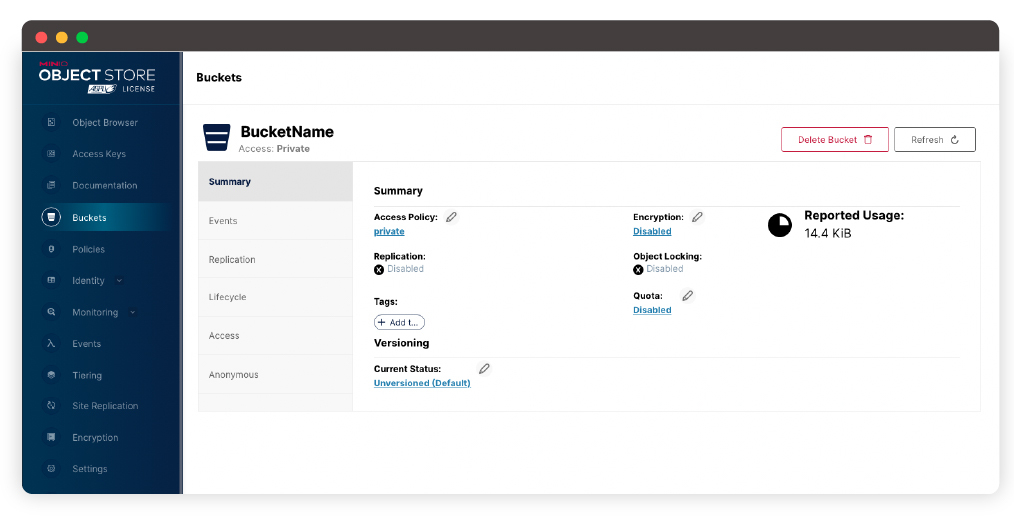

You can browse currently stored caches by inspecting your bucket in the MinIO Console or with the MinIO Client (mc). This provides a convenient way to check disk utilization and delete old caches to free up space. GitLab creates a bucket prefix for each of your project IDs. Within that folder you’ll find ZIP archives containing your caches, each one named by branch and the cache name given in your .gitlab-ci.yml.
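As a rough sketch of what that inspection could look like with mc, the helpers below list, measure and prune a cache bucket. The alias `myminio` and the bucket name `gitlab-cache` are placeholders rather than names from this setup, so substitute your own.

```shell
# Assumptions: "myminio" is an mc alias you have already configured and
# "gitlab-cache" is the bucket your runner writes to. Both are placeholders.
CACHE_TARGET="${CACHE_TARGET:-myminio/gitlab-cache}"

# List every cached archive stored under the bucket.
list_caches() {
  mc ls --recursive "$CACHE_TARGET"
}

# Report how much space the bucket currently uses.
cache_usage() {
  mc du "$CACHE_TARGET"
}

# Delete cached objects older than the given age (default: 30 days).
prune_caches() {
  mc rm --recursive --force --older-than "${1:-30d}" "$CACHE_TARGET"
}
```

Call `list_caches` or `cache_usage` first, and only then `prune_caches 30d`, since the delete is not reversible unless bucket versioning is enabled.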

Installing MinIO and GitLab Runner

Before we dive in, let's see how the basic architecture works. We will have two Docker containers, one running GitLab and the other running MinIO. We will then configure GitLab's object storage settings to point at the MinIO container. As you will see, there are plenty of bits and bobs you can configure in GitLab to store on MinIO object storage. When you set these up in a production environment, you will be able to leverage MinIO features such as site-to-site replication, encryption in transit and encryption at rest to meet compliance requirements and keep your data safe.

Let’s show you how it works.

Object storage in GitLab can be used for distributed runner caching along with storing built Docker containers.

Install GitLab Runner

Start GitLab Runner
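The install and start steps might look like the following on macOS with Homebrew. The platform is an assumption, inferred from the /Users/... path used later in this post, so use your own package manager on Linux.

```shell
# Assumption: macOS with Homebrew already installed.

# Install the GitLab Runner binary from Homebrew.
install_gitlab_runner() {
  brew install gitlab-runner
}

# Run the runner as a background service under the current user.
start_gitlab_runner() {
  brew services start gitlab-runner
}
```

Run `install_gitlab_runner` once, then `start_gitlab_runner`. After that, `gitlab-runner status` should report whether the service is up.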

Make local directories for GitLab volumes
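A minimal sketch of this step, assuming the three directories GitLab's Docker image conventionally mounts (config, logs and data). The `$HOME/gitlab` location is a placeholder.

```shell
# Assumption: GITLAB_HOME is a location of your choosing; $HOME/gitlab is a placeholder.
export GITLAB_HOME="${GITLAB_HOME:-$HOME/gitlab}"

# One directory per volume mounted into the GitLab container.
mkdir -p "$GITLAB_HOME/config" "$GITLAB_HOME/logs" "$GITLAB_HOME/data"
```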

Install GitLab in Docker
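One way to launch the container is sketched below. The container name `gitlab`, the hostname gitlab.aj.local and ports 20080 and 20022 come from the commands used elsewhere in this post. The image tag and the `GITLAB_HOME` location are assumptions.

```shell
# Assumptions: GITLAB_HOME holds the config/logs/data directories, and
# gitlab/gitlab-ce:latest stands in for whichever edition and version you run.
GITLAB_HOME="${GITLAB_HOME:-$HOME/gitlab}"
GITLAB_HOSTNAME="gitlab.aj.local"
GITLAB_HTTP_PORT=20080
GITLAB_SSH_PORT=20022

# Start GitLab in the background with HTTP and SSH exposed on the host.
start_gitlab() {
  docker run --detach \
    --name gitlab \
    --hostname "$GITLAB_HOSTNAME" \
    --publish "${GITLAB_HTTP_PORT}:80" \
    --publish "${GITLAB_SSH_PORT}:22" \
    --volume "$GITLAB_HOME/config:/etc/gitlab" \
    --volume "$GITLAB_HOME/logs:/var/log/gitlab" \
    --volume "$GITLAB_HOME/data:/var/opt/gitlab" \
    --shm-size 256m \
    gitlab/gitlab-ce:latest
}
```

After calling `start_gitlab`, the first boot can take several minutes. `docker logs -f gitlab` shows progress.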

Create /etc/hosts entry
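A hedged sketch of the hosts entry, assuming GitLab is reachable on this machine's loopback address and using the gitlab.aj.local hostname from this walkthrough:

```shell
# Assumption: the GitLab container is published on the local machine.
HOSTS_ENTRY="127.0.0.1 gitlab.aj.local"

# Append the entry only if the hostname is not already present (requires sudo).
add_hosts_entry() {
  grep -q "gitlab.aj.local" /etc/hosts || echo "$HOSTS_ENTRY" | sudo tee -a /etc/hosts
}
```

The `grep` guard keeps the function safe to run more than once without duplicating the line.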

Get the initial root password from the following command

docker exec -it gitlab grep 'Password:' /etc/gitlab/initial_root_password

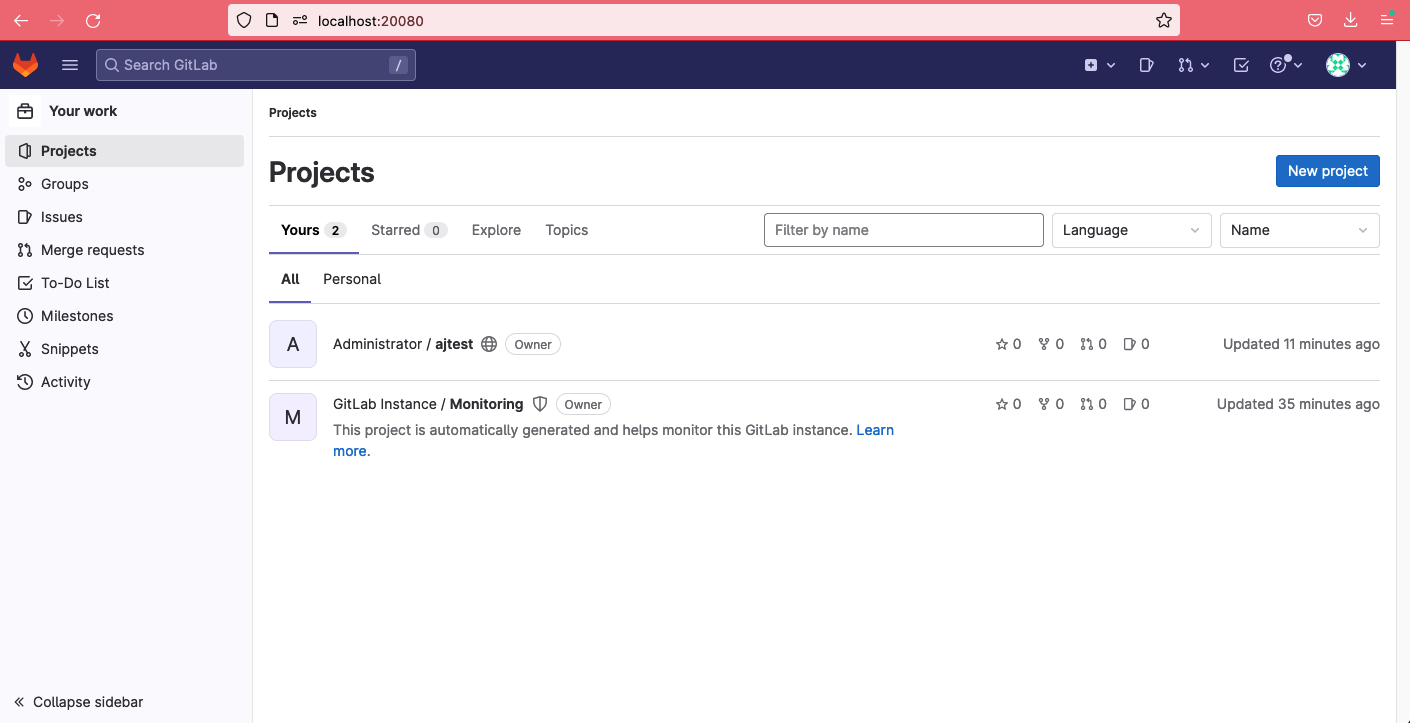

Go to http://localhost:20080

Update credentials to the following

User: root

Password: minio123

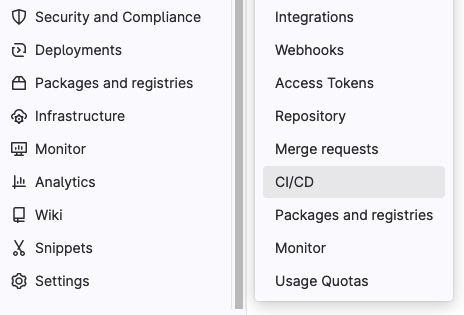

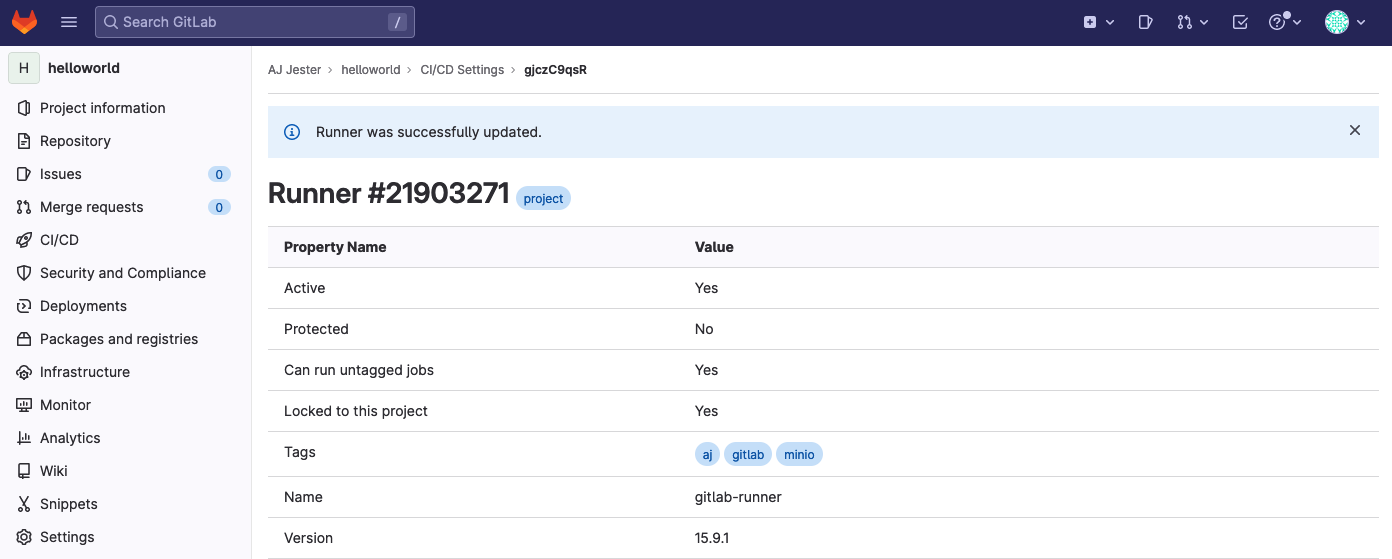

Go to Project, in this case Administrator / ajtest -> Settings -> CI/CD

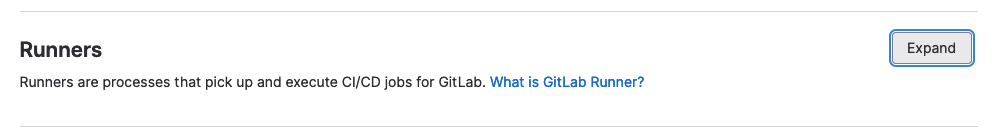

Expand Runners

Go to Project Runners and click on “show runner installation instructions”. Run the following command

gitlab-runner register --url http://localhost:20080/ --registration-token TOKEN

When it asks for “enter an executor” pick “shell” as seen below

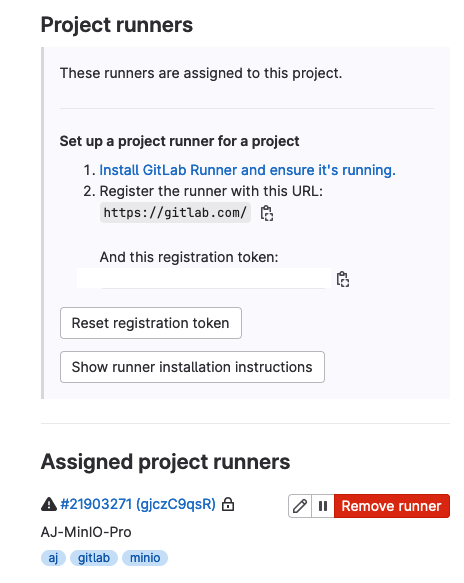

After a few minutes you should see your locally installed runner in the Project runners dashboard under Assigned project runners

You can also update the runner settings remotely in the http://localhost:20080 project settings.

To git clone the project repo over SSH, set the SSH port for your deployment (mine is gitlab.aj.local) to 20022 so that it matches the Docker container's hostname and externally exposed port.

Git clone the project repo
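As an illustration, the clone URL can be assembled from the hostname and SSH port above. The project path `root/ajtest` is an assumption based on the "Administrator / ajtest" project shown earlier, so adjust it to your namespace.

```shell
# Assumption: the project lives under the root user's namespace as "ajtest".
GITLAB_HOST="gitlab.aj.local"
GITLAB_SSH_PORT=20022
PROJECT_PATH="root/ajtest"

# The ssh:// form lets us specify the non-default SSH port explicitly.
CLONE_URL="ssh://git@${GITLAB_HOST}:${GITLAB_SSH_PORT}/${PROJECT_PATH}.git"

clone_project() {
  git clone "$CLONE_URL"
}
```

`clone_project` requires that your SSH public key has been added to your GitLab user profile.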

Launch a MinIO Docker container and expose the API and Console ports. Be sure the -v volume path exists locally, change the path if need be.
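A sketch of the launch command follows. The API port 20090 matches the alias step in this post. The console port 20091, the data path and the `minioadmin` credentials are placeholders, so pick your own for anything beyond a local test.

```shell
# Assumptions: data path, console port and credentials are placeholders.
MINIO_DATA="${MINIO_DATA:-$HOME/minio/data}"
MINIO_API_PORT=20090
MINIO_CONSOLE_PORT=20091
MINIO_ROOT_USER="${MINIO_ROOT_USER:-minioadmin}"
MINIO_ROOT_PASSWORD="${MINIO_ROOT_PASSWORD:-minioadmin}"

# Start MinIO with the S3 API on 9000 and the Console on 9001 inside the container.
start_minio() {
  mkdir -p "$MINIO_DATA"
  docker run --detach \
    --name minio \
    --publish "${MINIO_API_PORT}:9000" \
    --publish "${MINIO_CONSOLE_PORT}:9001" \
    --volume "$MINIO_DATA:/data" \
    --env "MINIO_ROOT_USER=$MINIO_ROOT_USER" \
    --env "MINIO_ROOT_PASSWORD=$MINIO_ROOT_PASSWORD" \
    quay.io/minio/minio server /data --console-address ":9001"
}
```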

Install MinIO Client (mc)

Configure a MinIO alias. If you used the above Docker command, the port should be 20090
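The mc install and alias steps might look like this. Homebrew, the alias name `myminio`, the credentials and the `gitlab-cache` bucket are all assumptions made for illustration.

```shell
# Assumptions: Homebrew is available; alias, credentials and bucket are placeholders.
MINIO_URL="http://localhost:20090"
MINIO_ALIAS="myminio"
MINIO_ROOT_USER="${MINIO_ROOT_USER:-minioadmin}"
MINIO_ROOT_PASSWORD="${MINIO_ROOT_PASSWORD:-minioadmin}"

# Install the MinIO Client from MinIO's Homebrew tap.
install_mc() {
  brew install minio/stable/mc
}

# Register the alias, then create a bucket for the runner cache if it is missing.
configure_alias() {
  mc alias set "$MINIO_ALIAS" "$MINIO_URL" "$MINIO_ROOT_USER" "$MINIO_ROOT_PASSWORD"
  mc mb --ignore-existing "$MINIO_ALIAS/gitlab-cache"
}
```

Once `configure_alias` succeeds, `mc ls myminio` should list the new bucket.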

Open the GitLab Runner configuration file

$ vi /Users/aj/.gitlab-runner/config.toml

GitLab Runner configuration
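A minimal sketch of the cache section a runner needs in order to use an S3-compatible backend. It writes the fragment to a scratch file rather than touching your real config.toml. The bucket name and credentials are placeholders, and `Insecure = true` assumes MinIO is served over plain HTTP, as in this local setup.

```shell
# Writes only the cache section to a scratch file for review; merge it by hand
# under the matching [[runners]] entry in your config.toml.
# Assumptions: bucket name and credentials are placeholders.
FRAGMENT="${TMPDIR:-/tmp}/runner-cache.toml"

cat > "$FRAGMENT" <<'EOF'
  [runners.cache]
    Type = "s3"
    Shared = true
    [runners.cache.s3]
      ServerAddress = "localhost:20090"
      AccessKey = "minioadmin"
      SecretKey = "minioadmin"
      BucketName = "gitlab-cache"
      Insecure = true
EOF

cat "$FRAGMENT"
```

`Shared = true` lets multiple runners reuse the same cache, which is the point of moving it off local disk. Restart the runner after editing the real file.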

In order to use MinIO as the S3-compatible backend, let’s set up some default GitLab object store connection information such as MinIO endpoint, access key and secret key.
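The connection details could be expressed with GitLab's consolidated object storage settings in gitlab.rb, as sketched below. This may differ from the exact form used in the original setup. The endpoint assumes Docker Desktop's `host.docker.internal` name so the GitLab container can reach MinIO on host port 20090, and the credentials are placeholders.

```shell
# Writes a gitlab.rb fragment to a scratch file for review.
# Assumptions: endpoint hostname and credentials are placeholders.
FRAGMENT="${TMPDIR:-/tmp}/gitlab-object-store-connection.rb"

cat > "$FRAGMENT" <<'EOF'
gitlab_rails['object_store']['enabled'] = true
gitlab_rails['object_store']['connection'] = {
  'provider' => 'AWS',
  'region' => 'us-east-1',
  'endpoint' => 'http://host.docker.internal:20090',
  'path_style' => true,
  'aws_access_key_id' => 'minioadmin',
  'aws_secret_access_key' => 'minioadmin'
}
EOF

cat "$FRAGMENT"
```

The `'provider' => 'AWS'` value selects the S3 API client, and `path_style` keeps bucket names in the URL path instead of the hostname, which suits a single-host MinIO endpoint. After merging the fragment into /etc/gitlab/gitlab.rb, apply it with `docker exec gitlab gitlab-ctl reconfigure`.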

Below are several different ways you can integrate MinIO with GitLab.

Artifacts: GitLab lets you manage artifacts and store them on MinIO, freeing local resources and increasing developer efficiency. When jobs are run, they may output artifacts such as a binary or tar.gz files which are stored locally on disk. You can save these job artifacts in MinIO to enable replication of data and store them on multiple disks in case of a failure.

External Merge Requests: For performance optimization purposes, instead of fetching the diff for a merge request from source, GitLab can serve it from copies stored in a MinIO bucket.

LFS: Objects over a certain size cannot be committed into a Git repo as normal files; the push will be rejected. In this case you need the Git LFS protocol to store these large objects alongside the Git repository, and the LFS objects themselves can live in MinIO.

Uploads: Other than Git-related data, GitLab also needs a place to store data such as avatar uploads and attachments to comments and descriptions. These can be stored in the MinIO object store as well.

Pages: When you want to add additional documentation or code samples to GitLab, you don’t need a separate website to host this information and then link to it from the GitLab repository. Pages stored in the MinIO object store can be accessed directly when they are rendered to the end user.

Artifacts Configuration

External Merge Requests

LFS Configuration

Uploads Configuration

Pages Configuration
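The five configurations above can be sketched together using GitLab's consolidated object storage settings, with one bucket per object type. This is an illustration rather than the exact configuration used here: the bucket names are placeholders, and it assumes an object store connection to MinIO is already defined in gitlab.rb.

```shell
# Writes a gitlab.rb fragment to a scratch file for review.
# Assumptions: bucket names are placeholders and must already exist in MinIO.
FRAGMENT="${TMPDIR:-/tmp}/gitlab-object-store-buckets.rb"

cat > "$FRAGMENT" <<'EOF'
# External merge request diffs are off by default and must be enabled.
gitlab_rails['external_diffs_enabled'] = true

gitlab_rails['object_store']['objects']['artifacts']['bucket'] = 'gitlab-artifacts'
gitlab_rails['object_store']['objects']['external_diffs']['bucket'] = 'gitlab-mr-diffs'
gitlab_rails['object_store']['objects']['lfs']['bucket'] = 'gitlab-lfs'
gitlab_rails['object_store']['objects']['uploads']['bucket'] = 'gitlab-uploads'
gitlab_rails['object_store']['objects']['pages']['bucket'] = 'gitlab-pages'
EOF

cat "$FRAGMENT"
```

Using a separate bucket per object type keeps lifecycle, replication and retention policies independent, for example expiring old artifacts without touching LFS objects.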

Conclusion

Developers love MinIO and they love GitLab. MinIO is easily configured as a basic installation that works as a shared cache for GitLab Runner. This ensures the cache is used reliably when multiple jobs run concurrently. We believe that anyone running a self-hosted GitLab installation will benefit from the addition of MinIO.

Why? Because both are simple yet powerful tools that improve coding efficiency. GitLab-based CI/CD generates large volumes of data, and all of this data requires a sophisticated, cloud-native storage system so enterprises can make the most of it. MinIO complements GitLab with object storage that is S3 API compatible, performant, seamlessly scalable to hundreds of PBs, software-defined, multi-cloud, secure and resilient. Adding MinIO to GitLab frees DevOps teams from drudgery so they can focus on your business needs rather than on managing caches and waiting for them to upload or download.

In this post we’ve seen how you can configure GitLab to store not only its cache in MinIO but also other data such as LFS objects and Pages. Putting them on MinIO lets you use its underlying features, including replication, encryption and tiering. If you have any questions about the GitLab integration, feel free to reach out to us on Slack!