The Most Powerful Version of MinIO Ever - Introducing AIStor

Today we announced the launch of AIStor, a new release that reflects our singular focus on building the world’s finest object store for AI/ML workloads. AIStor represents a year of accelerated learning from our biggest customers. MinIO is operating at a different level than the rest of the industry. We have multiple clients with more than 100 PiB and some north of 1 EiB. The class of challenges you experience at scale is not something you can project; you have to experience it. If you don’t have the right architecture to start with, you will never overcome these challenges. Examples of getting that architecture right include the chipset optimizations we have done for Intel, AMD and Arm, and our decision to store metadata atomically with the object rather than in a separate database. Third-party metadata databases break down at scale. Add to that our highly efficient Go-based implementations of erasure coding, bit-rot protection and encryption.
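Erasure coding shows why implementation efficiency matters at this scale. Every object is split into data and parity shards, so the parity choice directly sets how much raw capacity an exabyte needs. The sketch below is a back-of-the-envelope calculator that assumes a Reed-Solomon style layout of K data plus M parity shards per erasure set. The 12+4 split is purely illustrative, and the function names are our own. They are not part of any MinIO API.

```python
from fractions import Fraction


def erasure_profile(data_shards: int, parity_shards: int) -> dict:
    """Summarize a K-data + M-parity erasure-coded layout (illustrative only)."""
    if data_shards < 1 or parity_shards < 0:
        raise ValueError("need at least one data shard and a non-negative parity count")
    total = data_shards + parity_shards
    return {
        "drives_per_set": total,
        # Fraction of raw capacity that holds object data.
        "efficiency": Fraction(data_shards, total),
        # Raw bytes stored per usable byte.
        "overhead": Fraction(total, data_shards),
        # Shards that can be lost while the object can still be reconstructed.
        "shard_losses_tolerated": parity_shards,
    }


def raw_capacity_needed(usable_bytes: int, data_shards: int, parity_shards: int) -> int:
    """Raw bytes required to hold usable_bytes, rounded up (ignores metadata)."""
    total = data_shards + parity_shards
    # Integer ceiling division, so we never under-provision.
    return -(-usable_bytes * total // data_shards)


EIB = 2**60
PIB = 2**50

if __name__ == "__main__":
    profile = erasure_profile(12, 4)
    print(profile["efficiency"], profile["overhead"])  # 3/4 4/3
    raw = raw_capacity_needed(EIB, 12, 4)
    print(f"{raw / PIB:.1f} PiB raw for 1 EiB usable")
```

With 12 data and 4 parity shards, three quarters of raw capacity holds data. That means 1 EiB of usable capacity needs roughly 1,365 PiB of raw drives, before metadata and headroom.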

MinIO made the choice to invest in these AI workloads because we knew MinIO could scale. Even so, we were surprised by the challenges that emerged at 1 EiB, and by where they emerged: memory, networking, replication and load balancing. Because we had the right foundation, we have been able to overcome these challenges quickly and let our customers continue to grow their data infrastructure unabated. We are immensely proud of these achievements, and the resulting capabilities, optimizations and features are all in the new AIStor, available to our commercial customers.

By focusing our development on this class of workload, we enhance every other workload we support, including advanced analytics, modern data lakehouses, Hadoop replacement, application workloads, and traditional archival and backup.

For most storage vendors, object storage is a checkbox exercise. They are focused on the past, with file and block. The future is object storage. That is why we do it exclusively. Vendors that are focused on file and block will never let go of their technical debt and so they will never deliver a great object store. Full stop.

The future is about massive namespaces: a single location for the entirety of the enterprise’s AI data. Not all of the enterprise’s data, but all of its AI data, which is to say the vast majority of it. You will continue to have infrastructure that supports important but relatively niche systems-of-record data, and it may be file or block based. But, as in the previous decade, those workloads are in the hundreds of TiBs. That data will be instantaneously available to the AI data store, and that store will be an object store. Just ask the hyperscalers.

The New Stuff

There are several core pillars in our AIStor release with a robust roadmap of additional features that both we and our customers have generated. The core features each have their own dedicated blog posts and we encourage you to read them:

promptObject - This new API represents an important extension of the S3 API. It enables applications, developers and administrators to “talk” to unstructured objects in the same way one would engage an LLM. This means application developers can significantly expand the capabilities of their applications without needing domain-specific knowledge of RAG pipelines or vector databases. It is available in the Global Console and through MinIO’s SDK. The potential applications of this extension are almost limitless: the object storage world moves from a PUT and GET paradigm to a PUT and PROMPT paradigm. Applications can invoke promptObject through function calling with additional logic, and can chain functions to address multiple objects at the same time.

This will dramatically simplify AI application development while simultaneously making it more powerful.

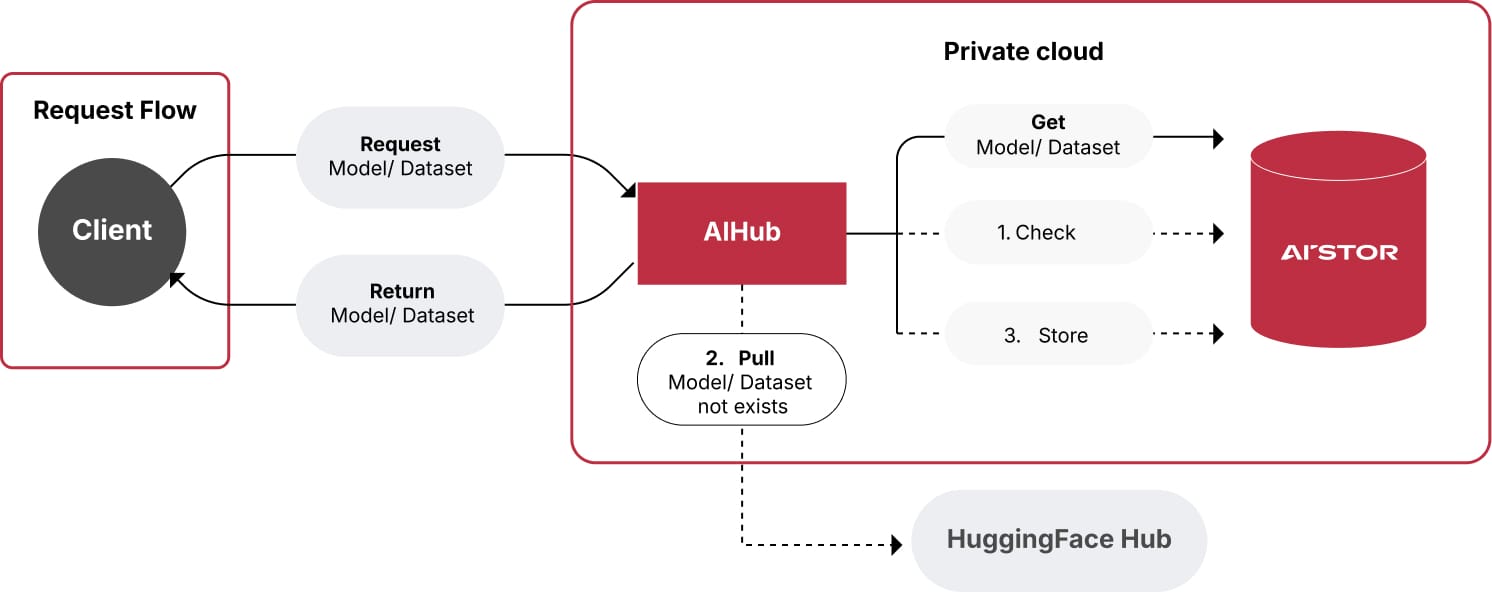

MinIO AIHub - a private repository for storing AI models and datasets directly on AIStor. AIHub is API-compatible with Hugging Face, so enterprises can create their own data and model repositories on the private cloud or in air-gapped environments without changing a single line of code. A private data hub eliminates the risk of developers leaking sensitive datasets or models. Other integrations could include deploying fine-tuned models using vLLM.
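The “without changing a single line of code” claim works because Hugging Face clients resolve the hub's address from configuration rather than hard-coding it. The sketch below assumes an AIHub deployment can be addressed like any Hugging Face Hub endpoint. The `huggingface_hub` library reads the `HF_ENDPOINT` and `HF_TOKEN` environment variables, so you can redirect an existing workload by launching it with a different environment. The `hub_env` helper, the `finetune.py` script and the `aihub.example.internal` URL are hypothetical.

```python
import os
from typing import Dict, Mapping, Optional


def hub_env(
    endpoint: str,
    token: Optional[str] = None,
    base: Optional[Mapping[str, str]] = None,
) -> Dict[str, str]:
    """Return a copy of an environment that points Hugging Face clients at another hub.

    huggingface_hub reads HF_ENDPOINT and HF_TOKEN from the environment, so the
    application code itself does not change; only the process environment does.
    """
    env = dict(os.environ if base is None else base)
    # Strip a trailing slash so URL joins inside the client stay clean.
    env["HF_ENDPOINT"] = endpoint.rstrip("/")
    if token is not None:
        env["HF_TOKEN"] = token
    return env


# Hypothetical usage: launch an unchanged training script against a private hub.
#   import subprocess
#   subprocess.run(
#       ["python", "finetune.py"],
#       env=hub_env("https://aihub.example.internal", token="..."),
#       check=True,
#   )
```

The sketch builds an environment for a child process instead of mutating `os.environ`. This is because `huggingface_hub` typically reads `HF_ENDPOINT` when it is imported, so setting it later in an already-running process may not take effect.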

Additional Protocol Support - AIStor adds support for S3 over RDMA. RDMA offers low latency and high throughput, so MinIO lets customers take full advantage of their investments in 400GbE, 800GbE and faster Ethernet for S3 object access on MinIO servers. This delivers the additional performance needed to keep the compute layer fully utilized while reducing CPU utilization. S3 over RDMA is available to customers as a private preview.
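To put those link speeds in perspective, a 400GbE port carries at most 50 GB/s and an 800GbE port carries at most 100 GB/s. The arithmetic below gives a lower bound on how long it takes to stream a dataset to a single client at line rate. It is an illustrative sketch that ignores protocol overhead unless you pass an `efficiency` factor, and it makes no claim about measured S3-over-RDMA throughput. The helper names are hypothetical.

```python
def line_rate_bytes_per_s(gbit_per_s: float, ports: int = 1) -> float:
    """Raw Ethernet line rate in bytes/s (Gbit/s uses decimal units)."""
    return gbit_per_s * 1e9 / 8 * ports


def min_transfer_seconds(
    num_bytes: int, gbit_per_s: float, ports: int = 1, efficiency: float = 1.0
) -> float:
    """Lower bound on time to move num_bytes; efficiency < 1 models protocol overhead."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return num_bytes / (line_rate_bytes_per_s(gbit_per_s, ports) * efficiency)


if __name__ == "__main__":
    TIB = 2**40
    for rate in (100, 400, 800):
        secs = min_transfer_seconds(100 * TIB, rate)
        print(f"{rate}GbE: {line_rate_bytes_per_s(rate) / 1e9:.0f} GB/s, "
              f"100 TiB in >= {secs / 60:.1f} min")
```

At these rates the CPU cost of moving each byte starts to matter, and that is the case the paragraph makes for RDMA.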

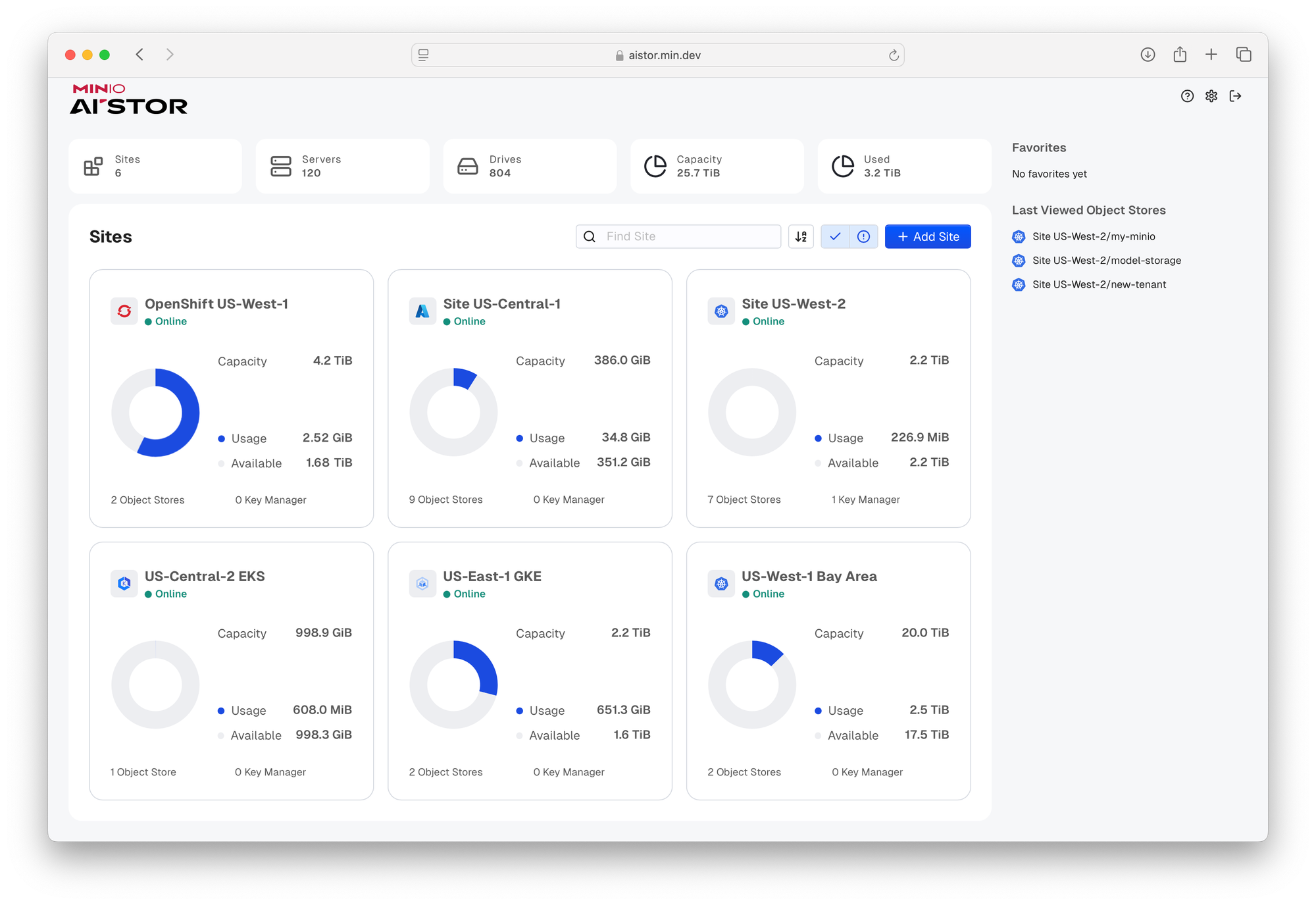

Updated Global Console - a completely redesigned user interface for MinIO that provides extensive capabilities around IAM, ILM, load balancing, firewall, security, caching, replication and orchestration, all through a single pane of glass. The updated console features a new MinIO Kubernetes operator that further simplifies the management of large-scale data infrastructure with hundreds of servers and tens of thousands of drives.

Again, these represent pillars of this release, but they stand on an incredible foundation. MinIO’s object store is the most widely deployed object store in the world, with more than 6.2M deployments globally and more than 2B Docker pulls to date. The reason for that success is that MinIO doesn’t just have every imaginable feature; it has the industry’s best implementation of every imaginable feature, each of which is critical to building a scalable, performant and secure object store for AI: Replication, Encryption, Object Immutability, Identity + Access Management, Information Lifecycle Management, Versioning, Key Management Server, Catalog, Firewall, Cache, Observability, Load Balancing and, of course, S3 Compatibility.

While these represent features, they are only part of the overall offering, which is underpinned by direct-to-engineering support, amazing SLAs, the option of an instant SLA, the SUBNET support portal, benchmarking tools, health check tools and a myriad of other software capabilities and benefits. If you don’t believe us, check out our Gartner Peer Insights reviews.

Bringing it Home

We are in a different phase when it comes to data and data infrastructure. The challenges are fundamentally distinct from what the enterprise is accustomed to. The reasons are scale and the parallel requirement to deliver performance at scale.

This scale is simply breaking older technologies, which is why so many of them are trying to rebrand themselves away from storage. They know they can’t scale, so they become “management” or “database” offerings where they can ostensibly deliver value in the 1-10 PiB range. This scale, however, is exactly what object storage was built for. That is why MinIO, AWS, GCP and Azure are all focused on object stores, not file and block stores. MinIO, AWS, GCP and Azure know what it is like to operate at this scale, and we collectively have the customer rosters to prove it. Managing one exabyte is fundamentally different from managing one petabyte. The same cannot be said of going from one terabyte to one petabyte; those aren’t that different.

As one customer put it:

“Managing mobile device exhaust, data that is emitted from the device during application testing, is often highly challenging because applications run on different platforms, operating systems, versions, and even form factors,” said Frank Moyer, CTO of Kobiton. “MinIO enables us to manage and store massive scales of device exhaust. MinIO’s speed and fault tolerance is critical to our ability to conduct mobile application testing, helping our hundreds of testers navigate thousands of test scripts using AI and automation. AIStor will propel our AI-based testing solution to the next level of high performance systems.”

We tell our customers to think big. We have the architecture to support you. Other storage vendors encourage the scarcity mentality. That will not play in the age of AI.

So let’s get started.

Reach out to us at hello@min.io for a demo of AIStor and we can start thinking big together.