Introducing DirectPV

DirectPV is a CSI driver for Direct Attached Storage (DAS). At the most basic level, it is a distributed persistent volume manager, not a storage system like SAN or NAS. DirectPV is used to discover, format, mount, schedule and monitor drives across servers.

Before we get into the architecture of DirectPV, let’s address why we needed to build it.

History

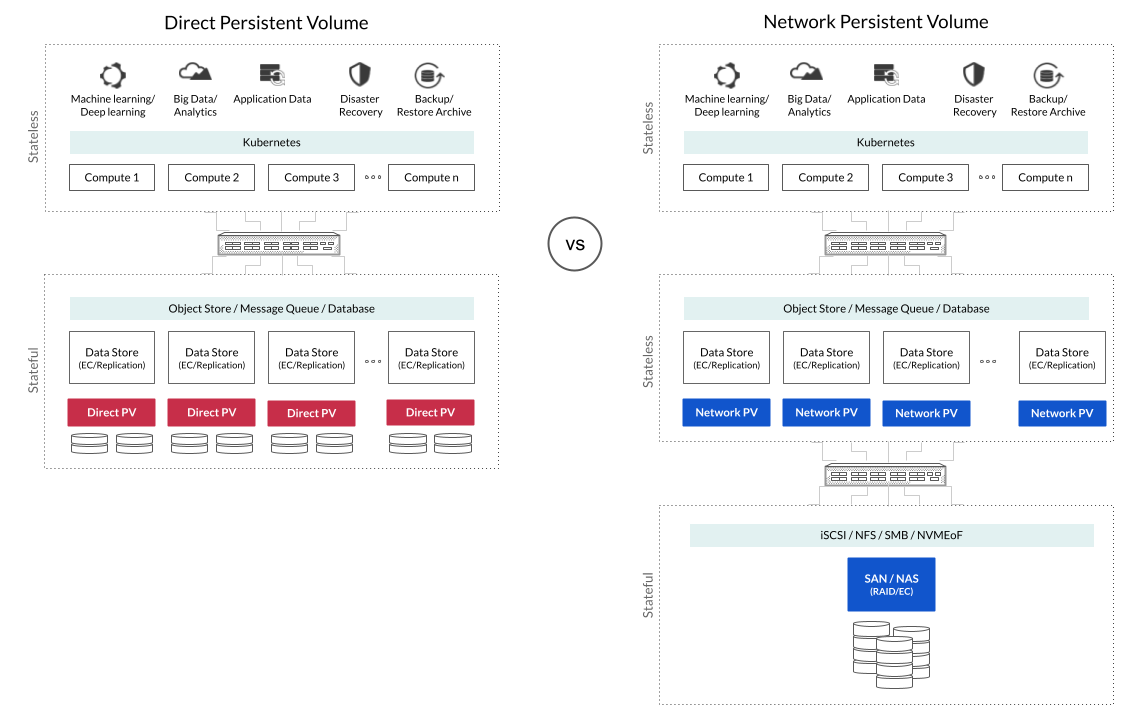

Modern distributed data stores such as object stores, databases and message queues, along with AI/ML and analytics workloads, are designed to run on commodity off-the-shelf servers with locally attached drives. Since they already take care of critical storage functions like erasure coding or replication and high availability, all they need is direct local access to dumb commodity drives in a JBOD (Just a Bunch of Drives) configuration. Using network attached storage (NAS) and storage area network (SAN) hardware appliances for these distributed data services in a Kubernetes environment is both inefficient and a Kubernetes anti-pattern. You end up replicating already replicated data while introducing a second network hop between the drives and the host. Neither is necessary. This is true even for hyperconverged infrastructure.
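A back-of-the-envelope calculation makes the cost of replicating already replicated data concrete. The numbers are hypothetical: assume the data store keeps $r_{\text{app}} = 3$ copies of everything it writes (a common replication factor for systems such as Cassandra or Kafka), and the SAN or NAS array underneath mirrors every block, $r_{\text{array}} = 2$. The two redundancy factors multiply:

$$
\frac{\text{raw capacity}}{\text{logical capacity}} = r_{\text{app}} \times r_{\text{array}} = 3 \times 2 = 6
$$

On a JBOD the same data store needs only $r_{\text{app}} = 3$ bytes of raw capacity per logical byte, so in this example the array's redundancy doubles the raw footprint for protection the data store already provides.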

LocalPV vs NetworkPV

Early on, Kubernetes introduced primitive support for locally attached storage via the hostPath and Local Persistent Volume (LocalPV) features. However, since the introduction of the Container Storage Interface (CSI) driver model, extending this functionality outside of core Kubernetes has been left to storage vendors.

The CSI API did not favor either the local PV or the network PV approach. It was written in abstract terms, as an API should be. At the time, the persistent volume market was dominated by SAN vendors. This was simply a function of the fact that, historically, storage vendors were all file and block vendors. Even today there are far more SAN and NAS vendors than object storage vendors, despite the growth of object storage for primary workloads. This will work itself out over time. But we digress.

Because there were so many more SAN and NAS vendors, and because their products aren't cloud native by design (POSIX vs. S3/RESTful APIs), they worked hard to promote CSI as a bridge solution.

In the application world, SAN/NAS vendors spent a disproportionate amount of time talking about disaggregation. In their narrative, disaggregation meant separating stateless microservices and containers from stateful services such as data stores. Examples of such data stores include MinIO, Cassandra and Kafka.

The storage vendors rushed to provide Kubernetes CSI drivers for their SAN and NAS hardware appliances, but they had no vested interest in implementing a CSI driver that would cannibalize their own products. VMware was the first to recognize this problem, and introduced vSAN Direct to address the LocalPV gap. However, this solution is only available to VMware Tanzu customers.

The second to follow was OpenEBS, which provides both local (LocalPV) and network persistent volumes. Others, like Longhorn, are planning to support LocalPV functionality (https://github.com/longhorn/longhorn/issues/3957).

Introducing DirectPV

Because MinIO was built from scratch to be cloud-native, many of our MinIO deployments run on Kubernetes. We routinely deal with JBODs hosting 12 to 108 drives per chassis. While we liked the simplicity of Kubernetes-native LocalPV, it did not scale operationally. Volumes had to be pre-provisioned statically, and there was no easy way to manage large numbers of drives. Further, LocalPV lacked the sophistication of the CSI driver model.

Taking MinIO’s minimalist design philosophy into account, we sought a dynamic LocalPV CSI driver with useful volume management functions like drive discovery, formatting, quotas and monitoring. There wasn't one on the market, so we did what we always do: we built one.

Hence the introduction of Direct Persistent Volume (DirectPV): https://min.io/directpv.

DirectPV is a Kubernetes Container Storage Interface (CSI) driver for Directly Attached Storage (DAS). Once the local volume is provisioned and attached to the container, DirectPV doesn’t get in the way of application I/O. All reads and writes are performed directly on the volume, so you can expect performance to match that of the local drives. Distributed stateful services like MinIO, Cassandra, Kafka, Elastic, MongoDB, Presto, Trino, Spark and Splunk would benefit significantly from the DirectPV driver for their hot tier needs. For long-term persistence, they may use MinIO object storage as a warm tier. MinIO would in turn use DirectPV to write the data to the persistent storage media.

This effectively frees the industry from legacy SAN and NAS based storage systems.

DirectPV Internals

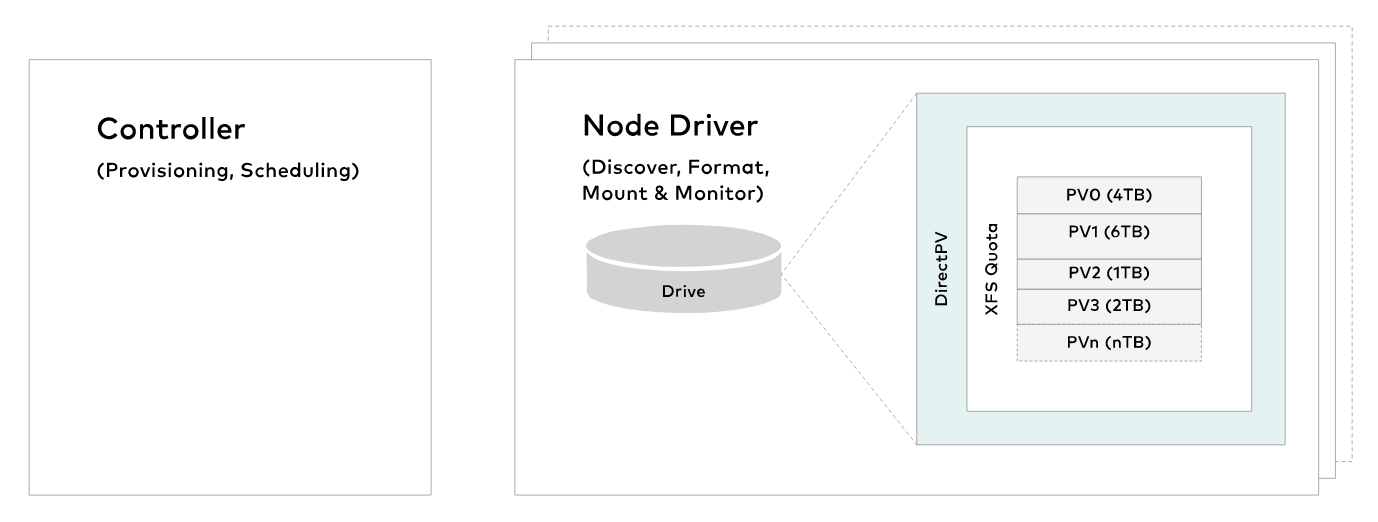

Controller

When a volume claim is made, the controller provisions volumes uniformly from a pool of free drives. DirectPV is aware of the pod's affinity constraints and allocates volumes from drives local to the pod. Note that only one active instance of the controller runs per cluster.
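The controller's placement decision can be pictured with a small Go sketch. This is a hypothetical, simplified policy, not DirectPV's actual scheduling code: the Drive type and pickDrive function are invented for illustration, the target node is assumed to have already been chosen by the Kubernetes scheduler, and "uniformly" is interpreted here as "prefer the eligible drive with the fewest volumes, then the one with the most free space".

```go
package main

import (
	"errors"
	"fmt"
)

// Drive is a hypothetical, simplified view of a drive under management.
type Drive struct {
	Node      string
	Path      string
	FreeBytes int64
	Volumes   int
}

// pickDrive selects a drive on the requested node that can hold the volume.
// Among eligible drives it prefers the one with the fewest volumes, breaking
// ties by the most free capacity, so volumes spread evenly across drives.
func pickDrive(drives []Drive, node string, size int64) (*Drive, error) {
	var best *Drive
	for i := range drives {
		d := &drives[i]
		if d.Node != node || d.FreeBytes < size {
			continue // not local to the pod, or not enough room
		}
		if best == nil || d.Volumes < best.Volumes ||
			(d.Volumes == best.Volumes && d.FreeBytes > best.FreeBytes) {
			best = d
		}
	}
	if best == nil {
		return nil, errors.New("no free drive on node " + node)
	}
	// Record the allocation so the next claim sees the updated state.
	best.FreeBytes -= size
	best.Volumes++
	return best, nil
}

func main() {
	const gi = int64(1) << 30
	drives := []Drive{
		{Node: "directpv-1", Path: "/dev/sda", FreeBytes: 100 * gi},
		{Node: "directpv-1", Path: "/dev/sdb", FreeBytes: 100 * gi},
		{Node: "directpv-2", Path: "/dev/sda", FreeBytes: 100 * gi},
	}
	// Four 10 GiB claims from pods scheduled on directpv-1.
	for i := 0; i < 4; i++ {
		d, err := pickDrive(drives, "directpv-1", 10*gi)
		if err != nil {
			fmt.Println(err)
			return
		}
		fmt.Println(d.Node, d.Path)
	}
}
```

With two empty drives on directpv-1, the four claims alternate between /dev/sda and /dev/sdb, and nothing lands on directpv-2 because it is not local to the pods.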

Node Driver

The node driver implements the volume management functions, such as discovery, formatting, mounting and monitoring of drives on the nodes. One instance of the node driver runs on each storage server.
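As a rough illustration of the discovery step only, the Go sketch below reads device entries in the Linux /proc/partitions format and skips loop and RAM devices. It is a toy built on assumptions: DirectPV's real discovery logic is more involved and is not reproduced here, and the BlockDevice type and parsePartitions function are hypothetical. A hard-coded sample keeps the output deterministic; on a Linux node you could feed it the contents of /proc/partitions instead.

```go
package main

import (
	"bufio"
	"fmt"
	"strconv"
	"strings"
)

// BlockDevice is a hypothetical record for a candidate drive.
type BlockDevice struct {
	Name      string
	SizeBytes int64
}

// parsePartitions reads text in the /proc/partitions format
// ("major minor #blocks name", sizes in 1 KiB blocks) and returns the
// devices it lists, skipping the header and virtual loop/ram devices.
func parsePartitions(text string) []BlockDevice {
	var devs []BlockDevice
	sc := bufio.NewScanner(strings.NewReader(text))
	for sc.Scan() {
		f := strings.Fields(sc.Text())
		if len(f) != 4 {
			continue // blank or malformed line
		}
		blocks, err := strconv.ParseInt(f[2], 10, 64)
		if err != nil {
			continue // header line: "#blocks" is not a number
		}
		name := f[3]
		if strings.HasPrefix(name, "loop") || strings.HasPrefix(name, "ram") {
			continue // virtual devices are never candidates
		}
		devs = append(devs, BlockDevice{Name: name, SizeBytes: blocks * 1024})
	}
	return devs
}

func main() {
	// Hypothetical sample in /proc/partitions format.
	const sample = `major minor  #blocks  name

   7        0      63448 loop0
   8        0  976762584 sda
   8        1     524288 sda1
 259        0 1953514584 nvme0n1
`
	for _, d := range parsePartitions(sample) {
		fmt.Printf("/dev/%s %d bytes\n", d.Name, d.SizeBytes)
	}
}
```

Note that the output lists the partition sda1 next to its parent disk sda; a real discovery step would also have to relate partitions to disks and check for existing file systems before offering a drive for formatting.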

UI

Storage administrators can use the kubectl CLI plugin to select, manage and monitor drives. An integrated web UI is currently under development.

Give it a Try!

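DirectPV is completely open source and installation is simple. It takes only two steps: install the DirectPV krew plugin, then use the plugin to install DirectPV in your cluster. Once both succeed, run the info command to confirm that the installation worked.

$ kubectl krew install directpv

$ kubectl directpv install

$ kubectl directpv info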

Next, list the drives DirectPV has discovered and format the ones you want to bring under DirectPV management. From then on, those drives are managed exclusively by DirectPV for Kubernetes containers.

$ kubectl directpv drives ls

$ kubectl directpv drives format --drives /dev/sd{a...f} --nodes directpv-{1...n}

Your drives are now ready to be provisioned dynamically as local PVs. Pods automatically gain affinity to the nodes that hold their matching volumes. To make a volume claim, set storageClassName to the DirectPV storage class (directpv-min-io) in a StatefulSet's volumeClaimTemplates.

Concluding Thoughts

You will notice the efficiency and simplicity of DirectPV immediately. An entire layer of complexity is removed. Applications speak directly to their data through the data store, eliminating the legacy SAN/NAS layer. DirectPV cuts out all the extra networking hardware requirements and all the additional management overhead.

DirectPV’s advantage lies in its minimalist design. As a result, it solves the LocalPV problem, and only that problem. We have no plans to add support for network PVs, because they are meant for legacy applications and several solutions on the market already address them.

GitHub: https://github.com/minio/directpv

Community Slack Channel: https://slack.min.io/