Using LXMIN in a MinIO Multi-Node Cluster

MinIO includes several ways to replicate data, and we give you the freedom to choose the best methodology to meet your needs. We’ve written about bucket-based active-active replication for replicating objects at the bucket level, batch replication for replicating specific objects in a bucket with more granular control, and other best practices for site-to-site replication. MinIO uses Erasure Coding to provide data redundancy and availability, reconstructing objects on the fly without any additional hardware or software. In addition, MinIO uses Bit Rot Protection to detect and repair corrupted objects on the fly, ensuring data integrity. Data on physical disks can be corrupted for several reasons: voltage spikes, bugs in firmware, and misdirected reads and writes, among other things. In other words, you never have to worry about backing up your data saved to MinIO as long as you set up your MinIO cluster following best practices.

When an entire node fails, it often takes several minutes to get it back online. This is because after it has been reprovisioned it has to be reconfigured, and configuration management tools such as Ansible or Puppet have to be run multiple times to achieve idempotency. In this case it would be very useful to have a snapshot of the VM with the configuration already baked in, so the node can come back online as soon as possible. Once the node is online, its data is rehydrated from the other nodes in the cluster, bringing the cluster back to a good state.

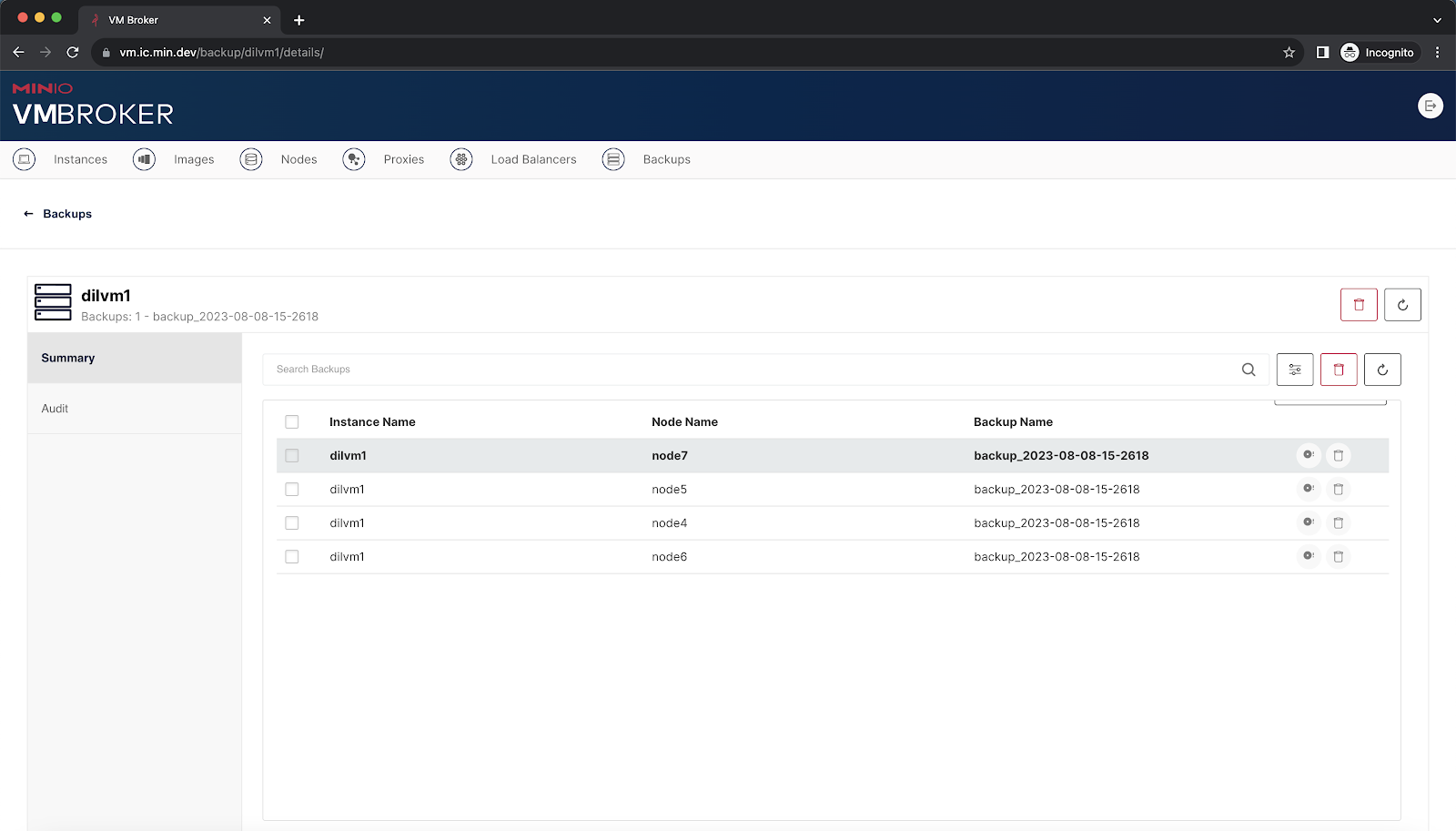

In this post let's take a look at how to set up multiple LXMIN servers backing up to a multi-node multi-drive MinIO cluster. We use LXC internally for our lab but you can use these concepts with any platform. We’ll set up each VM broker server with its own LXMIN service as a means of reducing load on the backup system. We recommend this setup because each request from a specific instance goes to the hypervisor where it's hosted. Moreover, a request for a specific backup goes to the node which is able to list it. In turn, the LXMIN services connect to a single MinIO endpoint which, in this example, allows access to a multi-node multi-drive MinIO cluster.

For each server in the cluster, obtain wildcard certificates issued by a signing authority. Create the following files on the server.

In this example, we used *.lab.domain.com certificates.

mkdir -p $HOME/.minio/certs/CAs

mkdir -p $HOME/.minio/certs_intel/CAs

vi $HOME/.minio/certs_intel/public.crt

vi $HOME/.minio/certs_intel/private.key

Download and prepare LXMIN on each of the MinIO servers.

mkdir $HOME/lxmin

cd $HOME/lxmin

wget https://github.com/minio/lxmin/releases/latest/download/lxmin-linux-amd64

chmod +x lxmin-linux-amd64

sudo mv lxmin-linux-amd64 /usr/local/bin/lxmin-aj

Create a systemd unit file on the MinIO servers for our LXMIN service. NOTE: The service may need to run as `User=root` and `Group=root` if it fails with the error `Unable get instance config`.

sudo vi /etc/systemd/system/lxmin-aj.service

###

[Unit]

Description=Lxmin

Documentation=https://github.com/minio/lxmin/blob/master/README.md

Wants=network-online.target

After=network-online.target

AssertFileIsExecutable=/usr/local/bin/lxmin-aj

[Service]

User=aj

Group=aj

EnvironmentFile=/etc/default/lxmin-aj

ExecStart=/usr/local/bin/lxmin-aj

# Let systemd restart this service always

Restart=always

# Specifies the maximum file descriptor number that can be opened by this process

LimitNOFILE=65536

# Disable timeout logic and wait until process is stopped

TimeoutStopSec=infinity

SendSIGKILL=no

[Install]

WantedBy=multi-user.target

###

To go with the LXMIN service we need a settings file which contains startup configuration for the LXMIN service. Change the values as required.

sudo vi /etc/default/lxmin-aj

###

## MinIO endpoint configuration

LXMIN_ENDPOINT=https://node5.lab.domain.com:19000

LXMIN_BUCKET="lxc-backup"

LXMIN_ACCESS_KEY="REDACTED"

LXMIN_SECRET_KEY="REDACTED"

LXMIN_NOTIFY_ENDPOINT="https://webhook.site/REDACTED"

## LXMIN address

LXMIN_ADDRESS=":8000"

## LXMIN server certificate and client trust certs.

LXMIN_TLS_CERT="$HOME/.lxmin/certs_intel/public.crt"

LXMIN_TLS_KEY="$HOME/.lxmin/certs_intel/private.key"

###

After creating the systemd unit and settings files, go ahead and enable the service so it starts when the VM boots. Once it's enabled you can start it and check its status via the logs.

sudo systemctl enable --now lxmin-aj.service

sudo systemctl start lxmin-aj.service

sudo systemctl status lxmin-aj.service

sudo journalctl -f -u lxmin-aj.service

The following commands are also useful if you want to disable the service during boot, stop or restart it.

sudo systemctl disable lxmin-aj.service

sudo systemctl stop lxmin-aj.service

sudo systemctl restart lxmin-aj.service

Next let’s download and prepare MinIO on the instances.

cd $HOME

mkdir minio

cd $HOME/minio

wget https://dl.min.io/server/minio/release/linux-amd64/minio

chmod +x minio

sudo mv minio /usr/local/bin/minio-aj

For each MinIO server in the cluster, obtain wildcard certificates issued by a signing authority.

Copy them to the following files on the server.

NOTE: Be sure to update the SANs (Subject Alternative Names) below with the IPs of the MinIO nodes in the cluster so the certificates get accepted.

mkdir -p $HOME/.minio/certs/CAs

wget https://github.com/minio/certgen/releases/latest/download/certgen-linux-amd64

mv certgen-linux-amd64 certgen

chmod +x certgen

./certgen -host "127.0.0.1,localhost,<node-1-IP>,<node-2-IP>,<node-3-IP>,<node-4-IP>"

mv public.crt $HOME/.minio/certs/public.crt

mv private.key $HOME/.minio/certs/private.key

cat $HOME/.minio/certs/public.crt | openssl x509 -text -noout

Create a systemd unit file on the MinIO servers for our MinIO service.

sudo vi /etc/systemd/system/minio-aj.service

###

[Unit]

Description=MinIO

Documentation=https://min.io/docs/minio/linux/index.html

Wants=network-online.target

After=network-online.target

AssertFileIsExecutable=/usr/local/bin/minio-aj

[Service]

WorkingDirectory=/usr/local

User=aj

Group=aj

ProtectProc=invisible

EnvironmentFile=-/etc/default/minio-aj

ExecStartPre=/bin/bash -c "if [ -z \"${MINIO_VOLUMES}\" ]; then echo \"Variable MINIO_VOLUMES not set in /etc/default/minio-aj\"; exit 1; fi"

ExecStart=/usr/local/bin/minio-aj server $MINIO_OPTS $MINIO_VOLUMES

# Let systemd restart this service always

Restart=always

# Specifies the maximum file descriptor number that can be opened by this process

LimitNOFILE=65536

# Specifies the maximum number of threads this process can create

TasksMax=infinity

# Disable timeout logic and wait until process is stopped

TimeoutStopSec=infinity

SendSIGKILL=no

[Install]

WantedBy=multi-user.target

# Built for ${project.name}-${project.version} (${project.name})

###

Let's open up the MinIO default configuration file and add the following values

sudo vi /etc/default/minio-aj

###

# Allow drive paths in your home directory

MINIO_CI_CD=1

# Set the hosts and volumes MinIO uses at startup

# The command uses MinIO expansion notation {x...y} to denote a

# sequential series.

#

# The following example covers four MinIO hosts

# with 2 drives each at the specified hostname and drive locations.

# The command includes the port that each MinIO server listens on

# (default 9000)

MINIO_VOLUMES="https://node{4...7}.lab.domain.com:19000/home/aj/disk{0...1}/minio"

#MINIO_VOLUMES="https://65.49.37.{20...23}:19000/home/aj/disk{0...1}/minio"

# Set all MinIO server options

#

# The following explicitly sets the MinIO Console listen address to

# port 19001 on all network interfaces. The default behavior is dynamic

# port selection.

MINIO_OPTS="--address :19000 --console-address :19001 --certs-dir /home/aj/.lxmin/certs_intel"

# Set the root username. This user has unrestricted permissions to

# perform S3 and administrative API operations on any resource in the

# deployment.

#

# Defer to your organization's requirements for superadmin user name.

MINIO_ROOT_USER=REDACTED

# Set the root password

#

# Use a long, random, unique string that meets your organization's

# requirements for passwords.

MINIO_ROOT_PASSWORD=REDACTED

# Set to the URL of the load balancer for the MinIO deployment

# This value *must* match across all MinIO servers. If you do

# not have a load balancer, set this value to any *one* of the

# MinIO hosts in the deployment as a temporary measure.

MINIO_SERVER_URL="https://node5.lab.domain.com:19000"

###

Start or restart the service depending on its current status.

sudo systemctl enable --now minio-aj.service

sudo systemctl start minio-aj.service

sudo systemctl status minio-aj.service

sudo journalctl -f -u minio-aj.service

Be sure to repeat all the steps for installing MinIO and LXMIN on the other servers in the cluster. They all have to be configured identically.
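To see which endpoints the `MINIO_VOLUMES` pattern in the settings file covers, the following minimal sketch spells out the `{x...y}` expansion by hand. The function name `expand_volumes` is hypothetical, and the loops only mimic the notation for this example's values (nodes 4 through 7, disks 0 and 1); MinIO performs the real expansion itself at startup.

```shell
#!/bin/sh
# Hypothetical helper: list every host/drive pair covered by
# https://node{4...7}.lab.domain.com:19000/home/aj/disk{0...1}/minio
# Arguments: first node, last node, first disk, last disk.
expand_volumes() {
  node="$1"
  while [ "$node" -le "$2" ]; do
    disk="$3"
    while [ "$disk" -le "$4" ]; do
      echo "https://node${node}.lab.domain.com:19000/home/aj/disk${disk}/minio"
      disk=$((disk + 1))
    done
    node=$((node + 1))
  done
}

# Four nodes with two drives each gives eight endpoints in total.
expand_volumes 4 7 0 1
```

Every node in the cluster should resolve to the same list, which is one reason the settings file has to be identical everywhere.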

Let's take a look at the MinIO console to validate the entire setup

https://node5.lab.domain.com:19001/login
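Alongside the console, a quick command-line check of the settings files can catch a missing value on one of the nodes. This is a minimal sketch, assuming the file locations and variable names used earlier in this post; the helper name `check_settings` is hypothetical and it only checks that each key is present and non-empty, not that the value is correct.

```shell
#!/bin/sh
# Hypothetical helper: report settings that are missing or empty in an
# environment file. Usage: check_settings FILE KEY [KEY...]
check_settings() {
  file="$1"
  shift
  missing=0
  for key in "$@"; do
    # A setting counts as present when a line starts with KEY= followed
    # by at least one character that is not part of an empty "" pair.
    if ! grep -Eq "^${key}=\"?[^\"]" "$file"; then
      echo "missing or empty: ${key} in ${file}"
      missing=1
    fi
  done
  return "$missing"
}

# Only run the checks on hosts where the settings files exist.
if [ -r /etc/default/lxmin-aj ]; then
  check_settings /etc/default/lxmin-aj LXMIN_ENDPOINT LXMIN_BUCKET \
    LXMIN_ACCESS_KEY LXMIN_SECRET_KEY LXMIN_ADDRESS LXMIN_TLS_CERT \
    LXMIN_TLS_KEY || echo "Fix the settings above before starting lxmin-aj"
fi
if [ -r /etc/default/minio-aj ]; then
  check_settings /etc/default/minio-aj MINIO_VOLUMES MINIO_OPTS \
    MINIO_ROOT_USER MINIO_ROOT_PASSWORD MINIO_SERVER_URL \
    || echo "Fix the settings above before starting minio-aj"
fi
```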

You can also use mc to double-check that the cluster is running correctly.

mc alias set myminio https://node7.lab.domain.com:19000 MINIO_ROOT_USER MINIO_ROOT_PASSWORD
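Setting the alias only registers the endpoint, so a follow-up command is needed to actually query the cluster. Here is a minimal sketch, assuming the `myminio` alias above and that `mc` and `curl` are installed: `mc admin info` prints the servers and drives, and MinIO's unauthenticated liveness endpoint `/minio/health/live` can be probed on each node. The helper names `health_urls` and `check_cluster` are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the liveness URL for nodes FIRST..LAST.
# Port 19000 matches the --address value used in MINIO_OPTS.
health_urls() {
  n="$1"
  while [ "$n" -le "$2" ]; do
    echo "https://node${n}.lab.domain.com:19000/minio/health/live"
    n=$((n + 1))
  done
}

# Hypothetical helper: probe every node, then ask mc for a cluster summary.
check_cluster() {
  for url in $(health_urls 4 7); do
    # -f makes curl fail on HTTP errors; -sS hides progress but shows errors.
    if curl -fsS -o /dev/null "$url"; then
      echo "ok   $url"
    else
      echo "FAIL $url"
    fi
  done
  mc admin info myminio
}

# Run on a machine that can reach the cluster:
#   check_cluster
```

If the nodes use self-signed certificates, `curl` will likely need `--cacert` pointing at the public certificate before the probes succeed.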

To test the LXMIN/MinIO backup, access the following LXMIN endpoint. The assumption here is that the wildcard certificate and key are available at $HOME/.vm-broker/ssl

$ curl -X GET "https://node4.lab.domain.com:8000/1.0/instances/*/backups" -H "Content-Type: application/json" --cert $HOME/.vm-broker/ssl/tls.crt --key $HOME/.vm-broker/ssl/tls.key | jq .

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 1100 100 1100 0 0 7766 0 --:--:-- --:--:-- --:--:-- 8148

{

"metadata": [

{

"instance": "delete",

"name": "backup_2023-08-08-09-3627",

"created": "2023-08-08T16:37:40.627Z",

"size": 583116000,

"optimized": false,

"compressed": false

},

{

"instance": "delete",

"name": "backup_2023-08-08-10-3807",

"created": "2023-08-08T17:39:21.241Z",

"size": 583446188,

"optimized": false,

"compressed": false

},

{

"instance": "delete",

"name": "backup_2023-08-08-15-2135",

"created": "2023-08-08T22:22:49.79Z",

"size": 583774771,

"optimized": false,

"compressed": false

},

{

"instance": "dilvm1",

"name": "backup_2023-08-08-15-2618",

"created": "2023-08-08T22:27:53.034Z",

"size": 888696535,

"optimized": false,

"compressed": false

},

{

"instance": "new0",

"name": "backup_2023-08-08-17-5231",

"created": "2023-08-09T00:53:52.235Z",

"size": 617889321,

"optimized": false,

"compressed": false

},

{

"instance": "new2",

"name": "backup_2023-08-08-15-2805",

"created": "2023-08-08T22:29:26.87Z",

"size": 617917969,

"optimized": false,

"compressed": false

},

{

"instance": "test-error",

"name": "backup_2023-08-08-16-1501",

"created": "2023-08-08T23:16:09.232Z",

"size": 516457141,

"optimized": false,

"compressed": false

}

],

"status": "Success",

"status_code": 200,

"type": "sync"

}

Replication is not Backup

As a DevOps engineer, I learned very early on that replication is not equal to backup (Replication != Backup). MinIO clusters are lightning fast, can scale to hundreds or thousands of nodes, and heal corrupted objects on the fly. What we are recommending is not to back up the data inside MinIO but rather the configuration on the node that MinIO needs to run and operate in the cluster. The goal is that if a node is degraded, we can restore it to a good state as soon as possible so it can rehydrate (using Erasure Coding) and participate in MinIO cluster operations. The actual integrity and replication of the data is managed by the MinIO software.

In addition to the API, LXMIN also includes a command line interface for local management of backups. This command line interface allows you to build on top of LXMIN's functionality in any language of your choice.
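As one illustration of building on LXMIN, the JSON returned by the backups listing shown earlier can be summarized with `jq`. The sketch below assumes the response shape from that example (a `metadata` array whose entries carry `instance`, `name`, and `size` fields); the function name `summarize_backups` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: read a backups listing on stdin and print one
# tab-separated line per instance: name, backup count, total size in bytes.
summarize_backups() {
  jq -r '
    .metadata
    | group_by(.instance)[]
    | "\(.[0].instance)\t\(length)\t\(map(.size) | add)"
  '
}

# Example usage against an LXMIN endpoint (certificate paths as in the
# curl example earlier in this post):
#   curl -s "https://node4.lab.domain.com:8000/1.0/instances/*/backups" \
#     --cert "$HOME/.vm-broker/ssl/tls.crt" \
#     --key "$HOME/.vm-broker/ssl/tls.key" | summarize_backups
```

A summary like this makes it easier to spot instances that have never been backed up or whose backups are growing unexpectedly.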

If you would like to know more about MinIO replication and clusters, give us a ping on Slack and we’ll get you going!