High Performance Object Storage for Veeam Backup and Recovery

Backups are a big business, and VM backups represent the lion’s share of that market. The world of VM backups has changed, however. What was once a small challenge for enterprises has become a massive one, reaching PBs in size and spawning an entire sub-industry.

Veeam is one of the major players in backup, recovery and replication software and, in the eyes of many enterprises, the major player. For Veeam customers, what makes MinIO so interesting is the fact that MinIO is software-defined. Since software wants to talk to other software, MinIO and Veeam are a superb match when it comes to backing up VMs. Here is why:

- VM backups require scalable storage. There is little debate around this point in architecture circles these days. Object is the most scalable solution - whether in the public or private cloud.

- VM backups require performance. Backups and restores need to go quickly no matter the size. Traditional object storage architectures (think appliance vendors) are not up to the task here. This is where modern object stores like MinIO are exceptionally well suited, and four reasons stand out:

- Speed. With the ability to read/write at speeds in excess of 160 GB/s in a single 32 node cluster, MinIO can back up and restore at speeds once considered impossible for object storage. Longer backup and restore cycles increase disruption to the business. One shortcut slower object stores take is to move to an eventual consistency model. This can have devastating effects if the plug gets pulled in the middle of your job and your database gets corrupted.

- Object size. MinIO can handle any object size. Veeam defaults to 1MB objects but supports anywhere from 256KB to 4MB. Because MinIO writes metadata atomically along with the object data, it does not require a database (Cassandra in most cases) to house the metadata. At smaller object sizes, deletes become highly problematic for object stores that depend on such a database, which effectively disqualifies them. At 4MB, dedupe becomes ineffective, so Veeam architects recommend against it. Suffice it to say, if the object storage implementation uses a metadata database, it is not well suited for handling backups.

- Inline and Strictly Consistent. Data in MinIO is always readable and consistent since all of the I/O is committed synchronously with inline erasure coding, bitrot hashing and encryption. The S3 service provided by MinIO is resilient to any disruption or restart in the middle of busy transactions. There is no caching or staging of data for asynchronous I/O. This guarantees that all backup operations succeed.

- Commercial off-the-shelf hardware. COTS hardware = huge savings, familiarity and flexibility. This becomes an important requirement as the data grows into the petabytes.

The result of these requirements is that Veeam is a natural pair for high-performance, software-defined object storage. That is exactly what MinIO is, and it is why we fit so well with Veeam.

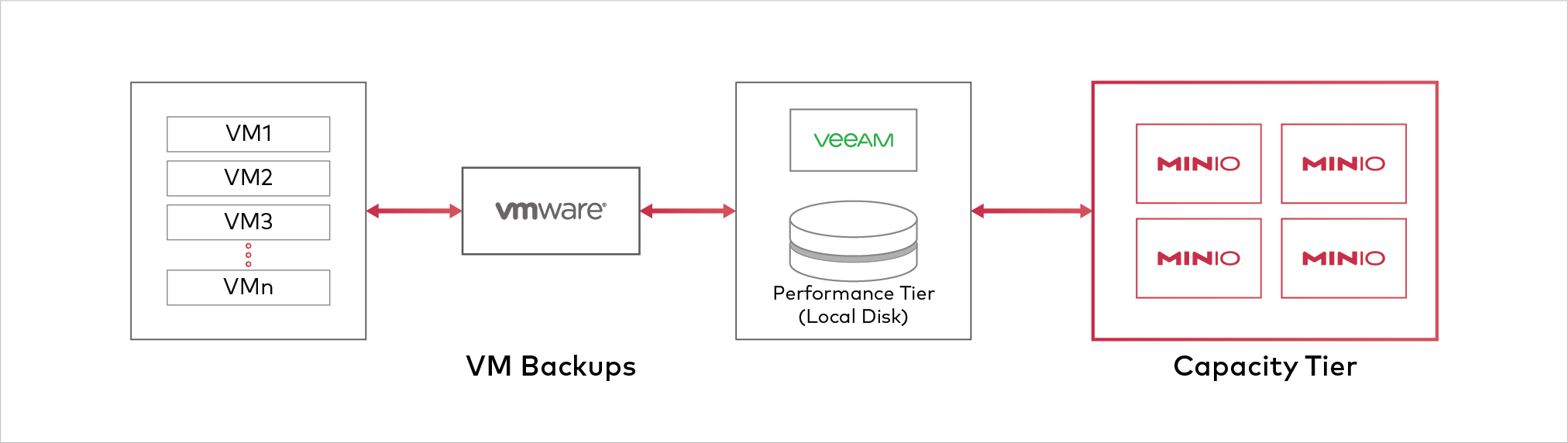

To demonstrate that point, we will pair Veeam together with MinIO as a capacity tier to allow Veeam’s software to offload virtual machines from VMware ESX to Veeam.

ENVIRONMENT

ESX Server

One ESX server running 10 virtual machines with 300GB thin disks with 100GB generated data in each. (specs)

- 28 Physical Cores @ 2.2 GHz

- 384 GB of DDR4 ECC RAM

- 3.8TB NVMe

- 10Gbps network *

- 6.5.0 Update 1 (Build 7967591)

Veeam Server

- 28 Physical Cores @ 2.2 GHz

- 384 GB of DDR4 ECC RAM

- 3.2 TB NVMe drive

- 20Gbps switch bonded nic

- Windows Server 2016, Veeam Backup and Replication 10.0.0.4461

MinIO Cluster

We deployed a four node MinIO cluster with the following specs:

- 24 Physical Cores @ 2.2 GHz

- 256 GB of DDR4 ECC RAM

- 2.8 TB of SSD per server, split into 4 drives per server

- 2 x 10 GigE bonded NICs

- Ubuntu 18.04

MinIO configuration

We created a four node MinIO cluster with TLS for over-the-wire encryption, plus object encryption. We have noted in previous benchmarks that object encryption has a minimal impact on CPU performance. As such, we recommend it always be turned on.

MINIO_VOLUMES="https://veeam-minio0{1...4}/mnt/disk{1...4}/veeam"

MINIO_ERASURE_SET_DRIVE_COUNT=4

MINIO_ACCESS_KEY=

MINIO_SECRET_KEY=

MINIO_KMS_AUTO_ENCRYPTION=on

MINIO_KMS_MASTER_KEY=my-minio-key:
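As a rough sanity check on what this layout provides, the sketch below works out the erasure-set count and usable capacity from the figures in this post: four nodes, four drives per node, 2.8 TB of SSD per node and erasure sets of four drives. It assumes n/2 parity, meaning half of the drives in each set hold parity. The function name is ours and purely illustrative; it is not part of MinIO.

```shell
# Illustrative helper (not a MinIO tool): derive erasure-set count and
# usable capacity from node count, drives per node, set size and raw TB per node.
# Assumption: n/2 parity, i.e. half of each erasure set stores parity.
erasure_layout() {
    awk -v n="$1" -v d="$2" -v s="$3" -v r="$4" '
        BEGIN {
            total_drives = n * d          # drives across the whole cluster
            sets = total_drives / s       # erasure sets of s drives each
            raw_tb = n * r                # raw capacity in TB
            usable_tb = raw_tb / 2        # n/2 parity halves usable space
            printf "%d drives, %d sets, %.1f TB raw, %.1f TB usable\n",
                   total_drives, sets, raw_tb, usable_tb
        }'
}

# Values from the environment described in this post.
erasure_layout 4 4 4 2.8
```

Under these assumptions the cluster has 16 drives in 4 erasure sets, with roughly 5.6 TB usable out of 11.2 TB raw, which is comfortably more than the ~1 TB of test data generated across the ten VMs.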

Specifications

Before running any tests, we would like to ensure that the underlying hardware is healthy and can generate and sustain sufficient I/O to make the best use of the MinIO cluster. In other performance benchmarks, we have demonstrated that CPU and RAM are never bottlenecks.

Disk Tests

As part of our testing, we like to measure the I/O subsystems of the various servers in the environment, including disk and network. This gives us visibility into the type of performance we can expect out of the environment as a whole, and helps identify potential bottlenecks. These are typically seen at the network, disk, or PCIe bus level, in that order. Since MinIO is highly optimized and lightweight, it does not require much of the CPU or RAM. The top command shows about 30% CPU utilization and 16 to 64GB memory consumption depending on usage.

In order to conduct these tests, we use several tools. To test disk performance, we use dd with caching turned off. This will give us a “best case” profile of what the disks are able to do with a sequential read or write, and also identify if any disks are performing significantly out of line with the others. From there, we use iozone, which will show us aggregated throughput for all disks on a system so that we know what the entire system is capable of. iozone will give us some additional assurances, since it utilizes random reads and writes. For network, we use the iperf utility, which will show us the maximum speed between various endpoints. When we are confident there are no system level bottlenecks, we use the Warp benchmarking utility to verify how MinIO will be able to handle various S3 operations, such as GET, PUT, and DELETE.

ESX:

On the ESX server, we tested the VMFS datastore that will house the VMs that we will be backing up later. Technically this is not needed since the traffic in our tests will be between the Veeam Server and the MinIO cluster, but testing is fun and we like numbers. First, we test writes. The ESX implementation of dd does not support the oflag or iflag options but does not appear to cache reads or writes.

$ time dd if=/dev/zero of=/vmfs/volumes/veeam/dd.delme bs=1M count=10000

This was written in 16.25s, or about 625MB/s.

Doing a dd read test on a 9.2GB file shows just over 500MB/s reads

$ time dd if=/vmfs/volumes/veeam/bigfile.delme of=/dev/null bs=1M

with time consumed for the reads of 18.31s.

MinIO Server Writes:

Since we have four disks, we want to test each independently.

for i in {1..4}; do dd if=/dev/zero of=/mnt/disk$i/dd.delme bs=1M count=10000 oflag=direct; done

We see that each of the disks returns a little over 350MB/s disk writes.

10485760000 bytes (10 GB, 9.8 GiB) copied, 28.839 s, 364 MB/s
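To show where the MB/s figure in a dd summary line comes from, here is a minimal sketch that recomputes it from the byte count and elapsed time. The helper name is hypothetical, and it assumes decimal megabytes (1 MB = 1,000,000 bytes), which is the unit GNU dd reports.

```shell
# Illustrative helper: convert a dd result (bytes copied, elapsed seconds)
# into decimal MB/s, rounded to the nearest whole number.
dd_mb_per_s() {
    awk -v bytes="$1" -v secs="$2" \
        'BEGIN { printf "%.0f\n", bytes / secs / 1000000 }'
}

# Figures taken from the dd output line above.
dd_mb_per_s 10485760000 28.839
```

This prints 364, matching the rate dd reported. The same helper can be pointed at any other dd run to compare disks against each other.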

MinIO Server Reads

On each of the disks, we place a file just over 9GB in size, then run a read test.

for i in {1..4}; do dd if=/mnt/disk$i/bigfile.delme of=/dev/null bs=1M iflag=direct; done

Each disk returns around 375MB/s reads.

iozone

Now that we have our best case scenario for disk reads and writes via dd, it is time to do a more robust test. We run iozone with the following options:

$ iozone -t 64 -r 1M -s 4MB -I -b iozone.xls -F /mnt/disk{1..4}/tmp{1..16}

This specifies 64 threads with a 1MB record size and a 4MB file per thread, running 16 threads apiece against each of the four disks, using direct I/O to avoid any cache. We will concentrate on the following values:

Initial write ~1.5GB/s aggregate, ~382MB/s per disk

Initial read ~1.7GB/s aggregate, ~415MB/s per disk

We see roughly the same numbers for random reads and writes as well.
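To make the relationship between the -t and -F arguments of the iozone command above concrete, this sketch expands the same file pattern with portable loops and counts the resulting targets. iozone expects one file name per thread in throughput mode, so the total should equal the -t value. The function name is ours, for illustration only.

```shell
# Expand the /mnt/disk{1..4}/tmp{1..16} pattern with POSIX loops
# (brace expansion is a bash feature) and print one path per line.
list_iozone_files() {
    disk=1
    while [ "$disk" -le 4 ]; do
        file=1
        while [ "$file" -le 16 ]; do
            echo "/mnt/disk$disk/tmp$file"
            file=$((file + 1))
        done
        disk=$((disk + 1))
    done
}

# Count all target files, and those landing on the first disk.
total=$(list_iozone_files | wc -l | tr -d ' ')
per_disk=$(list_iozone_files | grep -c '^/mnt/disk1/')
echo "$total files in total, $per_disk per disk"
```

The pattern yields 64 files, 16 on each disk, which lines up with the 64 threads requested by -t 64.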

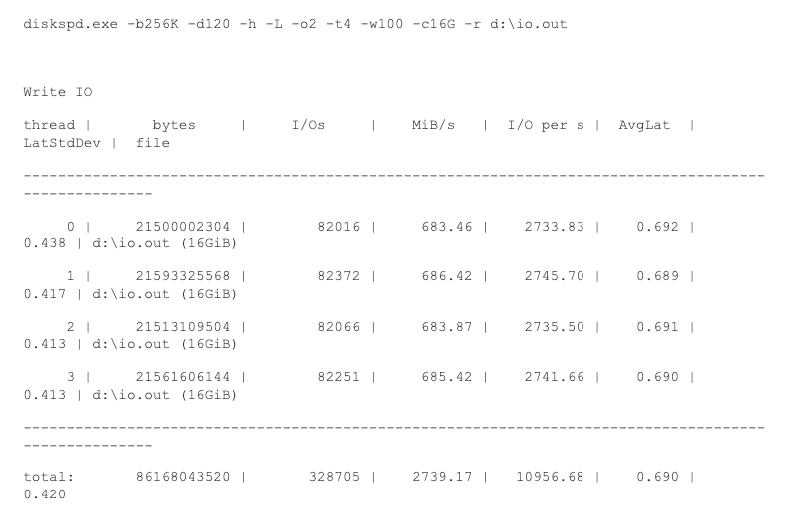

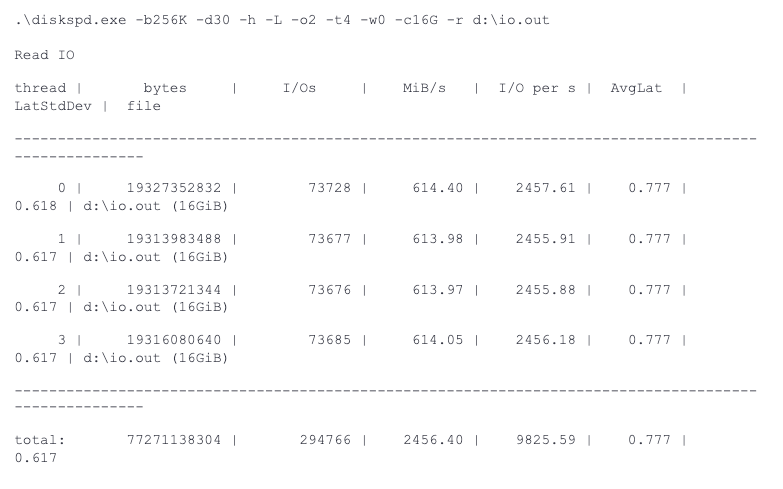

Windows Server

With Windows, we can use the diskspd utility. We use the following options:

diskspd.exe -b256K -d120 -h -L -o2 -t4 -w -c16G -r d:\io.out

This writes 256k blocks for 120 seconds with 4 threads, no caching and random I/O to a 16GB file. The -w flag lets us define what percentage of writes will be performed for each test. We will use -w100, -w0 and -w50 for writes, reads, and equally mixed reads and writes, respectively.

Writes

Reads

iperf tests

With the iperf utility, we can measure the expected largest transfer speed per server. With an iperf server running, we see the following numbers from each of the clients to the MinIO servers. Note that we also ran the tests from all MinIO nodes to each other (only one result is shown here but all were similar). A sample command we used is iperf -c veeam-minio01 -P20 which uses 20 concurrent client connections to saturate the inbound network.

ESX

9.33 Gbits/sec

MinIO servers

18.7 Gbits/sec

Windows to MinIO servers:

18.9 Gbits/sec

From these numbers, we see that all servers are getting the expected network bandwidth.
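Because iperf reports gigabits per second while the disk tests report bytes per second, it helps to put both on the same scale. The sketch below is a simple unit conversion under the assumption of 8 bits per byte with no protocol overhead accounted for; the helper name is hypothetical.

```shell
# Illustrative helper: convert Gbit/s (as reported by iperf) to GB/s.
gbit_to_gbyte() {
    awk -v g="$1" 'BEGIN { printf "%.2f\n", g / 8 }'
}

# Measured iperf results from this post, as name:rate pairs.
for link in "ESX:9.33" "MinIO:18.7" "Windows:18.9"; do
    name=${link%%:*}    # text before the colon
    rate=${link#*:}     # text after the colon
    echo "$name: $(gbit_to_gbyte "$rate") GB/s"
done
```

The bonded 2 x 10 GigE links work out to roughly 2.3 GB/s per server, which sits above the ~1.5 to 1.7 GB/s per-node disk aggregate measured with iozone, so on these figures the network should not be the first limit each MinIO node hits.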

Warp tests

Warp is a utility created by the MinIO development team for measuring performance of S3 compatible object stores. In these tests, we have deployed a number of client machines with sufficient network bandwidth to saturate the MinIO cluster.

GET numbers

First, we run a test for GET performance across the cluster.

$ warp get --access-key <access-key> --secret-key <secret key> --tls --host veeam-minio0{1...4}:9000 --warp-client warp-client{1...4} --duration 5m --objects 2500 --obj.size 1MB --concurrent 32

This runs a 5 minute test with 1MB object size, and a concurrency of 32 threads per server for a total of 128 across the cluster. We see ~3.3GB/s reads to the cluster.

Operation: GET. Concurrency: 128. Hosts: 4.

* Average: 3316.29 MiB/s, 3477.39 obj/s (4m59.873s)

PUT numbers

Next we run the same test with PUT operations.

$ warp put --access-key <access-key> --secret-key <secret key> --tls --host veeam-minio0{1...4}:9000 --warp-client warp-client{1...4} --duration 5m --obj.size 1MB --concurrent 32

Here we see ~1.6GB/s writes to the cluster. It is important to note that write throughput will be lower than read throughput with an n/2 erasure set due to write amplification, so these are expected numbers.

Operation: PUT. Concurrency: 128. Hosts: 4.

* Average: 1604.49 MiB/s, 1682.43 obj/s (5.596s)
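Warp reports both objects per second and MiB/s, and the two can be cross-checked against each other. The sketch below does that under the assumption that warp treats a 1MB object as 1,000,000 bytes, which the reported figures appear consistent with. The helper name is ours.

```shell
# Illustrative cross-check: convert an object rate and object size in bytes
# into MiB/s (1 MiB = 1,048,576 bytes).
objs_to_mib() {
    awk -v ops="$1" -v size="$2" \
        'BEGIN { printf "%.1f\n", ops * size / 1048576 }'
}

objs_to_mib 3477.39 1000000   # GET result from this post
objs_to_mib 1682.43 1000000   # PUT result from this post
```

This prints 3316.3 and 1604.5, in line with the 3316.29 MiB/s and 1604.49 MiB/s averages warp reported, which suggests the object rate and the throughput figure describe the same run.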

DELETES

Finally, we test delete performance.

$ warp delete --access-key <access-key> --secret-key <secret key> --tls --host veeam-minio0{1...4}:9000 --warp-client warp-client{1...4} --duration 5m

Operation: DELETE. Objects per operation: 100. Concurrency: 192. Hosts: 4.

* Average: 6738.25 obj/s (7.585s, starting 18:32:15 UTC)

Veeam Setup

Configuring MinIO as an S3 target

1. Create Object Store for capacity tier

First, we create a new S3-compatible object store as a capacity tier. Choose an S3-compatible object store and configure it to use your MinIO deployment as a custom endpoint.

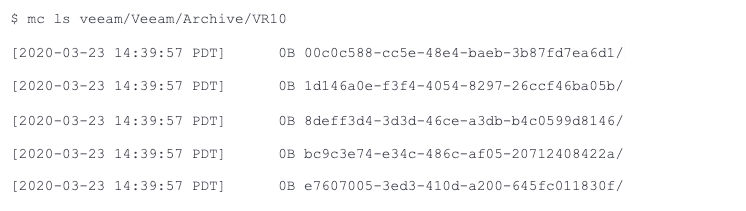

1e. Use the MinIO Client command line utility (mc) to see that Veeam has created the prefix structure under the bucket:

Running a backup to the capacity tier

Next, we create a backup job to offload VMs from Veeam to MinIO. We will use ten test VMs, each with 100GB of random test data, with each VM having a unique data set to avoid any deduplication.

On the Storage screen, we choose a Scale-out Backup Repository that was configured for this test. After backing up to the Veeam server, VMs will also be backed up to the capacity tier, i.e., MinIO.
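For a sense of scale, the following back-of-the-envelope sketch estimates a lower bound on the time needed to push the full data set to the capacity tier at the PUT rate measured with warp. It is only a floor under stated assumptions: it takes 100GB as 100 x 10^9 bytes, uses the 1604.49 MiB/s warp PUT average, and ignores Veeam compression, job scheduling and any per-object overhead. The function name is hypothetical.

```shell
# Illustrative estimate: seconds needed to write (vms * gb_per_vm) of data
# at a sustained rate given in MiB/s. A theoretical floor, not a prediction.
offload_floor_seconds() {
    awk -v vms="$1" -v gb="$2" -v mib="$3" \
        'BEGIN { printf "%.0f\n", vms * gb * 1000000000 / (mib * 1048576) }'
}

# Ten VMs, 100GB each, at the measured warp PUT average.
offload_floor_seconds 10 100 1604.49
```

Under these assumptions the floor is about 594 seconds, or roughly ten minutes for the full 1TB. Real jobs will take longer, so this number is best used to spot a run that is far slower than the hardware allows.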

Summary

Performance matters when it comes to backups and restores. Veeam's software is very well architected, but when it points to an S3 compatible object store it needs that object store to deliver in turn - otherwise the utility of the overall solution is limited.

MinIO and Veeam are superb complements to each other. Both deliver scalability, speed and simplicity in a software-defined solution.

We encourage Veeam users to try out this architecture as it will enable them to modernize their stack, improve performance, deliver greater scale and ultimately present vastly superior economics for this critical software workflow.

To get started with MinIO, simply download the code. If you have questions, we have outstanding documentation, an integration document on GitHub, and the industry's best community Slack channel. If you want a deeper relationship, check out our pricing page to see what options might fit your requirements.