MinIO Networking with Overlay Networks

The evolution of cloud computing and containerization technologies has transformed the way applications are developed, deployed, and managed. This shift has brought about significant changes in the networking landscape, introducing new challenges and opportunities for DevOps and SRE engineers. However, amidst this transformation, a notable knowledge gap has emerged, particularly in understanding the intricacies of networking in the context of physical networks and hardware.

The increasing reliance on API-driven infrastructure provisioning has abstracted away many of the underlying networking complexities, allowing engineers to deploy and manage applications more efficiently. While this abstraction has streamlined the deployment process, it has also led to a decreased familiarity with the foundational principles of networking. As a result, engineers often find themselves grappling with the challenges of troubleshooting, optimizing, and securing network communications in complex, distributed environments.

Overlay networks, especially within the scope of containerization technologies like Docker and Kubernetes, have emerged as an importantl concept in bridging this knowledge gap. By providing a virtual network layer that sits on top of the physical network infrastructure, overlay networks offer a powerful abstraction that simplifies networking tasks and enables scalable, secure configurations.

Evolution of Container Networking

To fully appreciate the significance of overlay networks, it is essential to understand the historical progression of container networking. In the early days of Docker, networking was primarily focused on single-host connectivity, where containers on the same host could communicate with each other using bridge networks. However, as containerization gained popularity and the need for multi-host networking arose, Docker introduced more sophisticated networking solutions.

With the introduction of Docker Swarm (Cluster), multi-host networking became a reality, enabling containers running on different hosts to communicate seamlessly. Initially, this was achieved using links and ambassador containers, which acted as proxies for inter-container communication. However, these approaches had limitations in terms of scalability and flexibility.

As the container ecosystem evolved, more dynamic and scalable networking solutions emerged, such as overlay networks. Overlay networks provided a way to create virtual networks that spanned across multiple hosts, allowing containers to communicate as if they were on the same network, regardless of their physical location. This paved the way for the development of more complex and distributed containerized applications.

Understanding Overlay Networks

An overlay network is a virtual network that is constructed on top of one or more existing network infrastructures, such as physical networks or other overlay networks. It creates a separate, logical network topology that can span multiple physical networks or devices, providing a level of abstraction that simplifies networking tasks and enables flexible, dynamic network configurations.

The concept of overlay networks is not new and has been used in various networking contexts for decades. However, cloud computing and containerization technologies have brought overlay networks to the forefront, as they provide a means to address the networking challenges associated with these environments.

At its core, an overlay network is a logical construct that encapsulates network traffic and routes it over an underlying network infrastructure. It uses a variety of encapsulation and tunneling protocols, such as VXLAN, NVGRE, and IPsec, to create virtual links between nodes, regardless of their physical location or the underlying network topology.

One of the key benefits of overlay networks is their ability to enable seamless communication between nodes, irrespective of their physical location or the underlying network architecture. By abstracting away the complexities of the physical network, overlay networks allow applications and services to communicate with each other as if they were on the same local network, even if they are distributed across multiple data centers or cloud providers.

Overlay networks also provide a high degree of flexibility and agility in network configurations. They allow network administrators to create, modify, and tear down virtual networks programmatically, without the need for physical network changes. This enables rapid provisioning and scaling of network resources, as well as the ability to adapt to changing application requirements and traffic patterns dynamically.

Moreover, overlay networks offer enhanced security and isolation capabilities. By encapsulating traffic and creating logical separation between different applications or tenants, overlay networks can help prevent unauthorized access and data leakage. They also enable the implementation of granular security policies and access controls, further enhancing the overall security posture of the network.

Docker Networking and Overlay Networks

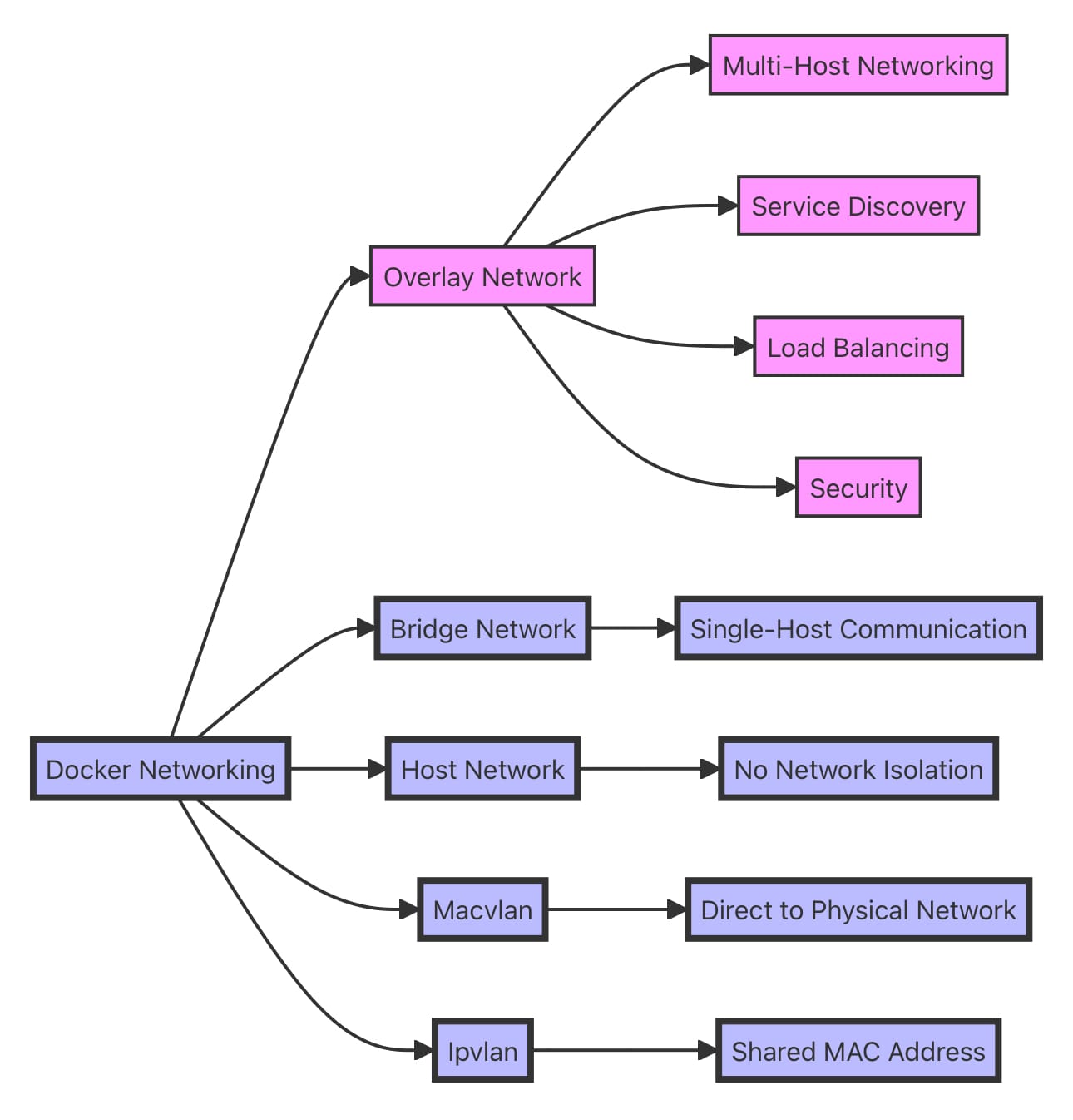

Docker, being the most widely adopted containerization platform, has native support for various networking modes, including bridge networks, host networks, and overlay networks. Among these, overlay networks have emerged as a powerful solution for enabling communication between containers across multiple hosts, making them particularly well-suited for deploying distributed applications in containerized environments.

Docker Networking Drivers

Docker networking is facilitated by a set of drivers that configure the network interface for containers, manage network connection, and ensure isolation and security. These drivers play a crucial role in determining how containers interact with each other and with the external network.

Some of the key Docker networking drivers include:

- Bridge: The default networking driver in Docker, which creates a virtual bridge network on the host and connects containers to it. Containers on the same bridge network can communicate with each other using their IP addresses.

- Host: This driver removes the network isolation between the container and the host, allowing the container to directly use the host's network stack. This can provide better performance but sacrifices the security and isolation benefits of containerization.

- Overlay: The overlay driver enables the creation of distributed networks across multiple Docker hosts. It allows containers running on different hosts to communicate with each other as if they were on the same network, without the need for explicit routing between hosts.

- Macvlan: This driver assigns a MAC address to each container, making it appear as a physical device on the network. This allows containers to be directly connected to the physical network, enabling them to receive routable IP addresses.

- Ipvlan: Similar to the Macvlan driver, the Ipvlan driver provides each container with a unique IP address. However, instead of assigning a MAC address, it uses the same MAC address as the host interface, reducing the overhead of MAC address management.

Each of these drivers has its own use cases, advantages, and limitations. Understanding the characteristics and behavior of these drivers is essential for optimizing container networking based on the specific requirements of the application.

Overlay Networks in Docker

Docker's overlay network allows multiple Docker hosts to be clustered together and managed as a single unified system.When an overlay network is created in a Docker cluster, it spans across all the nodes in the cluster, creating a virtual network that allows containers to communicate with each other seamlessly, regardless of which node they are running on. Each container attached to the overlay network receives a unique IP address, which remains consistent even if the container is rescheduled or moved to a different node.

Overlay networks in Docker use the VXLAN (Virtual Extensible LAN) protocol to encapsulate and tunnel network traffic between containers across the underlying physical network. VXLAN is a widely adopted standard that enables the creation of large-scale, multi-tenant networks by encapsulating Layer 2 Ethernet frames within UDP packets.

The use of overlay networks in Docker brings several key benefits:

- Multi-Host Networking: Overlay networks enable containers running on different hosts to communicate with each other seamlessly, as if they were on the same local network. This is essential for deploying distributed applications that span multiple nodes, such as microservices architectures or clustered databases.

- Service Discovery: Docker's overlay networks integrate with the built-in service discovery mechanism, allowing containers to discover and connect to each other using service names, rather than IP addresses. This simplifies the configuration and management of complex, multi-container applications.

- Load Balancing: Overlay networks in Docker support load balancing of incoming traffic across multiple container instances. This enables horizontal scaling of services and helps ensure high availability and performance.

- Security: Overlay networks provide a degree of isolation and security by default. Containers attached to an overlay network can only communicate with other containers on the same network, and traffic between containers is encrypted using IPsec, providing an additional layer of security.

Kubernetes Networking and CNI Plugins

Kubernetes, the leading container orchestration platform, has its own networking model and relies on CNI (Container Network Interface) plugins to provide network connectivity and policy enforcement for containers. CNI is a standardized interface that allows different network providers to implement their own networking solutions for Kubernetes.

In Kubernetes, each pod (a group of one or more containers) gets its own unique IP address, and containers within a pod can communicate with each other using localhost. However, to enable communication between pods across different nodes in the cluster, Kubernetes relies on CNI plugins.

Some popular CNI plugins used in Kubernetes include:

- Flannel: Flannel is a simple and lightweight CNI plugin that provides a flat, overlay network for Kubernetes clusters. It uses VXLAN or host-gw (host gateway) as the backend to encapsulate and route traffic between pods.

- Calico: Calico is a highly scalable and flexible CNI plugin that offers advanced networking features, such as network policies, and supports both overlay and non-overlay modes. It uses BGP (Border Gateway Protocol) for routing and IPIP (IP-in-IP) or VXLAN for encapsulation.

- Weave Net: Weave Net is a full-featured CNI plugin that creates a virtual network overlay using VXLAN. It provides a simple and easy-to-use networking solution for Kubernetes, with built-in encryption and support for network policies.

These CNI plugins interact with the underlying networking components, such as Open vSwitch (OVS) or iptables, to configure the network interfaces and routes for the pods. They also implement network policies, which allow fine-grained control over the traffic flow between pods based on labels and selectors.

Network policies in Kubernetes provide a way to define rules that govern which pods can communicate with each other. They act as a firewall for the pods, specifying which traffic is allowed or denied based on factors such as pod labels, namespaces, or IP ranges. Network policies are implemented by the CNI plugins and are enforced at the kernel level using iptables or other mechanisms.

By leveraging CNI plugins and network policies, Kubernetes enables secure and efficient networking for containerized applications, ensuring that pods can communicate with each other while maintaining the desired level of isolation and access control.

Deploying MinIO with Overlay Networks

To illustrate the effectiveness of overlay networks in containerized environments, let's explore a real-world scanario: deploying MinIO high-performance S3-compatible object storage solution, using overlay networks.

MinIO is designed to be cloud-native and container-friendly, making it an ideal candidate for deployment in containerized environments. It provides a scalable, distributed storage layer that can be easily integrated with a wide range of applications and services.

Step 1: Create an Overlay Network

Create an overlay network that will be used by the MinIO deployment. This network will span across all the nodes in the Docker cluster, enabling MinIO nodes to communicate with each other and with client applications.

docker network create --driver=overlay --attachable minio-overlay-networkdocker network create

Step 2: Define the MinIO Service

Define the MinIO service using a Docker Compose file (minio-compose.yml):

version: '3.8'

services:

minio:

image: minio/minio:latest

command: server /data

volumes:

- minio-data:/data

networks:

- minio-overlay-network

deploy:

mode: global

placement:

constraints:

- node.role == worker

volumes:

minio-data:

networks:

minio-overlay-network:

external: truedocker compose example

This Compose file defines the MinIO service using the official MinIO Docker image, attaches it to the minio-overlay-network overlay network, and specifies a global deployment mode to run one instance of the service on each worker node in the docker cluster.

Step 3: Deploy the MinIO Stack

Deploy the MinIO stack to the Docker cluster using the following command:

docker stack deploy --compose-file minio-compose.yml miniodocker stack deploy

Docker cluster will distribute the MinIO service across the worker nodes in the cluster, creating one instance of the service on each node. The overlay network will enable seamless communication between the MinIO instances.

Additionally, to deploy containers in a Multi-Host environment using docker service create would use the --network minio-overlay-network flag and value as seen in the following command to deploy MinIO as a replicated service in the overlay network:

docker service create --name minio_service --network minio-overlay-network --replicas 4 -e "MINIO_ROOT_USER=minioadmin" -e "MINIO_ROOT_PASSWORD=minioadmin" minio/minio server /datadocker service create

Expected Outcome:

Service 'minio_service' created with 4 replicas in 'minio-overlay' network.

Step 4: Inspect the MinIO Overlay Network

After deploying the MinIO service using Docker Swarm and the overlay network, it's essential to inspect the network configuration to ensure proper connectivity and troubleshoot any issues. Docker provides the docker network inspect command to retrieve detailed information about a network.

To inspect the minio-overlay-network, run the following command:

docker network inspect minio-overlay-networkdocker network inspect

Additionally, you can use the docker network inspect command to retrieve specific information about the network using filtering and formatting options.

This command will display comprehensive details about the overlay network, including its configuration, connected containers, and network endpoints.

Example output:

[

{

"Name": "minio-overlay-network",

"Id": "nvk9xhel1f1qs1nuzf2trbiv1",

"Created": "2023-06-03T15:30:00.123456789Z",

"Scope": "swarm",

"Driver": "overlay",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

"Subnet": "10.0.1.0/24",

"Gateway": "10.0.1.1"

}

]

},

"Containers": {

"0e1f2d3c4b5a": {

"Name": "minio_service.1.abc123def456",

"EndpointID": "1a2b3c4d5e6f",

"MacAddress": "02:42:0a:00:01:03",

"IPv4Address": "10.0.1.3/24",

"IPv6Address": ""

},

...

},

"Options": {

"com.docker.network.driver.overlay.vxlanid_list": "4097"

},

"Labels": {},

"Peers": [

...

]

}

]

Output: docker network inspect

The output provides valuable information about the minio-overlay-network, including:

- Network ID and name

- Scope (e.g., swarm)

- Driver (e.g., overlay)

- IP address management (IPAM) configuration

- Connected containers and their network endpoints

- Network options and labels

By inspecting the overlay network, you can verify that the MinIO service containers are properly connected and identify any network-related issues.

For example, to get the subnet of the overlay network:

docker network inspect --format='{{range .IPAM.Config}}{{.Subnet}}{{end}}' minio-overlay-networkdocker network inspect --format

This command will output the subnet configuration of the minio-overlay-network.

Step 5: Access MinIO Container and Service

After deploying the MinIO service and verifying the overlay network, you can access the MinIO container and service to interact with your object storage.

Accessing the MinIO container: you can directly execute commands within the container’s environment (like docker exec) or attaching to the container for interactive tasks. This approach is used for direct interaction with a specific instance of MinIO running in a container.

To access the MinIO server running inside a container, you can use the docker exec command to open a shell or execute commands within the container.

First, retrieve the container ID of a MinIO service replica:

CONTAINER_ID=$(docker ps --filter "name=minio_service" --format "{{.ID}}" | head -n 1)Bash scripting to set CONTAINER_ID

Then, open a shell inside the MinIO container:

docker exec -it $CONTAINER_ID shdocker execute with interactive terminal on CONTAINER_ID

Once inside the container, you can run MinIO CLI commands or interact with the MinIO server directly.

For example, to list objects in a bucket using the MinIO CLI:

mc ls minio/my-bucketminio client (mc) listing objects in minio/my-bucket

This command will list the objects stored in the "my-bucket" bucket.

Accessing the MinIO Service: This involves interactions that consider the MinIO deployment as a whole, especially relevant in a Docker Swarm environment where there might be multiple replicas of MinIO running. Actions like scaling the service, updating the service, or accessing the MinIO web console through the service’s published port fall under this category.

To access the MinIO service deployed in the Docker Swarm cluster, you can use the docker service command.

List the running services:

docker service lsdocker list services

Expected Outcome:

ID NAME MODE REPLICAS IMAGE PORTS

abc123def456 minio_service replicated 4/4 minio/minio *:9000->9000/tcpVerify the status of the MinIO service replicas:

docker service ps minio_serviceList the service process status

Expected Outcome:

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

def456abc123 minio_service.1 minio/minio node1 Running Running 5 minutes ago

ghi789jkl012 minio_service.2 minio/minio node2 Running Running 5 minutes ago

jkl012mno345 minio_service.3 minio/minio node3 Running Running 5 minutes ago

mno345pqr678 minio_service.4 minio/minio node4 Running Running 5 minutes ago The output shows the details of each replica of the MinIO service, indicating that they are up and running on different nodes in the Docker cluster.

Access MinIO Web Console: To access the MinIO web console, open a web browser and navigate to http://<swarm-manager-ip>:9000. Replace <swarm-manager-ip> with the IP address of any node in the Docker cluster.

You will be prompted to enter the access key and secret key. Obtain these credentials from the MinIO server logs or the environment variables set during the service creation.

Once logged in, you can use the MinIO web console to manage buckets, upload/download objects, and perform various object storage operations.

By accessing the MinIO container, service, and web console, you can effectively interact with your distributed object storage deployed using overlay networks. This allows you to manage your data seamlessly across multiple nodes in the cluster.

Updating or Removing a Network from a Service

To update the network of a service that is already up and running you can use the docker service update --network-add command:

docker service update --network-add minio-overlay-network <service-id>docker service update --network-add

Expected Outcome:

<service-id>

overall progress: 1 out of 1 tasks

1/1: running

verify: Service convergedIf a service is mistakenly attached to a network, you can remove it from that network with the --network-rm command:

docker service update --network-rm minio-overlay-network <service-id>docker service update --network-rm

Step 5: Test MinIO Connectivity

Test the connectivity and functionality of the MinIO deployment using the MinIO Client (mc) or any S3-compatible SDK or tool.

This overview demonstrates how overlay networks in Docker simplify the deployment and management of distributed storage solutions like MinIO. By leveraging overlay networks, MinIO instances can communicate seamlessly across multiple nodes in a Docker cluster, providing a scalable and resilient storage layer for containerized applications.

Conclusion

Overlay networks have emerged as an important concept for bridging the knowledge gap faced by DevOps and SRE engineers in the realm of modern networking. By providing a powerful abstraction layer that simplifies networking complexities, overlay networks enable the deployment of scalable, flexible, and secure network configurations in containerized environments.

The native support for overlay networks in Docker and the integration with Kubernetes through CNI plugins have modernized the way distributed applications are deployed and managed. These technologies have greatly simplified the networking challenges associated with modern, cloud-native architectures, allowing seamless communication between containers across multiple hosts.

In conclusion, overlay networks, in conjunction with containerization technologies like Docker and Kubernetes, provide a powerful toolset for addressing the networking challenges of the modern era. By understanding and leveraging these technologies, engineers can build scalable, flexible, and secure infrastructures that can drive innovation and business success in the digital age. As the adoption of containerization continues to grow, the mastery of overlay networks will become an essential skill for DevOps and SRE professionals alike, enabling them to navigate the complexities of modern networking with confidence and expertise.

If you have any questions about overlay networks or anything networking be sure to reach out to us on Slack or hello@min.io.