How to Use Nginx, LetsEncrypt and Certbot for Secure Access to MinIO

When you set up any piece of infrastructure, you want to do it in a scalable and secure way. The same goes for your MinIO data infrastructure. You want to ensure:

- Traffic to the MinIO cluster is distributed evenly

- There is no single point of failure in the MinIO cluster

- Communication with the MinIO cluster is secure

- MinIO nodes can be taken offline easily for maintenance

We’ll go over how to set up load balancing and TLS with MinIO using Nginx and LetsEncrypt/Certbot. We’ll use containers, but we won’t automate everything with something such as cert-manager in Kubernetes, so that we understand the internals of how the various components work and integrate together. If you’d prefer to automate it later, I actually recommend it, because as you scale the number of applications, manually adding/removing MinIO nodes from the load balancer or renewing certificates every 3 months can get cumbersome.

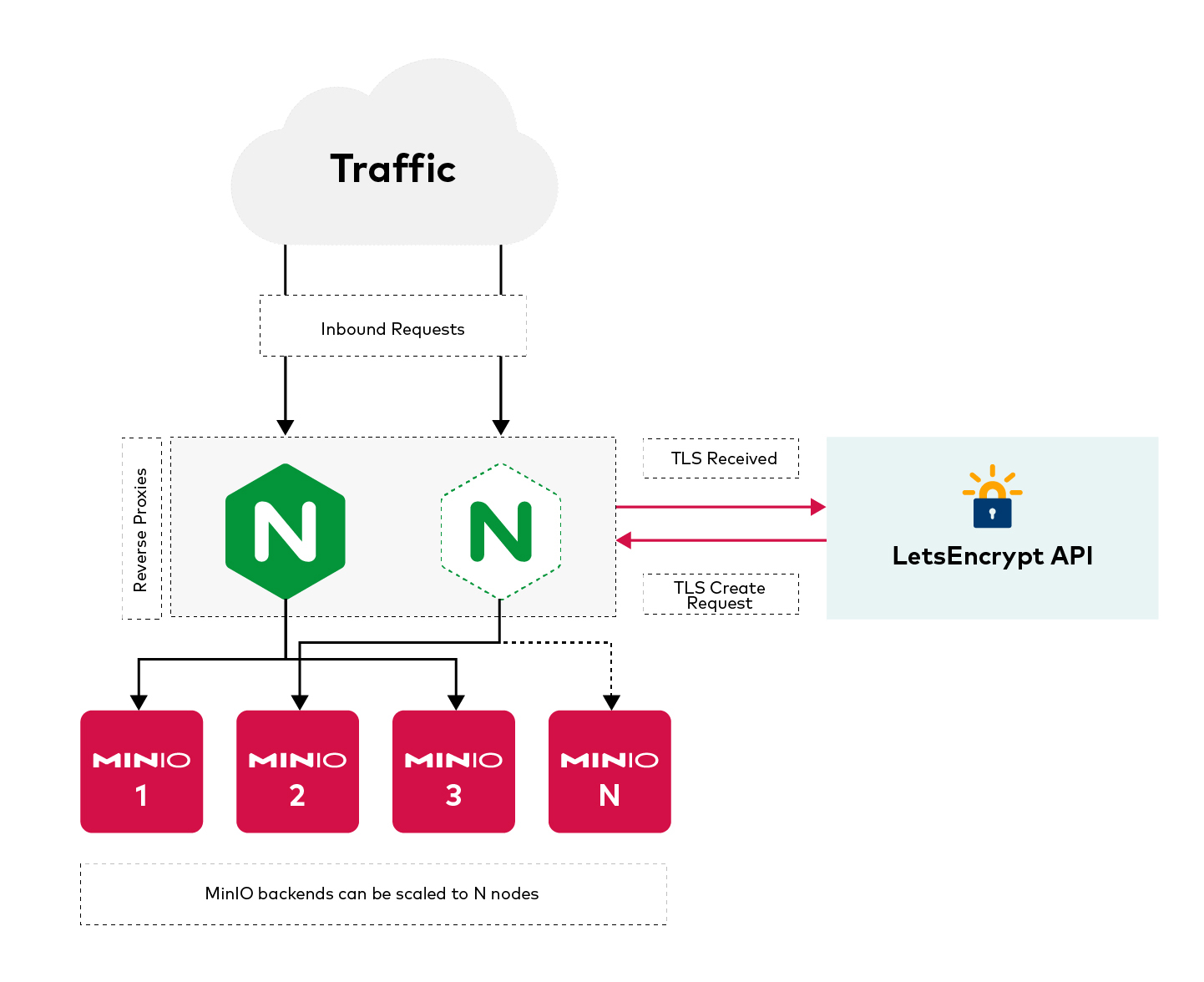

Let’s take a look at a diagram of what we’re about to set up:

MinIO

We’ll bring up 3 identical MinIO nodes with 4 disks each. MinIO runs anywhere - physical, virtual or containers - and in this overview we will use containers created using Docker.

Nodes Setup

Let’s name these 3 nodes:

- minio1

- minio2

- minio3

We’ll walk through deploying minio1, and then you can use the same process for minio2 and minio3.

For the 4 disks, create the directories on the host for minio1:
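A minimal sketch of this step, assuming a hypothetical base directory on the host ($HOME/minio here, overridable through a MINIO_BASE variable; neither name comes from the original setup):

```shell
# Hypothetical base directory on the host (an assumption, adjust as needed).
MINIO_BASE="${MINIO_BASE:-$HOME/minio}"

# Create the four disk directories for node <id>,
# e.g. $MINIO_BASE/minio1/disk1 ... $MINIO_BASE/minio1/disk4.
create_disks() {
  id="$1"
  for disk in 1 2 3 4; do
    mkdir -p "$MINIO_BASE/minio${id}/disk${disk}"
  done
}

create_disks 1
```

Calling create_disks 2 and create_disks 3 would cover the other two nodes.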

Replace <id> with 1. Repeat the above for minio2 and minio3 as well.

Launch Nodes

Launch 3 Docker containers with the following specifications, similarly replacing <id> with 1, 2 and 3 for the MinIO nodes:

-p <host>:<container>: This is the console UI port exposed on the host which we can use to view buckets and objects and manage MinIO.

-v <host>:<container>: This is for mounting the local directories you created earlier as disks on the container.

--name and --hostname: Name these the same so it's consistent when configuring the containers.

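quay.io/minio/minio server http://minio{1...3}/mnt/disk{1...4}/minio --console-address ":9001": MinIO itself expands the minio{1...3} and disk{1...4} ranges (note the three dots, which differ from the shell’s two-dot brace expansion) into the 3 hostnames and 4 disk paths before applying them to the server configuration.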
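Putting these flags together, the launch command might look roughly like the sketch below. The host base directory (MINIO_BASE) and the user-defined Docker network (minio-net) are assumptions made for illustration; a user-defined network is used here because Docker only resolves container names such as minio1 on user-defined networks, not on the default bridge.

```shell
MINIO_BASE="${MINIO_BASE:-$HOME/minio}"  # hypothetical host base directory
MINIO_NET="${MINIO_NET:-minio-net}"      # hypothetical user-defined Docker network

# Start MinIO node <id>: console on host port 2009<id>, four disks mounted
# at /mnt/disk1..4, container name and hostname both set to minio<id>.
launch_minio() {
  id="$1"
  docker run -d \
    --network "$MINIO_NET" \
    -p "2009${id}:9001" \
    -v "$MINIO_BASE/minio${id}/disk1:/mnt/disk1" \
    -v "$MINIO_BASE/minio${id}/disk2:/mnt/disk2" \
    -v "$MINIO_BASE/minio${id}/disk3:/mnt/disk3" \
    -v "$MINIO_BASE/minio${id}/disk4:/mnt/disk4" \
    --name "minio${id}" --hostname "minio${id}" \
    quay.io/minio/minio server \
    "http://minio{1...3}/mnt/disk{1...4}/minio" \
    --console-address ":9001"
}
```

Under these assumptions, you would run docker network create minio-net once, then launch_minio 1, launch_minio 2 and launch_minio 3.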

When all 3 nodes are up and communicating with each other, run docker logs minio1.

If the output shows 12 Online, you’ve successfully set up the cluster of 3 MinIO nodes with 4 drives each, a total of 12 drives online.
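If you would rather script this check than read the logs by eye, a small helper along these lines could work. It only searches for the "12 Online" text mentioned above and makes no assumption about the rest of the log format; the function name is hypothetical.

```shell
# Succeed if the container's logs mention the expected number of online drives.
drives_online() {
  container="$1"
  expected="${2:-12}"
  docker logs "$container" 2>&1 | grep -q "${expected} Online"
}
```

For example, drives_online minio1 && echo "cluster is up" prints the message only once the line has appeared in the logs.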

Testing Your New MinIO Cluster

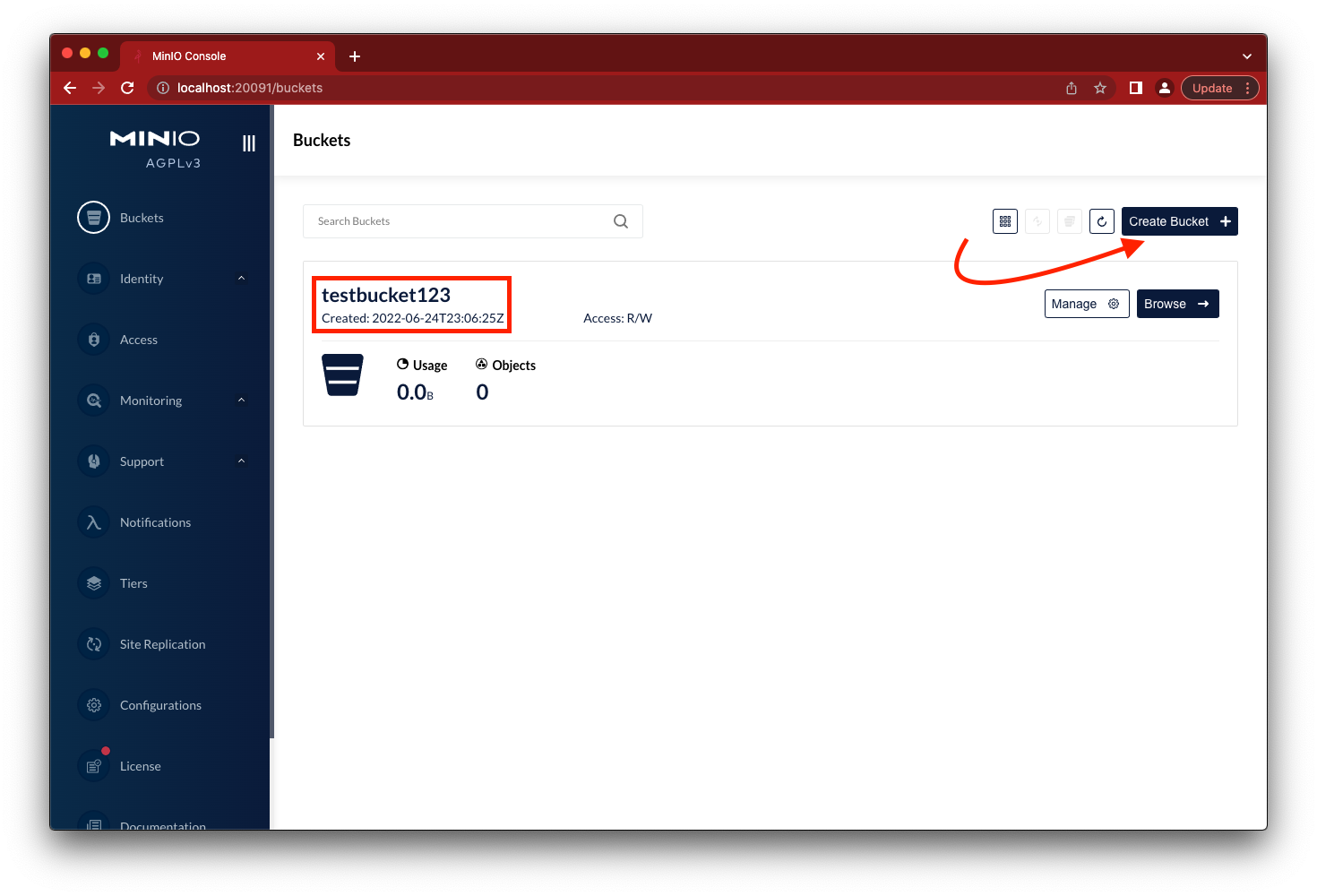

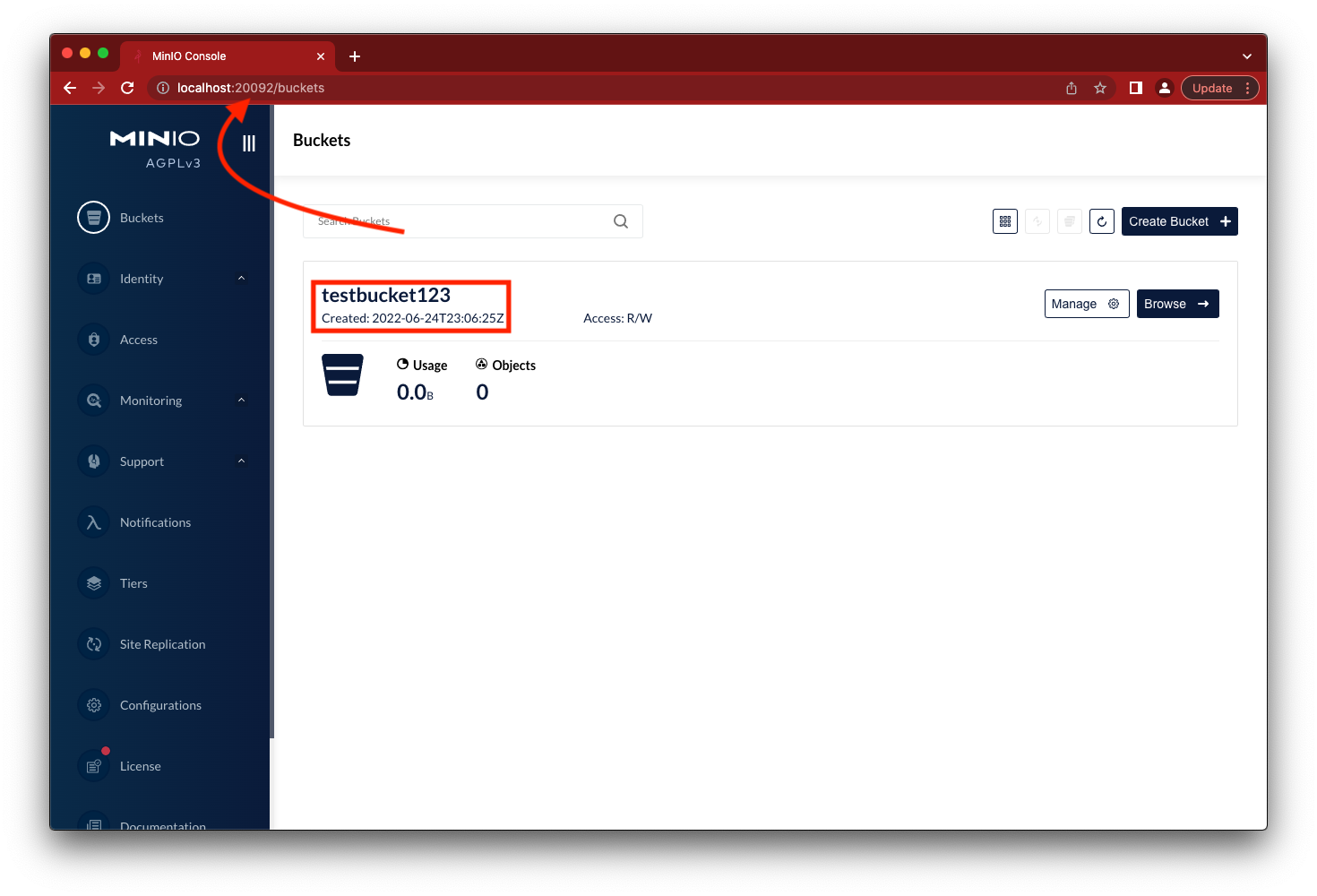

Go to the browser to load the MinIO console for 1 of the 3 containers on their respective ports http://localhost:2009<id>, replacing <id> with 1, 2 or 3 for the MinIO nodes. Click on the Create Bucket button and create testbucket123.

If we access the console on another one of the MinIO containers we will see the same bucket.

Nginx

Load Balancing

Nginx started out as a web server and evolved so that today it is also used as an ingress controller in Kubernetes. It has grown in many directions, but one thing it arguably does well is acting as a reverse proxy: it accepts requests on behalf of a set of backend nodes and distributes them across those nodes, which is what gives us load balancing.

TLS Termination

When using a reverse proxy, we should terminate the TLS connection at the proxy layer. This way you don’t have to worry about renewing the certs on each individual node when they expire. If you have a configuration management process in place, then this can be simplified and automated.

Configuration

There are 2 ports in our MinIO cluster, the server running on port 9000 and the console running on port 9001, and we need to proxy both of these through Nginx.

Create 2 upstreams, one for each of the 2 ports (9000 and 9001), and list our backend MinIO servers in each:

The minio_server is for port 9000 and minio_console is for 9001.
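As a rough sketch, the two upstream blocks could be generated like this. The upstream names and hostnames are the ones used in this post; the helper function name is hypothetical. With no balancing method specified, Nginx defaults to round-robin across the listed servers.

```shell
# Print the two upstream blocks for the MinIO server and console ports.
print_upstreams() {
  cat <<'EOF'
upstream minio_server {
    server minio1:9000;
    server minio2:9000;
    server minio3:9000;
}

upstream minio_console {
    server minio1:9001;
    server minio2:9001;
    server minio3:9001;
}
EOF
}
```

Redirecting the output, for example print_upstreams > default.conf, would start the configuration file.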

Create 2 Nginx server blocks, one for each of the upstreams:
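A sketch of what the two server blocks might contain is shown below. The listen ports, server_name and upstream names follow this post; the proxy headers, body size and WebSocket settings are common reverse-proxy choices assumed here for illustration and may differ from your needs.

```shell
# Print one server block per upstream: port 9000 for the MinIO server,
# port 9001 for the console.
print_servers() {
  cat <<'EOF'
server {
    listen 9000;
    server_name localhost;

    # Do not cap upload size or buffer object data in the proxy.
    ignore_invalid_headers off;
    client_max_body_size 0;
    proxy_buffering off;

    location / {
        proxy_set_header Host $http_host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
        proxy_pass http://minio_server;
    }
}

server {
    listen 9001;
    server_name localhost;

    location / {
        proxy_set_header Host $http_host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;

        # Forward WebSocket upgrade requests from the console UI.
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection "upgrade";

        proxy_pass http://minio_console;
    }
}
EOF
}
```

Appending the output to the same file, for example print_servers >> default.conf, keeps the whole configuration in one place.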

Once you have written the configuration, save it as default.conf in a location you can mount later into the Nginx container. In this case I have used the /home/aj/nginx/conf.d directory.

Using the custom configuration, launch an Nginx image with the port mappings. In this example we are mapping host ports 39000 and 39001 to nginx container ports 9000 and 9001, respectively.
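The launch command could be sketched as follows. The port mappings and the host configuration directory come from this post; the container name (nginx) and the shared Docker network (minio-net) are assumptions, the latter so that Nginx can resolve minio1, minio2 and minio3 by name.

```shell
NGINX_CONF_DIR="${NGINX_CONF_DIR:-/home/aj/nginx/conf.d}"  # host directory holding default.conf
MINIO_NET="${MINIO_NET:-minio-net}"                        # hypothetical network shared with MinIO

# Start Nginx with host ports 39000/39001 mapped to container ports 9000/9001
# and the custom configuration mounted read-only.
launch_nginx() {
  docker run -d \
    --name nginx \
    --network "$MINIO_NET" \
    -p 39000:9000 \
    -p 39001:9001 \
    -v "$NGINX_CONF_DIR:/etc/nginx/conf.d:ro" \
    nginx
}
```

Running launch_nginx starts the proxy; the official nginx image loads every .conf file found in /etc/nginx/conf.d.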

Testing Reverse Proxy

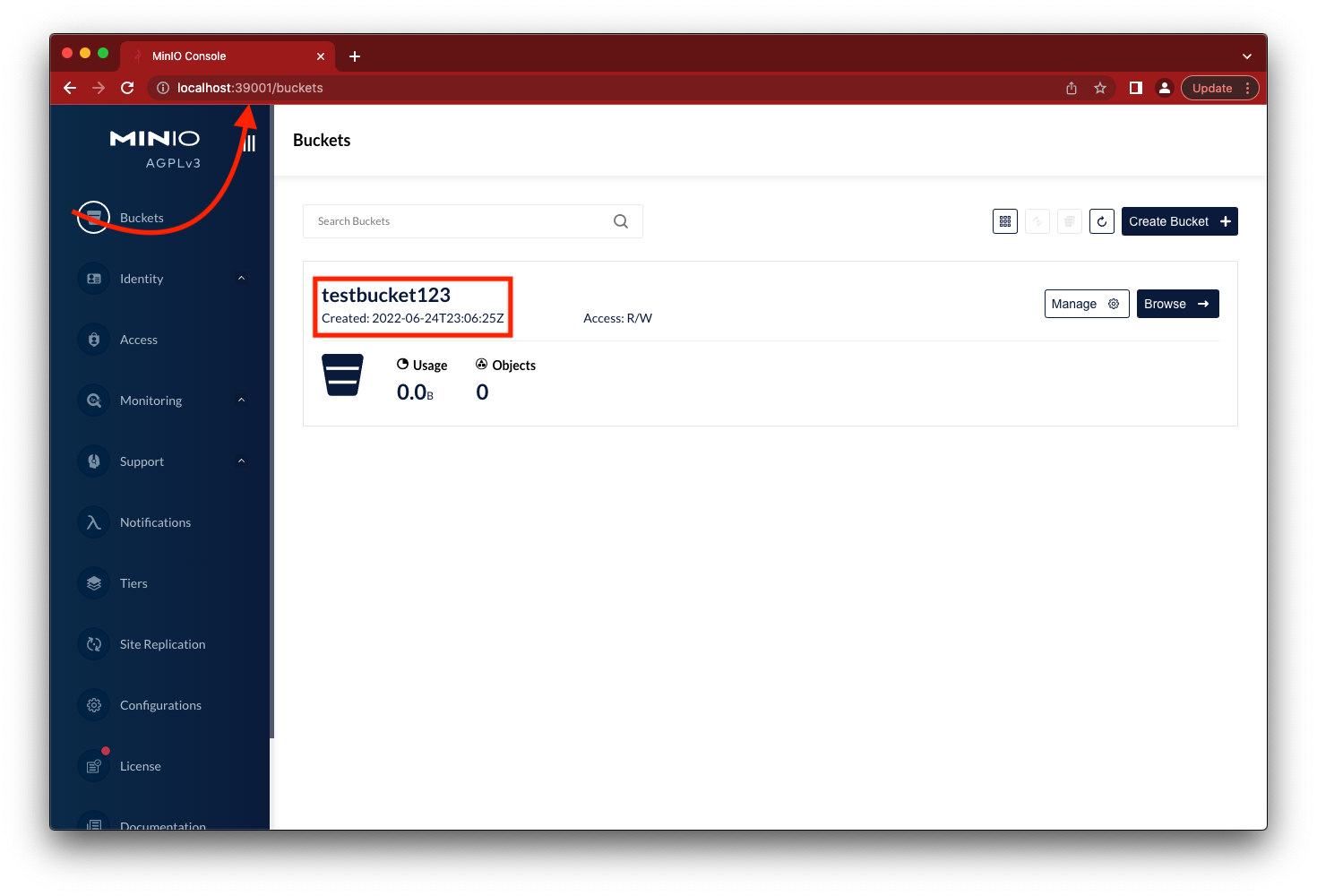

The 3 MinIO containers are now being served by the Nginx reverse proxy. We can confirm this by going to http://localhost:39001 and we should see the same testbucket123.

Nginx will ensure the requests get distributed evenly, so we don’t need to go to individual containers to access the console. The same goes for the server, you can access it on localhost:39000, Nginx will take care of load balancing.
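To check the server port from the command line as well, one option is to query MinIO’s liveness endpoint (/minio/health/live) through the proxy. This is a sketch with a hypothetical helper name; the default URL matches the port mapping used above.

```shell
# Succeed if the MinIO liveness endpoint behind the proxy returns a 2xx status.
minio_alive() {
  base_url="${1:-http://localhost:39000}"
  curl -fsS -o /dev/null "$base_url/minio/health/live"
}
```

For example, minio_alive && echo "proxy is serving MinIO" prints the message only when the request succeeds.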

LetsEncrypt

These days Certbot is the de facto tool for creating and managing LetsEncrypt TLS certificates. We will use it to manage the lifecycle of a certificate in an automated way. Some of the things it will help you with are:

- Creating certificates

- Renewing certificates

- Revoking certificates

In our case, we’ll use Certbot to create a certificate that we will use with Nginx from the previous step.

Create Certificate

While we won’t use total automation like cert-manager with Kubernetes in this tutorial, we will use Certbot to make the generation and renewal of our certificates a bit easier - let’s not make it that difficult either :)

There are several ways to install Certbot on various platforms; follow the official Certbot instructions for yours. Once you have Certbot installed, there are two ways to prove to the LetsEncrypt API that you control the domain, using what they call “challenges”.

Challenges

For example, let’s assume the domain you are trying to create certificates for is minio.example.com:

HTTP

- The HTTP challenge expects the URL path /.well-known/acme-challenge/:id, in this case http://minio.example.com/.well-known/acme-challenge/:id, to be accessible on port 80.

During the cert generation process Certbot writes files under /.well-known/acme-challenge/ in the web root, and LetsEncrypt fetches them over HTTP to do the verification. For example, this is what an Nginx config for the challenge could look like:
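A minimal sketch of such a server block, assuming the web root /var/html/certbot and the example domain used in this post (the helper function name is hypothetical):

```shell
# Print a port-80 server block that serves ACME challenge files
# from the directory Certbot writes to.
print_challenge_server() {
  cat <<'EOF'
server {
    listen 80;
    server_name minio.example.com;

    # A request for /.well-known/acme-challenge/<id> is read from
    # /var/html/certbot/.well-known/acme-challenge/<id>.
    location /.well-known/acme-challenge/ {
        root /var/html/certbot;
    }
}
EOF
}
```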

Then the certbot --webroot-path would be /var/html/certbot.

Rather than manually setting up the above, the easiest way is to:

- Spin up a VM with a public IP; you need port 80 open.

- Update your DNS A record for minio.example.com to the public IP of the VM.

- Run the following command, which will spin up a temporary server, set up the challenge and generate the certs:

sudo certbot certonly --standalone

DNS

- In my opinion the DNS challenge is a bit simpler because all you have to do is set a record in minio.example.com’s DNS zone. There is no overhead of creating additional infrastructure or servers.

- Run the following command to start the process of setting up the DNS based challenge:

certbot -d minio.example.com --manual --preferred-challenges dns certonly

- The above command will stop at a prompt asking you to set a TXT record for _acme-challenge.minio.example.com to a specific value. Once this record resolves successfully, the challenge will pass and you will have new certificates for minio.example.com.

One of the downsides to the DNS challenge method is that DNS propagation is not instantaneous. Even if you can resolve it locally on your laptop, the LetsEncrypt DNS servers might not, so you have to wait. Generally this is quick but it's not guaranteed.
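One way to reduce the guesswork is to query a public resolver for the TXT record before continuing at the Certbot prompt. The sketch below assumes dig is installed and uses 8.8.8.8 as an arbitrary public resolver; it only approximates what LetsEncrypt will see, so a successful check is a good sign rather than a guarantee.

```shell
# Succeed once the _acme-challenge TXT record for <domain> contains <expected>.
txt_record_ready() {
  domain="$1"
  expected="$2"
  resolver="${3:-8.8.8.8}"
  dig +short TXT "_acme-challenge.${domain}" "@${resolver}" | grep -qF -- "$expected"
}
```

For example, txt_record_ready minio.example.com "<value from the prompt>" returns success once the record is visible to that resolver.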

Configuring Nginx

No matter which method you use, once the certs are generated there should be two files, fullchain.pem and privkey.pem. Save these in a host directory that we will later mount into the Nginx container at /etc/nginx/certs. In this case let’s put them in the /home/aj/nginx/certs directory.

Modify the 2 server { … } blocks in the Nginx default.conf file as follows:

- Change server_name localhost to server_name minio.example.com.

- Add the ssl parameter to each listen directive so that Nginx serves TLS on that port.

- Add the following 2 directives:

ssl_certificate /etc/nginx/certs/fullchain.pem

ssl_certificate_key /etc/nginx/certs/privkey.pem

Both server { … } blocks should look something like this:
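A trimmed sketch of the two TLS-enabled server blocks follows. The server_name and certificate paths are the ones named above; the ssl parameter on listen is what makes Nginx speak TLS on the port, and the proxy headers are illustrative assumptions kept short here.

```shell
# Print the two server blocks with TLS enabled on ports 9000 and 9001.
print_tls_servers() {
  cat <<'EOF'
server {
    listen 9000 ssl;
    server_name minio.example.com;

    ssl_certificate     /etc/nginx/certs/fullchain.pem;
    ssl_certificate_key /etc/nginx/certs/privkey.pem;

    location / {
        proxy_set_header Host $http_host;
        proxy_set_header X-Forwarded-Proto $scheme;
        proxy_pass http://minio_server;
    }
}

server {
    listen 9001 ssl;
    server_name minio.example.com;

    ssl_certificate     /etc/nginx/certs/fullchain.pem;
    ssl_certificate_key /etc/nginx/certs/privkey.pem;

    location / {
        proxy_set_header Host $http_host;
        proxy_set_header X-Forwarded-Proto $scheme;
        proxy_pass http://minio_console;
    }
}
EOF
}
```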

Don’t forget to update the Nginx docker run command with an additional -v so the certs are available for Nginx in the next step:
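With the extra mount, the command might look like the sketch below. The host directories and port mappings come from this post; the container name (nginx) and the network (minio-net) are assumptions made for illustration.

```shell
NGINX_CONF_DIR="${NGINX_CONF_DIR:-/home/aj/nginx/conf.d}"  # host directory holding default.conf
NGINX_CERT_DIR="${NGINX_CERT_DIR:-/home/aj/nginx/certs}"   # host directory holding the .pem files
MINIO_NET="${MINIO_NET:-minio-net}"                        # hypothetical network shared with MinIO

# Same as the plain-HTTP launch, plus a read-only mount for the certificates.
launch_nginx_tls() {
  docker run -d \
    --name nginx \
    --network "$MINIO_NET" \
    -p 39000:9000 \
    -p 39001:9001 \
    -v "$NGINX_CONF_DIR:/etc/nginx/conf.d:ro" \
    -v "$NGINX_CERT_DIR:/etc/nginx/certs:ro" \
    nginx
}
```

If a container named nginx from the earlier step is still around, it would need to be removed first (docker rm -f nginx) before calling launch_nginx_tls.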

Execute the above to launch the Nginx container.

Testing TLS

Okay, we’ve got everything configured, but the Nginx docker container doesn’t have a public IP that we can point the minio.example.com A record at, so how do we test TLS?

One way to test is:

- Add a new line in the /etc/hosts file of your local laptop:

  - If the docker host is the same as your local laptop, you can add the line 127.0.0.1 minio.example.com.

  - If the docker host is instead running on another VM with a private IP, you can add the line <vm_private_ip> minio.example.com.

- In the browser go to https://minio.example.com:39001 and you should be able to see a secure connection.
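As an alternative that leaves /etc/hosts untouched, curl can be told to resolve the name to a given IP for a single request with its --resolve option. A sketch, with a hypothetical helper name and defaults matching the example above:

```shell
# Fetch the response headers over TLS, pinning <host>:<port> to <ip>
# for this request only.
check_tls() {
  host="${1:-minio.example.com}"
  port="${2:-39001}"
  ip="${3:-127.0.0.1}"
  curl -sSI --resolve "${host}:${port}:${ip}" "https://${host}:${port}/"
}
```

Because curl still validates the certificate against minio.example.com, a successful response suggests that the certificate and key are being served correctly.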

Further Improvements

- Perhaps Nginx can run on a single port and instead use 2 location /… directives for /server and /console on the same port.

- As we’ve configured it, Nginx is a single point of failure. One possible option is to have multiple Nginx nodes with something like DNS round robin to distribute the traffic in case one of the Nginx instances needs to be taken offline for maintenance etc.

Final Thoughts

LetsEncrypt/Certbot is what comes to everyone’s mind when they talk about TLS certificates; Nginx is what comes to mind for Reverse Proxy and Kubernetes Ingress. Similarly, everyone knows that MinIO is the best object storage available. What’s the common thread to these? They share the straightforward simplicity of well-written cloud-native software, each providing core infrastructure services.

We couldn’t have written this short tutorial with another object store. Other similar object stores are tremendously complex and time consuming to set up, but we just set up a multi-node MinIO cluster that is load-balanced and TLS secured using just a couple of containers.

Don’t take our word for it though - do it yourself. You can download MinIO here and you can join our Slack channel here.