Deploy MinIO and Trino with Kubernetes

Trino (formerly Presto) is a SQL query engine, not a SQL database. It drops the storage component of a database to focus on one thing: ultra-fast SQL querying. Because Trino does not store data itself, it queries external databases or reads directly from object storage. Trino parses and analyzes the SQL query you pass in, creates and optimizes a query execution plan that includes the data sources, and then schedules worker nodes that query the underlying data sources they connect to.

MinIO is frequently used to store data for AI/ML workloads, data lakes, and lakehouses, whether the tool on top is Dremio, Hive, Hudi, StarRocks, or any of the other dozen or so great AI/ML tools. MinIO is more efficient when used as the primary storage layer, which decreases total cost of ownership for the data stored, and data written to MinIO can be immutable, versioned, and protected by erasure coding. In addition, saving data to MinIO object storage makes it available to other cloud-native machine learning and analytics applications.

In this tutorial, we'll deploy a cohesive system that allows distributed SQL querying across large datasets stored in MinIO, with Trino using table metadata from Hive Metastore and table schemas from Redis.

Components

Here are the components we'll deploy and the role each one plays in the setup.

- MinIO: MinIO can be used to store large datasets, like the ones typically analyzed by Trino.

- Hive Metastore: Hive Metastore is a service that stores metadata for Hive tables (like table schema). Trino can use Hive Metastore to determine the schema of tables when querying datasets.

- PostgreSQL for Hive Metastore: This is the database backend for the Hive Metastore. It's where the metadata is actually stored.

- Redis: In this setup, Redis stores table schemas for Trino.

- Trino: Trino (formerly known as Presto) is a high-performance, distributed SQL query engine. It allows querying data across various data sources like SQL databases, NoSQL databases, and even object storage like MinIO.

Prerequisites

Before starting, ensure you have the necessary tools installed for managing your Kubernetes cluster:

- kubectl: The primary command-line tool for managing Kubernetes clusters. You can use it to inspect, manipulate, and administer cluster resources.

- helm: A package manager for Kubernetes. Helm allows you to deploy, upgrade, and manage applications within your cluster using pre-defined charts.

Repository Cloning

To access the resources needed for deploying Trino on Kubernetes, clone the specific GitHub repository and navigate to the appropriate directory:

Kubernetes Namespace Creation

Namespaces in Kubernetes provide isolated environments for applications. Create a new namespace for Trino to encapsulate its deployment:
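
A minimal sketch of this step. The namespace name `trino` is an assumption inferred from the later references to "the Trino namespace"; adjust it if your cluster uses a different name.

```shell
# Assumed namespace name; not confirmed by the tutorial text.
NAMESPACE="trino"

# Create the namespace. The fallback message fires if the namespace
# already exists or kubectl cannot reach a cluster.
kubectl create namespace "$NAMESPACE" \
  || echo "could not create namespace '$NAMESPACE' (does it already exist? is kubectl configured?)" >&2
```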

Redis Table Definition Secret

Redis will store table schemas used by Trino. Secure these schemas with a Kubernetes Secret. The following command creates a generic secret, sourcing data from a JSON file:
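
As a sketch, the secret could be created as follows. The secret name and the JSON file path are hypothetical placeholders, as is the `trino` namespace; substitute the values used in your copy of the repository.

```shell
NAMESPACE="trino"                        # assumed namespace name
SECRET_NAME="redis-table-definition"     # hypothetical secret name
TABLE_DEF_FILE="redis/table.json"        # hypothetical path to the table definition JSON

# --from-file stores the file contents under a key named after the file.
kubectl create secret generic "$SECRET_NAME" \
  --from-file="$TABLE_DEF_FILE" \
  --namespace "$NAMESPACE" \
  || echo "could not create secret '$SECRET_NAME' (check the file path and cluster access)" >&2
```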

Add Helm Repositories

Helm repositories provide pre-packaged charts that simplify application deployment. Add the Bitnami and Trino repositories to your Helm configuration:
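
One way to do this, assuming the publicly documented chart repository URLs for Bitnami and Trino (verify them against each project's current documentation):

```shell
BITNAMI_REPO_URL="https://charts.bitnami.com/bitnami"
TRINO_REPO_URL="https://trinodb.github.io/charts/"

# Register both repositories under the local names "bitnami" and "trino".
helm repo add bitnami "$BITNAMI_REPO_URL" || echo "could not add the bitnami repository" >&2
helm repo add trino "$TRINO_REPO_URL" || echo "could not add the trino repository" >&2

# Refresh the local chart index so the latest chart versions are visible.
helm repo update || echo "could not update helm repositories" >&2
```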

Deploy MinIO for Data Storage

Initialize MinIO

Prepare MinIO within the Trino namespace.
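
A sketch of the initialization, assuming the `kubectl minio` plugin that shipped with older MinIO Operator releases is installed and that the namespace is named `trino`. Newer Operator releases are installed differently, so treat this as illustrative.

```shell
NAMESPACE="trino"   # assumed namespace name

# Install the MinIO Operator into the namespace via the kubectl-minio plugin.
kubectl minio init --namespace "$NAMESPACE" \
  || echo "operator init failed (is the kubectl minio plugin installed?)" >&2
```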

Create MinIO Tenant

Set up a multi-tenant architecture for data storage. The example below creates a tenant named “tenant-1” with four servers, four storage volumes, and a capacity of 4 GiB:
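
The tenant described above could be created along these lines, again assuming the `kubectl minio` plugin from older Operator releases and a namespace named `trino`. The sizing values come from the text; everything else is an assumption.

```shell
NAMESPACE="trino"       # assumed namespace name
TENANT_NAME="tenant-1"
SERVERS=4
VOLUMES=4
CAPACITY="4Gi"          # total capacity, split across the four volumes

kubectl minio tenant create "$TENANT_NAME" \
  --servers "$SERVERS" \
  --volumes "$VOLUMES" \
  --capacity "$CAPACITY" \
  --namespace "$NAMESPACE" \
  || echo "tenant creation failed (check the plugin, storage class, and namespace)" >&2
```

The command prints the tenant's generated credentials on success; keep them, since they are needed later to configure the `mc` alias.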

Set Up Hive Metastore

Trino utilizes Hive Metastore to store table metadata. Deploy PostgreSQL to manage the metadata, then set up the Hive Metastore:

Install PostgreSQL
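
A possible installation using the Bitnami PostgreSQL chart. The release name and the values file path are hypothetical, and the `bitnami` repository alias is assumed to have been added earlier.

```shell
NAMESPACE="trino"                                         # assumed namespace name
PG_RELEASE="hive-metastore-postgresql"                    # hypothetical release name
PG_VALUES_FILE="hive-metastore-postgresql/values.yaml"    # hypothetical values file path

# "upgrade --install" installs the release if absent and upgrades it otherwise.
helm upgrade --install "$PG_RELEASE" bitnami/postgresql \
  --namespace "$NAMESPACE" \
  --values "$PG_VALUES_FILE" \
  || echo "PostgreSQL install failed (check the values file path and helm repositories)" >&2
```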

Deploy Hive Metastore

Use a preconfigured Helm chart to deploy Hive Metastore within the Trino namespace:
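
Since the chart location is not given here, the following is only a sketch: the release name, chart directory, and values file are hypothetical placeholders for whatever the cloned repository provides.

```shell
NAMESPACE="trino"                               # assumed namespace name
HMS_RELEASE="my-hive-metastore"                 # hypothetical release name
HMS_CHART_DIR="./charts/hive-metastore"         # hypothetical local chart directory
HMS_VALUES_FILE="hive-metastore/values.yaml"    # hypothetical values file path

# Install the Hive Metastore from a local chart directory.
helm upgrade --install "$HMS_RELEASE" "$HMS_CHART_DIR" \
  --namespace "$NAMESPACE" \
  --values "$HMS_VALUES_FILE" \
  || echo "Hive Metastore install failed (check the chart and values paths)" >&2
```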

Deploy Redis to Store Table Schemas

Redis is a high-speed, in-memory data store used to hold Trino table schemas for enhanced query performance. Deploy it in the Trino namespace using a Helm chart:
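
For illustration, a Redis deployment with the Bitnami chart might look like this. The release name and values file path are hypothetical, and the `bitnami` repository alias is assumed.

```shell
NAMESPACE="trino"                       # assumed namespace name
REDIS_RELEASE="my-redis"                # hypothetical release name
REDIS_VALUES_FILE="redis/values.yaml"   # hypothetical values file path

helm upgrade --install "$REDIS_RELEASE" bitnami/redis \
  --namespace "$NAMESPACE" \
  --values "$REDIS_VALUES_FILE" \
  || echo "Redis install failed (check the values file path and helm repositories)" >&2
```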

Deploy Trino

Deploy Trino as the distributed SQL query engine that will connect to MinIO and other data sources:
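
A sketch of the Trino installation from the official chart. The release name and values file path are hypothetical; the `trino` repository alias is assumed to point at the Trino chart repository.

```shell
NAMESPACE="trino"                       # assumed namespace name
TRINO_RELEASE="my-trino"                # hypothetical release name
TRINO_VALUES_FILE="trino/values.yaml"   # hypothetical values file (catalogs, workers, etc.)

# Chart reference is <repo alias>/<chart name>, here trino/trino.
helm upgrade --install "$TRINO_RELEASE" trino/trino \
  --namespace "$NAMESPACE" \
  --values "$TRINO_VALUES_FILE" \
  || echo "Trino install failed (check the values file path and helm repositories)" >&2
```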

Verify Deployment

Confirm that all components are running correctly by listing the pods in the Trino namespace:
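
For example, assuming the namespace is named `trino`:

```shell
NAMESPACE="trino"   # assumed namespace name

# Every pod should eventually report STATUS "Running" (or "Completed" for jobs).
kubectl get pods --namespace "$NAMESPACE" \
  || echo "could not list pods (is kubectl configured for the cluster?)" >&2
```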

Security Review and Adjustments

Review and adjust security settings as needed. To disable SSL certificate validation for S3 connections, update the additionalCatalogs section of the values.yaml file with the following property:

Testing

Port Forward to MinIO Tenant Service

Port forward to the MinIO service of the tenant, enabling local access:
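
A sketch of the port forward. It assumes the tenant exposes a service named `minio` on port 443 (the usual Operator default when TLS is enabled) in a namespace named `trino`; the local port 9443 is an arbitrary choice. Check `kubectl get svc` if your service name or port differs.

```shell
NAMESPACE="trino"       # assumed namespace name
MINIO_SERVICE="minio"   # assumed tenant service name
LOCAL_PORT=9443         # arbitrary local port
REMOTE_PORT=443         # assumed service port (TLS)

# Runs in the foreground until interrupted; use a separate terminal for later steps.
kubectl port-forward "svc/$MINIO_SERVICE" "$LOCAL_PORT:$REMOTE_PORT" \
  --namespace "$NAMESPACE" \
  || echo "port-forward exited (check the service name and port)" >&2
```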

Create Alias and Bucket for Trino

1. Create Alias: Establish an alias for the tenant using the credentials from the MinIO deployment:

2. Create Bucket: Create a new bucket that Trino will use:
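
The two steps above can be sketched with the `mc` client as follows. The alias name, bucket name, and local URL are hypothetical, and the credentials are placeholders to be replaced with the ones issued for your tenant. `--insecure` skips TLS certificate verification, which is assumed to be needed here because the tenant uses a self-signed certificate.

```shell
ALIAS="my-minio"                      # hypothetical alias name
MINIO_URL="https://127.0.0.1:9443"    # assumes a local port forward on 9443
BUCKET="tiny"                         # hypothetical bucket name
# Placeholders: export MINIO_ACCESS_KEY / MINIO_SECRET_KEY with your tenant credentials.
ACCESS_KEY="${MINIO_ACCESS_KEY:-REPLACE_WITH_ACCESS_KEY}"
SECRET_KEY="${MINIO_SECRET_KEY:-REPLACE_WITH_SECRET_KEY}"

# 1. Register the tenant endpoint under a local alias.
mc alias set "$ALIAS" "$MINIO_URL" "$ACCESS_KEY" "$SECRET_KEY" --insecure \
  || echo "could not set alias '$ALIAS' (is the port forward running?)" >&2

# 2. Create the bucket that Trino will read from and write to.
mc mb --insecure "$ALIAS/$BUCKET" \
  || echo "could not create bucket '$BUCKET'" >&2
```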

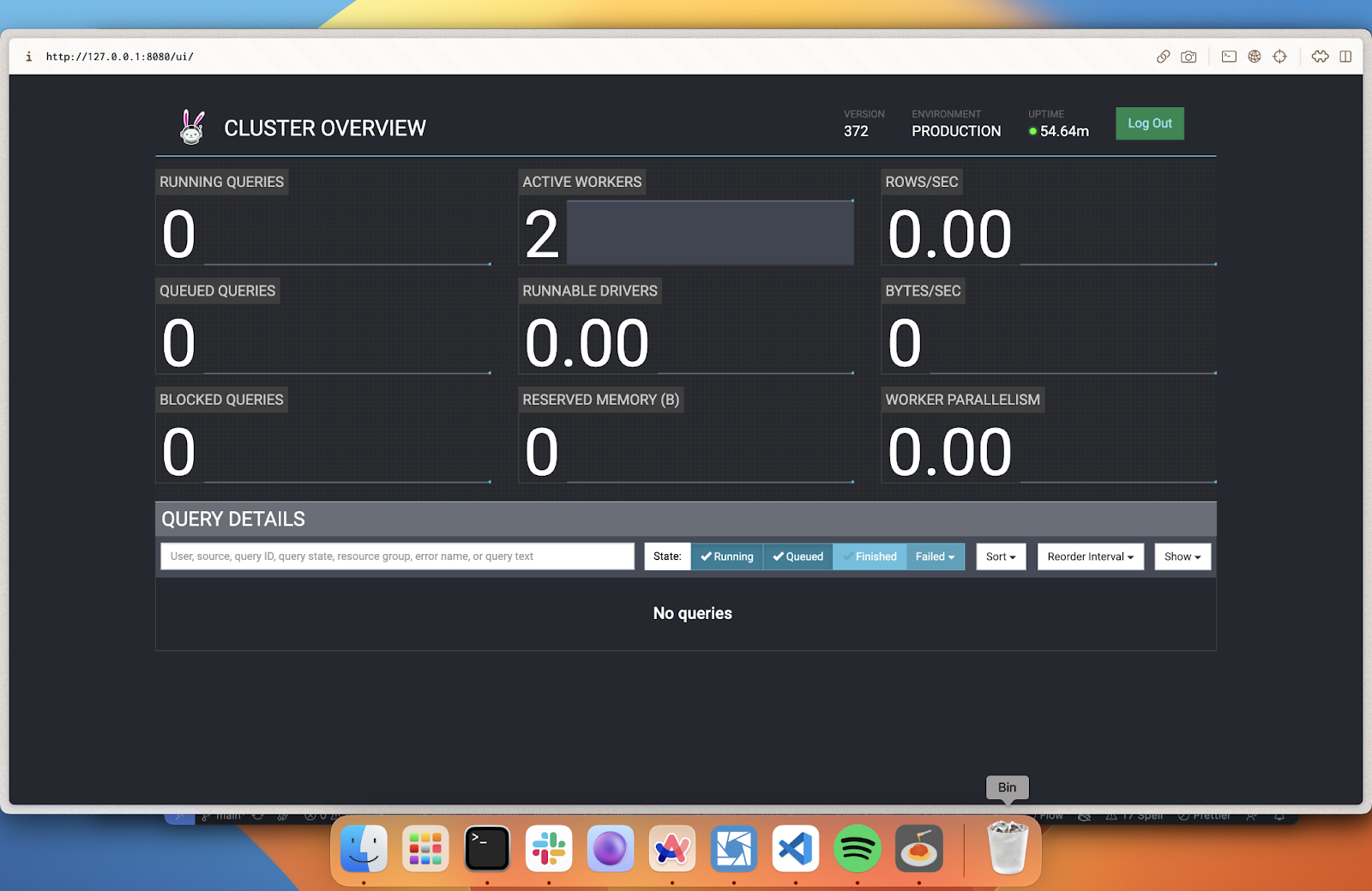

Access Trino UI via Port Forward

1. Obtain Pod Name: Retrieve the name of the Trino coordinator pod:

2. Port Forward: Forward local port 8080 to the coordinator pod:

3. Access UI: Use the Trino UI in your browser by visiting http://127.0.0.1:8080.
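
Steps 1 and 2 might look like the sketch below. It assumes the namespace is named `trino` and that the coordinator pod's name contains the word "coordinator", which is how the Trino chart typically names it; a label selector would also work if you know the labels your chart version applies.

```shell
NAMESPACE="trino"   # assumed namespace name
LOCAL_PORT=8080

# 1. Find the coordinator pod by name (output looks like "pod/<name>").
POD_NAME="$(kubectl get pods --namespace "$NAMESPACE" --output name 2>/dev/null \
  | grep coordinator | head -n 1 || true)"

# 2. Forward the local port to the coordinator's HTTP port 8080.
if [ -n "$POD_NAME" ]; then
  kubectl port-forward --namespace "$NAMESPACE" "$POD_NAME" "$LOCAL_PORT:8080" \
    || echo "port-forward exited" >&2
else
  echo "no coordinator pod found in namespace '$NAMESPACE'" >&2
fi
```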

Query Trino via CLI

Access the Trino coordinator pod and start querying via the command line:
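
As an illustration, the Trino CLI bundled in the coordinator image can be invoked through `kubectl exec`. The namespace name and the pod lookup by name are assumptions, and `SHOW CATALOGS` is just a harmless first query to confirm that the configured catalogs are visible.

```shell
NAMESPACE="trino"             # assumed namespace name
FIRST_QUERY="SHOW CATALOGS"   # simple sanity-check query

# Locate the coordinator pod by name (output looks like "pod/<name>").
POD_NAME="$(kubectl get pods --namespace "$NAMESPACE" --output name 2>/dev/null \
  | grep coordinator | head -n 1 || true)"

if [ -n "$POD_NAME" ]; then
  # Run a single statement non-interactively with --execute.
  kubectl exec --namespace "$NAMESPACE" "$POD_NAME" -- trino --execute "$FIRST_QUERY" \
    || echo "query failed" >&2
  # For an interactive session, run instead:
  #   kubectl exec -it --namespace "$NAMESPACE" "$POD_NAME" -- trino
else
  echo "no coordinator pod found in namespace '$NAMESPACE'" >&2
fi
```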

Confirm Data in MinIO Bucket

After creating the bucket, confirm that the data is stored in MinIO by listing the contents with the mc command-line tool. Use the following command:
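
A sketch of the listing, assuming a hypothetical alias `my-minio` and bucket `tiny` configured earlier, and a self-signed tenant certificate (hence `--insecure`):

```shell
ALIAS="my-minio"   # hypothetical alias name
BUCKET="tiny"      # hypothetical bucket name

# Recursively list every object Trino has written under the bucket.
mc ls --recursive --insecure "$ALIAS/$BUCKET" \
  || echo "could not list '$ALIAS/$BUCKET' (is the alias set and the port forward running?)" >&2
```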

It's as simple as that!

Final Thoughts

When troubleshooting configuration issues, especially those concerning security, thoroughly review the values.yaml files for each component to ensure proper settings.

Trino stands out for its ability to optimize queries across various data layers, whether specialized databases or object storage. It aims to minimize data transfer by pushing down queries to retrieve only the essential data required. This enables Trino to join datasets from different sources, perform further processing, or return precise results efficiently.

MinIO pairs exceptionally well with Trino due to its industry-leading scalability and performance. Able to handle significant workloads across AI/ML and analytics, MinIO supports Trino queries and beyond. MinIO has also created a comprehensive blueprint for data infrastructure that supports exascale AI and other large-scale data lake workloads, called the MinIO DataPod, because exascale data is increasingly common in today's enterprise. This performance keeps data stored in MinIO readily accessible, making MinIO a reliable, high-performing choice for Trino that does not become a bottleneck.

If you have any questions about MinIO and Trino, be sure to reach out to us on Slack!