MinIO Versioning, Metadata and Storage Deep Dive

It started with a SUBNET question. A customer was observing slowdowns occurring in their deployment. After some investigation we located a script the customer was running as the cause. The script was a synchronization script that uploaded the content of a directory, with a one minute delay between each run.

The destination bucket had versioning enabled since the customer was using serverside replication. The script doing the upload had a naive approach where it simply uploaded all files on every run. To keep this in check the customer used an automatic object expiration rule to delete all versions older than one day.

What we identified was that even if the files were small, the sheer number of versions that would accumulate between lifecycle runs caused significant load on the system.

While we could advise the customer to quickly work around the problem, we saw this as an opportunity to improve how we handle excessive version numbers.

Metadata structure

The MinIO server has no database. This is a design choice made early and a major factor in MinIO’s ability to scale in a fault tolerant manner across thousands of servers. Instead of a database, MinIO uses consistent hashing and the file system to store all information and content of objects.

When we implemented versioning, we investigated various options. Before versioning, we stored metadata as JSON. This made it easy to debug issues since we could directly look at the metadata. However, during our research we found that while it was convenient, we could reduce on-disk size by about 50% and CPU usage by about the same by switching to a binary format.

We decided to choose MessagePack as our serialization format. This keeps the extensibility with JSON, by allowing keys to be added/removed. The initial implementation simply is a header followed by MessagePack object with the following structure:

{

"Versions": [

{

"Type": 0, // Type of version, object with data or delete marker.

"V1Obj": { /* object data converted from previous versions */ },

"V2Obj": {

"VersionID": "", // Version ID for delete marker

"ModTime": "", // Object delete marker modified time

"PartNumbers": 0, // Part Numbers

"PartETags": [], // Part ETags

"MetaSys": {} // Custom metadata fields.

// More metadata

},

"DelObj": {

"VersionID": "", // Version ID for delete marker

"ModTime": "", // Object delete marker modified time

"MetaSys": {} // Delete marker metadata

}

}

]

}Metadata from previous versions were converted on updates, and new versions are added as “V2Obj” or “DelObj” depending on the operation.

We try to always make our benchmarks operate on real data so they are applicable to real world usage. We evaluated the time spent reading and writing up to 10,000 versions, with 10,000 parts each, as our worst case. Benchmarks showed that it took ~120ms to decode this. The memory allocations for reading were rather large, but deemed ok since most objects have 1 part, so 10,000 represented a worst case.

This was the on-disk representation for about a year. The inline data was added to the format, allowing for small objects to have their data stored in the same file as metadata. But this didn’t change the metadata representation as inline data is stored after the metadata.

This means that in cases where we only need to read the metadata we can simply stop reading the file when we have reached the end of the metadata. This can always be achieved with at most 2 continuous reads.

Object Versioning

Let’s step back and look at Object Versioning as a concept. In short, it keeps a record of object changes and allows you to step back in time. Simple clients do not need to worry about versioning and can just operate on the most recent object version.

The backend simply keeps track of the versions as they are uploaded. For example:

λ mc ls --versions play/test/ok.html

[2021-12-14 18:00:52 CET] 18KiB 83b0518c-9080-45bb-bfd3-3aecfc00e201 v6 PUT ok.html

[2021-12-14 18:00:43 CET] 0B ff1baa7d-3767-407a-b084-17c1b333ea87 v5 DEL ok.html

[2021-12-11 10:01:01 CET] 18KiB ff471de8-a96b-43c2-9553-8fc21853bf75 v4 PUT ok.html

[2021-12-11 10:00:21 CET] 18KiB d67b20e2-4138-4386-87ca-b37aa34c3b2d v3 PUT ok.html

[2021-12-11 10:00:11 CET] 18KiB 47a4981a-c01b-4c6a-9624-0fa44f61c5e9 v2 PUT ok.html

[2021-12-11 09:57:13 CET] 18KiB f1528d08-482d-4945-b8ee-e8bd4038769b v1 PUT ok.htmlThis shows 6 versions of ok.html that were written and 1 version (v5) that was deleted. The metadata will keep track of the versions.

In the simplest case managing metadata is purely a matter of appending changes. However in reality it might not be as straightforward. A disk can, for instance, be offline when a change is written. We accept the change if we can write to enough disks, but that means that when disks come back they will require healing. Replication and tiering may need to update an older version, tags can be added to versions, etc.

Pretty much any update means that we will need to check if the versions are still ordered correctly. Object versions are strictly sorted by “Modification Time”, meaning the time the object version was uploaded. With the structure above, this means we need to have all versions loaded to access this information.

The initial design was beginning to see its limitations when version count got very high. For some operations, we needed all version information to be available, and this sometimes needed more than a gigabyte of memory just for that. Using this much memory will put big limits on the number of concurrent operations that can take place, which of course is undesirable.

Design Considerations

Initially, we evaluated if storing the metadata for all versions in a single file was the way to go. We quickly rejected storing individual versions as single files. Metadata for one version is typically less than 1KB, so listing all versions would result in an explosion of random IO.

Listing a single version also returns the version count and the “Successor” modification time, meaning the timestamp of any newer version. So we need knowledge of all versions, which means that having one file per version would be counterproductive to performance.

We looked at a log-type approach where changes are appended instead of rewriting the metadata on every update. While this may be an advantage for writes, it comes with a lot of extra processing when reading. This would not only apply to individual reads, but it would also slow down listings considerably. So this was not an approach we wanted to pursue.

Instead we decided to look at which information we typically need for operations with all versions, and which information we needed for individual versions.

Most operations either operate on “latest version” or a specific version. If you do a GetObject call, you can specify a version ID and get that version, or your request is considered to be for the latest version. Most operations are similar. The only operation that operates on all versions is the ListObjectVersions call, which returns all versions of objects.

Object mutations require checking existing version IDs and modification time for sorting. Listing needs to merge versions across disks, so it needs to be able to check if metadata is the same across various disks.

If we can access this information, then there is no operation that will need to have all version metadata unpacked in memory at once. The implications for performance are dramatic.

Implementation

In practice, we decided on a rather small change that would enable all of these improvements. Instead of having all versions completely unpacked in memory, we changed it to the following structure:

// xlMetaV2 contains all versions of an object.

type xlMetaV2 struct {

// versions sorted by modification time,

// most recent version first.

versions []xlMetaV2ShallowVersion

}

// xlMetaV2ShallowVersion contains metadata information about

// a single object version.

// metadata is serialized.

type xlMetaV2ShallowVersion struct {

header xlMetaV2VersionHeader

meta []byte

}

// xlMetaV2VersionHeader contains basic information about an object version.

type xlMetaV2VersionHeader struct {

VersionID [16]byte

ModTime int64

Signature [4]byte

Type VersionType

Flags xlFlags

}We still have all versions “in memory”, but we now keep the metadata of each version in a serialized form.In practice this serialized data is just a sub-slice of the metadata as loaded from disk. This means that we only need to allocate a fixed size slice for all versions. To perform our operations we have a header with limited information about each version, just enough information that we never need to scan through all metadata.

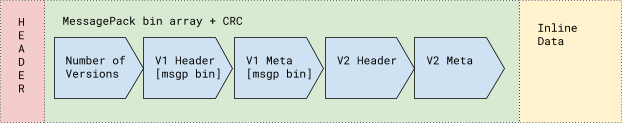

The representation on disk is also changed to accommodate for this. Previously, all metadata was stored as a big object that included all versions. Now, instead we write it like this:

- Signature with version

- Version of Header Data (integer)

- Version of Metadata (Integer)

- Version Count (integer)

Reading this header allows us to allocate the ‘versions’ inside an xlMetaV2 instance. Since all fields in a xlMetaV2ShallowVersion are fixed in size this will be the only allocation we need.

For each version there are 2 binary arrays, one that contains a serialized xlMetaV2VersionHeader and one that contains the full metadata. For all operations we only read the header and keep the serialized full metadata as is in memory. On disk, this adds about 30-40 bytes per version, even if this is duplicated data, it is still an acceptable tradeoff because of performance gains.

This means that for regular mutations that only affect a single version we only have to deserialize that specific version, apply mutations and serialize that version. Similarly for deletes and inserts, we never have to deal with the full metadata.

Object versions can be (re)sorted without moving more than the header and the serialized metadata. Furthermore we now also guarantee that saved files are stored pre-sorted. This means we can now quickly identify the latest version and getting “successor” modification time is trivial.

For the most common read operations this means we can return as soon as we have found what we are looking for. Listing can now deserialize one version at the time - saving memory and increasing performance.

Upgrading

MinIO has thousands of deployments, with billions of already stored objects, so upgrading smoothly is very important. When changing how we store objects we have a “no convert” approach, meaning we do not change stored data even if it is in an older format. With many objects, converting all of them would be a time and resource consuming task.

A major part of the task is to ensure that existing data doesn’t consume a huge amount of resources to be read. We cannot accept that an upgrade will reduce performance significantly. But we prefer not to keep duplicated code for older versions. Existing data usually ends up requiring a conversion at some point anyway, and we want to handle that as early as possible.

In this case we are able to leverage some of the strengths of MessagePack. While we still need to deserialize all versions, we can do some tricks:

- Look for the “Versions” array.

- Read the size.

- Allocate the

[]xlMetaV2ShallowVersionwe need. - For each element in the array:

- Deserialize to a temporary location

- Create a

xlMetaV2VersionHeaderbased on the deserialized data. - Observe how many bytes were consumed when deserializing.

- Create a sub-slice from the serialized data.

This is as fast as the previous version, since we are processing the same data. The only difference is that we only need one version in memory at the time, which significantly reduces memory usage.

With this trick we are able to load existing metadata with the same amount of processing as before. If the metadata needs to be written back to disk, it is now in the new format and on the next read no conversion is needed.

Scaling Benchmark and Analysis

To evaluate scaling we always try to create realistic loads but also aim for the worst possible scenario. In this case we have a clear case from our customer, where going above 1000 versions began causing performance issues. While we do make benchmarks on individual parts of the system, the real test always comes with end-to-end holistic measurements.

To evaluate MinIO’s ability to scale, we used our Warp S3 benchmarking tool. Warp makes it possible to create very specific loads. For this test we used put (PutObject) and stat (HeadObject) benchmarks. We created a number of objects, each with a varying number of versions and observed how fast we could fetch metadata from random objects/versions.

We tested on a distributed server, with a single NVME. The numbers aren’t representative of a full MinIO cluster, but only of a single server. Without further ado, let’s look at the numbers:

The vertical axis represents the number of object versions per second that has been committed to the server. Note that each sample represents an order of magnitude in terms of number of versions. Please also note that taller bars mean more operations/sec and that means greater performance.

Analyzing the numbers, with 10 and 100 versions we pretty much see the same upload speed. This is expected since we didn’t observe issues with what could be considered a “normal” number of versions. Even so, a minor speedup is good to observe.

With 1,000 versions, we observe the issue we investigated, and we are happy to see a 1.9x speedup for this case.

Looking at 10,000 versions, an interesting issue occurs. At this point our server reached IO saturation for our NVME storage at ~1.5GB/s. This switches the bottleneck from one to another part of the system, from memory to storage. The time needed to add the 10,000th version of an object is a mere 350ms.

Looking at read performance:

The first thing to note is that the scale is different. We are processing more than an order of magnitude more objects. You can see a clear benefit from not having to deserialize all object versions - MinIO scales smoothly to provide the best performance regardless of the number of objects versioned. The outcome of our optimizations is that reads of objects that have 1000 versions or more achieve a 3-4x speed increase in overall performance and system responsiveness.

Recall that this testing was done on a single server with a single NVME drive, not exactly the most powerfully equipped system for performance testing. Even in this configuration, we read object version information at about 180 requests per second so while reading 10,000 object versions is slower than reading a lesser number of objects, it is by no means unresponsive.

Conclusion and Future Improvements

Our main goal was to reduce the processing overhead of multiple versions. We reduced memory usage by several orders of magnitude and increased the speed of version processing.

The good thing about solving an issue is that there are always more challenges to address. For this iteration, we have increased the feasible number of versions by an order of magnitude. At MinIO, we are, however, never satisfied - we’re already looking into further improving performance and scalability of what is already the world’s fastest distributed object store.

We can observe that we hit an IO limit at a point, where mutations become burdened by the total metadata size. Mutating files that are in the kilobyte range is fine, but once we reach the megabyte range it becomes intensive for the system.

Right now we have a singular data format called ‘XL’. In the future we may investigate options to begin splitting header and full metadata/inline data into two files. This is suboptimal for a small number of versions, since IOPS are precious, but could be an approach we could take for 1000+ versions. Let’s call that format ‘XXL’.

There are great advantages to being in control of the data formats that a system uses. This level of control means that you can keep improving the system, and ensures that you can do so . At MinIO, our goal is to keep improving and help our customers scale. Of course, we could simply tell customers they will get the best performance by not having so many versions of the same data, but that’s not the MinIO way. We do the heavy lifting so you don’t have to. MinIO customers can focus on their applications and leave backing storage to us..

Don’t take our word for it - download MinIO and experience the difference for yourself.