Creating an ML Scenario in SAP Data Intelligence Cloud to Read and Model Data in MinIO

Enterprise customers use MinIO to build data lakehouses that store a wide variety of structured and unstructured data, and then work with that data using ML and analytics. Data flows into MinIO from across the enterprise, and the S3 API allows applications, such as analytics and AI/ML tools, to work with it.

I previously blogged about building data pipelines with SAP Data Intelligence Cloud, SAP HANA Cloud and MinIO. In that post, I explained how to connect MinIO to SAP Data Intelligence Cloud to import data for analysis in SAP HANA Cloud and SAP HANA on-premise.

This blog post focuses on using SAP Data Intelligence Cloud to build data pipelines, inspect and analyze data, and apply machine learning features.

Getting Started with ML Scenario Manager

ML Scenario Manager helps you organize data science artifacts and manage tasks in a central location. Each ML scenario it manages is a collection of design-time artifacts, such as pipelines and Jupyter notebooks, and run-time artifacts, such as training runs, models and model deployments.

You will need an SAP Data Intelligence Cloud account or a free trial.

If you do not already have MinIO installed, please do so. Make sure to note the API endpoint address, access key, secret key and bucket/path because you'll need to enter them when you create a connection in SAP Data Intelligence.
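Before typing these values into Connection Management, a quick local check can catch typos. The sketch below is a hypothetical helper (`check_minio_settings` is my own name, not part of MinIO or SAP Data Intelligence). It checks that the endpoint looks like an http(s) URL and that the bucket name follows the main S3 bucket-naming rules, which MinIO also applies. It never contacts the server, so it cannot tell you whether the endpoint or the keys actually work, and the endpoint and bucket in the example are made up.

```python
import ipaddress
import re
from urllib.parse import urlsplit

# Main S3 bucket-naming rules: 3-63 characters; lowercase letters, digits,
# dots and hyphens; must start and end with a letter or digit.
_BUCKET_RE = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")


def check_minio_settings(endpoint: str, bucket: str) -> dict:
    """Hypothetical helper: sanity-check MinIO connection details locally.

    Raises ValueError for obviously malformed input. It never contacts the
    server, so it cannot tell whether the endpoint or keys actually work.
    """
    parts = urlsplit(endpoint)
    if parts.scheme not in ("http", "https") or not parts.hostname:
        raise ValueError(f"endpoint should look like http(s)://host[:port], got {endpoint!r}")
    if not _BUCKET_RE.fullmatch(bucket) or ".." in bucket:
        raise ValueError(f"invalid bucket name: {bucket!r}")
    try:
        ipaddress.ip_address(bucket)
    except ValueError:
        pass  # not an IP address, which is what we want
    else:
        raise ValueError(f"bucket name must not look like an IP address: {bucket!r}")
    return {
        "host": parts.hostname,
        # Use the scheme's default port when the endpoint omits one.
        "port": parts.port or (443 if parts.scheme == "https" else 80),
        "secure": parts.scheme == "https",
        "bucket": bucket,
    }


# Made-up values for illustration; substitute your own endpoint and bucket.
print(check_minio_settings("http://minio.example.com:9000", "online-retail"))
```

The access key and secret key are deliberately left out: there is no useful offline check for them, and keeping them out of notebook output is a good habit.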

Please see Build Data Pipelines with SAP Data Intelligence Cloud, SAP HANA Cloud and MinIO for instructions to create a bucket, copy a file into it and then create a data connection. Note that the bucket only appears in SAP Data Intelligence Connection Management once it contains at least one object.

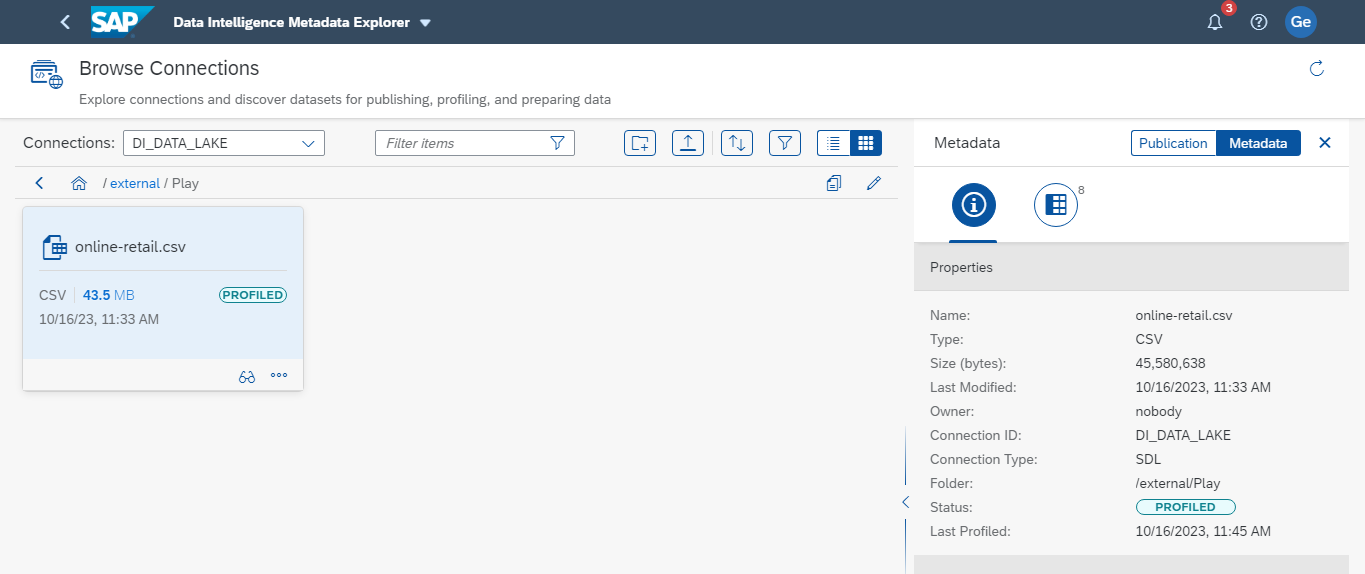

Open Metadata Explorer and, under Browse Connections, click DI_DATA_LAKE, then External, then the connection to your MinIO bucket that you created in the steps above. You will see the file that you uploaded to MinIO and its complete path. In my case, the path is di-dl://external/Play/online-retail.csv. You will need this path to register the file in ML Scenario Manager.

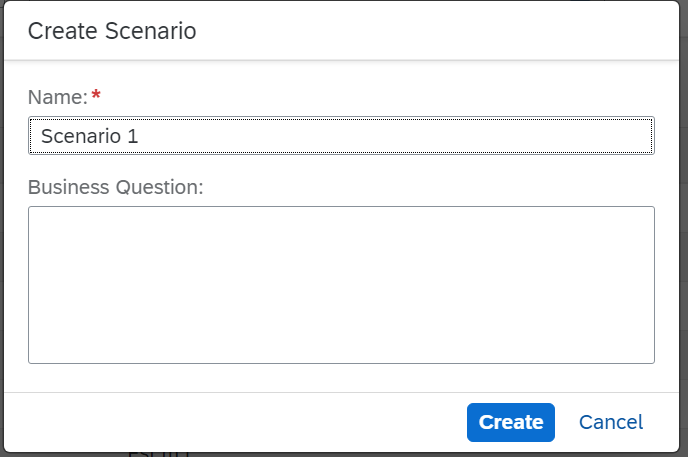

From the SAP Data Intelligence Launchpad, click ML Scenario Manager, then click Create, enter a name for your scenario and click Create again.

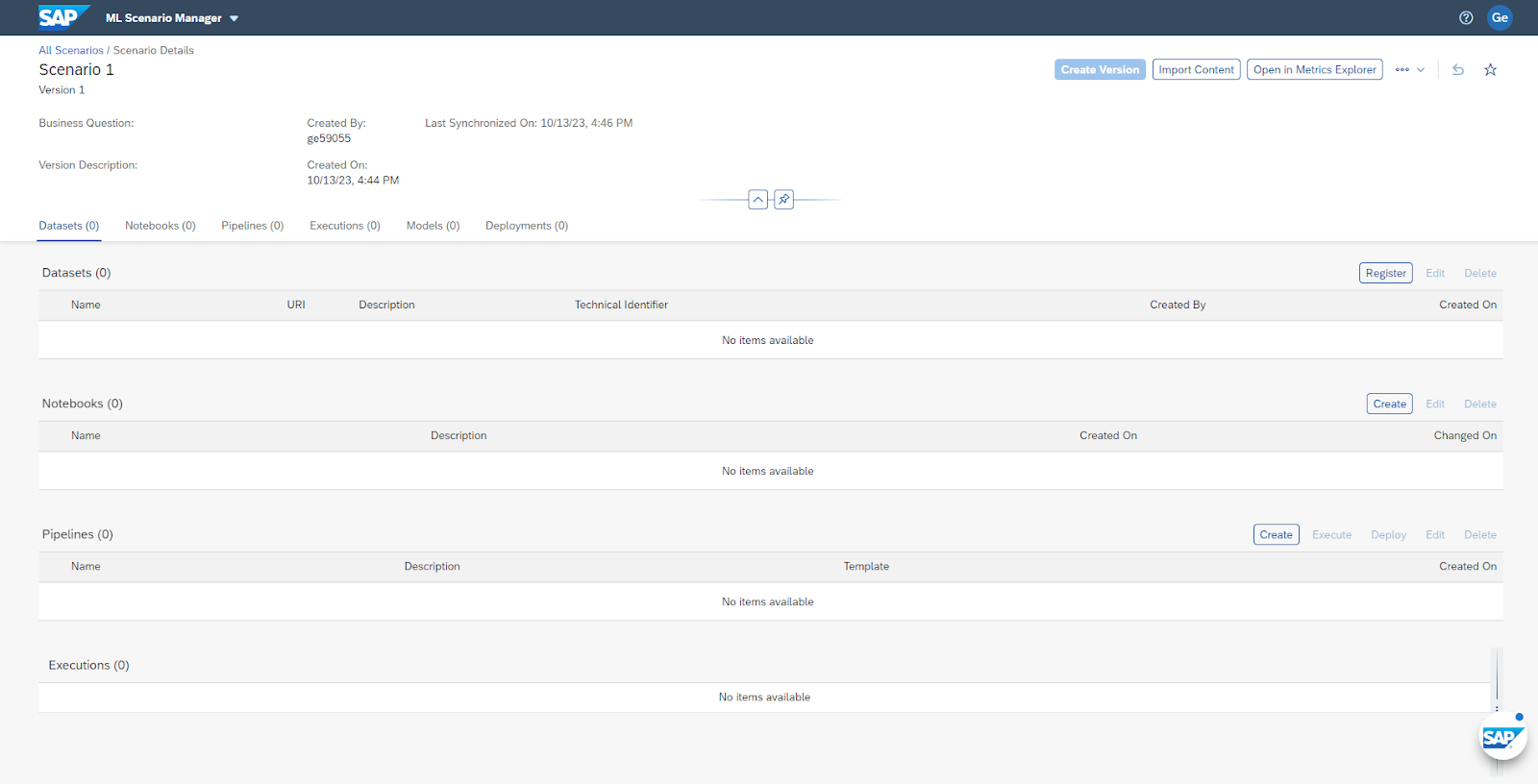

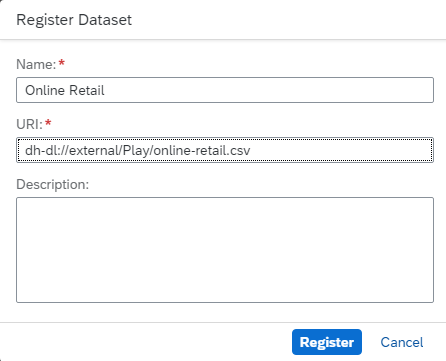

Register a Dataset

In the ML Scenario you just created, click the button to register a dataset and enter a name for the dataset. In the URI field, enter the full path to the dataset; in my case, this is di-dl://external/Play/online-retail.csv. A description is optional. Finally, click Register.
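Typing the URI by hand is an easy place to slip. The following sketch uses a hypothetical helper, `split_di_dl_uri` (my own name, not an SAP API), that rejects a few common mistakes: stray whitespace, the wrong scheme, or a missing file path. Treating the first segment after `di-dl://` as a root such as `external` is my reading of the example path in this post, not documented behavior.

```python
from urllib.parse import urlsplit


def split_di_dl_uri(uri: str) -> tuple:
    """Hypothetical helper: split a di-dl:// URI into (root, path).

    Raises ValueError for the copy-and-paste mistakes that are easy to make
    when filling in the URI field by hand.
    """
    # Check before parsing: urlsplit silently strips some whitespace.
    if uri != uri.strip():
        raise ValueError("remove leading/trailing whitespace from the URI")
    parts = urlsplit(uri)
    if parts.scheme != "di-dl":
        raise ValueError(f"expected a di-dl:// URI, got scheme {parts.scheme!r}")
    path = parts.path.strip("/")
    if not parts.netloc or not path:
        raise ValueError("URI needs both a root (e.g. 'external') and a file path")
    return parts.netloc, path


root, path = split_di_dl_uri("di-dl://external/Play/online-retail.csv")
print(root, path)  # external Play/online-retail.csv
```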

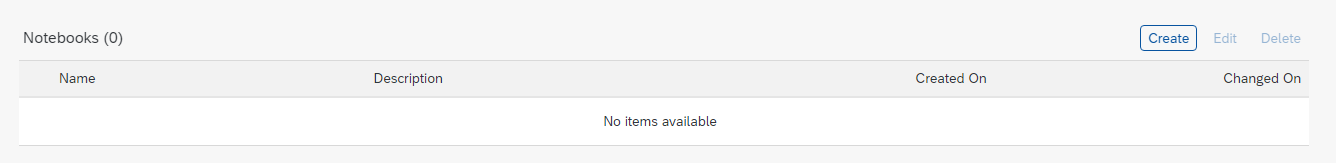

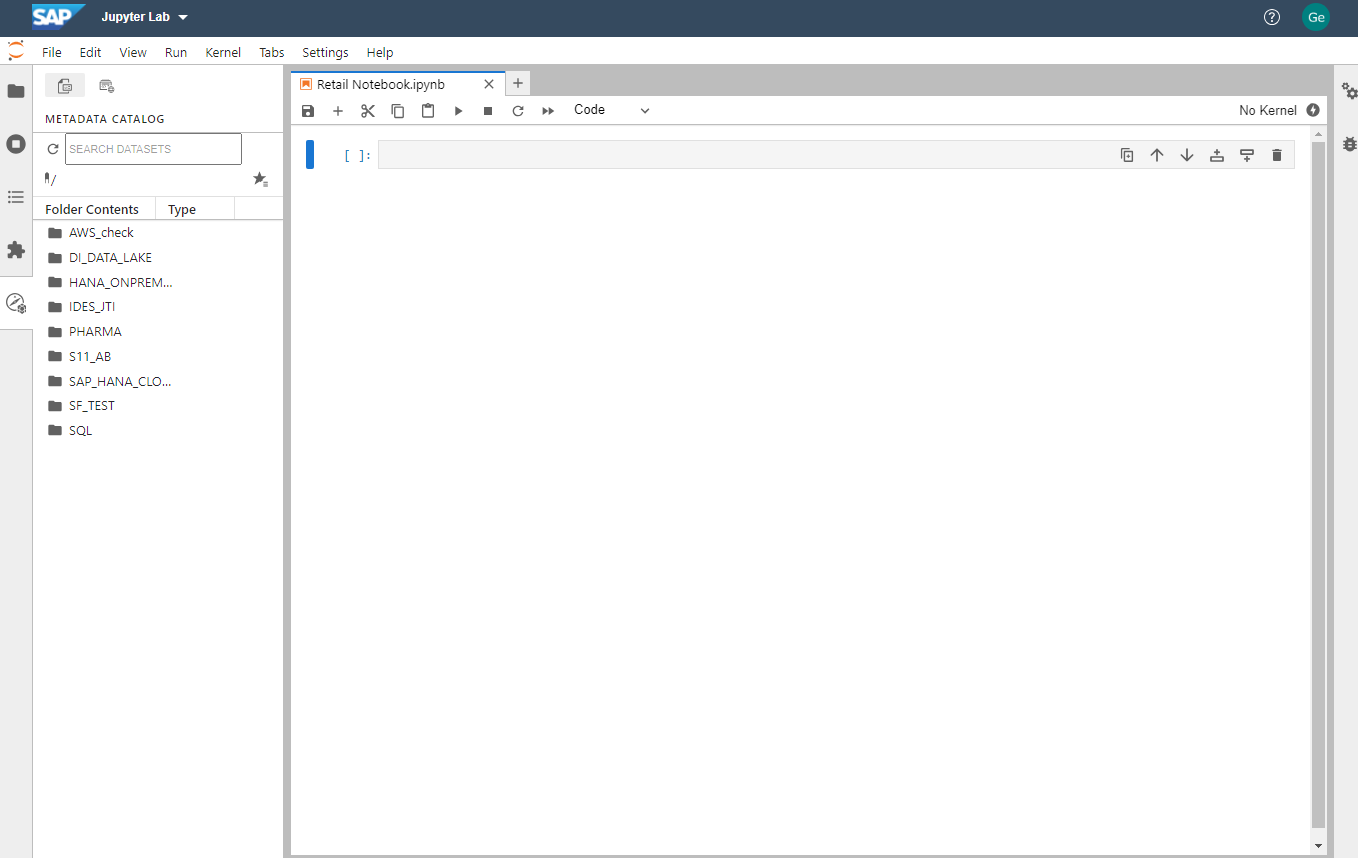

Adding a Jupyter Notebook

Creating a Jupyter notebook to experiment with your data and models takes only a few steps.

In the Notebooks section of the ML Scenario page, click Create.

Enter a name for your notebook, then click Create. JupyterLab opens the notebook in a new browser window, where you can begin working with it. The first time you open the notebook, you will be prompted to select a kernel; SAP recommends Python 3.

From there, you can write Python to explore the data and train a model.
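As a taste of the exploration step, here is a minimal sketch that totals revenue per country. I'm assuming the registered file follows the column layout of the public Online Retail dataset (`Quantity`, `UnitPrice`, `Country` and so on); the rows below are made-up samples, not real data, and in the notebook you would read the registered file instead. pandas (`read_csv` plus `groupby`) is the usual tool for this; the sketch sticks to the standard library so it runs without extra packages.

```python
import csv
import io
from collections import defaultdict

# Hypothetical sample rows, assuming the column layout of the public
# "Online Retail" dataset. In the notebook you would read the registered file.
SAMPLE_CSV = """InvoiceNo,StockCode,Description,Quantity,InvoiceDate,UnitPrice,CustomerID,Country
1001,A100,SAMPLE ITEM A,4,2011-01-03 10:00,2.50,10001,United Kingdom
1001,A200,SAMPLE ITEM B,2,2011-01-03 10:00,1.25,10001,United Kingdom
1002,A100,SAMPLE ITEM A,8,2011-01-04 11:30,2.50,10002,France
C1003,A200,SAMPLE ITEM B,-1,2011-01-05 09:15,1.25,10001,United Kingdom
"""


def revenue_by_country(rows):
    """Sum Quantity * UnitPrice per country, skipping non-positive quantities
    (returns/cancellations). Returns (totals, number_of_skipped_rows)."""
    totals = defaultdict(float)
    skipped = 0
    for row in rows:
        quantity = int(row["Quantity"])
        if quantity <= 0:
            skipped += 1
            continue
        totals[row["Country"]] += quantity * float(row["UnitPrice"])
    return dict(totals), skipped


rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))
totals, skipped = revenue_by_country(rows)
for country, revenue in sorted(totals.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{country}: {revenue:.2f}")
print(f"skipped {skipped} return/cancellation row(s)")
```

Skipping negative quantities is a modelling choice for this sample: in it, a negative quantity marks a return, and whether you drop, net off or analyze such rows separately depends on the question you are asking.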

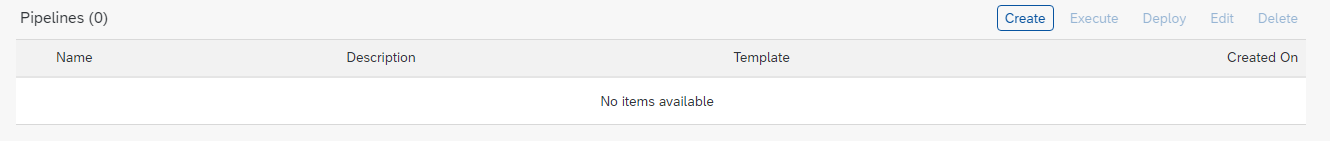

Adding a Pipeline

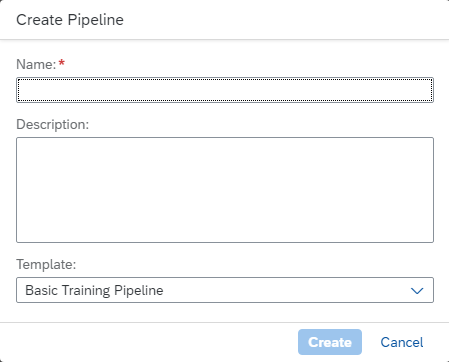

From the ML Scenario page, scroll down to the Pipelines area and click Create.

The Create Pipeline dialog box opens. Enter a name for the new pipeline, then select a template from the drop-down box to base the pipeline on. There are quite a few templates to choose from, and the SAP Data Intelligence documentation explains each of them in detail.

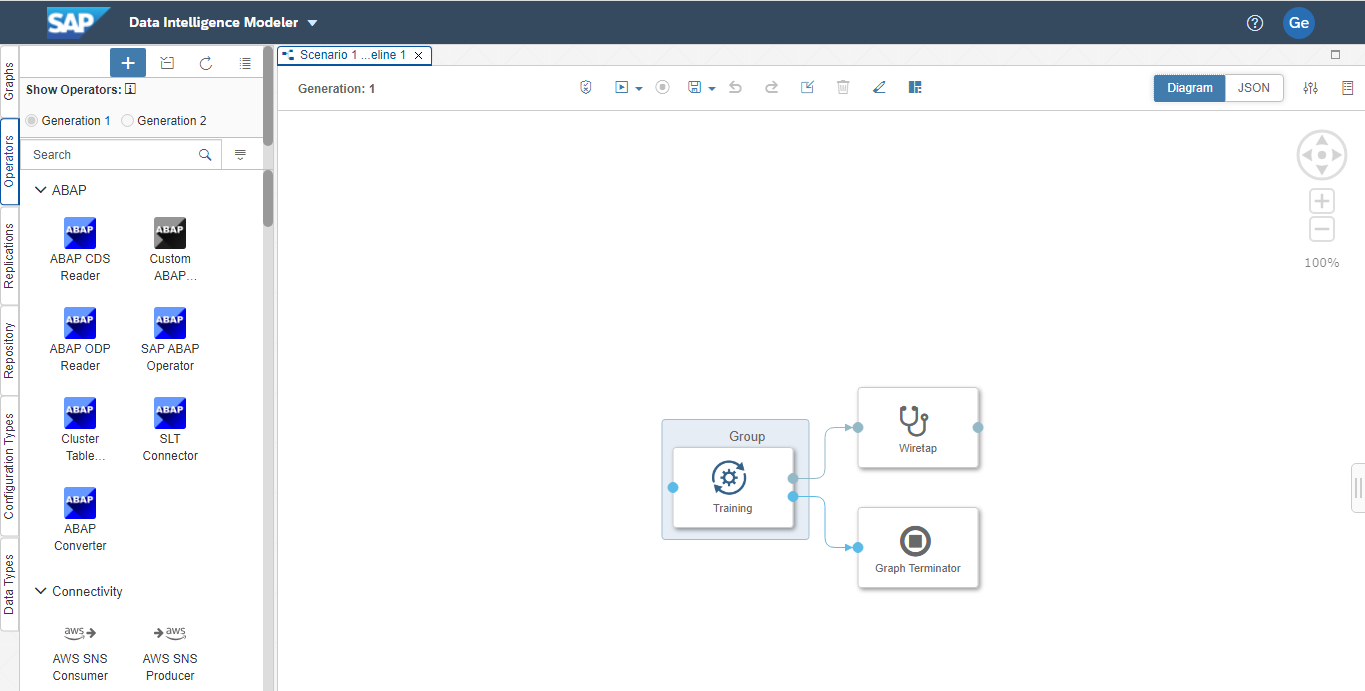

When you click Create, the SAP Data Intelligence Modeler opens and displays the pipeline that you just created. To edit the pipeline, drag and drop operators onto the work surface and connect them.
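Conceptually, a pipeline is a graph: operators are nodes and the connections you draw are edges. The toy below is only a mental model, not the Modeler's graph format or runtime. As I understand it, the Modeler runs operators concurrently and streams messages between their ports, whereas this sketch simply runs each operator once in dependency order. `run_pipeline` and the three operators are hypothetical, and `graphlib` needs Python 3.9 or later.

```python
from graphlib import TopologicalSorter


def run_pipeline(operators, connections, source_input):
    """Toy executor: run each operator once, in dependency order.

    operators   -- dict mapping operator name to a function that takes a list
                   of upstream outputs and returns its own output
    connections -- list of (upstream, downstream) operator-name pairs
    Operators with no upstream connection receive [source_input].
    """
    # TopologicalSorter expects node -> set of predecessors.
    graph = {name: set() for name in operators}
    for upstream, downstream in connections:
        graph[downstream].add(upstream)
    outputs = {}
    # static_order() raises graphlib.CycleError if the connections form a loop.
    for name in TopologicalSorter(graph).static_order():
        inputs = [outputs[up] for up in sorted(graph[name])] or [source_input]
        outputs[name] = operators[name](inputs)
    return outputs


# Hypothetical three-operator pipeline: read lines -> parse CSV -> count rows.
operators = {
    "read": lambda inputs: inputs[0].splitlines(),
    "parse": lambda inputs: [line.split(",") for line in inputs[0][1:]],  # skip header
    "count": lambda inputs: len(inputs[0]),
}
connections = [("read", "parse"), ("parse", "count")]
result = run_pipeline(operators, connections, "Quantity,UnitPrice\n4,2.50\n8,2.50")
print(result["count"])  # 2
```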

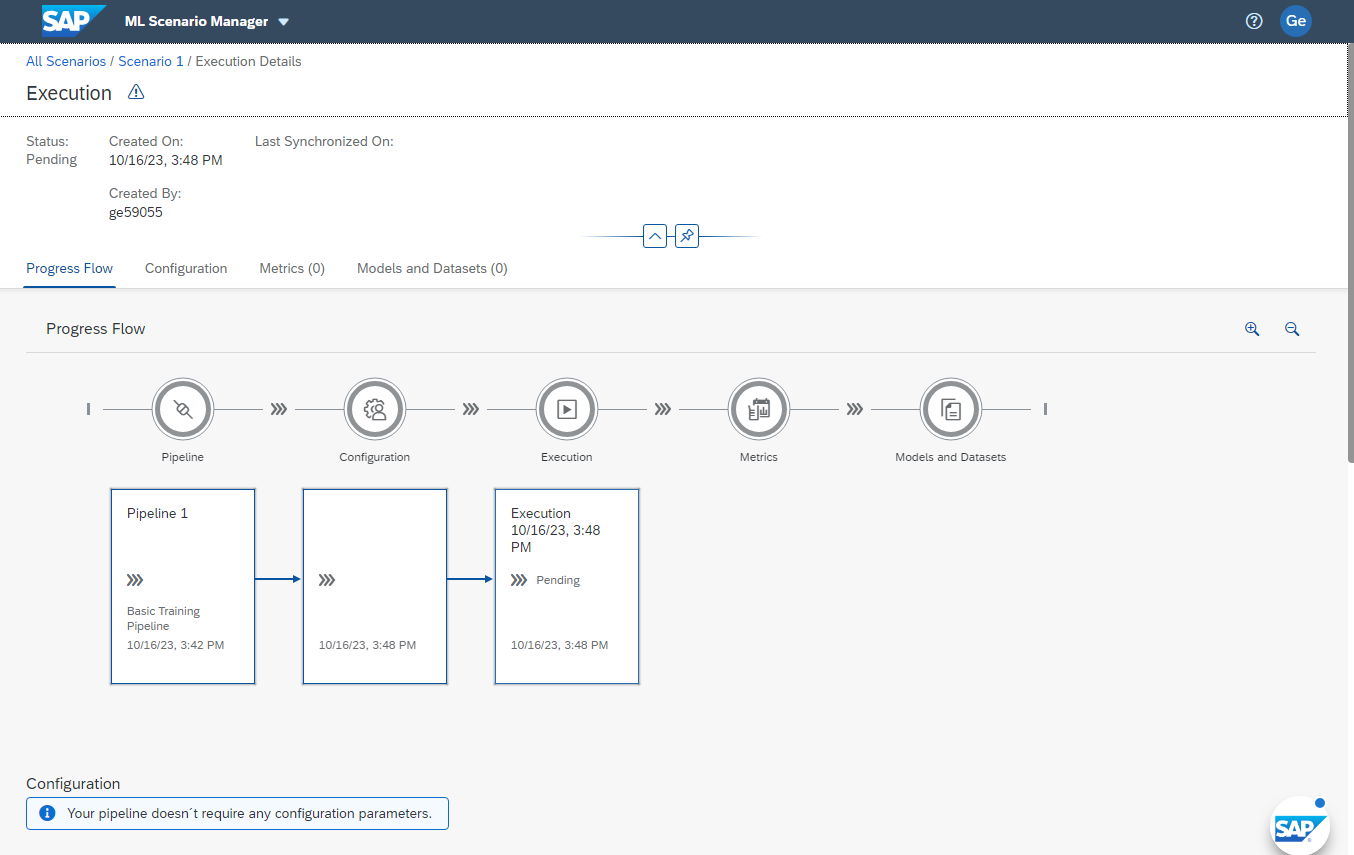

To run the pipeline, return to ML Scenario Manager, select the radio button for the pipeline that you want to execute and click Execute.

If the pipeline needs further configuration, you will be prompted for it.

Further Development

This tutorial gave a quick overview of building and executing a machine learning scenario. To take this example further, please see the excellent tutorial, SAP Data Intelligence: Create your first ML Scenario, which contains instructions for building a Jupyter notebook and pipeline that trains a linear regression model. If you prefer, there's also a version for R, SAP Data Intelligence: Create your first ML Scenario with R.
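For readers curious about what "training a linear regression model" boils down to, here is a minimal pure-Python sketch of simple linear regression fitted by ordinary least squares. It is not the tutorial's code, and the function names and data points are hypothetical; in a real notebook you would more likely reach for a library such as scikit-learn.

```python
def fit_simple_linear_regression(xs, ys):
    """Ordinary least squares for y = slope * x + intercept.

    Returns (slope, intercept). Raises ValueError for unusable input.
    """
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply a fitted (slope, intercept) model to a single x value."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical data lying exactly on y = 2x + 1.
model = fit_simple_linear_regression([1, 2, 3, 4], [3, 5, 7, 9])
print(model)              # (2.0, 1.0)
print(predict(model, 5))  # 11.0
```

The closed form used here (slope = Sxy / Sxx, intercept = mean_y - slope * mean_x) only covers a single input feature; models with several features are normally fitted with a linear-algebra library instead.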

This tour of SAP Data Intelligence merely scratched the surface of what's possible. Extending the SAP Data Intelligence Data Lake to read data from MinIO object storage means that you can apply data science methods to your data, quickly and easily, using a browser-based GUI. You can use one of SAP's models or write your own, then run it on-demand or on a schedule. You could even expose the pipeline via a RESTful API and call it from another application in your cloud-native infrastructure.
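Calling an exposed pipeline from another application is ordinary HTTP. The exact URL, path, payload and authentication depend on how the pipeline is deployed, so everything specific below is a placeholder: the endpoint, the `quantity` field and the use of HTTP Basic auth are assumptions, not values from SAP's documentation. The sketch builds the request separately from sending it, so you can inspect it before pointing it at a real deployment.

```python
import base64
import json
import urllib.request


def build_inference_request(endpoint_url, payload, user, password):
    """Build (but do not send) a JSON POST request with HTTP Basic auth."""
    body = json.dumps(payload).encode("utf-8")
    credentials = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        endpoint_url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {credentials}",
        },
    )


def call_inference(request, timeout=30):
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Placeholder URL, payload and credentials; none of these come from SAP's docs.
request = build_inference_request(
    "https://di.example.com/hypothetical/deployment/v1/predict",
    {"quantity": 6},
    "my-user",
    "my-password",
)
print(request.get_method(), request.full_url)
# result = call_inference(request)  # uncomment once the URL points at a real deployment
```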

Download MinIO and join our Slack community. Don't forget to check out MinIO How-To's on YouTube.