Rethinking Observability with MinIO and CloudFabrix

While the growth trajectory for data in general is extraordinary, it is the growth of log files that really stands out. As the heartbeat of the digital enterprise, these files contain a remarkable amount of intelligence - across a stunning range, from security to customer behavior to operational performance.

The growth of log files, however, presents particular challenges for the enterprise. They are not “readable” per se; they require machine intelligence. They are individually small but collectively massive - running to tens or hundreds of petabytes for large organizations over time. They are unevenly distributed across the organization - often stored in a domain-specific observability application layer.

The enterprise, in turn, has looked to address these challenges - retaining full-fidelity copies, enabling the emerging edge, and optimizing log files both by enriching them and by trimming unnecessary fields.

These efforts require two different but highly complementary technologies - log intelligence and modern object storage. This post addresses how MinIO and CloudFabrix have partnered to solve this challenge.

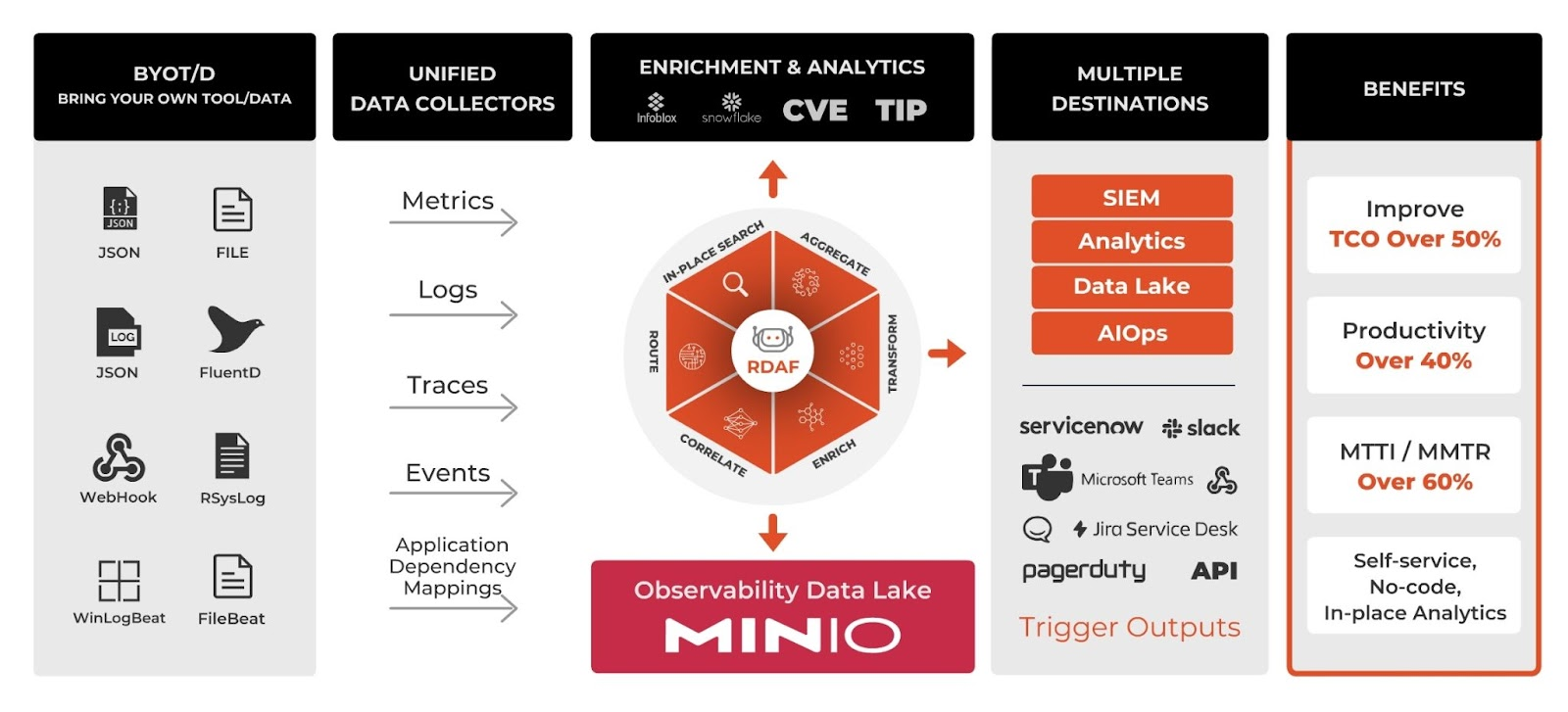

CloudFabrix is a pioneer in the data-centric AIOps platform space. They invented (with patents pending) the Robotic Data Automation Fabric Platform (RDAF). RDAF delivers integrated, enriched and actionable data pipelines to operational and analytical systems. RDAF unifies observability, AIOps and automation for operational systems and enriches analytical systems. The concept here is to create actionable intelligence to make faster and better decisions and accelerate IT planning and autonomous operations.

Current Challenges in Log Intelligence

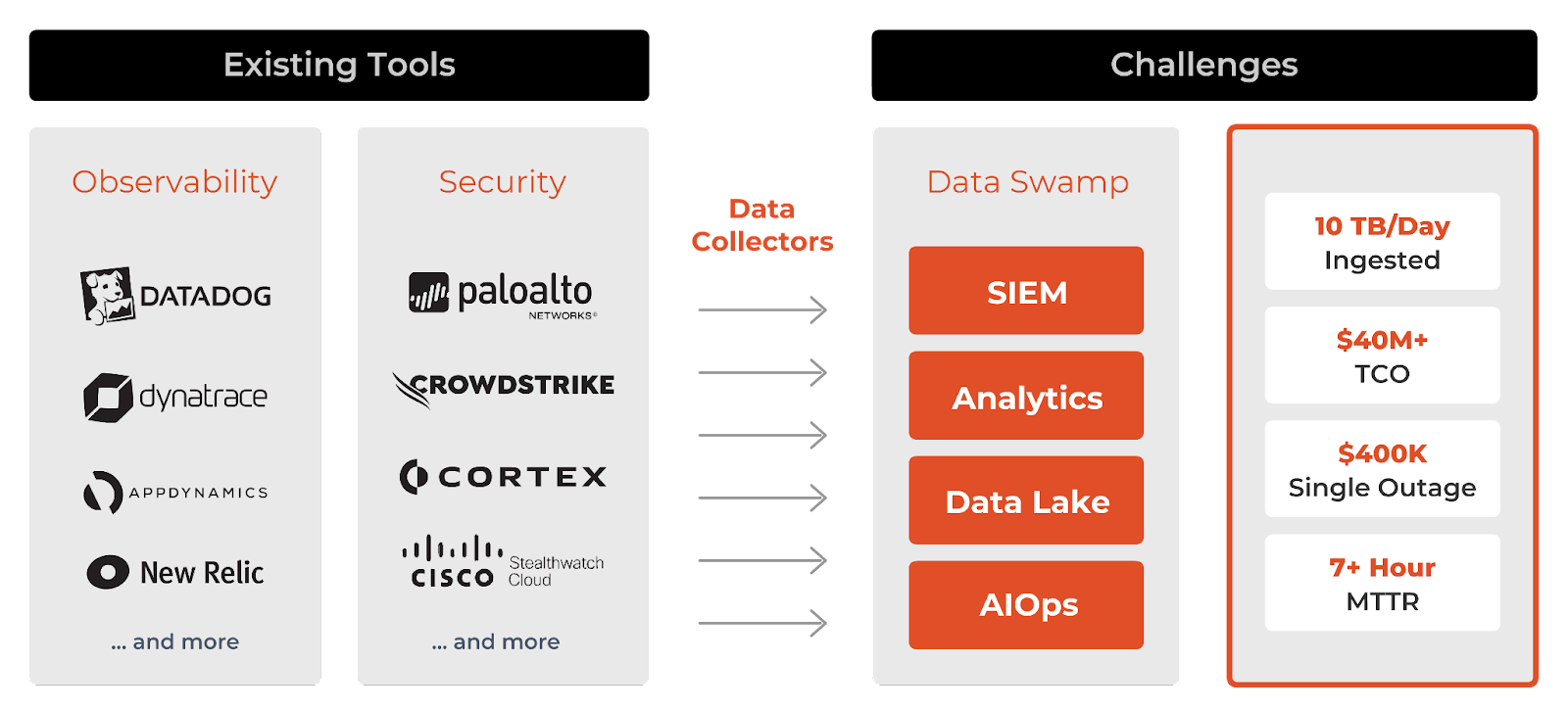

Common practice is to collect and analyze logs to make a system observable, as log files contain most of the data, from full-stack alerts to events. Existing log intelligence solutions tend to turn data lakes into data swamps. These solutions ingest repetitive and redundant data. More data increases complexity, compute and storage costs - driving TCO higher (primarily through licensing - think Splunk indexers). The result is that Mean Time to Identify (MTTI) and Mean Time to Resolve (MTTR) are compromised.

A Different Approach

Building a next-generation Log Intelligence Service serves a number of different purposes. It satisfies cybersecurity mandates for log retention. It can help prevent security breaches by optimizing Security Information and Event Management (SIEM). It can also drive predictive business analytics, incident response, cloud automation, and orchestration workloads.

To solve for the data swamp problem, three things are needed:

1. The ability to ingest streaming data in an automated way

2. An observability pipeline that can aggregate, transform, enrich, search, correlate, route and visualize the data

3. A high performance, object storage-based data lake

At the heart of the CloudFabrix solution is the Robotic Data Automation Fabric (RDAF). The RDAF automates repetitive data integration, preparation and transformation activities using low-code workflows and data bots, including AI/ML bots. The idea is to leverage RDAF to simplify and accelerate AIOps implementations, reduce costs and democratize the process through pre-built, any-to-any integrations (data, applications, connectors). The Robotic Data Automation platform allows data ingestion using a push/pull/batch mechanism.

Once data is ingested, the RDAF observability pipelines can aggregate, transform, enrich, search, correlate, route and visualize it, delivering to multiple destinations simultaneously.

While multiple destinations are supported, one of the destinations is always MinIO. MinIO stores the full-fidelity copy of all the streaming data. Data stored in MinIO is UTC timestamped and can be replayed on demand to address any security or compliance needs. The data can also be searched using universal search bots.
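To make the idea of UTC-timestamped storage and on-demand replay concrete, here is a minimal sketch in plain Python. It is not the CloudFabrix or MinIO API: the key layout and the names `object_key`, `key_timestamp` and `select_for_replay` are assumptions made for illustration. It shows one way object names could embed a UTC timestamp so that a replay job can pick out a time window.

```python
from datetime import datetime, timezone


def object_key(stream: str, ts: datetime, seq: int) -> str:
    """Build an hour-partitioned object key from a timezone-aware timestamp.

    The layout (stream/YYYY/MM/DD/HH/<stamp>-<seq>.json) is hypothetical.
    """
    utc = ts.astimezone(timezone.utc)  # normalize any offset to UTC
    return f"{stream}/{utc:%Y/%m/%d/%H}/{utc:%Y%m%dT%H%M%SZ}-{seq:06d}.json"


def key_timestamp(key: str) -> datetime:
    """Recover the UTC timestamp embedded in a key built by object_key."""
    name = key.rsplit("/", 1)[1]    # e.g. 20240101T120000Z-000000.json
    stamp = name.split("-", 1)[0]   # e.g. 20240101T120000Z
    parsed = datetime.strptime(stamp, "%Y%m%dT%H%M%SZ")
    return parsed.replace(tzinfo=timezone.utc)


def select_for_replay(keys, start: datetime, end: datetime) -> list:
    """Return keys whose timestamp falls in [start, end), oldest first."""
    chosen = [k for k in keys if start <= key_timestamp(k) < end]
    return sorted(chosen, key=lambda k: (key_timestamp(k), k))
```

Keys built this way could be used as object names with any S3-compatible client, and a replay job would list a prefix and pass the names through `select_for_replay` before re-streaming the matching objects.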

The solution is pre-integrated; indeed, CloudFabrix developed it using MinIO.

Use Cases and Workloads

The joint solution enables the following use cases:

- Log Ingestion - Bring your own Log Tool (BYOL) and ingest data in pull/push/batch modes

- Log Reduction and Replay – Reduce log volume by 40-80% using correlation techniques, and replay by UTC timestamp, IP address, or specific patterns to your choice of stream

- Log Routing – Aggregate logs, normalize, transform, enrich, and route to multiple locations - data lakes, log stores, analytic platforms, composable dashboards and more

- Log Enrichment – Enrich logs using Geo-IP or DNS lookups from Infoblox, CVE (Common Vulnerabilities and Exposures) feeds, TIP (Threat Intelligence Platform) feeds

- Log Predictive Analytics – Convert logs into metrics and use a number of regression models for anomaly detection

- Edge IoT, In-place Search - Composable search complements log intelligence by collecting and storing only what is valuable as a full-fidelity copy in the observability data lake. This allows for in-place search as needed for security breaches and compliance needs
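As an illustration of the "logs into metrics" idea in the predictive analytics use case above, the sketch below counts events per minute, fits a straight line by ordinary least squares, and flags minutes whose count deviates strongly from the trend. It is a stand-in under stated assumptions: the post does not say which regression models the platform uses, and the function names and the threshold of two standard deviations are hypothetical.

```python
from collections import Counter
from statistics import mean, pstdev


def counts_per_minute(epoch_seconds) -> list:
    """Turn event timestamps (epoch seconds) into a gap-free per-minute count series."""
    buckets = Counter(int(t) // 60 for t in epoch_seconds)
    if not buckets:
        return []
    first, last = min(buckets), max(buckets)
    return [buckets.get(minute, 0) for minute in range(first, last + 1)]


def fit_line(ys):
    """Least-squares fit of y = slope * x + intercept over x = 0..n-1."""
    if len(ys) < 2:
        raise ValueError("need at least two points to fit a line")
    xs = range(len(ys))
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


def anomalies(ys, threshold: float = 2.0) -> list:
    """Return indexes whose residual exceeds threshold * residual std deviation."""
    slope, intercept = fit_line(ys)
    residuals = [y - (slope * x + intercept) for x, y in enumerate(ys)]
    spread = pstdev(residuals)
    if spread == 0:
        return []  # perfectly linear series: nothing stands out
    return [i for i, r in enumerate(residuals) if abs(r) > threshold * spread]
```

A single linear trend is deliberately simple; seasonal traffic would need a richer model, but the shape of the workflow (logs to counts, counts to a fitted model, residuals to alerts) stays the same.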

The Payoff

By taking an informed approach to log intelligence, enterprises can deliver material savings on one of their larger IT spend items. These savings occur across multiple areas, specifically:

- Up to 40% reduction in log volumes without loss of fidelity, simply by applying correlation techniques.

- Reduction in edge-to-cloud bandwidth and storage costs by up to 80% by using composable in-place search at the edge and in motion.

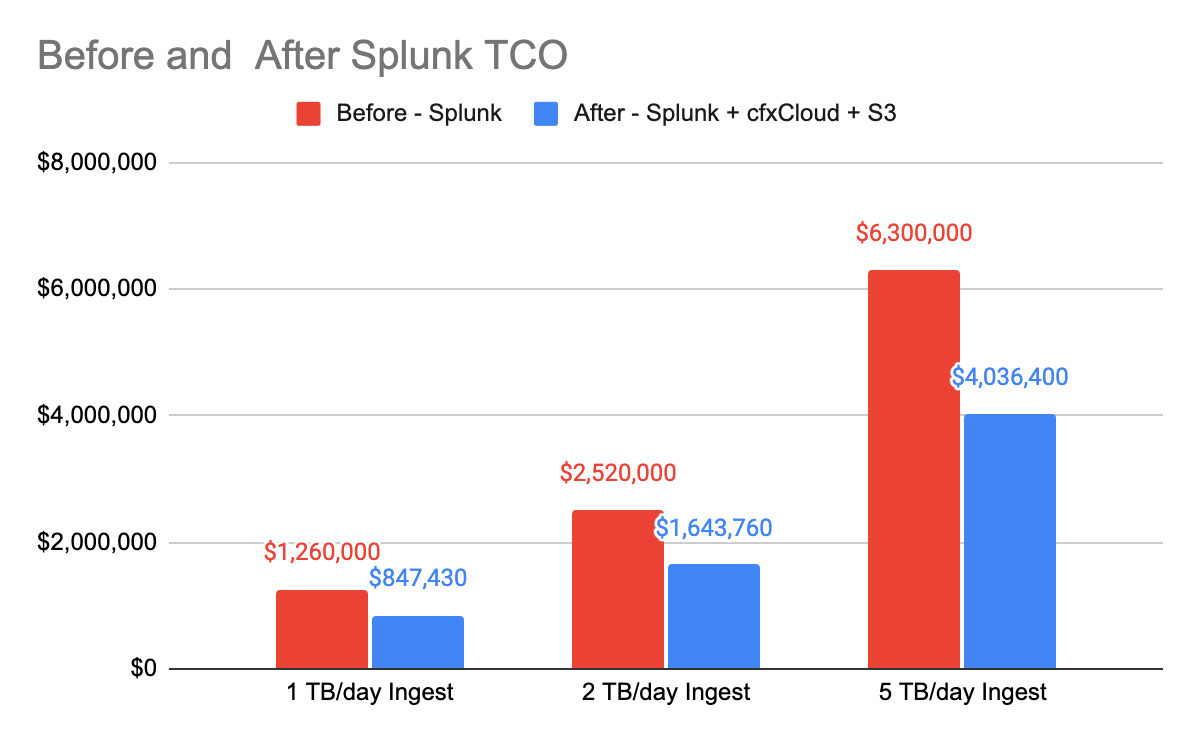

- Reduction of SIEM licensing costs by up to 40%, as shown below with 1-year TCO savings

- Optimized ingest - push/pull secure ingest for streaming data and batch ingest for at-rest data optimizes compute, network and storage utilization
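The log volume reduction mentioned in the list above can be pictured with a small sketch. The post does not describe the correlation techniques CloudFabrix uses, so this is only one simple assumption: events from the same host whose messages differ only in numbers are collapsed into a single summary record when they occur within a time window. The names `template` and `reduce_events` and the 60-second window are hypothetical.

```python
import re

_NUMBER = re.compile(r"\d+")


def template(message: str) -> str:
    """Mask digit runs so messages that differ only in numbers share a template."""
    return _NUMBER.sub("<n>", message)


def reduce_events(events, window_seconds: int = 60) -> list:
    """Collapse repeated events into summary records.

    events: iterable of (epoch_seconds, host, message), sorted by time.
    An event joins an existing group when it has the same host and template
    and arrives less than window_seconds after that group's first event.
    """
    open_groups = {}   # (host, template) -> most recent group for that key
    reduced = []
    for ts, host, message in events:
        key = (host, template(message))
        group = open_groups.get(key)
        if group is not None and ts - group["first_ts"] < window_seconds:
            group["count"] += 1
            group["last_ts"] = ts
        else:
            group = {
                "host": host,
                "template": key[1],
                "first_ts": ts,
                "last_ts": ts,
                "count": 1,
            }
            open_groups[key] = group
            reduced.append(group)
    return reduced
```

Because the summary keeps the count and the first and last timestamps, downstream tools see far fewer records, while the unreduced stream remains available from the full-fidelity copy in object storage.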

The endgame is to deepen customer insights and enhance business outcomes while simultaneously improving MTTI and MTTR. This in turn addresses a variety of compliance issues (PII masking, GDPR governance, CCPA requirements) and enhances how data moves into cloud environments.

Getting Started

Getting started is simple: the Robotic Data Automation Platform is microservices-based and can be deployed in AWS as a managed service, with the data path on premises or in a customer VPC, or entirely on premises. The SaaS solution is self-serve and the AWS marketplace instance is managed. It will work with the MinIO AWS marketplace instance.

As always, MinIO can be found here if you want to try it out locally.

One of the nice things about the integration is that it is a light lift. A POC can be conducted with disparate data sources like JSON, File, Syslog (TCP/UDP), Rsyslog, Fluentd, Filebeat, Webhook, OpenTelemetry and cloud tools, and a broad range of destinations like SIEM, operational intelligence and visual decision boards.

Check it out and let us know what you think. We are always keen to get feedback on this workload - it spawns giant amounts of data that require performance at scale - making it a perfect workload for us.