S3 Benchmark: MinIO on NVMe

Well written software is fast software. When MinIO was conceived it was designed from scratch to be simple, to scale (because simple things scale better) and to be fast. Simplicity and scale have their own subjective and objective measures - but fast is generally a numbers game.

When you take well-written, fast software and pair it with fast hardware the results can be quite impressive.

That is exactly what we saw in our S3 Benchmark work on NVMe SSDs.

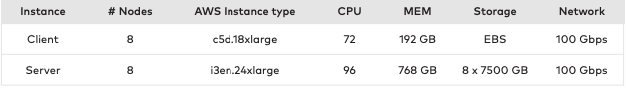

For this round we returned to the S3-benchmark by wasabi-tech to perform our tests. This tool conducts benchmark tests from a single client to a single endpoint. During our evaluation, this simple tool produced consistent and reproducible results over multiple runs.

The selected hardware included bare-metal, storage optimized instances with local NVMe drives and 100 GbE networking. This is top-of-the-line for CPU/SSD inside of AWS.

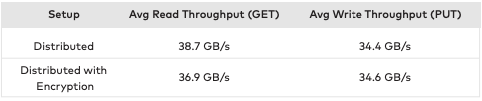

As one might expect, the combination of fast hardware and fast software delivered some impressive results:

MinIO was able to effectively saturate the 100GBe network. In order to understand network utilization pattern, we measured bandwidth utilization on each node every two seconds. Across all 8 nodes average network bandwidth utilization during the write phase was 77 Gbit/sec and during the read phase average network bandwidth utilization was 84.6 Gbit/sec. This represents client traffic as well as internode traffic. The portion of this bandwidth available to clients is about half for both reads and writes. Higher throughput can be expected if a dedicated network was available for internode traffic.

Note that the write benchmark is slower than read because benchmark tools do not account for

write amplification (traffic from parity data generated during writes). In this case, the 100 Gbit

network is the bottleneck as MinIO gets close to hardware performance for both reads and writes.

While noted in the HDD post the encryption point bears repeating here. The overhead associated with encryption is negligible and enables enterprises to implement best practices with no performance penalty to speak of. This is a function of MinIO’s highly optimized encryption algorithms which leverage SIMD (Single Instructions Multiple Data) which provides data-level parallelism on a unit (vector of data). As a result, a single instruction is executed in parallel on multiple data points as opposed to executing multiple instructions.

Performance numbers like this dramatically expand the portfolio of use cases to cover everything from streaming analytics to Spark, Presto, Tensorflow and others making the object layer a first class citizen in the enterprise analytics data stack.

The impact of such performance will vary by industry and workload. The financial services industry, for example is rapidly standardizing on NVMe. Performance is paramount for them and the incremental cost of NVMe over HDD is easily justified.

Similar decisions are being made in the autonomous car space and the aerospace industry. Across industries, workload types matter too with business critical, analytics workloads getting NVMe for Spark, Presto, Flink and Tensorflow. As we showed in the HDD post, you can still generate impressive numbers using HDD, but if you need your answers right now...NVMe is the way to go.

All of the details on the benchmark are published here and we encourage you to replicate the results on your own.

If you would like to dive deeper into the results, drop us a note on hello@min.io or hit the form at the bottom of the page.