The Architect's Guide to the Multi-Cloud

The line between hybrid cloud and multicloud is blurry at this point. The hybrid cloud is certainly more expansive in its definition (public, on-prem, edge), while multicloud generally refers to multiple public clouds. What makes the distinction blurry is that the cloud is a mentality, not a physical location. As a result, the terms are used interchangeably these days.

One thing is clear, however: independent of the definitions, the first principles required for success in both are remarkably similar.

As hot as the hybrid cloud is these days, multicloud is just as prevalent. Every large enterprise is a multicloud enterprise. Even the poster children of individual public clouds keep data on another public cloud. The reasons are diverse (lock-in, pricing, applications, customer demand, operational agility), but the result is the same. Multicloud is no longer an accident; it is a critical part of an enterprise’s cloud strategy.

The questions we will answer in this post are: what types of enterprises benefit most from multicloud, and what are the keys to success?

Let’s start with the drivers of success and then turn our attention to the enterprises that will benefit most significantly from them.

There are two core elements to success in the multicloud. The first is Kubernetes and the second is object storage. Everything else is a distant third.

The Kubernetes Magic

At the most basic level, the value of Kubernetes lies in its ability to treat infrastructure as code, delivering full-scale automation to both the stateful and stateless components of the software stack.
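To make "infrastructure as code" concrete: Kubernetes controllers repeatedly compare a declared desired state with the observed state and act to close the gap. The sketch below is a toy, in-memory illustration of that reconciliation idea in plain Python. It does not use the Kubernetes API, and the names `reconcile`, `desired` and `actual` are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical desired state: component name -> replica count, as a manifest might declare it.
DesiredState = Dict[str, int]
ActualState = Dict[str, int]


def reconcile(desired: DesiredState, actual: ActualState) -> List[Tuple[str, str, int]]:
    """Compute the actions that move `actual` toward `desired`, and apply them.

    A toy model of a Kubernetes-style control loop: nothing here talks to a real
    cluster; `actual` is a plain dict that is mutated in place.
    """
    actions: List[Tuple[str, str, int]] = []
    # Scale every declared component up or down to its declared count.
    for name, want in sorted(desired.items()):
        have = actual.get(name, 0)
        if want > have:
            actions.append(("scale_up", name, want - have))
        elif want < have:
            actions.append(("scale_down", name, have - want))
        actual[name] = want
    # Remove anything still running that is no longer declared.
    for name in sorted(set(actual) - set(desired)):
        actions.append(("delete", name, actual.pop(name)))
    return actions


if __name__ == "__main__":
    # A stateless app and a stateful store are declared in exactly the same way.
    desired = {"web": 3, "object-store": 4}
    actual = {"web": 1, "legacy-cron": 1}
    print(reconcile(desired, actual))
    # A second pass finds nothing to do: once state converges, the loop is idempotent.
    print(reconcile(desired, actual))
```

The point of the design is that operators edit the declaration, not the running system; the loop does the rest, which is why putting more of the stack (including data stores) under Kubernetes extends what it can automate.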

Kubernetes is the dominant approach to large-scale cloud computing, because it lets developers focus on functionality (we will come back to this) without agonizing over manageability or portability. It also lets IT automate the operational minutiae that can erode uptime.

The result is that applications come to market faster, scale seamlessly and can run anywhere.

The magic of Kubernetes is that it is the gift that keeps on giving. The more you put into Kubernetes, the more it can automate and abstract, and the greater the value you can extract. That applies to applications, infrastructure and data. If you only use Kubernetes for applications, you are tapping into only a fraction of its value. For this reason, Kubernetes disproportionately rewards the bold. That has driven the parallel rise of object storage, which can be containerized more easily than legacy storage options like block and file.

The Object Storage Wave

CPU, network and storage are the physical layers that Kubernetes abstracts. They have to be abstracted so that applications and data stores can run as containers anywhere. Here, the data stores include all persistent services (databases, message queues and object stores).

From the Kubernetes perspective, object stores are no different from any other key-value store or database: the storage layer is reduced to the physical or virtual drives underneath. The need to run persistent data stores as containers arises from hybrid cloud portability. Leaving essential services to external physical appliances or to the public cloud takes away the benefits of Kubernetes automation.

Traditionally, applications relied on databases to store and work with structured data, and on storage (such as local drives or distributed file systems) to house all of their unstructured and even semi-structured data. However, the rapid rise in unstructured data challenged this model. As developers quickly learned, POSIX (the Portable Operating System Interface) was too chatty, carried too much overhead to let applications perform at scale, and was confined to the data center, since it was never meant to provide access across regions and continents.

This led them to object storage, which is designed around RESTful APIs (as pioneered by AWS S3). Applications were now freed from the burden of managing local storage, making them effectively stateless, since the state lives in the remote storage system.
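A minimal sketch of that stateless pattern, assuming a hypothetical `ObjectStore` interface with `put` and `get` by key. The in-memory class stands in for a remote S3-compatible bucket so the example runs without a network; against a real store, the same two calls would be HTTP PUT and GET requests. Because the handler keeps nothing in the process, any replica can serve any request.

```python
from typing import Dict, Optional, Protocol


class ObjectStore(Protocol):
    """Hypothetical minimal object-store interface: flat keys mapped to blobs."""

    def put(self, key: str, data: bytes) -> None: ...

    def get(self, key: str) -> Optional[bytes]: ...


class InMemoryStore:
    """Stand-in for a remote S3-compatible bucket, for local illustration only."""

    def __init__(self) -> None:
        self._objects: Dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = bytes(data)

    def get(self, key: str) -> Optional[bytes]:
        return self._objects.get(key)


def append_log_line(store: ObjectStore, user: str, line: str) -> int:
    """Stateless handler: read state from the store, update it, write it back.

    Nothing is kept between calls, so any container replica can handle the
    next request. Returns the number of lines now stored for the user.
    """
    key = f"logs/{user}.txt"
    existing = store.get(key) or b""
    updated = existing + line.encode("utf-8") + b"\n"
    store.put(key, updated)
    return updated.count(b"\n")


if __name__ == "__main__":
    store = InMemoryStore()
    print(append_log_line(store, "alice", "login"))   # 1
    print(append_log_line(store, "alice", "upload"))  # 2
```

The read-modify-write here ignores concurrent writers for brevity; a production design would more likely write one immutable object per event, which also suits how object stores are typically used.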

Today, applications are built from the ground up with this expectation. Well-designed modern applications that deal with some kind of data (logs, metadata, blobs, etc.) conform to the cloud-native (RESTful API) design principle by saving their state to an appropriate storage system.

This has made object storage the primary storage class of the cloud, as evidenced by the public clouds’ reliance on object storage (and their pricing of block and file), along with the emergence of high-performance object storage in the private cloud and at the edge.

But there is a catch…

Kubernetes is the great leveler. S3-compatible object storage is the perfect storage class for Kubernetes. Combine and repeat, right?

Unfortunately not. It turns out that fully S3-compatible object storage cannot be found on the other public clouds. Each of the public cloud storage services (Google Cloud Storage, Azure Blob Storage, Alibaba Object Storage Service and IBM Cloud Object Storage) has introduced its own proprietary API, and they are fundamentally incompatible with each other.

GCP — not S3-compatible. Azure — not S3-compatible. Alibaba — not S3-compatible. IBM Cloud — not S3-compatible. Oracle Cloud — not S3-compatible.

This is solvable, and Kubernetes is once again the key. The answer is Kubernetes-native, software-defined object storage that exposes the same S3 API wherever it runs. With that, you have everything you need to run your application, big or small, on every cloud, on-prem and edge environment out there.
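Once the same S3-compatible API is available in every environment, portability largely reduces to configuration. The sketch below assumes one S3-compatible endpoint per environment (the hostnames are hypothetical placeholders under the reserved `example.com` domain) and builds path-style object URLs of the form `endpoint/bucket/key`. The application logic never branches on which cloud it is running in. It only constructs URLs; it does not sign or send requests.

```python
from urllib.parse import quote

# Hypothetical endpoints: one S3-compatible object store deployed per environment.
ENDPOINTS = {
    "aws": "https://s3.aws.example.com",
    "azure": "https://objects.azure.example.com",
    "gcp": "https://objects.gcp.example.com",
    "on-prem": "https://minio.dc.example.com:9000",
    "edge": "http://minio.edge.example.com:9000",
}


def object_url(environment: str, bucket: str, key: str) -> str:
    """Build a path-style S3 URL; only the endpoint differs between environments."""
    try:
        endpoint = ENDPOINTS[environment]
    except KeyError:
        raise ValueError(f"unknown environment: {environment!r}") from None
    # Keep '/' in the key as a path separator; percent-encode other unsafe characters.
    return f"{endpoint.rstrip('/')}/{quote(bucket, safe='')}/{quote(key, safe='/')}"


if __name__ == "__main__":
    for env in ENDPOINTS:
        print(env, object_url(env, "media", "videos/intro.mp4"))
```

Contrast this with writing a separate client for each provider's native API: here, adding an environment means adding one configuration entry rather than one integration.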

Who Benefits from Kubernetes-Native, Software-Defined Object Storage

There is a superb post from the team at Andreessen Horowitz on the cost of the cloud to public, cloud-first companies. In that post, the team builds on something we have been saying for several quarters now: that the cloud is a great place to learn, to focus on the product and to be agile, but at scale it simply doesn’t make sense long term.

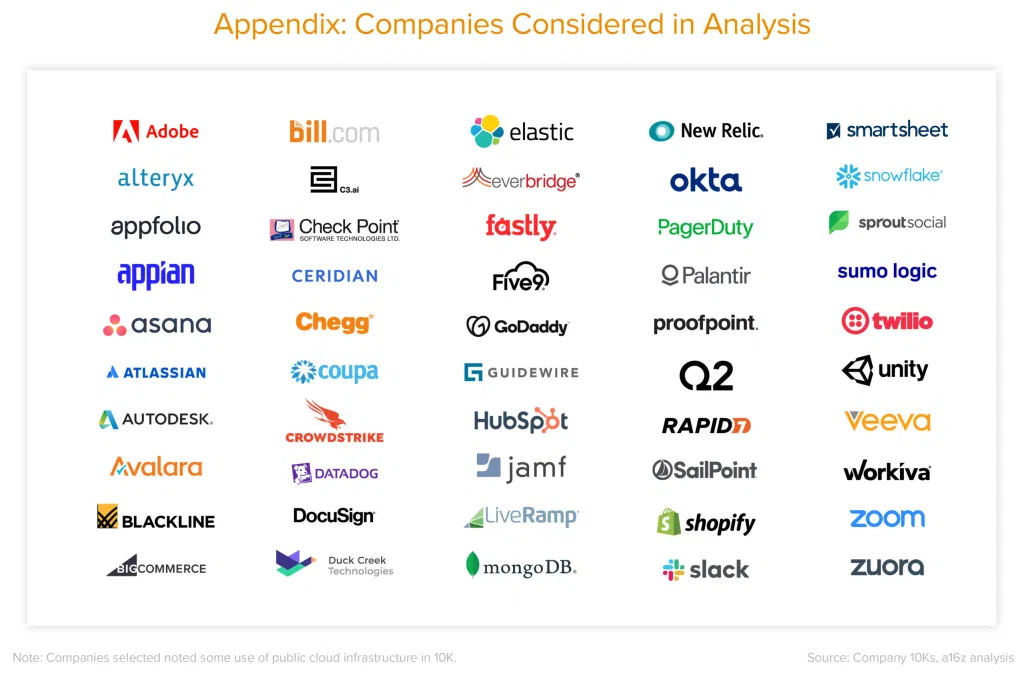

Think about the cloud native elite. A16Z identifies this list:

They have each been built with elastic compute, networking and storage as foundational components and self-service/multi-tenancy as the vehicle for customer engagement. But that is not what made them successful, nor are those characteristics exclusive to the public cloud. These businesses succeeded at scaling by having a laser focus on their product, which almost always meant running on a single public cloud platform. That platform was disproportionately AWS for an obvious reason: it was where the customers were. It created an exceptionally powerful cycle for AWS, and an exceptionally profitable one.

The choice of a public cloud was important, but it wasn’t game-changing. There are tens of thousands of businesses built on top of AWS, but very few of them make the Cloud 100 lists. The ones that do have great product/market fit, deliver simplicity, are easy to use, have transparent economics, and scale up and down dynamically in a cloud-native way.

Every one of these companies has faced (or is now facing) a legitimately game-changing question: if I want to keep growing, I have to be on more clouds, so how do I achieve that? It is not just about other public clouds. The question is broader and includes private clouds, Kubernetes distributions and, for some, even edge clouds.

There are two choices for technology strategy teams to consider. One, build out expertise and write code specifically for each cloud’s set of APIs that they want to pursue. Two, find a solution that abstracts the infrastructure, so they can simplify application architecture into a single code base capable of running on any cloud.

I think you know where this is going.

Bespoke cloud integrations turn out to be a terrible idea. Each additional native integration adds an order of magnitude more complexity. The third cloud isn’t easier than the first two; it is actually harder. It turns out to be an n-body problem: the inevitable inconsistencies in functionality and performance become harder to abstract, and the compromises of the lowest common denominator become harder to manage. If you invest in dedicated teams, you are investing $5M to $10M per platform, per year, in engineers (if you can attract and onboard them). Storage costs will vary, and so will the cost of losing that tribal knowledge. In the end, the approach results in buggy and unmaintainable application code.
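The cost argument can be made concrete with the figure quoted above ($5M to $10M per platform, per year, in engineers). The sketch below multiplies that range by the number of platforms and, to illustrate the "n-body" point, counts the pairs of platforms whose inconsistencies a bespoke design must reconcile, n(n-1)/2. The pairwise count is an illustrative model assumed here, not a measured figure, and storage costs are excluded.

```python
from typing import Tuple

# Per-platform, per-year engineering cost range quoted in the text (USD).
LOW_COST_PER_PLATFORM = 5_000_000
HIGH_COST_PER_PLATFORM = 10_000_000


def bespoke_engineering_cost(platforms: int) -> Tuple[int, int]:
    """Annual engineering cost range for dedicated per-platform teams (storage excluded)."""
    if platforms < 0:
        raise ValueError("platforms must be non-negative")
    return platforms * LOW_COST_PER_PLATFORM, platforms * HIGH_COST_PER_PLATFORM


def pairwise_interactions(platforms: int) -> int:
    """Illustrative n-body count: distinct platform pairs whose differences must be reconciled."""
    return platforms * (platforms - 1) // 2


if __name__ == "__main__":
    for n in (2, 3, 5, 20):
        low, high = bespoke_engineering_cost(n)
        print(f"{n:>2} platforms: ${low / 1e6:.0f}M-${high / 1e6:.0f}M per year, "
              f"{pairwise_interactions(n)} platform pairs")
```

Under these assumptions, 20 platforms means $100M to $200M a year in engineering alone, with 190 platform pairs to keep consistent. Going from two clouds to three adds two new pairs where the second cloud added one, which is one way to read the claim that the third cloud is harder than the first two.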

Modern Storage at Scale

While Kubernetes solves a ton of challenges in this regard and has commoditized significant portions of the infrastructure stack in the cloud, the sticking point has been storage. Block and file are not cloud-native by nature, and the POSIX API has never been a good complement to Kubernetes, from either a scalability perspective or an operational and maintainability perspective. For Kubernetes to truly succeed, it needs modern storage.

Modern storage at scale is object storage. Full stop.

When these enterprises pair Kubernetes with S3-compatible, high-performance, software-defined object storage, they can solve the problem. Now those businesses can attack every cloud from a product-first perspective, abstracting the infrastructure completely. This actually is game-changing.

When you choose S3-compatible, high-performance, software-defined object storage (promotional plug: that’s us), you are likely investing a small fraction of that amount in engineers per year, plus storage costs (or hardware costs in the case of private/edge clouds). That makes the economics a no-brainer, even if you make your product available on 20 platforms.

Enterprises even have the option to roll their own infrastructure clouds with innovative colocation providers like Equinix and others. Here, you sign a long-term lease on the infrastructure, achieving pricing predictability, service guarantees and global reach. You then point your Azure, AWS, Oracle and Tanzu instances there. Yes, you pay for bandwidth, but depending on the application type, and particularly for read-heavy workloads, it will be materially less expensive.

The Multicloud Playbook

Here is the playbook for multicloud architects.

Step 1: Build a great business on the public cloud by focusing solely on your product. It is fast, easy and economically viable, up to a point. We like AWS as a starting point because of S3. Yes, it may seem odd for us to recommend a competitor, but the entire world owes AWS a debt of gratitude for popularizing S3.

Step 2: Develop your Kubernetes chops. Build expertise in developing your software to run on Kubernetes so that it is resilient, performant and scalable. Now is the time to learn the intricacies of getting the most out of the code you’re writing, in containers, on Kubernetes. If you are already in the public cloud (and followed our advice), you have object storage chops too, most likely with S3.

Step 3: Plot your global domination starting with another cloud, based on your own customer and strategy considerations. Use Kubernetes and MinIO (or another S3-compatible, high-performance, software-defined, Kubernetes-native object store) to make the move.

Step 4: Go big. Every public cloud. Every Kubernetes distro. Your own private cloud. Be agnostic from a customer perspective.
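Being agnostic in practice usually means shipping one container image and letting each cluster supply its own settings. A minimal sketch, assuming the deployment injects hypothetical environment variables named `OBJECT_STORE_ENDPOINT`, `OBJECT_STORE_BUCKET` and `OBJECT_STORE_REGION` (for example from a Kubernetes ConfigMap). The variable names and defaults are illustrative, not a MinIO or Kubernetes convention.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class StorageConfig:
    endpoint: str
    bucket: str
    region: str


def load_storage_config(env: Optional[Mapping[str, str]] = None) -> StorageConfig:
    """Read object-store settings from the environment so one image runs on any cluster.

    The variable names are hypothetical; per-cluster values would be injected by
    the deployment (for example via a ConfigMap), not hard-coded in the image.
    """
    env = os.environ if env is None else env
    endpoint = env.get("OBJECT_STORE_ENDPOINT", "").strip()
    if not endpoint:
        raise RuntimeError("OBJECT_STORE_ENDPOINT is not set")
    return StorageConfig(
        endpoint=endpoint.rstrip("/"),
        bucket=env.get("OBJECT_STORE_BUCKET", "app-data"),
        # S3 clients often expect a region string even for self-hosted stores;
        # this fallback value is an assumption for illustration.
        region=env.get("OBJECT_STORE_REGION", "us-east-1"),
    )
```

With this shape, moving to a new cloud, Kubernetes distribution or private cluster changes the values the cluster provides, not the code in the image.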

It seems overly simple, but truthfully it works and many of the companies on the list above are already doing it exactly this way. You can try it for yourself in an afternoon.