The Lifecycle of the Cloud

The double whammy of inflation (and correspondingly high interest rates for the foreseeable future), demand destruction and an uncertain outlook have companies aggressively looking for cost savings. Now.

While reducing headcount is a current play for most of the tech industry, there is real money in the data stack. Every company is a data company at this juncture - it doesn’t matter if you disassemble cars or build satellites. Data drives everything. Furthermore, every company is exposed to the cloud (meaning the operating model, not the location; when we mean location, we will say public or private explicitly). It may be the tooling (RESTful APIs, automation, microservices), applications or the infrastructure itself - but it is all cloud these days.

The change in economic conditions has changed the collective conformity as it relates to the public cloud. The tap tap tapping began a couple of years ago, but became more jackhammer-like when Martin Casado and Sarah Wang penned their superb piece of research entitled The Cost of Cloud, a Trillion Dollar Paradox.

It was highly controversial at the time, so much so that Casado made a point of saying that it was NOT a call for repatriation (it was). There is virtue in the cloud. This is a fact. Casado and Wang summed it up very nicely:

“You’re crazy if you don’t start in the cloud; you’re crazy if you stay on it.”

Think of this as the new cloud operating lifecycle.

While Casado’s post got a lot more views than ours (and had a lot better data), we were a full year ahead of him with our piece, “When Companies Should Go to the Public Cloud + When they Should Come Back.”

The net of our piece is as follows:

The public cloud is one of the foundational components of the enterprise technology stack. It is a multi-billion dollar business for nearly a dozen companies, led by the exceptionally talented and driven team at Amazon. Amazon’s empire was built on customer obsession and simplicity. Need compute? Done. Need storage? Done. Want to expand that storage? Done. Want to analyze the data inside that storage? Done.

Amazon eliminated the friction associated with cloud technologies and shifted the balance of power to the developer community and away from IT.

With the concept of elasticity, the public cloud optimized on flexibility, developer agility and CAPEX. Furthermore, with a growing suite of accompanying services (databases, AI/ML) that were beyond the capability of anyone but the most sophisticated hyper-scalers, the public cloud was a massive accelerant of the shift from IT to developers. In the span of a few years, developers became the engine of value creation in the enterprise.

This absolutely worked. Until the bills started to balloon.

Ultimately, the public cloud, for all its benefits, doesn’t deliver cost-saving at scale; it delivers productivity gains - but only to a point. The ease of spinning up an instance, coupled with the ease of forgetting about it, results in massive bills. The mere act of interacting with your data generates egress costs, which have been shown to be egregiously predatory. This is particularly true when the applications are data intensive (high volume/velocity/variety read and write calls) - they just are not sustainable in the public cloud.

If your enterprise is dealing with petabytes, the economics favor the private cloud. Yes, that means building out the infrastructure (or leasing it from someone like Equinix) including real estate, HW, power/cooling, but the economics are still highly favorable as we will demonstrate.

The public cloud is an amazing place to learn the cloud-native way and to get access to a portfolio of cloud-native applications, but it is not an amazing place to scale – unless of course, you are the landlord (AWS, GCP, Azure, Alibaba).

Optimizing for Scale: The Private Cloud

The place to scale is on the private cloud, using the same technologies that you used on the public cloud: S3 API compatible object storage, dense compute, high-speed networking, Kubernetes, containers and microservices.

This methodology offers the ideal mix of operational costs, flexibility and control. It is true that you will take on CAPEX for hardware, but by starting small and taking advantage of key cloud lessons (elasticity and scaling by component), enterprises can minimize the initial outlay and maximize the operational savings.

Related: What is a Private Cloud? Benefits & Use Cases

The net effect is to create superior TCO and therefore ROI through repatriation to the private cloud. This will be the defining play for enterprises in 2023.

Our CEO, AB Periasamy, has an analogy that is perfect here: the public cloud is like a nice hotel. Plenty of amenities, secure, spacious etc. It is priced like a nice hotel too. People don’t live in nice hotels - they stay there for a period of time to achieve a certain objective (business trip, vacation) because it gets too expensive otherwise.

The private cloud is like an apartment (i.e. opex with fixed costs) or a home (i.e. capex). Monthly costs are predictable and consistent. There is some friction with moving (analogous to expanding), but when your workloads are known, you shouldn’t have to move often.

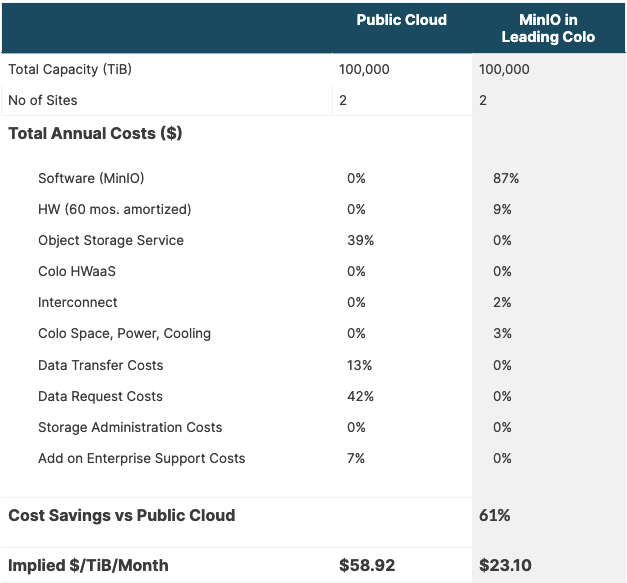

We recently conducted an analysis with the team at one of the world’s largest colos and wanted to make this concrete for you. We absolutely encourage you to reach out and tell us if you think anything is wrong in the analysis. We use published data from one of the leading public clouds - and you can expect it to be similar across them all with some variation. This is not to pick on the public cloud providers – they are partners of MinIO and our colo partner – it is simply to illustrate the new lifecycle of the cloud: start in the public cloud and operationalize on the private cloud.

The charts and table below go into the details of the cost breakdown for running data infrastructure in Equinix colocation services as opposed to using the AWS S3 service.

Key Assumptions Used for the TCO analysis:

The above table lists all the key categories of costs associated with a public cloud and with MinIO running in a high-end colo. Below is an overview of the key categories used for this TCO analysis and an explanation of how the two options contribute to these costs:

- Software: This is the object storage software used to realize the S3 solution. In the case of the public cloud provider there is no explicit software cost as it is a service. MinIO Software pricing is available here - https://min.io/pricing. For the 100PiB colo model, MinIO software costs account for 87% of the overall cost. While this may come across as very high, it does not include the components listed below (Transfer/Request) and has every imaginable enterprise feature from S3 compatibility to active-active, multi-site replication (something the public cloud options will likely never have). In other words, all the costs that customers pay to realize these capabilities as separate variable costs in the public cloud are packaged into the software costs as annual fixed costs.

- HW: This is the cost of the hardware required to host MinIO software in a Colo or a data center of choice. In the case of the public cloud there is no explicit HW cost incurred as it is a service. MinIO’s software is HW agnostic and requires minimal CPU and memory resources. MinIO’s HW page offers detailed guidance on how to choose HW for MinIO. The beauty of the MinIO solution is that it offers superior price performance for a given HW. This is a function of MinIO’s modern architecture which essentially maxes out any HW profile. In order to make the economical HW costs transparent to our customers, we have also made several calculators available (HW, Erasure Code, Pricing). For the current analysis, we have used this pricing calculator to estimate the cost of HW required to provision 100PB usable capacity. We also assumed that the HW costs are inclusive of the Maintenance, Support and Warranty costs.

- The Object Storage Service: According to the provider (and broadly applicable) “You pay for storing objects in your buckets. The rate you’re charged depends on your objects' size, how long you stored the objects during the month, and the storage class— ranging from frequent to infrequent to really infrequent. You pay a monthly monitoring and automation charge per object stored in the intelligent-tiering storage class to monitor access patterns and move objects between access tiers. There are no retrieval charges, and no additional tiering charges apply when objects are moved between access tiers.” For the purposes of this analysis - all data is stored in object storage.

- Networking/Interconnect: This is the basic networking infrastructure cost related to the colo. The public cloud doesn’t charge for this service explicitly. Based on the colo’s pricing and our best-efforts estimate, this cost was 2% of the overall cost for MinIO running on this colo (which is known for its connectivity).

- Colo: This is the cost to host MinIO on commodity HW in a shared data center. The public cloud doesn’t charge for this cost explicitly.

- Data Transfer Costs: This includes all bandwidth-related costs that the public cloud charges under the “Data Transfer” tab. Our best-efforts estimate puts public cloud transfer costs at 13% and assumes 100TiB of data transferred from Cloud to Internet; 100TiB of data transferred within Cloud (Backbone); and 50PiB of data replicated from one site in US east to another site in US west. Running MinIO in a colo does not result in separate charges for data transfer beyond the fixed costs related to the aforementioned interconnect.

- Data Request Costs: In the public cloud there are various costs associated with various types of data requests. For example, you pay for requests made against your buckets and objects. Request costs are based on the request type, and are charged on the quantity of requests as listed in the table below. This includes interface charges to browse your storage, including charges for GET, LIST, and other requests that are made to facilitate browsing. Charges are accrued at the same rate as requests that are made using the API/SDK. Request types include: PUT, COPY, POST, LIST, GET, SELECT, Lifecycle Transition, and Data Retrievals.

For our current analysis, we assumed that for the given 100PiB capacity of data, 50B objects are written, read, encrypted and transitioned using data lifecycle services each month, at a high level. Per our analysis this cost is 42% of the total public cloud cost, but it is highly variable and can be significantly higher for sustained data-intensive workloads, e.g. Spark. Also note that MinIO doesn’t charge for these features explicitly, and the public cloud’s data request charges alone can surpass the overall cost of running MinIO in a colo.

- Add-on Enterprise Support Costs: We assumed an average support cost of 7% of the overall costs based on the guidance provided by different clouds. MinIO doesn’t charge for any additional support. It is included in the commercial license. When it comes to support, we have turned it into a software problem and solved it using MinIO SUBNET.

- Implied $/TiB/Month: This is the fully loaded cost (for 100PiB) per TiB per month for using two regions vs running MinIO in two colos.
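The implied unit cost can be reproduced from any total with a small helper. The sketch below is illustrative only: it assumes the total being divided is an annual figure, and the function name and the example total are placeholders rather than numbers from the analysis.

```python
def implied_cost_per_tib_month(total_annual_cost_usd: float, capacity_pib: float) -> float:
    """Fully loaded cost per TiB per month, given an annual total (assumed annual)."""
    if capacity_pib <= 0:
        raise ValueError("capacity_pib must be positive")
    capacity_tib = capacity_pib * 1024  # 1 PiB = 1024 TiB
    return total_annual_cost_usd / capacity_tib / 12  # 12 months per year


# Placeholder total chosen for round numbers, NOT a figure from the analysis.
example_annual_total_usd = 12_288_000.0
example = implied_cost_per_tib_month(example_annual_total_usd, capacity_pib=100)
print(f"${example:.2f} per TiB per month")  # prints "$10.00 per TiB per month"
```

Plugging in the fully loaded totals for each option (two regions vs. two colos) gives directly comparable unit costs.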

Based on the retail price of the public cloud service and the overall costs associated with a top end colo (space, cooling, power), HW and MinIO SW costs, the cost of running data infrastructure in the private cloud can be significantly lower for large scale capacity (10PiB+ aggregate capacity).

These numbers only get better at larger capacities.

It goes without saying that if you are talking about more than 10PiB you are going to get the full attention of both MinIO and any public cloud player. The result could move the needle - particularly in favor of the MinIO/colo solution. Savings could potentially approach 90% or so.

This isn’t a race to the bottom. Cost is but one factor to consider in your overall analysis, but if your costs are getting bigger with each passing quarter, a reset of 90% without having to change a single line of code (just update the bucket names) should be appealing - particularly in this economy.

This was recently validated by a MinIO customer who repatriated over 500PiB of AWS S3 data to their private cloud. In this particular case the cost savings over five years are greater than 50x.

As noted in the cost breakdown above, over 60% of the public cloud storage cost is attributed to data transfer and service costs, which are highly variable and depend on the nature of the compute workloads that access this data (e.g. Database, Cloud Native Applications, Spark Pipelines, AI/ML Training and Inferencing etc.). By migrating the data infrastructure to a colo, customers not only avoid the data transfer and request costs completely, but also convert their infrastructure setup and operational costs to a fixed and predictable cost on an annual or multi-year basis.
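One way to arrive at the "over 60%" figure is to add up the public cloud shares quoted in the category list: 13% for data transfer, 42% for data requests and 7% for enterprise support. Grouping these three together is our reading of the text, not a breakdown the analysis states explicitly, and the dictionary keys below are illustrative names.

```python
from typing import Mapping

# Percent of total public cloud cost, as quoted in the category list.
public_cloud_shares = {
    "data_transfer": 13,
    "data_requests": 42,
    "enterprise_support": 7,
}


def variable_share(shares: Mapping[str, int]) -> int:
    """Sum the percentage shares of the usage-dependent cost categories."""
    return sum(shares.values())


variable_pct = variable_share(public_cloud_shares)
# Assumption: whatever remains is mostly the storage service charge itself.
remaining_pct = 100 - variable_pct
print(f"variable: {variable_pct}%, remaining: {remaining_pct}%")
```

Under that reading, 62% of the public cloud bill moves with workload behavior rather than with the amount of data stored.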

Totaling Up the Private Cloud

The cost to run your own 100 PiB private cloud, with state of the art hardware, 24/7 direct-to-engineer support, panic button access and annual performance reviews is at the most $0.023 per GB per month based on pre-negotiated pricing metrics. Let’s circle back and compare that to what we calculated above.
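To put the per-GB figure in the same units as the rest of the analysis, it helps to convert it to a monthly total and to $/TiB/month. The sketch below assumes "GB" is billed as a binary gigabyte (GiB); with decimal gigabytes the totals would be roughly 7% higher. The function and constant names are illustrative.

```python
GIB_PER_TIB = 1024
GIB_PER_PIB = 1024 * 1024  # 1 PiB = 1024 TiB = 1,048,576 GiB


def monthly_cost_usd(capacity_pib: float, price_per_gb_month: float) -> float:
    """Monthly cost for a capacity in PiB at a per-GB price (GB assumed binary)."""
    return capacity_pib * GIB_PER_PIB * price_per_gb_month


def price_per_tib_month(price_per_gb_month: float) -> float:
    """Convert a per-GB monthly price to a per-TiB monthly price."""
    return price_per_gb_month * GIB_PER_TIB


monthly = monthly_cost_usd(100, 0.023)   # about $2.41 million per month
annual = monthly * 12                    # about $28.9 million per year
per_tib = price_per_tib_month(0.023)     # about $23.55 per TiB per month
print(f"monthly ${monthly:,.2f}, annual ${annual:,.2f}, ${per_tib:.2f}/TiB/month")
```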

Summarizing the One Year Costs

At a maximum fully loaded unit cost (includes hardware) of $0.023 per GB per month, MinIO and our colo partner represent the best economics - by a considerable amount. The combination is over 60% less expensive than standard pricing from the public cloud (note we are using the latest non-discounted prices).

If you want to understand the breakeven point for building your own private cloud vs. any public cloud service, let’s talk. There are some factors that we can take you through that will be educational if nothing else.

The overall point is not to make price the sole arbiter of decision making. It is but one component among many, depending on the business and the workload. Indeed, there are benefits to be had from a colocation model around performance, security, control, optionality and flexibility - in addition to cost. That is why many innovative enterprises are engaged in large scale repatriation strategies: they realize the cloud is here to stay, that they have a choice about what and where that cloud is, and they are picking the private cloud once they understand the parameters of their workloads.

Don’t take our word for it. You can test this out yourself. Download MinIO. Questions? Ask on our Slack channel or send us a note at hello@min.io.