The Story of DirectPV

In 2020, MinIO implemented Direct Persistent Volumes (DirectPV) for Kubernetes-based deployments of MinIO Storage. DirectPV is similar to LocalPV but dynamically provisioned.

In this post, I will describe the interesting design decisions that went into creating DirectPV. But before diving into the design details, let’s start with a quick review of Direct Persistent Volumes vs. Network Persistent Volumes.

What is DirectPV?

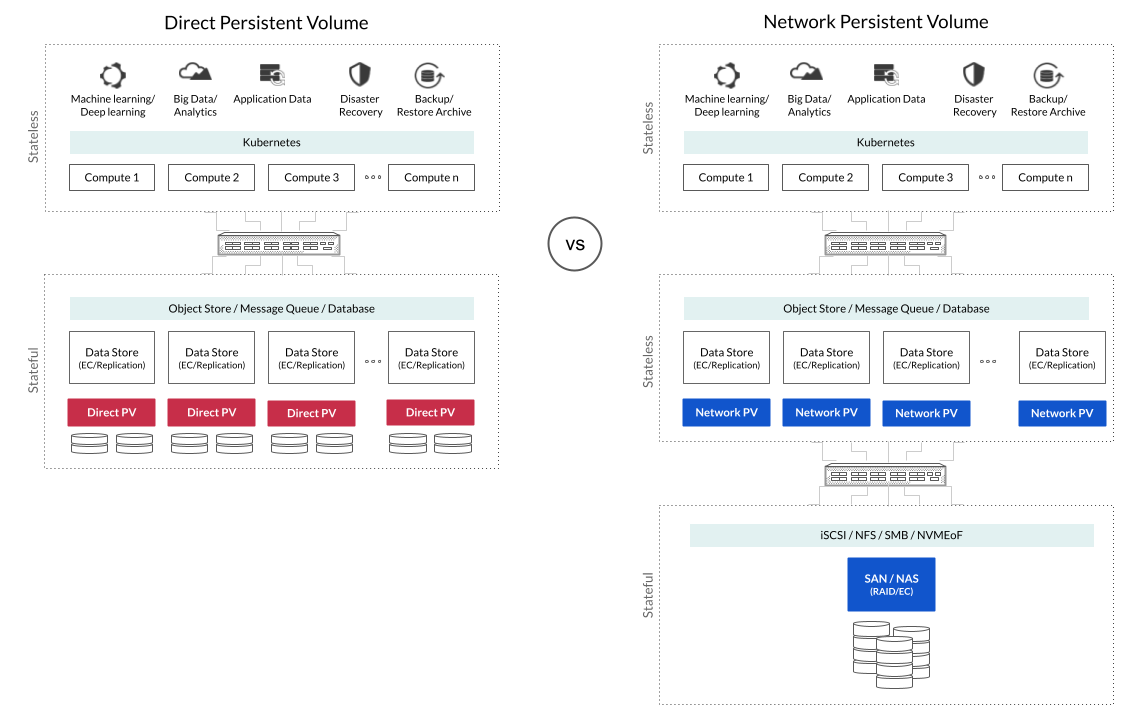

DirectPV is a Kubernetes Container Storage Interface (CSI) driver for Direct Attached Storage. It provisions local volumes for workloads. Put simply, workloads using DirectPV use local disks directly instead of remote disks. This is unlike SAN- or NAS-based CSI drivers (Network PV), which add yet another layer of replication or erasure coding and extra network hops in the data path. That additional layer of disaggregation increases complexity and degrades performance.

With DirectPV, you can discover, format, and mount the drives to make them ready for provisioning. Once the drives are initialized, the volumes for the workloads can be scheduled on the drives using the “directpv-min-io” storage class.

For more information, refer to https://github.com/minio/directpv

Challenges in designing

“Simplicity is the ultimate sophistication.” — Leonardo da Vinci

More than explaining the functionality and use cases of DirectPV, I would like to cover some of the interesting design decisions taken during the development phase.

Here are some of the primary challenges faced during the design phase of DirectPV.

Challenge 1: Matching the local state and remote state of the drive

DirectPV saves and maintains the local drive states in Kubernetes custom resources. Some states, like drive names and ordering, may not persist across node reboots or drive hot swaps. Because DirectPV performs sensitive operations such as formatting and mounting, the drive states it remembers must be kept continuously in sync with the actual local device states. This is critical: if it is not handled properly, it may lead to data loss.

Earlier versions of DirectPV matched the local drive (on the host) with the remote drive (the Kubernetes drive resource) using identifying properties such as the WWID, the drive's serial number, and the partition UUID.

This approach had the following problems:

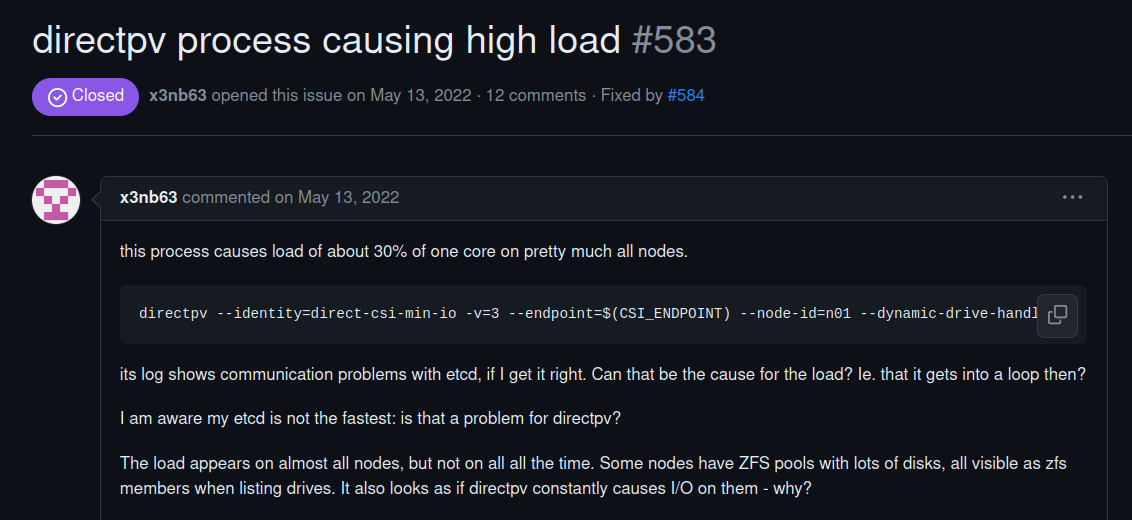

(a) Probing the devices was costly and had adverse side effects

We issued ioctl calls to read each drive's serial number from its S.M.A.R.T. (Self-Monitoring, Analysis, and Reporting Technology) data. As a side effect, these calls triggered a flood of CHANGE udev events, causing CPU spikes in the DirectPV udev listeners.

(b) Matching the drives by their properties is critical but was unreliable

As mentioned earlier, the saved drive states must be kept continuously in sync with the local states because DirectPV performs host-level operations such as formatting and mounting. Earlier versions of DirectPV matched drives on multiple properties, such as the WWID, serial number, and major-minor number. These properties were not reliable for matching because they differ across drive vendors: not every vendor exposes the same information about its drives. The risk is that the wrong drive may be formatted or mapped to the wrong volumes. One such example is explained in this issue.
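To make the risk concrete, here is a minimal sketch of property-based matching going wrong. It is an illustration under stated assumptions, not DirectPV's legacy code: the `Drive` type, the `match_by_properties` function, and the fallback to the major-minor number are all hypothetical. It assumes a vendor that reports neither a WWID nor a serial number, and a reboot that changes the order in which the kernel enumerates the drives.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Drive:
    """Properties a probe might report; any identifier may be missing."""
    name: str
    major_minor: str
    wwid: Optional[str] = None
    serial: Optional[str] = None


def match_by_properties(saved: Drive, probed: List[Drive]) -> List[Drive]:
    """Return the probed drives that agree with the saved record.

    Stable identifiers are compared when the record has them; otherwise
    the only thing left to compare is the major:minor number.
    """
    def agrees(candidate: Drive) -> bool:
        if saved.wwid is not None or saved.serial is not None:
            return (candidate.wwid, candidate.serial) == (saved.wwid, saved.serial)
        return candidate.major_minor == saved.major_minor

    return [d for d in probed if agrees(d)]


# Record saved before a reboot, for a drive that reports no WWID or serial.
saved_record = Drive(name="sdb", major_minor="8:16")

# After the reboot the drives are enumerated in a different order:
# the original drive is now sdc (8:32) and a different drive took 8:16.
original_drive = Drive(name="sdc", major_minor="8:32")
other_drive = Drive(name="sdb", major_minor="8:16")

matches = match_by_properties(saved_record, [original_drive, other_drive])
print(matches)  # only other_drive matches, although it is the wrong disk
```

In this scenario the saved record silently attaches to a different physical disk, which is exactly the situation in which a format or mount operation becomes dangerous.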

Solution:

Having seen the challenges of probing the devices for information and of keeping the local and remote drive states in sync, we found a simple way out: reduce the scope of the drives managed by DirectPV.

We moved from managing all the drives in the cluster to just managing the drives that were provided to DirectPV. The design was changed a little bit to accomplish this.

As shown above, in the legacy versions of DirectPV, as soon as the server starts, it probes all the drives in all nodes in the cluster and creates corresponding drive objects in Kubernetes (custom resources). These drive objects are listed and formatted. As stated earlier, if the drive states are not reliable, this may lead to formatting the wrong drive.

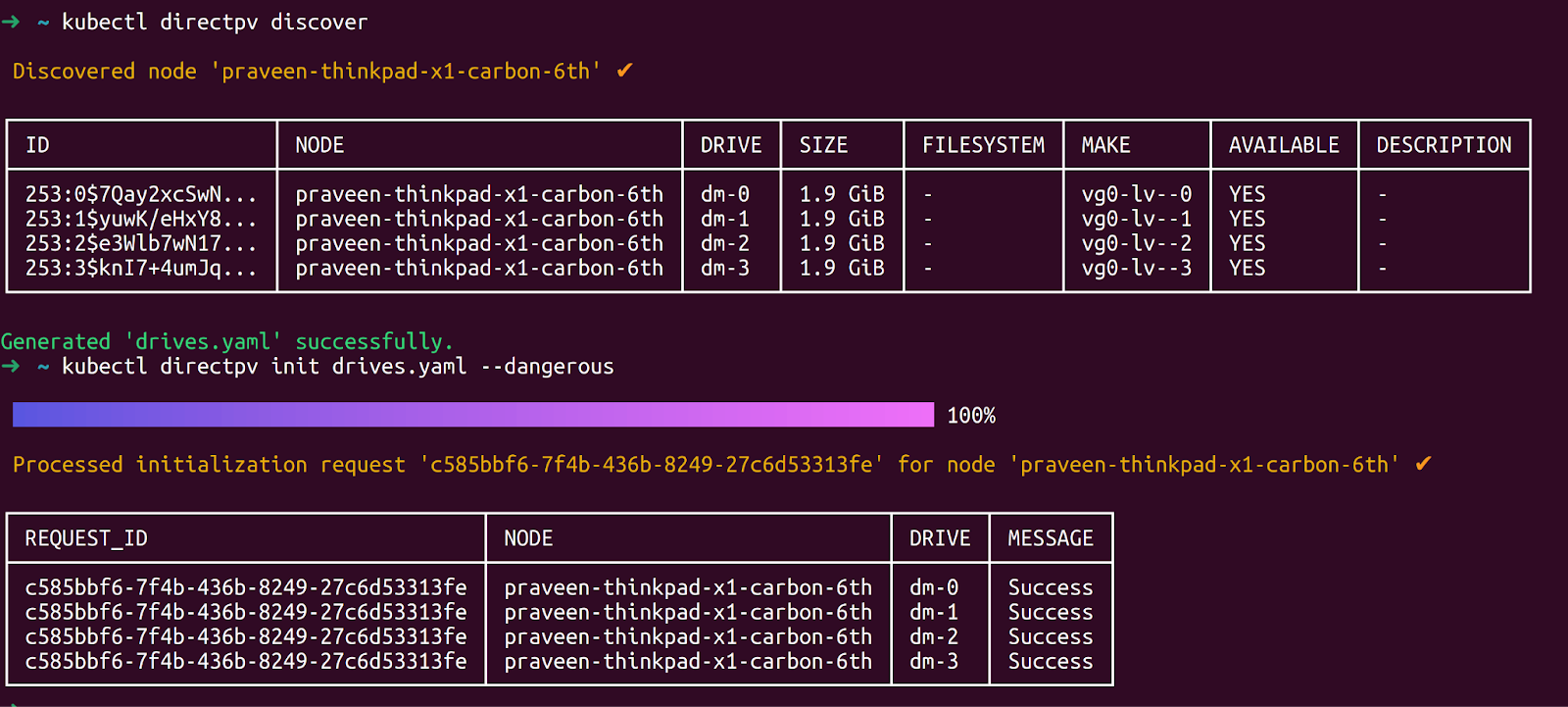

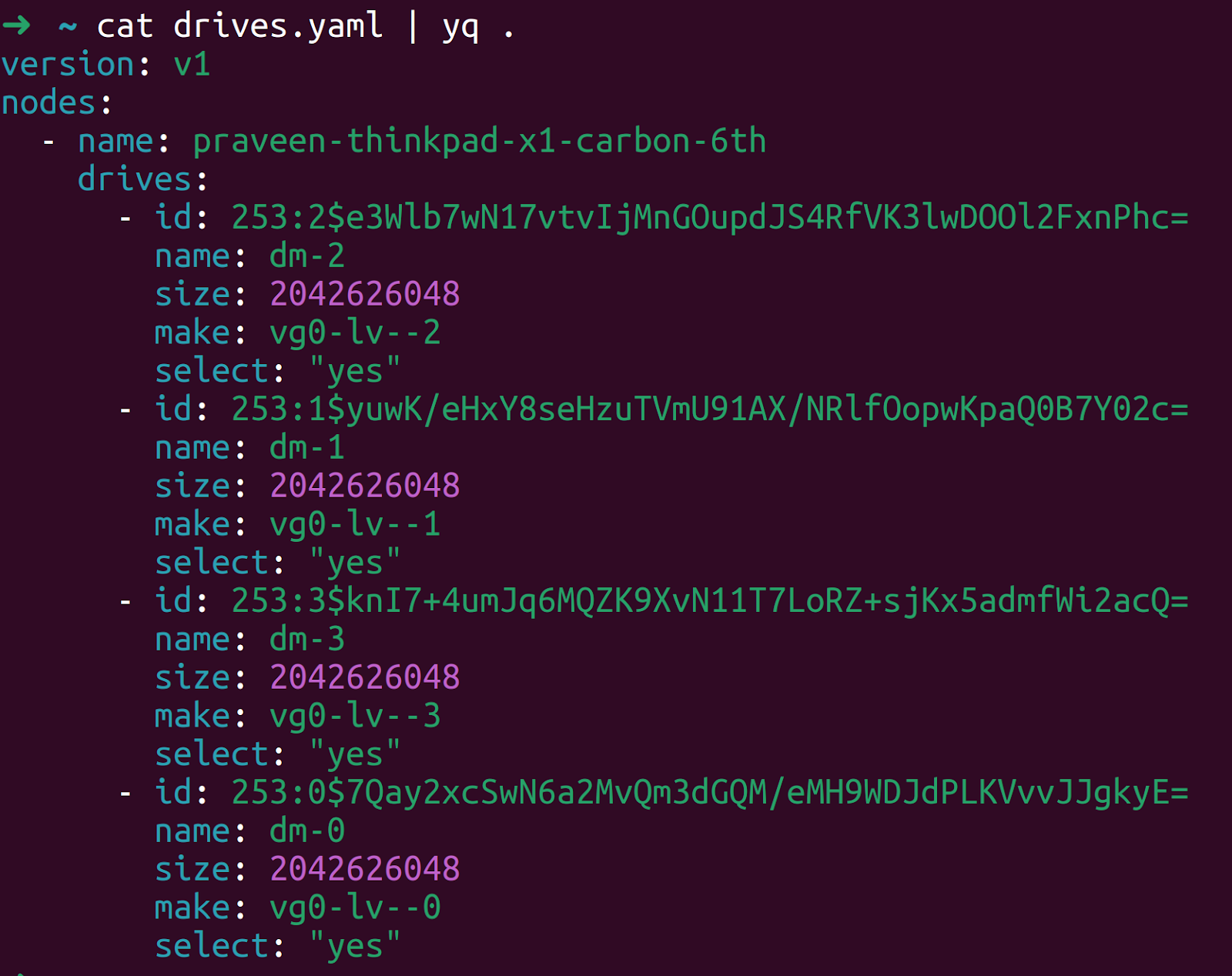

The latest version of DirectPV performs an on-demand probe. It probes the drives from the cluster in real time when the user executes the command `kubectl directpv discover`. Unlike the legacy design, this is stateless, and no drive states are saved in Kubernetes. The user reviews the probed drives' output and then adds them to DirectPV by issuing the `kubectl directpv init` command.

As part of the initialization process, the drive is formatted with a unique file system UUID (FSUUID). Upon successful execution, a drive object is created with that UUID. The FSUUID is unique to an “initialized” drive, so we can reliably use it for matching. It also gives us strong evidence of tampering: if the FSUUID of an initialized drive changes on the host because an external process overwrote it, the drive can no longer be matched and is considered corrupted. We no longer need any other, costlier probes.
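The matching rule described above can be sketched in a few lines. This is a simplified model, not DirectPV's implementation: the `DriveRecord` type, the `initialize` and `reconcile` functions, the `"Ready"` and `"Corrupted"` status names, and the dictionary standing in for the host's block devices are all assumptions made for illustration.

```python
import uuid
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class DriveRecord:
    """Stands in for the drive object created after initialization."""
    fsuuid: str
    status: str = "Ready"  # hypothetical status name


def initialize(device: str, host_fsuuids: Dict[str, str]) -> DriveRecord:
    """Simulate formatting: stamp a fresh FSUUID on the device and record it."""
    fsuuid = str(uuid.uuid4())
    host_fsuuids[device] = fsuuid
    return DriveRecord(fsuuid=fsuuid)


def reconcile(record: DriveRecord, host_fsuuids: Dict[str, str]) -> Optional[str]:
    """Return the device that currently carries the record's FSUUID.

    If no device on the host carries it, mark the record as corrupted.
    """
    for device, fsuuid in host_fsuuids.items():
        if fsuuid == record.fsuuid:
            return device
    record.status = "Corrupted"  # hypothetical status name
    return None


# host_fsuuids maps device path -> FSUUID currently found on that device.
host = {"/dev/sdb": "leftover-fsuuid"}
record = initialize("/dev/sdb", host)

# After a reboot the same disk shows up under a different name.
host = {"/dev/sdc": record.fsuuid}
print(reconcile(record, host))  # /dev/sdc

# An external process reformats the disk, replacing the FSUUID.
host["/dev/sdc"] = str(uuid.uuid4())
print(reconcile(record, host), record.status)  # None Corrupted
```

Because the lookup depends only on the FSUUID, a renamed or reordered device is still found, while an overwritten one is never confused with a healthy drive.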

Challenge 2: Hitman-style declarative formatting and retries

By definition, event controllers in Kubernetes are declarative and play a crucial role in maintaining the desired state of a resource. A controller retries on errors until the resource reaches the desired state. This has been a successful approach in Kubernetes for managing and maintaining the state of resources.

The legacy versions of DirectPV followed a similar approach. The drive (custom resource) event controller listens for format drive requests and reacts. When formatting fails, the controller retries with an incremental backoff. This approach is unsafe when the state of the drives changes during the periodic retries. It is like “sending a hitman to hunt down the disk and keep retrying.”

Solution:

We changed the design to abide by the following rules:

- No automatic retries for formatting

- Verify the drive and be 100% sure before formatting

The initialization (formatting) is real-time, with no automatic retries. Most importantly, no critical drive operations are done on cached drive states.

Whether the initialization succeeds or fails, the result is reported to the user. If it fails, the error is communicated and it is up to the user to retry the initialization.

The config file provided to the init command contains a hash for each drive that is unique to that drive. Before formatting, the DirectPV server verifies that the hash in the request matches the hash it calculates for the drive. If they differ, it returns an error stating that the drive's state has changed.
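The two rules combine into a single verify-then-format attempt, sketched below. This is an illustration only: how DirectPV actually computes the drive hash is not described here, so `drive_hash` (SHA-256 over sorted properties), the property names, `init_drive`, and the `DriveStateChanged` exception are all hypothetical.

```python
import hashlib
from typing import Callable, Dict


class DriveStateChanged(Exception):
    """Raised when the drive no longer matches what the user reviewed."""


def drive_hash(properties: Dict[str, str]) -> str:
    """Hash a drive's probed properties in a stable key order (assumed scheme)."""
    canonical = "\n".join(f"{key}={properties[key]}" for key in sorted(properties))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def init_drive(
    requested_hash: str,
    probe: Callable[[], Dict[str, str]],
    format_drive: Callable[[Dict[str, str]], None],
) -> None:
    """Make exactly one attempt: probe fresh, verify the hash, then format.

    There is no retry loop; any error propagates to the caller.
    """
    current = probe()  # always a fresh probe, never a cached state
    if drive_hash(current) != requested_hash:
        raise DriveStateChanged("drive state changed since discovery")
    format_drive(current)


# The user reviewed this drive during discovery; its hash goes in the config.
reviewed = {"name": "sdb", "size": "1000204886016", "filesystem": ""}
requested = drive_hash(reviewed)

formatted = []
init_drive(requested, lambda: dict(reviewed), formatted.append)  # formats once

# Something else wrote a filesystem to the drive in the meantime.
changed = dict(reviewed, filesystem="xfs")
try:
    init_drive(requested, lambda: changed, formatted.append)
except DriveStateChanged as err:
    print("refused:", err)
```

The caller, not a controller loop, decides what happens after a failure, so a drive whose state has changed is never formatted on a later automatic attempt.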

Summary

“Simplicity is not just about removing clutter. It's about bringing clarity to complexity…” - Anand Babu Periasamy

DirectPV represents a big step forward for MinIO customers running MinIO Storage on Kubernetes. Getting there was not easy. This post looked at two high-level challenges the MinIO engineering team faced when implementing DirectPV: matching the local state with the remote state of the drive, and hitman-style retries.

If you have any questions, be sure to reach out to us on Slack!