Using K8ssandra to Backup and Restore Cassandra with MinIO

Apache Cassandra is a distributed NoSQL database that enjoys broad popularity in the developer community. From Ably to Yelp, Cassandra is commonly deployed because of its ability to quickly process queries across petabytes of data and scale to hundreds or thousands of nodes. Cassandra can be deployed to any environment with relative ease, scales both up and out, and operates well in distributed mode. It is also flexible in how it stores data and can handle high volume without compromising performance. Because it is open source, fixes ship on a quick iteration cycle, and the Cassandra Query Language (CQL) is easy to learn for anyone who has worked with SQL syntax.

Cassandra is commonly used for write-intensive data models such as metrics and time series data, page-view history, taxi tracking, etc. What folks stress most when it comes to Cassandra is pairing the right data model with an appropriate use case. And, of course, make sure you tune your JVM properly in order to have a pleasant Cassandra experience.

Cassandra comes in a few different flavors:

- The Apache version, which is the original open source Cassandra first developed at Facebook (now Meta). It has all the core features that are required to run Cassandra, but support is community based. You have to be familiar with the command line tools to manage and operate the cluster.

- The DataStax version of Cassandra, which is built on top of the open source version but with a focus on security and supportability for the enterprise. For instance, one cool feature it provides is OpsCenter, a browser-based console UI that shows the state of the cluster and can be used to perform operational tasks.

There are several ways to deploy Cassandra: bare metal, VMs, containers, etc. Today we’ll deploy it to a Kubernetes cluster with the help of K8ssandra. This will set up all the necessary scaffolding to get our Cassandra cluster up and running in no time.

No matter which method you use to deploy Cassandra, it makes sense to pair it with cloud-native object storage to make the most of it. MinIO is the perfect complement to Cassandra/K8ssandra because of its industry-leading performance and scalability, a combination that puts every data-intensive workload, not just Cassandra, within reach. MinIO has created a comprehensive blueprint for data infrastructure to support exascale AI and other large-scale data lake workloads. It is called the MinIO DataPod. Why? Because exascale data is a reality in today's enterprise.

MinIO was designed and built to be Kubernetes-native and cloud-native, and to scale seamlessly from TBs to EBs and beyond. MinIO tenants are fully isolated from each other in their own namespace. By following the Kubernetes plugin and operator paradigm, MinIO fits seamlessly into existing DevOps practices and toolchains, making it possible to automate Cassandra backup operations. MinIO makes a safe home for Cassandra backups. Data written to MinIO is immutable, versioned and protected by erasure coding. Let’s look at how you can back up and restore data fast with MinIO to minimize downtime.

Prerequisites

Before we get started there are some prerequisites you need to have ready for our installation.

- Helm

- Kubernetes cluster (Minikube, Kind etc.)

In this guided tour we will use Minikube as our Kubernetes cluster of choice but any properly configured Kubernetes cluster will work.

Cassandra

There are several ways we can install Apache Cassandra. In this example we will deploy Cassandra in a semi-automated way using K8ssandra.

Configuring

We will deploy a simple Cassandra cluster with the following spec. Save this YAML as k8ssandra.yaml.

We will not go through the entire spec but we will go through some of the key components below:

Storage Classes

There are a couple of different storage classes that you can use, but the main requirement is that the VOLUMEBINDINGMODE should be WaitForFirstConsumer.

The default storage class mode is not supported, so let's create a Rancher local-path storage class.

Now you should see a second storage class with the proper config.
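One way to confirm which storage classes qualify is to filter the storage class list by binding mode. The helper below is a minimal sketch rather than anything K8ssandra ships: the function name `list_wffc_storage_classes` is made up for illustration, and it assumes `kubectl` is already pointed at your cluster.

```shell
# Hypothetical helper: print the names of storage classes whose
# volumeBindingMode is WaitForFirstConsumer, one per line.
list_wffc_storage_classes() {
  kubectl get storageclass \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.volumeBindingMode}{"\n"}{end}' |
    awk -F'\t' '$2 == "WaitForFirstConsumer" { print $1 }'
}
```

Calling `list_wffc_storage_classes` after creating the local-path class should list it, assuming it was created with the expected binding mode.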

Medusa

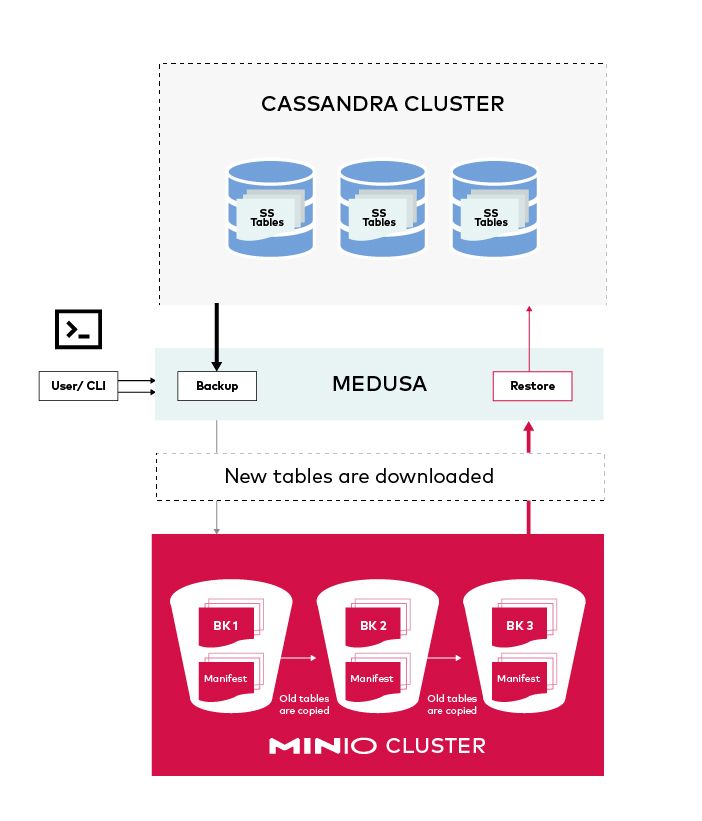

Medusa is a component of the Apache Cassandra ecosystem. At its core Medusa orchestrates the backup and restore of the Cassandra cluster.

The fields of particular interest are:

- host

- port

- storageSecret

- bucketName

These fields allow you to connect to MinIO to perform backup and restore operations.
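As a rough illustration of how these four fields might fit together, the sketch below writes a Medusa fragment to a separate file. The nesting under `medusa` and `storage_properties`, and the `storage: s3_compatible` and `secure` settings, are assumptions based on the K8ssandra 1.x Helm chart layout, so check them against your chart version. The host is assumed to be the in-cluster DNS name of a service called minio in the default namespace, and `medusa-values-sketch.yaml` is a hypothetical file name.

```shell
# Write the fragment to its own file so an existing k8ssandra.yaml is not overwritten.
# Assumption: field nesting follows the K8ssandra 1.x Helm chart values.
# Assumption: MinIO is reachable as service "minio" in namespace "default" on port 9000.
cat > medusa-values-sketch.yaml <<'EOF'
medusa:
  enabled: true
  storage: s3_compatible
  storage_properties:
    host: minio.default.svc.cluster.local
    port: 9000
    secure: "False"
  storageSecret: medusa-bucket-key
  bucketName: k8ssandra-medusa
EOF
```

The storageSecret and bucketName values here are the same names used for the Kubernetes Secret and the MinIO bucket elsewhere in this tutorial.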

Storage Secret

The storage secret is a Kubernetes Secret that we’ll create with credentials to connect to MinIO. Put the below contents in a file called minio-medusa-secret.yaml:

- metadata.name must match the storageSecret field in k8ssandra.yaml.

- aws_access_key_id and aws_secret_access_key must match MinIO’s accessKey and secretKey, respectively.
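A manifest satisfying both constraints might look roughly like the sketch below, written here with a heredoc. The key name `medusa_s3_credentials` and the INI-style credentials layout are assumptions based on how K8ssandra 1.x expects Medusa credentials; the access key and secret key values are the ones passed to the MinIO Helm install in this tutorial.

```shell
# Sketch of minio-medusa-secret.yaml.
# Assumption: Medusa reads its credentials from a key named medusa_s3_credentials.
cat > minio-medusa-secret.yaml <<'EOF'
apiVersion: v1
kind: Secret
metadata:
  name: medusa-bucket-key
type: Opaque
stringData:
  medusa_s3_credentials: |-
    [default]
    aws_access_key_id = miniok8ssandra_key
    aws_secret_access_key = miniok8ssandra_secret
EOF
```

Using `stringData` rather than `data` lets you write the credentials in plain text and leaves the base64 encoding to Kubernetes.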

- Apply the minio-medusa-secret.yaml resource in the cluster

kubectl apply -f minio-medusa-secret.yaml

- Verify with

kubectl get secret medusa-bucket-key

Installing

To install, add the K8ssandra Helm repo and update Helm:

- Add the repo

helm repo add k8ssandra https://helm.k8ssandra.io/stable

- Verify with

helm repo list

- Run helm repo update to pull the latest updates

Install Apache Cassandra using K8ssandra. Before you do, ensure the secret medusa-bucket-key exists.

helm install -f k8ssandra.yaml k8ssandra k8ssandra/k8ssandra

The above command should do the following:

- Create a Cassandra cluster named k8ssandra.

- Deploy the cluster to the default Kubernetes namespace.

Wait at least 5 minutes for the cluster to come fully online. If you run kubectl get pods, you would expect to see all of them in the Running state.

If a pod such as k8ssandra-dc1-default-sts-0 stays stuck instead, let’s take this opportunity to explore debugging methodology. This is a little outside the scope of an introductory tutorial, but it will be helpful in the long run.

Debugging

Let’s describe the pod to see what it is stuck on:

kubectl describe pod k8ssandra-dc1-default-sts-0

The describe output shows that the medusa container is having issues. Let’s look at its logs to see if we can determine what the issue is:

kubectl logs k8ssandra-dc1-default-sts-0 -c medusa

Ah hah! It looks like there is an issue connecting to the MinIO service. That makes sense, because we do not have any service named minio running in the cluster.

Tip: If you run into any issues with backups/restores and need additional debug info, the medusa container is where you would find most of the details.
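To find out which container in a pod is the one holding things up without reading the full describe output, a small filter over the container statuses can help. This is a minimal sketch: the function name `not_ready_containers` is hypothetical, and it assumes `kubectl` is configured for the cluster.

```shell
# Hypothetical helper: print the names of containers in a pod that are not ready.
# Usage: not_ready_containers <pod-name>
not_ready_containers() {
  kubectl get pod "$1" \
    -o jsonpath='{range .status.containerStatuses[*]}{.name}{"\t"}{.ready}{"\n"}{end}' |
    awk -F'\t' '$2 != "true" { print $1 }'
}
```

For example, `not_ready_containers k8ssandra-dc1-default-sts-0` prints the container names whose logs are worth checking next with kubectl logs and the -c flag.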

MinIO

As we found out, for the medusa container to come up we need to first get MinIO online. We will use Helm to set up MinIO as well.

Installing

To install, add the MinIO Helm repo and update Helm:

- Add the repo

helm repo add minio https://helm.min.io/

- Verify with

helm repo list

- Run helm repo update to pull the latest updates

Install MinIO using the helm command:

helm install --set accessKey=miniok8ssandra_key,secretKey=miniok8ssandra_secret,defaultBucket.enabled=true,defaultBucket.name=k8ssandra-medusa minio minio/minio

- accessKey and secretKey must match aws_access_key_id and aws_secret_access_key, respectively, in minio-medusa-secret.yaml.

- defaultBucket.name must match bucketName in k8ssandra.yaml.

The above command will do the following:

- Create a deployment and service named minio.

- Deploy MinIO to the default Kubernetes namespace.

If you wait a few minutes and run kubectl get pods, you should see all of them in the Running state. But if you are impatient like me and want to see the medusa container come up quickly, just delete the k8ssandra-dc1-default-sts-0 pod.

kubectl delete pod k8ssandra-dc1-default-sts-0

After about a minute or so you should see all the pods Running:

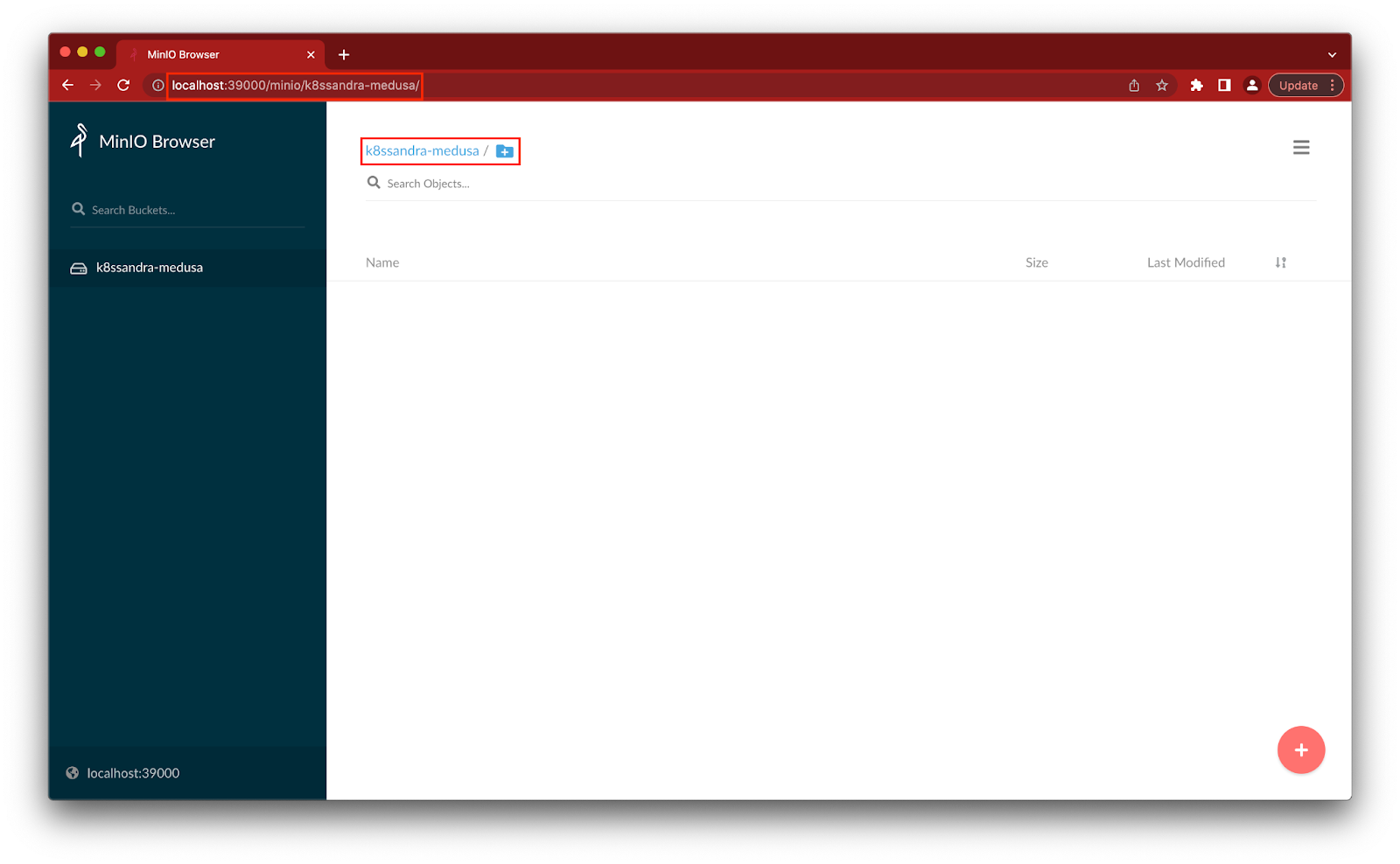

Verify Bucket

How do we check if Medusa was able to connect to MinIO? Well there is a very simple way. If we port forward the MinIO console and log in we should see a bucket named k8ssandra-medusa.

kubectl port-forward service/minio 39000:9000

- Using a browser, go to http://localhost:39000

- Log in with accessKey and secretKey for username and password, respectively.

- If you see the bucket, then Medusa was able to successfully connect to MinIO.

Backup

In order to test the backup there are a few prerequisites:

- cqlsh: The utility that is used to interact with Cassandra clusters.

- Test data which we can hydrate, delete and restore to show the capabilities.

Install cqlsh

In order to interact with Cassandra we’ll use a utility called cqlsh.

Install cqlsh using pip

pip3 install cqlsh

Get Cassandra Superuser credentials

kubectl get secret k8ssandra-superuser -o jsonpath="{.data.username}" | base64 --decode ; echo

kubectl get secret k8ssandra-superuser -o jsonpath="{.data.password}" | base64 --decode ; echo

Open port-forward for cqlsh to access our Cassandra cluster

kubectl port-forward svc/k8ssandra-dc1-stargate-service 8080 8081 8082 9042

Log in to cqlsh

cqlsh -u <username> -p <password>

Hydrate data

Once you are able to log in, you should see a prompt such as below:

<username>@cqlsh>

Copy and paste the following blob into the REPL then run it
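Any small dataset will do for this test. As a minimal sketch, the heredoc below writes a CQL file that creates the medusa_test keyspace and a users table, then inserts four rows. The column names, sample values, and replication settings are made up for illustration and are not taken from the original setup; only the keyspace and table names come from the queries used in this tutorial.

```shell
# Sketch of test data for medusa_test.users.
# Assumption: columns (email, name, state) and all row values are illustrative only.
# Assumption: SimpleStrategy with replication_factor 1 is acceptable for a test cluster.
cat > medusa_test.cql <<'EOF'
CREATE KEYSPACE IF NOT EXISTS medusa_test
  WITH replication = {'class': 'SimpleStrategy', 'replication_factor': 1};

CREATE TABLE IF NOT EXISTS medusa_test.users (
  email text PRIMARY KEY,
  name text,
  state text
);

INSERT INTO medusa_test.users (email, name, state) VALUES ('alice@example.com', 'Alice', 'TX');
INSERT INTO medusa_test.users (email, name, state) VALUES ('bob@example.com', 'Bob', 'VA');
INSERT INTO medusa_test.users (email, name, state) VALUES ('carol@example.com', 'Carol', 'CA');
INSERT INTO medusa_test.users (email, name, state) VALUES ('dave@example.com', 'Dave', 'CT');
EOF
```

You can paste the file's contents into the REPL, or pass the file to cqlsh with its `-f` option alongside the `-u` and `-p` credentials.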

Once the above blob has been run let’s verify the data has been entered properly

SELECT * FROM medusa_test.users;

Now we can finally back up to MinIO using the following command. Because this is a small data set, it shouldn’t take too long.

helm install demo-backup k8ssandra/backup --set name=backup1,cassandraDatacenter.name=dc1

Be sure to note the name of the backup, which is required later in this tutorial for restoring.

Verify the status of the backup. If the below command returns a timestamp, it means the backup was successful.

kubectl get cassandrabackup backup1 -o jsonpath={.status.finishTime}

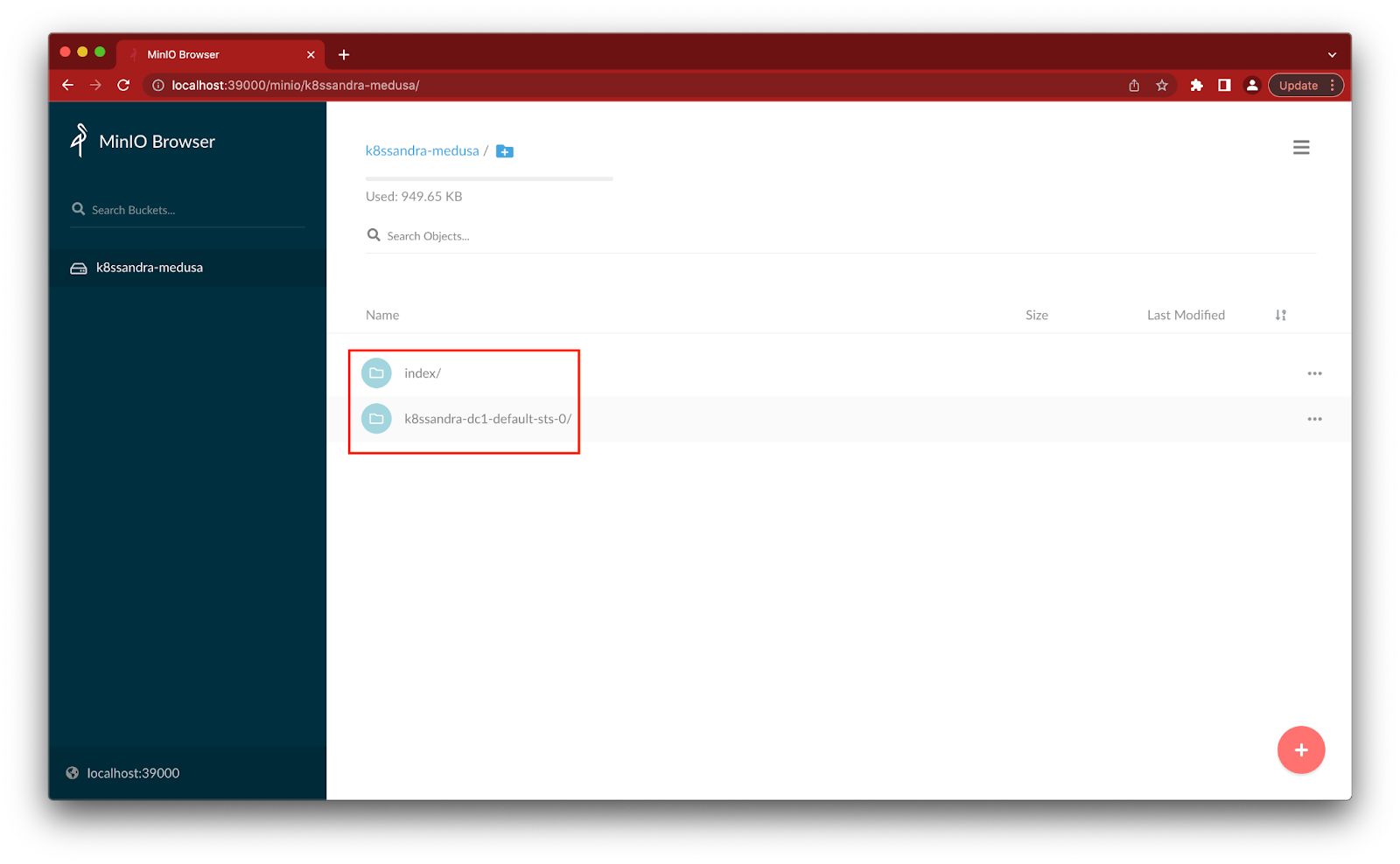
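Rather than rerunning the status command by hand, you could poll until the timestamp appears. The function below is a minimal sketch under a few assumptions: the name `wait_for_finish_time` is hypothetical, the default of 30 attempts spaced 10 seconds apart is arbitrary, and it relies only on the same `.status.finishTime` field queried above.

```shell
# Hypothetical helper: poll a resource until .status.finishTime is set.
# Usage: wait_for_finish_time <kind> <name> [attempts] [sleep_seconds]
# Prints the finish time and returns 0 on success; returns 1 on timeout.
wait_for_finish_time() {
  kind="$1"
  name="$2"
  attempts="${3:-30}"
  delay="${4:-10}"
  i=0
  while [ "$i" -lt "$attempts" ]; do
    finish="$(kubectl get "$kind" "$name" -o jsonpath='{.status.finishTime}')"
    if [ -n "$finish" ]; then
      echo "$finish"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "timed out waiting for $kind/$name" >&2
  return 1
}
```

For the backup in this tutorial, that would be `wait_for_finish_time cassandrabackup backup1`. Because the resource kind is a parameter, the same loop should work for any resource that exposes a finishTime in its status.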

You should verify that the backup was created in MinIO console as well:

- Set port forward

kubectl port-forward service/minio 39000:9000

- Go to MinIO Console

http://localhost:39000

Restore

Now that we were able to confirm that the backup was successful, as an SRE/DevOps engineer you would also want to test to ensure you are able to restore it. Test the restore with your entire dataset to ensure you are able to restore everything.

Delete data

While still in the cqlsh REPL, delete the existing data.

TRUNCATE medusa_test.users;

Verify the data has in fact been removed:

SELECT * FROM medusa_test.users;

When we had data there were 4 rows and now we have 0.

Fetch Backup

Similar to how we ran the backup, we’ll use helm to restore as well

helm install demo-restore k8ssandra/restore --set name=restore-backup1,backup.name=backup1,cassandraDatacenter.name=dc1

In the above command backup.name should match the name of the original backup.

Check the status of the restore:

kubectl get cassandrarestore restore-backup1 -o jsonpath={.status}

When you see finishTime in the output, the restore has completed. Another way you can verify this is by getting the list of users again:

SELECT * FROM medusa_test.users;

Conclusion

There you go! Let’s recap what we did:

- Using K8ssandra we deployed Cassandra to Kubernetes

- We deployed MinIO object storage

- We successfully backed up and restored the data in Apache Cassandra to and from MinIO object storage.

You can take this one step further and perform a disaster recovery scenario where you can bring up a new cluster from scratch, connect Medusa to MinIO, and start restoring from a previously backed up copy of the data. Give it a try and let us know how it goes!

If you have any questions regarding how to set this up, be sure to join our Slack channel.