Western Digital OpenFlex Data24 Performance Testing

We recently benchmarked MinIO S3 performance on the Western Digital OpenFlex Data24 using the WARP benchmark tool.

Western Digital’s OpenFlex Data24 NVMe-oF Storage Platform extends the high performance of NVMe flash to shared storage. It provides low-latency sharing of NVMe SSDs over a high-performance Ethernet fabric to deliver similar performance to locally attached NVMe SSDs. Industry-leading connectivity, using Western Digital RapidFlex network adapters, allows up to six hosts to be attached without a switch. This high density and capacity storage array is built to deliver high availability and enterprise-class reliability. You can learn more about the OpenFlex Data24, including configuration and pricing with MinIO, on our Reference Hardware page.

The OpenFlex Data24 was configured with two namespaces per NVMe SSD, an MTU of 2200 and all six 100 GbE fabric ports using a single subnet. This was done to provide 8 drives per server, over a single 100 GbE fabric port/IP.

The results of our performance tests were, quite simply, astounding.

We ran the WARP S3 benchmark to measure READ/GET and WRITE/PUT performance of MinIO using the OpenFlex Data24. WARP is an open source S3 performance benchmark tool developed and maintained by MinIO.

We measured 54.76 GB/sec average read throughput (GET) and 24.69 GB/sec average write throughput (PUT) in our testing.

This blazing performance is why MinIO is frequently used to deliver resource-intensive workloads such as advanced analytics, AI/ML and other modern, cloud-native applications. This would be an excellent hardware choice for replacing an HDFS data lake, building an internal S3 replacement when repatriating data or building an internal object-storage-as-a-service on Kubernetes to support DevOps teams.

Let’s dive into details.

Architecture

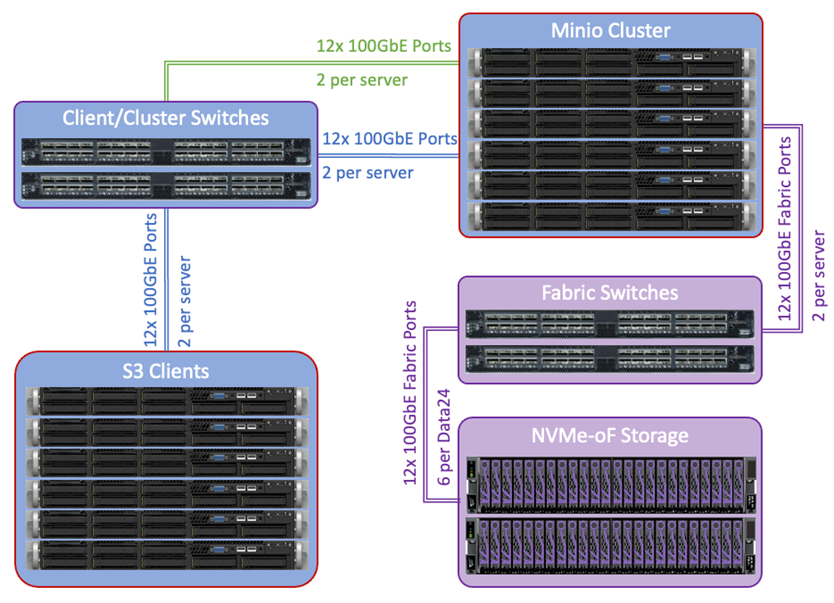

Our test environment consisted of six MinIO object storage servers connected to one OpenFlex Data24 through a Mellanox SN2700 fabric switch. Both the switch and servers were configured following the Western Digital Lossless Best Practice Guide (available from Western Digital sales). The latest release of MinIO (2022-02-14) was installed and configured following standard setup procedures, plus additional per-server configuration to enable lossless networking and to connect to the OpenFlex Data24 devices on boot before the MinIO services start.

To closely replicate a real customer deployment, we paired the storage cluster (MinIO servers and OpenFlex Data24) with six load generation clients running the WARP S3 benchmarking tool to generate S3 traffic and measure performance. Each client was provisioned with CentOS 8.3.2011, Mellanox ConnectX-5 100 GbE network cards, an AMD EPYC 7542 CPU, and 512 GB RAM.

Everything was connected via 100 Gbps Ethernet, with all networks physically segmented for maximum availability and performance.

System Evaluation

Each of the 6 MinIO server nodes was equipped with CentOS 8.3.2011, Mellanox ConnectX-6 100 GbE network cards, an Intel Xeon Gold 6354 CPU, and 512 GB RAM. Each server was provided with 8 NVMe drives residing in the OpenFlex Data24.

We ran dperf and fio to determine raw drive performance for each server. The dperf results indicate a maximum of 9.2 GB/sec for READ and 7.4 GB/sec for WRITE throughput, and the fio results indicate a maximum of 8.56 GB/sec for READ and 6.97 GB/sec for WRITE throughput. Based on these results, the calculated theoretical maximum for this 6-node MinIO cluster is 52.2 GB/sec (dperf) or 51.36 GB/sec (fio) for GET and 22.2 GB/sec (dperf) or 20.91 GB/sec (fio) for PUT operations. Note that in these calculations, the raw WRITE throughput was halved for the cluster estimates to account for data parity overhead.
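
The cluster estimates can be reproduced with a few lines of Python. This is a minimal sketch rather than the method used in the test report: the function name and the `parity_factor` parameter are illustrative, and the factor of 0.5 for writes is an assumption inferred from the published PUT figures.

```python
def cluster_estimate(per_server_gb_s: float, nodes: int = 6,
                     parity_factor: float = 1.0) -> float:
    """Scale a per-server throughput figure (GB/sec) to the whole cluster.

    parity_factor is a hypothetical knob: 1.0 leaves reads untouched,
    0.5 halves writes to approximate data parity overhead.
    """
    return round(per_server_gb_s * nodes * parity_factor, 2)


# Per-server figures quoted above (GB/sec).
FIO_READ, FIO_WRITE = 8.56, 6.97
DPERF_WRITE = 7.4

fio_get = cluster_estimate(FIO_READ)                       # fio-based GET estimate
fio_put = cluster_estimate(FIO_WRITE, parity_factor=0.5)   # fio-based PUT estimate
dperf_put = cluster_estimate(DPERF_WRITE, parity_factor=0.5)

print(f"fio GET estimate:   {fio_get} GB/sec")
print(f"fio PUT estimate:   {fio_put} GB/sec")
print(f"dperf PUT estimate: {dperf_put} GB/sec")
```

Keeping the parity adjustment as a parameter makes it easy to try other assumptions about write overhead for a different erasure coding layout.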

A standard MinIO object storage configuration was set up with both a client and a cluster network. The client network services IO requests from clients and applications. The cluster network is for replicating or erasure encoding data and distributing it to storage nodes. For this solution, which incorporates the OpenFlex Data24, we required an additional network to support the NVMe-oF storage fabric. The key to achieving performance is to make all network segments as symmetrical as possible. To that end, we recommend using 100 GbE networking across the entire solution.

In virtually all cases with MinIO, the network is the bottleneck, but not here because of the additional 100 GbE network used to spread data across the cluster. As a result, MinIO was able to take full advantage of the available underlying server and storage hardware performance. Tests were conducted on a 100 Gb/sec network, which gave each node a maximum available bandwidth of 12.5 GB/sec (8 Gbit = 1 GByte, so 100 Gbit/sec equates to 12.5 GByte/sec). So, the aggregate bandwidth for the six nodes should allow up to 75 GB/sec (600 Gb/sec) for GET and 37.5 GB/sec (300 Gb/sec) for PUT operations. This lends more evidence that the idealized base deployment configuration is the recommended configuration offering twice the number of drive paths for each node in the MinIO cluster.
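
The bit-to-byte conversion behind these network ceilings is easy to check. The sketch below uses illustrative names and assumes decimal units (1 GByte = 8 Gbit) with no allowance for protocol overhead, so the results are upper bounds rather than achievable throughput.

```python
BITS_PER_BYTE = 8
LINK_GBIT_PER_SEC = 100   # one 100 GbE port per node
NODES = 6


def gbit_to_gbyte(gbit_per_sec: float) -> float:
    """Convert a line rate in Gbit/sec to GByte/sec."""
    return gbit_per_sec / BITS_PER_BYTE


per_node = gbit_to_gbyte(LINK_GBIT_PER_SEC)               # one link
get_ceiling = gbit_to_gbyte(LINK_GBIT_PER_SEC * NODES)    # 600 Gb/sec aggregate
put_ceiling = gbit_to_gbyte(LINK_GBIT_PER_SEC * NODES / 2)  # 300 Gb/sec aggregate

print(f"per node:    {per_node} GB/sec")
print(f"GET ceiling: {get_ceiling} GB/sec")
print(f"PUT ceiling: {put_ceiling} GB/sec")
```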

Results

We ran WARP in multiple configurations (objects ranging in size from 4 KiB to 128 KiB) to measure optimal READ/GET and WRITE/PUT performance. The results, summarized below, are stunning.

This testing maxed out the 8 SSDs per server. A single SSD was capable of 1.07 GB/sec throughput. With 8 SSDs assigned to each MinIO node, we could expect a maximum of about 8.6 GB/sec throughput per node, for a total throughput of about 51.60 GB/sec across the six nodes.

Looking at bandwidth, we recorded a maximum of 51 GiB/sec (54.76 GB/sec) for GET and 22 GiB/sec (23.62 GB/sec) for PUT traffic. These results are consistent with the estimated maximum throughput.
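
Because the figures above mix binary (GiB) and decimal (GB) units, a small conversion helper is handy when comparing them with the GB/sec estimates. This is an illustrative sketch; the function name is hypothetical.

```python
GIB = 2 ** 30   # bytes in one gibibyte
GB = 10 ** 9    # bytes in one gigabyte


def gib_to_gb(gib: float) -> float:
    """Convert gibibytes to gigabytes, rounded to two decimals."""
    return round(gib * GIB / GB, 2)


get_gb = gib_to_gb(51)   # GET bandwidth recorded in GiB/sec
put_gb = gib_to_gb(22)   # PUT bandwidth recorded in GiB/sec

print(f"GET: {get_gb} GB/sec")
print(f"PUT: {put_gb} GB/sec")
```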

As you can see, MinIO on the OpenFlex Data24 outperformed our estimates. What a great surprise!

A Powerful Combination

Object storage performance is a critical requirement for successful cloud-native application development and deployment. Benchmark after benchmark has confirmed that MinIO is, without doubt, the fastest object store on the planet. Combining our highly scalable and performant software with Western Digital’s dense, flexible and fast hardware enables you to field one of the fastest and most user-friendly object storage environments possible.

Performance results like those reported here further underscore the suitability of object storage to meet demanding use cases in streaming analytics, AI/ML and data lake analytics.

Download the full report, OpenFlex Data24 and MinIO Object Storage Solution Test Report, for more details, including specific steps for you to replicate these tests on your own hardware or cloud instances.

If you want to discuss the results in detail or ask questions about benchmarking your environment, please join us on Slack or email us at hello@min.io.