Agentic AI with Model Context Protocol and AIStor

The Model Context Protocol (MCP) from Anthropic represents a unique approach to agentic AI tooling compared to many of its competitors. Rather than building a framework (software that calls your code) or a library (software that your code calls), MCP focuses on the protocol that the different parts of an agent use to communicate with each other. As a result, the parts of an agent can communicate across disparate environments and even organizational boundaries, allowing for inter-organizational orchestrations.

Another important development in the software industry is that the amount of unstructured data worldwide is growing at an unprecedented rate. This is because the universe is, by its very nature, unstructured: data captured by sensors or perceived by our senses does not arrive as neatly organized tables, rows, and columns that can be given a precise data type. As technology advances, we are becoming increasingly capable of capturing more of the unstructured data that represents the world around us. This data, in turn, is fundamentally changing what software systems are capable of. In the good old days, conventional software systems used only structured data to automate repetitive tasks. Today, modern systems can also use unstructured data to train models that predict (traditional AI), generate new data (generative AI), and potentially take action based on this data (agentic AI).

This post explores how a protocol-based agentic toolkit and the use of AIStor for storing the world's data can be combined to build agents that surpass the capabilities of generative AI. Since agentic AI builds upon the capabilities of generative AI, let’s start with a brief comparison of generative AI and agentic AI.

Comparing Generative AI to Agentic AI

Generative AI utilizes large language models (LLMs) that employ “zero-shot prompting.” In other words, the LLM is asked to create a response as fast as possible using only “top of mind” information, or information readily accessible from parametric memory. For example, let’s say you send a question to an LLM. Essentially, you are asking the LLM to do the following: “Please answer my question start to finish in one pass without using the backspace button, delete button or arrow keys to go back and redo any part of your answer. Do not break down my question into smaller tasks and do not review your answer for accuracy.” Zero-shot prompting is sometimes referred to as asking the LLM to think fast.

Remarkably, because they are machines, LLMs can generate coherent and organized responses from zero-shot prompts. If the human mind tried to communicate in this fashion, the result would be a stream of words that made little sense. Think about your own thought process when answering a question. You break the original question down into smaller questions that are easier to answer, combine those answers into an answer to the original question, and then, just before you speak, review the answer and possibly revise it. All humans think this way; no one is so smart that their mind can operate in a zero-shot fashion and produce a result as good as a planned and revised response.

Agentic AI is a method for giving LLMs access to an organization’s data so that they can build and execute the logic required to go beyond zero-shot prompting. This can be done by providing a “few-shot” prompt to an LLM that has been fine-tuned on an organization’s proprietary data and business logic. Below is an example of a “few-shot” prompt that could be applied to the same hypothetical question presented earlier. This is also known as letting the LLM think slowly.

Few-shot prompt example:

- Break down my question into smaller questions.

- Write a draft response to each smaller question.

- Identify which smaller questions need revision or more research.

- Do any additional research that is needed.

- Revise your answers.

- Put everything together.

- Review your final response.

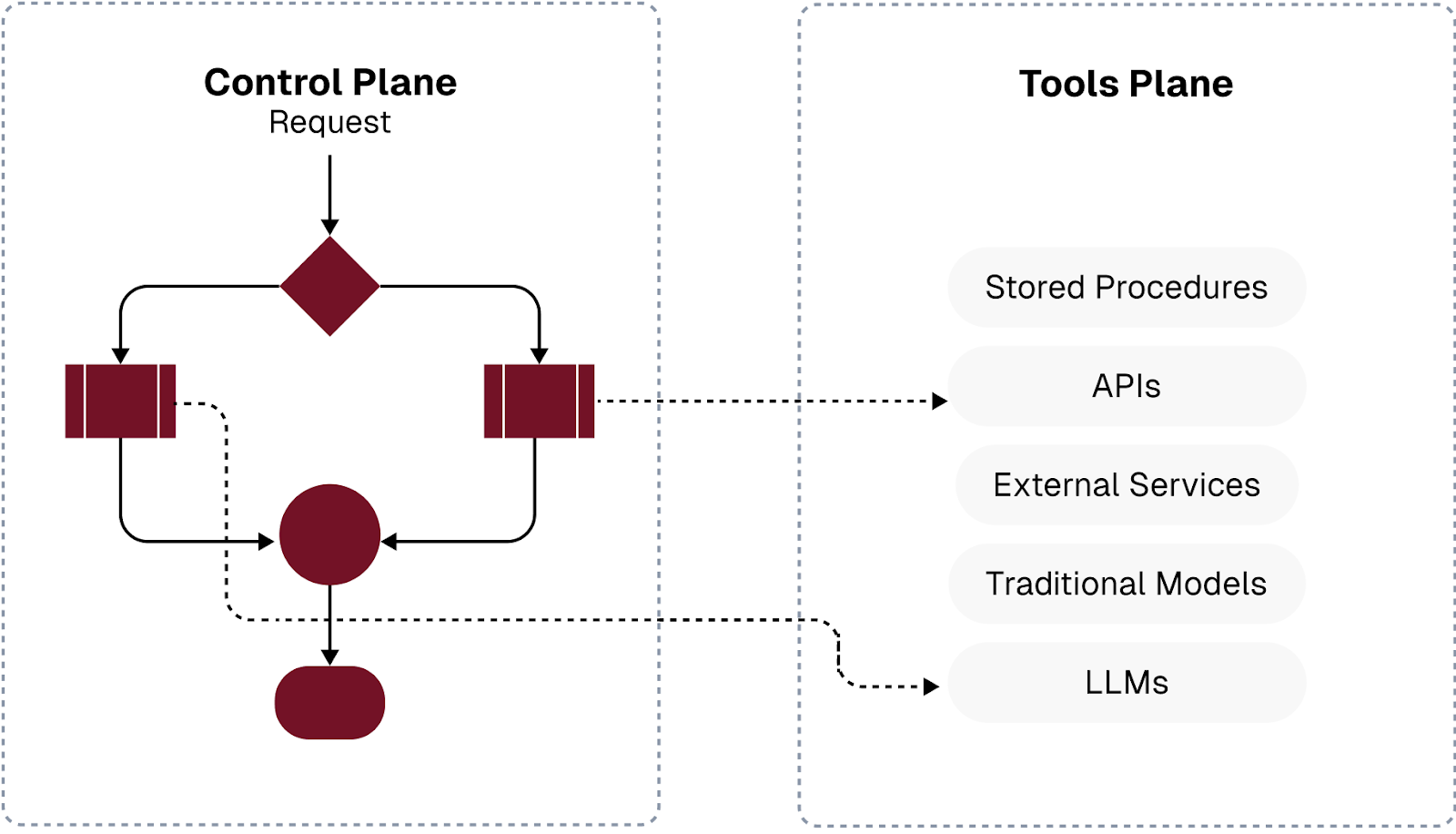

The assumption with the tasks above is that the smaller questions can be paired with an internal tool that the controlling LLM is aware of, and the responses (or answers) are accompanied by a certainty grade indicating how confident the tool is in the answer. The process outlined above is commonly broken down into a control plane (the controlling LLM) and a tools plane (the additional tools needed to further educate a controlling LLM for the task at hand). For more information on how this works, check out The Architect’s Guide to Understanding Agentic AI. A logic diagram of the control plane and the tools plane is shown below.

Source: The Architect’s Guide to Understanding Agentic AI
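To make the control plane and tools plane split more concrete, here is a minimal Python sketch, assuming each tool returns an answer together with a confidence score between 0.0 and 1.0. The class `ControlPlane`, its `register`/`run` methods, the 0.7 threshold, and the stubbed tools are all hypothetical illustrations, not part of MCP or any particular agent framework. In a real agent, the controlling LLM would produce the breakdown into sub-questions itself; here the plan is passed in by hand.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# A tool answers one sub-question and returns (answer_text, confidence 0.0-1.0).
ToolFn = Callable[[str], Tuple[str, float]]


@dataclass
class Answer:
    sub_question: str
    tool: str
    text: str
    confidence: float


class ControlPlane:
    """Toy control plane (hypothetical): routes sub-questions to tools in the
    tools plane and separates confident answers from ones that need more work."""

    def __init__(self, threshold: float = 0.7) -> None:
        self.tools: Dict[str, ToolFn] = {}
        self.threshold = threshold  # minimum confidence to accept an answer

    def register(self, name: str, fn: ToolFn) -> None:
        self.tools[name] = fn

    def run(self, plan: List[Tuple[str, str]]) -> Tuple[List[Answer], List[Answer]]:
        """plan is a list of (sub_question, tool_name) pairs. In a real agent,
        the controlling LLM would produce this breakdown itself."""
        accepted: List[Answer] = []
        needs_review: List[Answer] = []
        for sub_question, tool_name in plan:
            text, confidence = self.tools[tool_name](sub_question)
            answer = Answer(sub_question, tool_name, text, confidence)
            if confidence >= self.threshold:
                accepted.append(answer)
            else:
                needs_review.append(answer)  # candidate for revision or more research
        return accepted, needs_review


# Usage with two stubbed tools.
cp = ControlPlane(threshold=0.7)
cp.register("inventory", lambda q: ("42 units in stock", 0.95))
cp.register("forecast", lambda q: ("demand may rise next quarter", 0.4))
accepted, needs_review = cp.run([
    ("How many units are in stock?", "inventory"),
    ("Will demand rise next quarter?", "forecast"),
])
print([a.tool for a in accepted])      # prints ['inventory']
print([a.tool for a in needs_review])  # prints ['forecast']
```

The low-confidence answers are exactly the ones the few-shot prompt asks the LLM to revisit ("revision or more research"), which is why the sketch returns them separately instead of discarding them.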

Next, let’s look at the advantages of a standard protocol for connecting the different moving parts of an agent.

Interoperability with MCP

An advantage of a protocol-based approach for connecting the control plane and the tools plane shown above is interoperability. Teams within the same organization can reuse tools in the tools plane that are written in different programming languages and run in disparate environments. This is analogous to the early days of distributed computing, when components could only talk to each other if they were implemented in the same programming language. For example, a Java component could talk to another Java component, but not to a C# component. Eventually, the industry standardized on REST as a way to represent data and requests for an action. Components adopting REST became services, and today a service written in any language can communicate with any other service. MCP will provide the same benefit to agentic AI. (Rather than REST, MCP encodes its messages as JSON-RPC 2.0 and carries them over transports such as standard input/output for local servers or HTTP for remote ones.) Services in the control plane and the tools plane will be interoperable regardless of implementation details and their runtime environment.
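Because the contract is the message format rather than a language binding, any client that can produce JSON can call a tool. The sketch below is a toy, in-process dispatcher for two MCP-style JSON-RPC 2.0 methods, `tools/list` and `tools/call`, written with only the Python standard library. It is not the official MCP SDK: it omits the `initialize` handshake, capability negotiation, and the transport layer, and the `count_objects` tool and its data are hypothetical stubs.

```python
import json
from typing import Any, Dict


def count_objects(arguments: Dict[str, Any]) -> str:
    """Hypothetical tool: report how many objects a bucket holds (stubbed data)."""
    fake_counts = {"product-images": 1200, "sensor-logs": 56000}
    return str(fake_counts.get(arguments["bucket"], 0))


# Registry of tools this toy server exposes; input schemas use JSON Schema.
TOOLS: Dict[str, Dict[str, Any]] = {
    "count_objects": {
        "description": "Count the objects in a bucket (stubbed)",
        "inputSchema": {
            "type": "object",
            "properties": {"bucket": {"type": "string"}},
            "required": ["bucket"],
        },
        "handler": count_objects,
    },
}


def _reply(req_id: Any, **body: Any) -> str:
    """Wrap a result or error in a JSON-RPC 2.0 response envelope."""
    return json.dumps({"jsonrpc": "2.0", "id": req_id, **body})


def handle(raw: str) -> str:
    """Handle one JSON-RPC 2.0 request string and return the response string."""
    req = json.loads(raw)
    req_id, method = req.get("id"), req.get("method")
    params = req.get("params", {})
    if method == "tools/list":
        tools = [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]
        return _reply(req_id, result={"tools": tools})
    if method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:  # unknown tool: reported as a protocol-level error
            return _reply(req_id, error={"code": -32602, "message": "Unknown tool"})
        text = tool["handler"](params.get("arguments", {}))
        return _reply(req_id, result={"content": [{"type": "text", "text": text}], "isError": False})
    return _reply(req_id, error={"code": -32601, "message": "Method not found"})


# Usage: a client in any language only needs to produce this JSON.
request = json.dumps({
    "jsonrpc": "2.0", "id": 1, "method": "tools/call",
    "params": {"name": "count_objects", "arguments": {"bucket": "sensor-logs"}},
})
print(handle(request))
```

The point of the sketch is the shape of the exchange: a Java, Go, or TypeScript client could send the same request string and parse the same response, which is the interoperability argument made above.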

Another benefit of a protocol-based approach is the possibility of open-source tool repositories. Anthropic has already kicked this off with its open-source repository of MCP servers. All the tools listed in this GitHub repository can participate in an agentic workflow. If other organizations were to create additional repositories of MCP servers, then all of these servers, regardless of where they came from, could be combined in the same agentic workflow.

Unlocking Knowledge within Unstructured Data

Unstructured data is the foundation for training the LLM that makes up the control plane and all the models used as services within the tools plane. Agents need to plan, reason, learn, and act in environments that are not neatly labeled or structured. Below is a breakdown of how unstructured data can be used in agentic AI, organized by dataset type and use case:

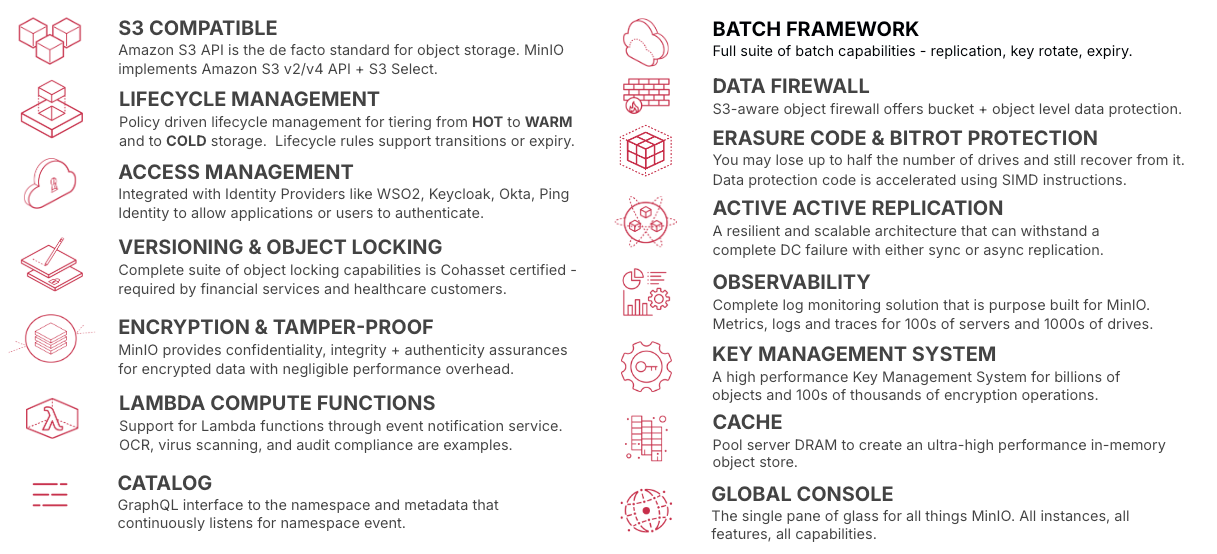

Before agentic AI, saving the above datasets was a “nice to have” component of an organization's overall data infrastructure. With agentic AI, these datasets are a “must-have”: an organization cannot build agents without this data. AIStor is the perfect solution for these datasets. To get started, simply install AIStor and create a bucket for each dataset. As your workloads evolve, consider using AIStor’s enterprise features. To keep only hot data within your primary installation, use lifecycle management to move cold data to cheaper storage. AIStor’s replication can provide disaster recovery for mission-critical data. For improved network performance, consider using S3 over RDMA. Customers using GPUs for model training and inference will benefit from GPUDirect Storage, which sends data directly to GPUs, offloading the CPU.
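The "one bucket per dataset" setup and a lifecycle rule for cold data can be sketched in a few lines of Python. This is a minimal sketch under several assumptions: the dataset names, the helper functions (`bucket_name`, `ensure_buckets`, `lifecycle_xml`), the 30-day cutoff, and the tier name `COLD-TIER` are hypothetical, and the bucket-name check is a simplified version of the S3 naming rules. `ensure_buckets` only relies on `bucket_exists()` and `make_bucket()`, which the MinIO Python SDK's `Minio` client provides; an in-memory stand-in is used here so the example runs without a server. `lifecycle_xml` emits a standard S3 lifecycle configuration document with a single transition rule.

```python
import re
import xml.etree.ElementTree as ET
from typing import Iterable, List

# Simplified S3 bucket naming rules: 3-63 characters of lowercase letters,
# digits, dots and hyphens, beginning and ending with a letter or digit.
_BUCKET_RE = re.compile(r"^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$")


def bucket_name(dataset: str) -> str:
    """Derive a bucket name from a human-readable dataset name (hypothetical helper)."""
    name = re.sub(r"[^a-z0-9]+", "-", dataset.lower()).strip("-")
    if not _BUCKET_RE.match(name):
        raise ValueError(f"cannot derive a valid bucket name from {dataset!r}")
    return name


def ensure_buckets(client, datasets: Iterable[str]) -> List[str]:
    """Create one bucket per dataset if it does not already exist.
    `client` only needs bucket_exists() and make_bucket()."""
    created: List[str] = []
    for dataset in datasets:
        name = bucket_name(dataset)
        if not client.bucket_exists(name):
            client.make_bucket(name)
            created.append(name)
    return created


def lifecycle_xml(days: int, tier: str, prefix: str = "") -> str:
    """Build an S3 lifecycle configuration that transitions objects older
    than `days` days to the storage tier named `tier`."""
    root = ET.Element("LifecycleConfiguration")
    rule = ET.SubElement(root, "Rule")
    ET.SubElement(rule, "ID").text = f"transition-after-{days}-days"
    ET.SubElement(rule, "Status").text = "Enabled"
    rule_filter = ET.SubElement(rule, "Filter")
    ET.SubElement(rule_filter, "Prefix").text = prefix
    transition = ET.SubElement(rule, "Transition")
    ET.SubElement(transition, "Days").text = str(days)
    ET.SubElement(transition, "StorageClass").text = tier
    return ET.tostring(root, encoding="unicode")


# Usage with an in-memory stand-in for a real client.
class FakeClient:
    def __init__(self) -> None:
        self.buckets = {"product-images"}  # pretend this bucket already exists

    def bucket_exists(self, name: str) -> bool:
        return name in self.buckets

    def make_bucket(self, name: str) -> None:
        self.buckets.add(name)


client = FakeClient()
print(ensure_buckets(client, ["Product Images", "Sensor Logs (raw)"]))  # prints ['sensor-logs-raw']
print(lifecycle_xml(30, "COLD-TIER"))
```

Against a real deployment, `client` would be a `minio.Minio` instance, and the same transition rule could instead be built with the SDK's `minio.lifecycleconfig` classes and applied with `set_bucket_lifecycle`. The tier named in `StorageClass` has to be configured on the server beforehand.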

Art of the Possible

Agentic AI uses artificial intelligence to automate what is impossible to automate with conventional software. Consider a hypothetical factory agent responsible for managing factory equipment, product quality, and customer communication. Here are some of the things such a factory agent could do:

- Scan product images as they come off the assembly line, looking for defects.

- Divert defective products so they are never shipped to customers, where they would cause dissatisfaction.

- For known issues, the factory agent interacts with the equipment to take direct action, adjusting equipment settings to correct the problem. This is a “think slow” task. There may be many actions to choose from, which may need to be combined in unique ways to correct the current issue.

- For issues the factory agent cannot fix, the malfunctioning equipment is turned off, and a notification is sent to human repair personnel. This is another example of thinking slowly, where the factory agent must recognize that it is not confident in any of its options.

- Since downtime is costly, it is common for parts to be replaced after they reach a certain lifetime, even if they are not malfunctioning. The factory agent tracks the lifetime of every part within all equipment, efficiently scheduling downtime and human resources. (This is another think-slow task.)

- As products come off the assembly line, the factory agent emails customers to inform them that their orders are on their way.
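The decision flow in the list above can be sketched as a small rule-based function. This is a simplified, hypothetical illustration: the `Inspection` record, the `KNOWN_FIXES` playbook, the action strings, the 0.5 defect threshold, and the 90% part-lifetime margin are all assumptions. In an actual agent, the "think slow" steps (choosing and combining equipment adjustments, planning maintenance) would be handled by the controlling LLM and its tools rather than a fixed lookup table, and `parts_due` only flags parts; it does not schedule downtime or people.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Inspection:
    product_id: str
    machine: str
    defect_score: float          # from a vision model: 0.0 = clean, 1.0 = certainly defective
    issue: Optional[str] = None  # diagnosed cause, if the agent could identify one


# Hypothetical playbook: known issues mapped to sequences of equipment actions.
KNOWN_FIXES: Dict[str, List[str]] = {
    "misalignment": ["pause_conveyor", "recalibrate_arm", "resume_conveyor"],
    "overheating": ["reduce_speed", "increase_cooling"],
}


def decide(insp: Inspection, defect_threshold: float = 0.5) -> List[str]:
    """Return the actions the agent takes for one inspected product."""
    if insp.defect_score < defect_threshold:
        # Good product: let the customer know the order is on its way.
        return [f"email_customer:{insp.product_id}"]
    actions = [f"divert:{insp.product_id}"]  # never ship a defective product
    if insp.issue in KNOWN_FIXES:
        actions += [f"{step}:{insp.machine}" for step in KNOWN_FIXES[insp.issue]]
    else:
        # No fix the agent is confident in: stop the machine and escalate.
        actions += [f"shutdown:{insp.machine}", f"notify_repair:{insp.machine}"]
    return actions


def parts_due(hours_used: Dict[str, float], rated_hours: Dict[str, float],
              margin: float = 0.9) -> List[str]:
    """Parts that have used at least `margin` of their rated lifetime."""
    return sorted(p for p, h in hours_used.items() if h >= margin * rated_hours[p])


print(decide(Inspection("P-1", "press-3", 0.1)))
print(decide(Inspection("P-2", "press-3", 0.9, "misalignment")))
print(decide(Inspection("P-3", "press-3", 0.8, "unknown_vibration")))
print(parts_due({"belt": 950, "motor": 400}, {"belt": 1000, "motor": 5000}))  # prints ['belt']
```

Keeping the escalation branch explicit mirrors the list above: when no known fix applies, the safe default is to stop the equipment and hand the problem to people.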

Conclusion

Anthropic’s Model Context Protocol and the explosion of unstructured data are creating a perfect storm for AI agents to thrive. MCP's interoperability will allow agent services to be connected in ways that would not be possible using programming-language-specific frameworks and libraries. Interoperability will also facilitate open-source repositories of services. At the same time, the growing volume of unstructured data allows new knowledge to flow into agentic workflows. AIStor’s storage features make it easy for organizations to get a quick start and scale as their needs grow.

If you have any questions, please feel free to contact us at hello@min.io or on Slack.