The Catalog’s “IT” Moment and What it Means For Object Storage and AI

In a modern datalake, catalogs serve as the backbone for organizing and querying data efficiently. Recent news stories, including Databricks’s acquisition of Tabular and Snowflake’s open-sourcing of Polaris, have given catalogs an "it" moment. However, the industry is at a crossroads, with diverse implementations creating a fragmented ecosystem. What can be done to ease the division within this community?

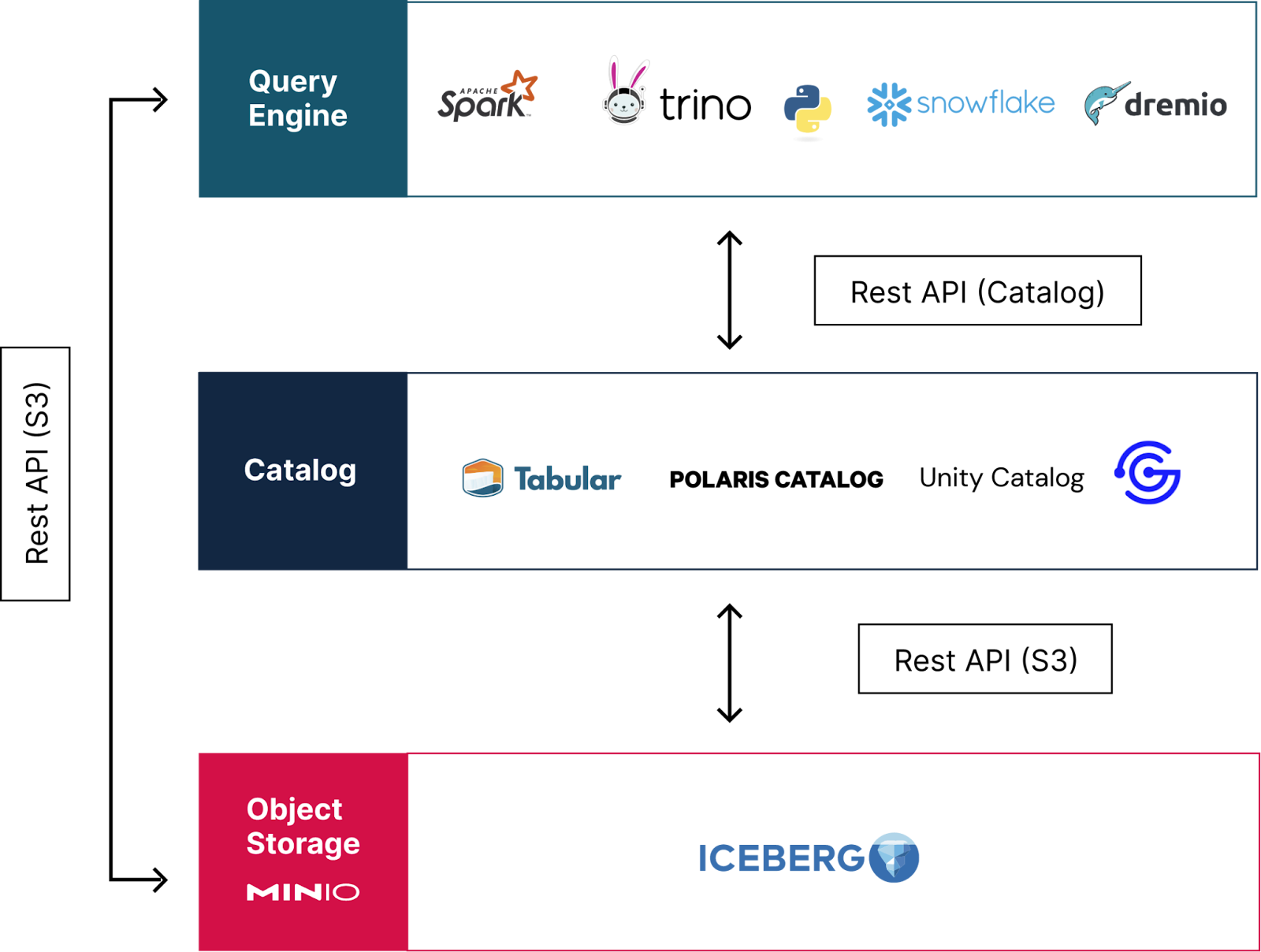

Already many of the vendors are coalescing around Apache Iceberg and are either optimized for or are offering support for Iceberg’s REST catalog API. At MinIO, we are big fans of adopting well-established, industry-standard REST APIs. Our commitment to this approach is evident in our strong compatibility with Amazon’s S3 API.

We have found that rather than stifling innovation, standardization supports it and allows software vendors to focus on the needs of their users rather than the basics. This user focus ends up driving innovation throughout the market. Standardization and its benefits can and should be extended to other components of the modern data stack, such as catalogs. Aligning on an open standard could streamline operations, foster innovation, and enhance the value of modern datalakes particularly for AI and ML workloads.

Defining REST Catalog API

The Apache Iceberg REST catalog API provides a standardized interface to manage metadata and access table data within a modern datalake. This API facilitates seamless integration in any language, including Python, Rust, Java, and more, with any catalog, eliminating the need for custom connectors. The core functions of the REST catalog API include creating, listing, and dropping tables, as well as retrieving and updating table metadata.

By implementing this API, different catalog services can interoperate, allowing users to leverage the strengths of various catalog implementations without being locked into a single vendor or technology. Each of these implementations interacts with the storage layer and provides metadata services, enabling the consistent management of Iceberg tables across different environments.

A proposal was made in April 2024 for the REST catalog to move many operations from the client side to the server side. This change will enhance the REST catalogs’ already impressive extensibility, enabling enterprise features like Nessie’s Git-like catalog versioning. Once adopted, it would establish a standardized interface for all catalogs, fostering innovation and new benefits within the Apache Iceberg framework.

Related: What is a Catalog and Why Do You Need One?

Catalogs in the Modern Datalake

The role of catalogs in the modern datalake is to provide metadata required for executing queries on data. This metadata is essential for managing, organizing, and querying large volumes of data stored in the storage layer of the modern datalake. As with any other layer in the modern datalake, performance, simplicity and the ability to deploy anywhere is critical, foundational functionality. In other words, catalogs work best when the storage layer is optimized for modern datalakes.

This definition of catalogs is not to be mistaken with MinIO Catalog. MinIO Catalog is a metadata management tool that allows administrators to query object namespace metadata using a GraphQL interface. It provides real-time, seamless integration within the MinIO Global Console, enabling efficient data governance, audit, compliance checks, and operational analytics. Key features include automatic indexing, real-time access, and optimization of space utilization without external services. The catalog simplifies complex queries and enhances metadata management across large-scale object storage environments. In other words, catalogs in general operate in the processing layer, helping to optimize query engines, while MinIO Catalog operates on the data in the data lake itself.

Catalogs in the News

Recent developments underscore the industry's shift towards standardized catalog solutions. Databricks has announced that its Unity Catalog now supports Apache Iceberg's REST catalog API. This move is part of Databricks' broader initiative to open-source Unity Catalog. Unity Catalog's open-source nature promises to reduce vendor lock-in and promote interoperability, aligning with the industry’s push towards open standards.

Snowflake's Polaris, another major development, is an open-source implementation of the Iceberg REST catalog API. This move exemplifies the shift towards standardization and interoperability, reinforcing the trend of collaborative innovation in the modern datalake community. Snowflake’s open-source strategy highlights the potential for major vendors to drive the industry towards a more unified and efficient ecosystem.

Gravitino, an emerging open-source catalog project, is also making strides in this direction. Gravitino aims to provide a lightweight and highly extensible catalog solution that fully embraces the Iceberg REST API. By focusing on modularity and ease of integration, Gravitino seeks to cater to diverse data environments, from small startups to large enterprises. Its design prioritizes flexibility, allowing users to incorporate unique features and custom workflows seamlessly. As Gravitino continues to evolve, it is poised to become a key player in the open-source catalog landscape, promoting a more interconnected and efficient data management ecosystem.

Dremio’s Nessie, an open-source catalog that allows for git-like data version control, also just announced support for the Iceberg REST API. Nessie’s unique approach allows users to track changes, create branches, and merge datasets with a level of control and transparency that was previously unavailable in the data space. Their adoption of Iceberg’s REST API lines up with their innovative approach to modern data lakes.

Standardizing Lakehouse Catalogs

The journey towards standardizing modern datalake catalogs via Apache Iceberg’s REST catalog API is both promising and necessary. Chris Riccomini most recently described a vision of future standardization in his Substack, Materialized View. Chris Riccomini’s definition of core and non-core functionalities of modern datalake catalogs is insightful. He argues that when vendors circle around an open standard for core functionalities, they are freed to innovate on vital, but non-core enterprise features.

Chris defines core functionalities as those that provide query engines with the essential metadata required for executing queries—think of this as the fundamental information schema. Non-core functionalities encompass everything else, such as user interfaces, data discovery, lineage, and governance.

By aligning around a common standard and building on the world’s most performant object storage that can be deployed anywhere, the modern datalake community can overcome fragmentation, reduce vendor lock-in, and foster a more collaborative and innovative ecosystem. This vision, while ambitious, is well within reach and holds the potential to transform how we manage and leverage data at scale.

You will achieve the best performance possible, regardless of where you run MinIO, because it takes advantage of underlying hardware (see Selecting the Best Hardware for Your MinIO Deployment) to deliver the greatest possible performance. MinIO has created a comprehensive blueprint for data infrastructure to support exascale AI and other large scale data lake workloads. It is called the MinIO DataPod. Why? Because exascale data is the reality that is common today in today's enterprise.

Learning from LanceDB

While not fully executed to its logical end, a successful example of this approach of vendors focusing on non-core functionality and ceding core-functionality to an open standard comes from LanceDB’s adoption of Apache Arrow in Lance V2. By leveraging Arrow's well-defined types and encodings, LanceDB avoided reinventing the wheel and instead focused on adding value where it mattered most. Other query engines could learn from this approach. Either by rallying around Apache Arrow’s metadata management as a standard or choosing the Iceberg REST API or any other they choose. But, by choosing a standard and sticking with it, query engine vendors can focus on key differentiators that will actually drive innovation in the market.

A New Era for Data Catalogs

The era of fragmented data catalogs is ending. As the industry rallies around open standards, like Apache Iceberg REST API, the focus can shift to innovation and user-centric development. This new era promises not only greater efficiency and interoperability but also the potential to unlock new levels of performance and capability as modern datalakes are increasingly used in AI and Machine Learning applications. MinIO, with its high-performance, scalable object storage, is poised to play a pivotal role in this transformation, helping organizations leverage their data assets more effectively than ever before. Let us know if you have any questions while implementing your AI data infrastructure backbone using MinIO at hello@min.io or on our Slack channel.