MinIO Cache: A Distributed DRAM Cache for Ultra-Performance

As the computing world has evolved and the price of DRAM has plummeted, server configurations often come with 500 GB or more of DRAM. In larger deployments, even those with ultra-dense NVMe drives, the number of servers multiplied by the DRAM on each server adds up quickly, often to several terabytes. That DRAM can be configured as a distributed, shared pool of memory, which is ideal for workloads that demand massive IOPS and throughput.

As a result, we built MinIO Cache for our AIStor users. MinIO Cache allows our customers to take advantage of this shared memory pool by caching frequently accessed objects. MinIO Cache will further improve performance for core AI workloads.

Points of Differentiation

MinIO Cache differentiates itself from generic caching services in that it has knowledge about the data source – which is AIStor.

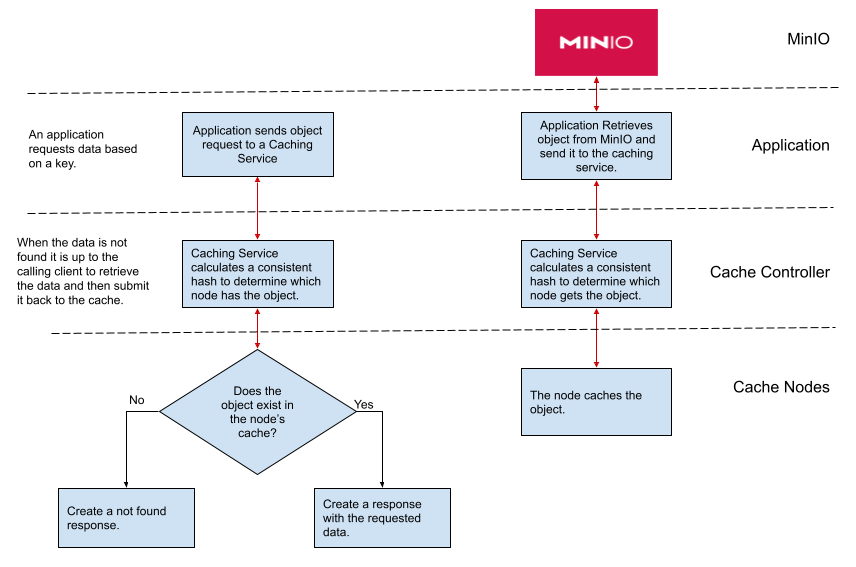

Generic caches are meant to be used with any data source. As a result, if an item is not found in the cache, the best they can do is return a “not found” response. From there, the caller must fetch the data and submit it for caching to the generic cache service.

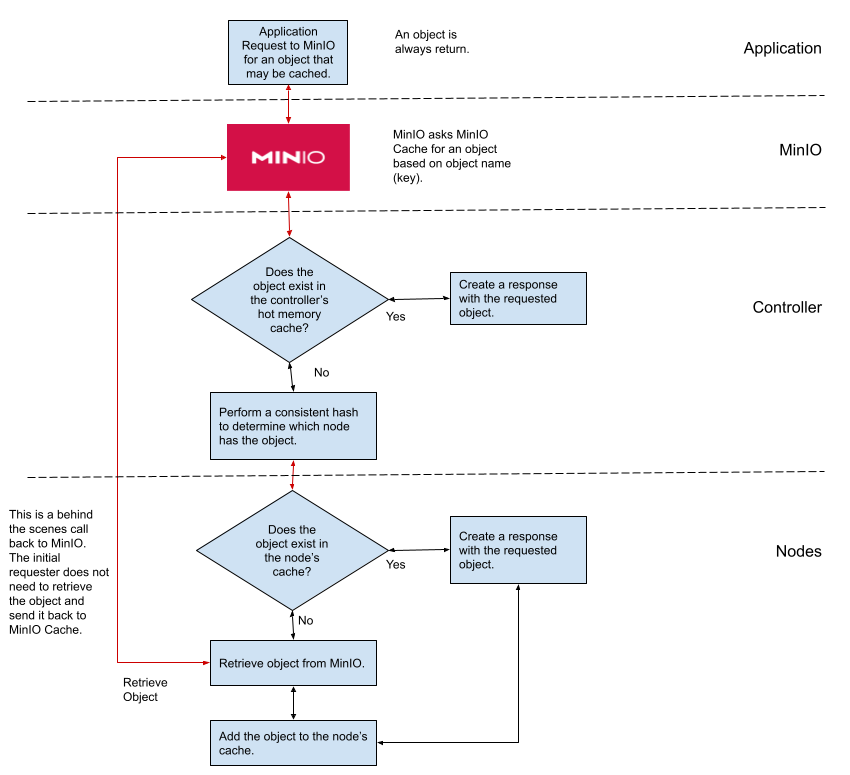

MinIO Cache, on the other hand, was purpose-built to cache MinIO objects. If an object cannot be found in its existing cache of objects, then it will automatically retrieve the object, cache it for future requests, and return the object to the caller.
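To make the contrast concrete, here is a minimal Python sketch of the two patterns. It is not MinIO Cache's implementation; the class names, the `fetch` function, and the in-memory `store` are all hypothetical stand-ins chosen for illustration.

```python
from typing import Callable, Dict, Optional


class GenericCache:
    """A cache with no knowledge of the data source."""

    def __init__(self) -> None:
        self._items: Dict[str, bytes] = {}

    def get(self, key: str) -> Optional[bytes]:
        # On a miss, all it can do is report "not found" (None here).
        return self._items.get(key)

    def put(self, key: str, value: bytes) -> None:
        self._items[key] = value


class ReadThroughCache:
    """A cache that knows its data source and fills itself on a miss."""

    def __init__(self, fetch_from_store: Callable[[str], bytes]) -> None:
        self._items: Dict[str, bytes] = {}
        self._fetch = fetch_from_store  # hypothetical stand-in for the object store

    def get(self, key: str) -> bytes:
        if key not in self._items:
            # Miss: retrieve the object, cache it, then return it.
            self._items[key] = self._fetch(key)
        return self._items[key]


# Hypothetical in-memory "object store", used only for this illustration.
store = {"bucket/model.bin": b"weights"}
store_reads = []


def fetch(key: str) -> bytes:
    store_reads.append(key)  # record every trip to the store
    return store[key]


# Generic cache: the caller has to orchestrate the miss.
generic = GenericCache()
data = generic.get("bucket/model.bin")
if data is None:
    data = fetch("bucket/model.bin")
    generic.put("bucket/model.bin", data)

# Read-through cache: one call, and the object always comes back.
read_through = ReadThroughCache(fetch)
first = read_through.get("bucket/model.bin")   # miss, fetched from the store
second = read_through.get("bucket/model.bin")  # hit, served from memory
```

With the generic cache, the miss-handling logic lives in every caller. With the read-through version, it lives in one place and callers never see a miss.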

Another point of differentiation is MinIO Cache’s use of the Consistent Hashing algorithm for spreading cached object data across a cluster of cache nodes (known as Peers). Consistent Hashing ensures objects can be easily located based on their key. It also aims to give each node a roughly equal share of the data so that one node does not get overloaded while others sit idle. More importantly, however, it spreads objects out in such a way that if a node is added or removed, only minimal shuffling is needed to get the system aligned. Each object key maps to exactly one node: the node that holds the cached object.

I’ll go a little deeper into both of these differentiating features later in this post. For now, let’s get a better understanding of the options available within MinIO Cache and how it integrates with AIStor.

Setting Up MinIO Cache

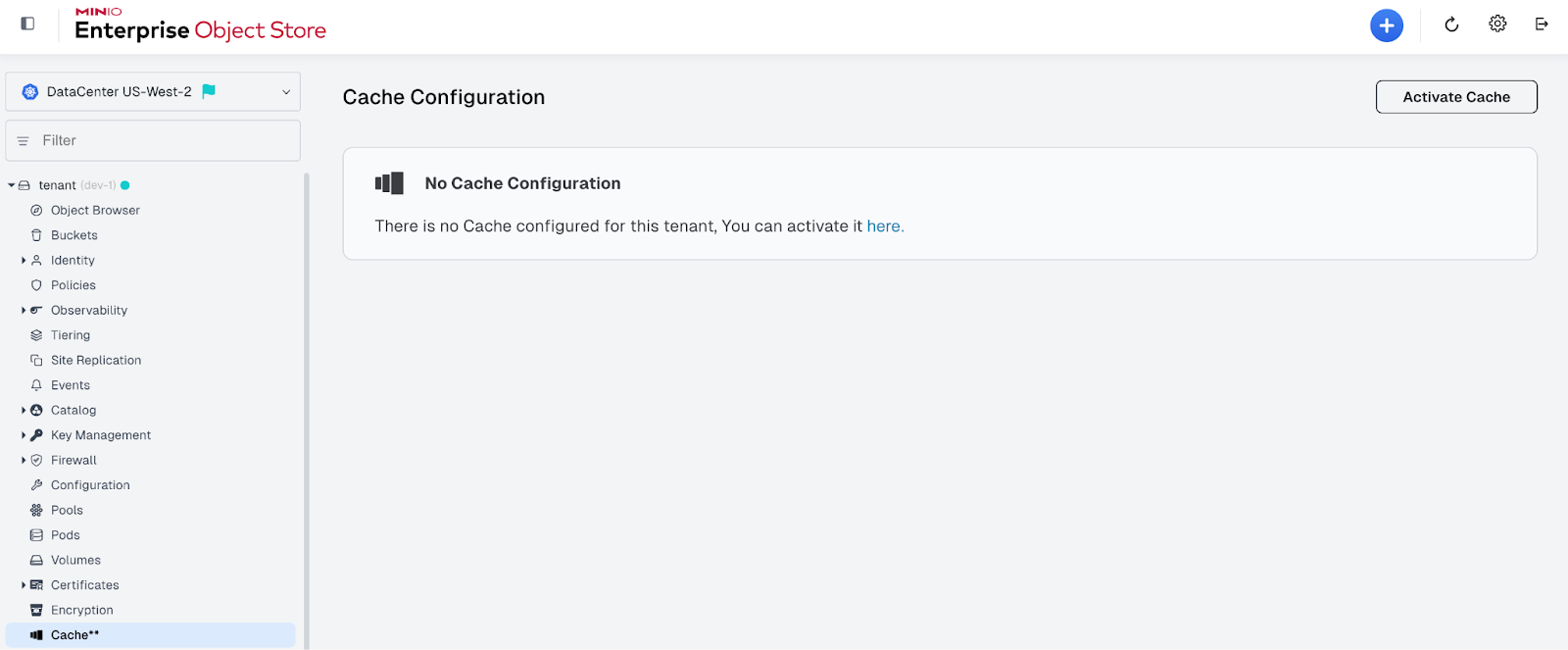

Setting up MinIO Cache is as easy as enabling the service in the MinIO Global Console and specifying the buckets that require caching. If caching has not been previously configured, the Cache dialog in the console will look something like the screenshot below. To activate the cache service, click the Activate Cache button.

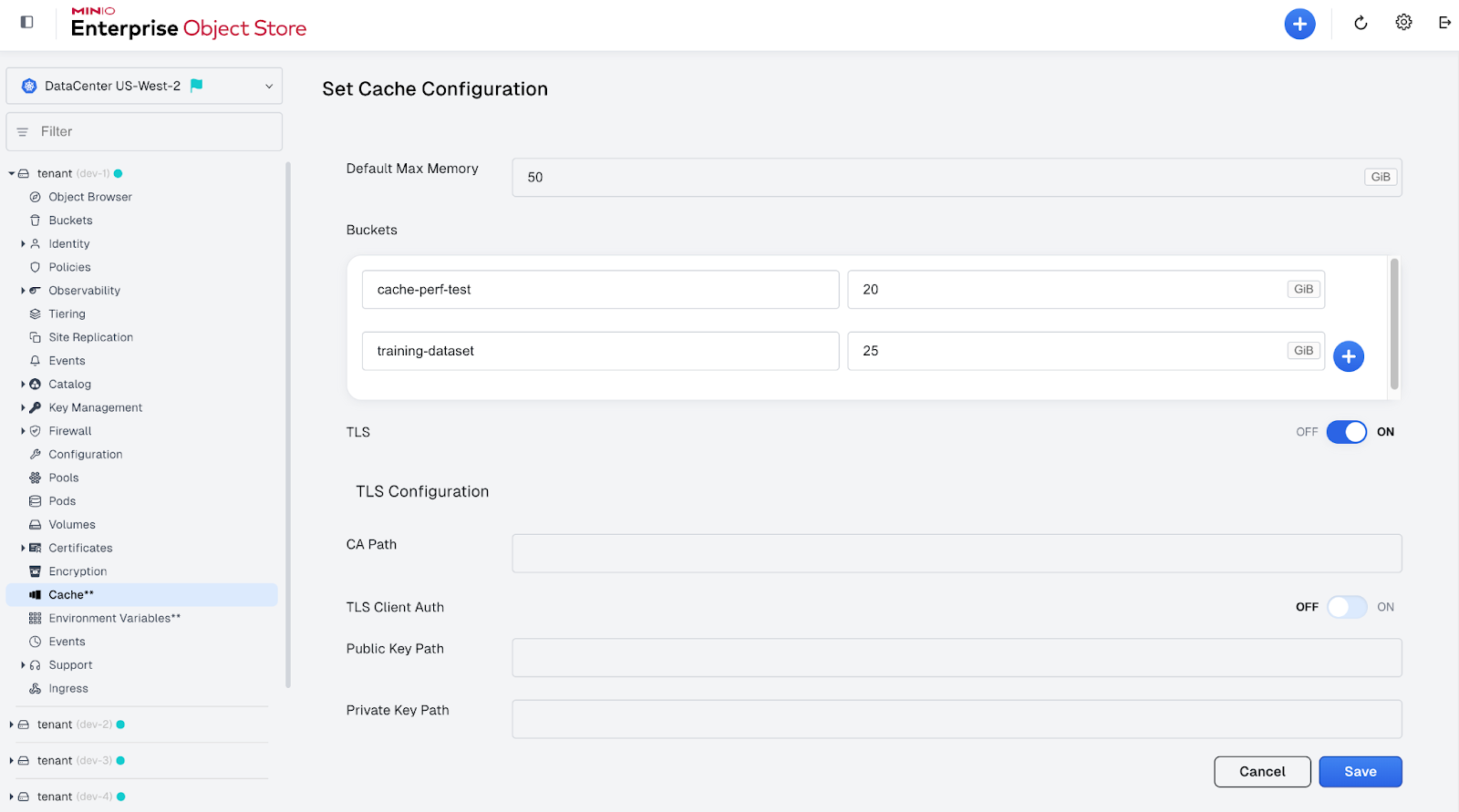

Once the caching service has been enabled, you will need to specify the buckets that will utilize caching. This is shown in the screenshot below. First, specify a maximum memory setting. This is the maximum amount of memory that will be used for caching across all buckets. Next, specify each bucket that requires caching and the amount of memory to be used for each bucket. If you would like the caching endpoint to use TLS, specify the CA path, public key path, and private key path.

Let’s take a closer look at the features that differentiate MinIO Cache from other caching services.

A Cache Built for MinIO

If a generic caching service were to be used with AIStor, then applications requesting objects would need to check the caching service first before calling MinIO. Since MinIO Cache is built specifically for AIStor, it works behind the scenes. Applications and services call MinIO without knowledge of MinIO Cache. If the object requested is cached, then MinIO will retrieve the object from the cache. If it is not cached and it should be, then MinIO will retrieve the object, cache it, and return it to the calling service.

Cache maintenance is more efficient when a cache is built as an extension of the storage solution. For example, if a cached object is updated, then AIStor can invalidate the object in the cache or update the cache. Also, MinIO Cache uses a rolling cache to keep the total size of the cache within the limits specified in the MinIO Cache configuration. If adding a new object is going to cause the cache size to exceed the limit specified, then one or more objects will be removed based on a timestamp that indicates the last time the object was requested.
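The eviction and invalidation behavior described above can be sketched as a small least-recently-requested cache with a byte budget. This is an illustration under assumptions, not MinIO Cache's code: the `LruByteCache` class is hypothetical, and the insertion order of an `OrderedDict` stands in for the last-requested timestamp.

```python
from collections import OrderedDict
from typing import Optional


class LruByteCache:
    """Keeps total cached bytes within max_bytes, evicting the least recently requested."""

    def __init__(self, max_bytes: int) -> None:
        self.max_bytes = max_bytes
        self.used_bytes = 0
        # Oldest request first, most recent request last.
        self._items: "OrderedDict[str, bytes]" = OrderedDict()

    def get(self, key: str) -> Optional[bytes]:
        if key not in self._items:
            return None
        self._items.move_to_end(key)  # mark as most recently requested
        return self._items[key]

    def put(self, key: str, value: bytes) -> None:
        if len(value) > self.max_bytes:
            return  # larger than the whole budget, so never cached
        self.invalidate(key)  # replace any stale copy first
        while self.used_bytes + len(value) > self.max_bytes:
            _, evicted = self._items.popitem(last=False)  # drop the oldest entry
            self.used_bytes -= len(evicted)
        self._items[key] = value
        self.used_bytes += len(value)

    def invalidate(self, key: str) -> None:
        """Remove an object, for example after it was updated in the store."""
        value = self._items.pop(key, None)
        if value is not None:
            self.used_bytes -= len(value)


cache = LruByteCache(max_bytes=10)
cache.put("a", b"1111")
cache.put("b", b"2222")
cache.get("a")           # "a" is now more recently requested than "b"
cache.put("c", b"3333")  # over budget, so "b" is evicted
```

After these calls, `a` and `c` remain cached and `b` has been evicted, because `b` was the object requested least recently.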

To see the benefit of MinIO Cache to an application or service that requires object storage, let’s consider the flow that would occur if a generic caching service were used with MinIO. This is shown below. All network requests are depicted with red arrows and interprocess calls are depicted with black arrows.

Now consider the flow that MinIO Cache uses. This is visualized below. For applications requesting object data, the logic implemented by MinIO Cache is easier to use. Applications using any of the MinIO SDKs make their requests the same way they would if caching were not set up at all. Caching is a behind-the-scenes operation; orchestrating calls to a third-party caching service is not necessary.

Using MinIO Cache also results in fewer network hops when the cache needs to be populated. When a caching service like MinIO Cache has knowledge of the data source, it can retrieve data on behalf of a requester when the data has not been previously cached. Populating the cache this way takes 4 network calls instead of 5, which is 20% fewer network calls. It also means that an object is always returned to the requesting application.

Finally, MinIO Cache makes use of a hot memory cache in the controller for frequently requested objects – thus eliminating the need to make a network request to a node for these objects.

Consistent Hashing for Resiliency

Consistent Hashing is an algorithm for determining which node contains (or should contain) the cached object based on the object’s name (or key), which is passed in with each request to MinIO Cache. This has the advantage of eliminating the need for a large lookup table that has to be searched with each request. Consistent Hashing also spreads objects out across a cluster of nodes in such a way that minimizes the need to move objects when a node fails or when nodes are added (the cluster is scaled out).

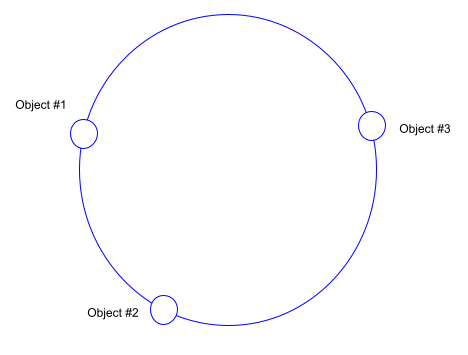

Let’s walk through a simple application of the Consistent Hashing algorithm to get an understanding of how it works and the value it provides. Determining the node on which an object should reside without maintaining a table is straightforward using a hashing algorithm that can turn a string into a number. Once this number is created, Consistent Hashing takes it and maps it to the edge of a circle. The easiest way to do this is to run the hash number through the modulo operator using 360. The modulo operation returns the remainder after dividing one number by another. Example: 370 modulo 360 equals 10. The result always falls between 0 and 359, so every hash value corresponds to an angle somewhere on the circle, from zero up to almost 360 degrees (or 2π radians). When completed against three objects, the circle may look like this:

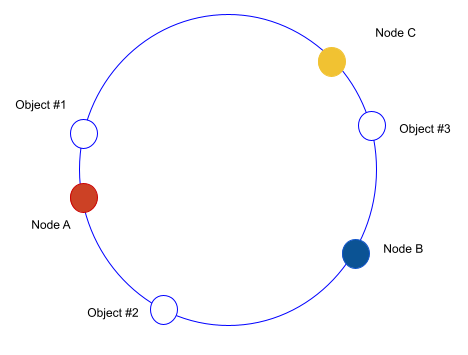
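The mapping from a key to a position on the circle can be sketched in a few lines of Python. The choice of SHA-256 and the example object keys are assumptions made for illustration; the post does not say which hash function MinIO Cache uses.

```python
import hashlib


def hash_number(key: str) -> int:
    """Turn a string into a large, stable integer (SHA-256 is an assumption)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


def angle_for(key: str) -> int:
    """Map a key to a whole-degree position on the circle, 0 through 359."""
    return hash_number(key) % 360


# Hypothetical object keys, used only for illustration.
angles = {
    key: angle_for(key)
    for key in ("photos/cat.png", "logs/app.log", "models/llm.bin")
}
for key, angle in angles.items():
    print(f"{key} -> {angle} degrees")
```

A cryptographic digest is used here instead of Python's built-in `hash()` because string hashes from `hash()` are randomized per process by default, and every node has to compute the same position for the same key.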

Now we do the same thing with the nodes. We place them on the edge of the circle by applying the same algorithm to their IP address or URL. Now, our circle looks like this:

To determine the node an object should reside on, start at the object’s position on the circle and travel counterclockwise until you hit a node.

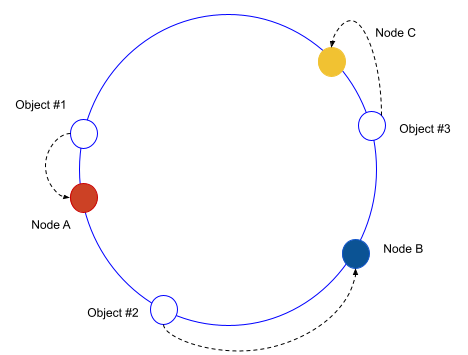
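The lookup step can be sketched as follows. This sketch assumes that traveling counterclockwise means moving toward larger angles (the usual mathematical convention) and wrapping around past 359 degrees. The node names and angles are made up so that the results are easy to follow by hand.

```python
import bisect
from typing import Dict


def owner_of(object_angle: int, node_angles: Dict[str, int]) -> str:
    """Return the first node at or after the object's angle, wrapping around the circle."""
    if not node_angles:
        raise ValueError("the ring has no nodes")
    ring = sorted((angle, name) for name, angle in node_angles.items())
    angles = [angle for angle, _ in ring]
    index = bisect.bisect_left(angles, object_angle)
    # An index past the end means we passed the last node, so wrap to the first.
    return ring[index % len(ring)][1]


# Hypothetical node positions, in degrees.
nodes = {"node-a": 40, "node-b": 160, "node-c": 280}

print(owner_of(10, nodes))   # node-a
print(owner_of(100, nodes))  # node-b
print(owner_of(200, nodes))  # node-c
print(owner_of(300, nodes))  # node-a (wraps past 359 degrees)
```

No lookup table of objects is needed. The only state is the sorted list of node positions, and each lookup is a binary search over it.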

Now, as a thought experiment, pretend that a node fails and the other nodes must absorb the extra work. You will notice that objects cached on the surviving nodes do not need to be moved, and the node closest to the failed node gets the extra work until the failed node recovers. Next, pretend that an additional node is added. The result is similar. The new node will gradually acquire objects, reducing the load on the closest node. This simple example illustrates the efficiency of Consistent Hashing. In reality, this algorithm is more complex; read more here if it interests you.
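The thought experiment can be run as a small, self-contained Python sketch. The object and node angles are hypothetical values picked by hand, and counterclockwise travel is again assumed to mean increasing angle with wrap-around.

```python
import bisect
from typing import Dict, Set


def owner_of(object_angle: int, node_angles: Dict[str, int]) -> str:
    """Return the first node at or after the object's angle, wrapping around the circle."""
    ring = sorted((angle, name) for name, angle in node_angles.items())
    angles = [angle for angle, _ in ring]
    index = bisect.bisect_left(angles, object_angle)
    return ring[index % len(ring)][1]


def placement(objects: Dict[str, int], nodes: Dict[str, int]) -> Dict[str, str]:
    """Map every object key to the node that should hold it."""
    return {key: owner_of(angle, nodes) for key, angle in objects.items()}


def moved(before: Dict[str, str], after: Dict[str, str]) -> Set[str]:
    """Object keys whose owning node changed between two placements."""
    return {key for key in before if before[key] != after[key]}


# Hypothetical positions on the circle, in degrees.
objects = {"obj-1": 10, "obj-2": 100, "obj-3": 200, "obj-4": 300}
nodes = {"node-a": 40, "node-b": 160, "node-c": 280}
baseline = placement(objects, nodes)

# A node fails: only the objects it held are reassigned, to the next node along.
survivors = {name: angle for name, angle in nodes.items() if name != "node-b"}
after_failure = placement(objects, survivors)
print(moved(baseline, after_failure))    # {'obj-2'}

# A node is added: it takes over only the objects that now reach it first.
scaled = {**nodes, "node-d": 220}
after_scale_out = placement(objects, scaled)
print(moved(baseline, after_scale_out))  # {'obj-3'}
```

In both cases only one of the four objects changes owner, and every other object stays where it was.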

Consistent Hashing is a distributed hashing scheme that operates independently of the number of servers or objects participating in the algorithm by assigning them a position on an abstract circle. This allows servers and objects to scale without affecting the overall system.

Summary

MinIO Cache is a caching service that uses memory to cache frequently accessed objects. Built for AIStor, MinIO Cache is the best caching service for MinIO – unlike a generic caching service that has no knowledge of MinIO Object Store. If an object is requested from MinIO Cache and it does not exist in the cache, then MinIO Cache will fetch it for you and place it in the cache for subsequent requests.

Additionally, MinIO Cache utilizes the “Consistent Hashing” scheme for efficient object management during node scale-out operations and node failures.