Kubernetes Sidecar with MinIO

MinIO is a core piece of infrastructure that more than one application in an organization typically depends on. It powers some of the most demanding cloud-native workloads: external table storage for databases, metrics and log storage, configuration management data, AI/ML data lakes, big data processing with Apache Spark, and Helm chart repositories, among countless others.

MinIO is designed to run anywhere. It can run on-prem, in the public or private cloud, on the edge on IoT devices, virtual machines, Docker containers, and Kubernetes – anywhere you need S3 API compatible object storage. We especially embrace Kubernetes because we think of it as the future of application deployment and orchestration. We develop MinIO with Kubernetes in mind and have different ways of deploying it, such as standalone clusters or multiple tenants using an operator.

No matter which method you use to deploy MinIO into Kubernetes, ultimately your application will need to interface with it using the APIs or SDKs we provide. It's important to note that at times you will want to perform tasks on objects or in the cluster separately from the main application. What are these tasks?

- Logging: a sidecar can collect and manage the logs of a separate application

- Ad hoc configuration of your application, with a reload if needed

- TLS termination on behalf of the main application

To perform these tasks, instead of modifying the main application or the container it's running in, you can run them in a separate container next to the main application, as a sidecar.

What is a Sidecar?

Kubernetes offers so many types of resources that it is sometimes hard to know which one to use for a specific purpose. A sidecar is not a specific resource type such as a Pod, Deployment, StatefulSet or DaemonSet. It is a container just like any other container launched by the `Pod` resource. Generally a pod contains only a single container with a specific purpose. But there are times when you need to deploy two containers in the same pod, perhaps for the reasons mentioned above. This second container is called the “sidecar”.

Sidecar containers share resources with the main container, such as the network namespace and storage volumes. This allows the main container and the sidecar to exchange data through shared volumes and to communicate with each other over localhost rather than through Kubernetes DNS names.
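To make the shared network namespace concrete, here is a minimal Python sketch of a sidecar-style check that reaches the main container over localhost. The names `probe_main_container` and `start_stand_in_server` are hypothetical and not part of any MinIO or Kubernetes API. The stand-in server only simulates a main container so the sketch can be tried outside a pod.

```python
import http.server
import threading
import urllib.request


class _OkHandler(http.server.BaseHTTPRequestHandler):
    """Stand-in for the main container: answers every GET with 200."""

    def do_GET(self):
        body = b"ok"
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the demo quiet


def start_stand_in_server() -> http.server.HTTPServer:
    """Start the stand-in on a free localhost port (demo helper only)."""
    server = http.server.HTTPServer(("127.0.0.1", 0), _OkHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def probe_main_container(port: int, path: str = "/") -> int:
    """Return the HTTP status the main container answers with on localhost.

    Inside a pod, 127.0.0.1 is shared by every container, so no Service
    or DNS name is needed to reach the neighbouring container.
    """
    # Bypass any configured proxy: this traffic never leaves the pod.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(f"http://127.0.0.1:{port}{path}", timeout=5) as response:
        return response.status
```

In a real pod you would drop the stand-in server and call `probe_main_container(80)` from the sidecar, assuming the main container listens on port 80.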

Sidecar with MinIO

With MinIO serving as a foundational component of infrastructure operations, it's no surprise that data needs to be readily accessible, that the cluster needs to scale, and that it needs to tolerate faults if and when there are hardware issues. MinIO and Kubernetes are frequently paired because, together, they support a myriad of application use cases.

Image Resizing

In our Kafka blog post last year, we talked about an image resizer workflow that would allow you to take an image from a MinIO bucket and then resize it using a Python script that listens to the Kafka message.

You can, for instance, run that script (snippet shown below) inside a Kubernetes sidecar container which will listen to the Kafka messages and then perform the resizing within the sidecar container.

    from minio import Minio
    import urllib3
    from kafka import KafkaConsumer
    import json

    …

    # Initialize MinIO client
    minio_client = Minio(config["minio_endpoint"],
        secure=True,
        access_key=config["minio_username"],
        secret_key=config["minio_password"],
        http_client=http_client
    )

    …

    # Initialize Kafka consumer
    consumer = KafkaConsumer(
        bootstrap_servers=config["kafka_servers"],
        value_deserializer=lambda v: json.loads(v.decode('ascii'))
    )

    consumer.subscribe(topics=config["kafka_topic"])

    try:
        print("Ctrl+C to stop Consumer\n")
        for message in consumer:
            message_from_topic = message.value

            request_type = message_from_topic["EventName"]
            bucket_name, object_path = message_from_topic["Key"].split("/", 1)

            # Only process the request if a new object is created via PUT
            if request_type == "s3:ObjectCreated:Put":
                minio_client.fget_object(bucket_name, object_path, object_path)

                print("- Doing some pseudo image resizing or ML processing on %s" % object_path)

                minio_client.fput_object(config["dest_bucket"], object_path, object_path)

    …

Here is the best part. Your main application can be in a completely different language with its own dependencies, separate from the sidecar container. The sidecar, for instance, can be our Python script and the main application can be in Golang.
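The event handling in the consumer loop above can be isolated into a small helper that is easy to test without Kafka or MinIO running. This is only a sketch: the function name `parse_put_event` is hypothetical, decoding as UTF-8 instead of ASCII is an assumption, and the `EventName` and `Key` fields are taken from the snippet above.

```python
import json
from typing import Optional, Tuple

# Event name used in the snippet above for objects created via PUT.
PUT_EVENT = "s3:ObjectCreated:Put"


def parse_put_event(raw: bytes) -> Optional[Tuple[str, str]]:
    """Return (bucket, object_path) for a PUT event, otherwise None."""
    event = json.loads(raw.decode("utf-8"))
    if event.get("EventName") != PUT_EVENT:
        return None
    # "Key" is expected to look like "<bucket>/<object path>".
    bucket, separator, object_path = event.get("Key", "").partition("/")
    if not separator or not object_path:
        return None  # malformed key: skip it instead of raising
    return bucket, object_path
```

Unlike the `split("/", 1)` in the original loop, this version skips a message whose key has no object path rather than raising, so one bad message does not stop the sidecar.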

Backup

Backups generally take a long time to complete, so they should run on their own schedule and with their own resources. This way they do not interfere with the performance of the main application. So while a database or configuration store runs in the main container, the backup runs in the sidecar container and is accelerated using Jumbo.

In MongoDB you would run the following command in the sidecar:

    mongodump --db=examples --collection=movies --out="-" | ./jumbo_0.1-rc2_linux_amd64 put http://<Your-MinIO-Address>:9000/backup/mongo-backup-1

In Percona DB it would look something like this:

    xtrabackup --backup --compress --compress-threads=8 --stream=xbstream --parallel=4 --target-dir=./ | gzip - | ./jumbo_0.1-rc2_linux_amd64 put http://localhost:20091/testbackup123/percona-xtrabackup

And so on. You can repeat the same pattern for any type of data store out there.
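If the sidecar runs a script rather than a shell one-liner, the same pipe can be built with Python's `subprocess` module. The sketch below is an assumption about how you might wrap it, and the names `stream_pipeline` and `run_backup` are hypothetical. It streams the dump straight into the uploader without writing it to disk, and it checks the exit code of both commands, which a plain shell pipe does not do by default.

```python
import subprocess
import sys
from typing import Sequence, Tuple


def stream_pipeline(producer: Sequence[str], consumer: Sequence[str]) -> Tuple[int, int]:
    """Pipe the producer's stdout into the consumer's stdin.

    Returns (producer_exit_code, consumer_exit_code).
    """
    dump = subprocess.Popen(producer, stdout=subprocess.PIPE)
    upload = subprocess.Popen(consumer, stdin=dump.stdout)
    # Close our copy of the pipe so the producer sees a broken pipe
    # if the consumer exits early.
    dump.stdout.close()
    upload_code = upload.wait()
    dump_code = dump.wait()
    return dump_code, upload_code


def run_backup(producer: Sequence[str], consumer: Sequence[str]) -> bool:
    """Run the pipeline and report failure of either side."""
    dump_code, upload_code = stream_pipeline(producer, consumer)
    if dump_code or upload_code:
        print(f"backup failed: dump={dump_code} upload={upload_code}", file=sys.stderr)
        return False
    return True
```

With the MongoDB command above, the producer would be `["mongodump", "--db=examples", "--collection=movies", "--out=-"]` and the consumer would be the `jumbo ... put` command with your own MinIO address.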

Other Use Cases

We could go on all day, but to summarize, one of the most frequent use cases for sidecars is monitoring. For example, with OpenTelemetry, you can send cluster and pod metrics by running the agent in a sidecar container. In applications where you need distributed tracing, you can run Jaeger in a sidecar to collect traces and send them to a backend of your choosing, such as Prometheus, OpenTelemetry or InfluxDB.

Another prominent use case is configuration management with tools such as Puppet and Salt, or even etcd and Consul, where the configuration data needs to be synchronized every few minutes or so. This is a repetitive task that the sidecar can run continually so the application always has the latest configuration. Even if the sidecar is down for some reason, the application will continue using the last known good synced configuration.
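The "last known good" behavior comes almost for free if the sidecar only replaces the shared configuration file after a successful fetch. Here is a minimal sketch under that assumption. The function `sync_config` and its `fetch` callback are hypothetical; in practice `fetch` would wrap a call to etcd, Consul or whichever store you use, and `target_path` would live on a volume shared with the main container.

```python
import json
import os
import tempfile
from typing import Callable


def sync_config(fetch: Callable[[], dict], target_path: str) -> bool:
    """Fetch the config and atomically replace target_path.

    On any fetch or serialization error the existing file is left
    untouched, so the application keeps its last known good config.
    """
    try:
        payload = json.dumps(fetch(), indent=2, sort_keys=True)
    except Exception as exc:
        print(f"sync failed, keeping last known good config: {exc}")
        return False

    # Write to a temp file in the same directory, then rename over the
    # target so readers never observe a half-written file.
    directory = os.path.dirname(os.path.abspath(target_path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as tmp:
        tmp.write(payload)
    os.replace(tmp_path, target_path)
    return True
```

The sidecar would call `sync_config` in a loop with a sleep of a few minutes between runs, and the main application only ever reads `target_path`.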

Sidecar Example

Let’s launch a small application to understand the basics of working with Kubernetes sidecar containers and how you can use them alongside your application and MinIO.

Before we dive deep into launching the sidecar, let's take a look at how simple it is to configure it. Under `spec.containers`, you would add an entry like this.

    apiVersion: v1
    kind: Pod
    spec:
      containers:
      - name: main-application
        image: nginx
        volumeMounts:
          - name: shared-logs
            mountPath: /var/log/nginx

Notice that this is a “list” of containers. To add a sidecar, simply add another container to the bottom of the list, like so:

    apiVersion: v1
    kind: Pod
    metadata:
      name: simple-webapp
      labels:
        app: webapp
    spec:
      containers:
      - name: main-application
        image: nginx
        volumeMounts:
          - name: shared-logs
            mountPath: /var/log/nginx
      - name: sidecar-container
        image: busybox
        command: ["sh","-c","while true; do cat /var/log/nginx/access.log; sleep 30; done"]
        volumeMounts:
          - name: shared-logs
            mountPath: /var/log/nginx
      volumes:
      - name: shared-logs
        emptyDir: {}

That’s about it, that is a sidecar. Just by doing that, you have given the second container access to the shared volume and the network namespace of the main container, and vice versa.
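The busybox loop above prints the whole `access.log` again every 30 seconds. If you would rather emit only the lines that are new since the last pass, the sidecar could run a small script instead. The following is a sketch of that idea, not what the example pod runs, and `read_new_lines` is a hypothetical helper name.

```python
import os
from typing import List, Tuple


def read_new_lines(path: str, offset: int) -> Tuple[List[str], int]:
    """Return the complete lines appended after offset, plus the new offset."""
    if not os.path.exists(path):
        return [], 0
    if os.path.getsize(path) < offset:
        offset = 0  # file was truncated or rotated: start from the top
    with open(path, "rb") as log:
        log.seek(offset)
        chunk = log.read()
    # Consume only up to the last newline so that a partially written
    # line is picked up on the next pass instead of being split.
    end = chunk.rfind(b"\n") + 1
    lines = chunk[:end].decode("utf-8", errors="replace").splitlines()
    return lines, offset + end
```

A sidecar would call this in a loop, print each returned line, carry the offset over to the next call, and sleep between passes, with `path` pointing at `/var/log/nginx/access.log` on the shared volume.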

This example application reads the logs from the main container and outputs them to the Kubernetes logs. To reach the Nginx server from outside the cluster, we need to expose it through a NodePort service.

apiVersion: v1

kind: Service

metadata:

name: simple-webapp

labels:

run: simple-webapp

spec:

ports:

- port: 80

protocol: TCP

selector:

app: webapp

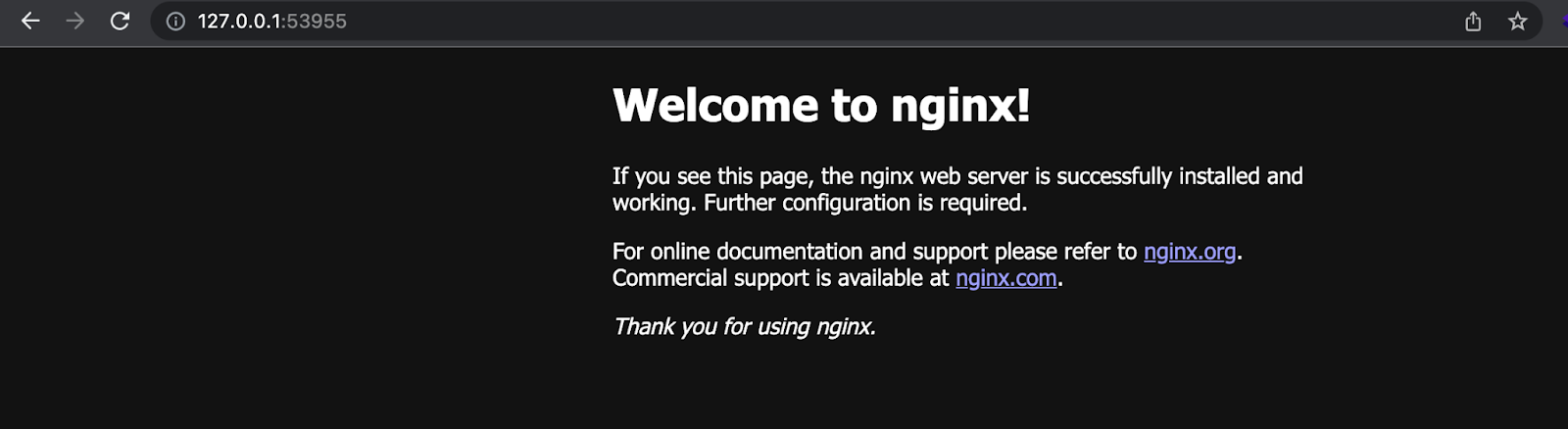

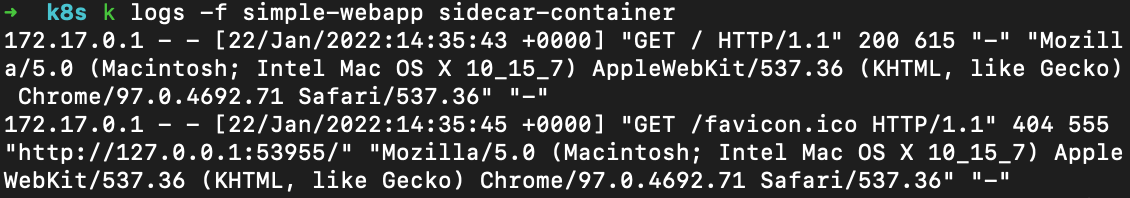

type: NodePortApply this YAML with the Deployment and Service to your choice of Kubernetes cluster. In this case, we are using a Minikube cluster.

kubectl create -f <sidecar>.yaml

Expose the service we created in minikube using the command below

minikube service --url simple-webapp

Tail the logs of the sidecar container

kubectl logs -f simple-webapp sidecar-container

Access the Nginx server using a browser

You should see something like this in the sidecar container logs

The Buddy System

As an avid motorcyclist, I find that the sidecar reminds me of those old-school motorcycles where you can take a passenger next to you in a small buggy-like seat. These things are notoriously difficult to drive, and things can get out of hand very quickly.

The same goes for our Kubernetes sidecars. Not only can you have a sidecar, you can have more than one running in a pod. Any container other than the main one is considered a sidecar. But tread lightly: just because Kubernetes allows it doesn’t mean you should build a large monolithic app full of sidecars. You still want different pods and deployments for different apps and, in general, to follow best practices when developing apps. Sidecars are useful when you have two different applications that complement each other in some way, such as logging, monitoring or any other task that aids the main application.

For more information on Sidecars and Kubernetes in general, ask our experts using the live chat at the bottom right of the blog and learn more about our SUBNET experience by emailing us at hello@min.io.