Using Cloud Native Object Storage to Support DevOps Best Practices

For every Amazon or Etsy running DevOps at the highest levels of velocity, there are thousands of teams like mine that I will generously call a work in progress. The old adage that a chain is only as strong as its weakest link certainly applies to DevOps. Every DevOps organization has its own strengths and weaknesses. Perhaps your CI/CD pipeline is highly automated but deployment frequency lags due to legacy approval steps. Maybe the team is weak on unit and regression testing and, as a result, commits code less frequently. It could be that a lack of metrics limits issue identification and, as a result, Mean-Time-To-Resolution (MTTR) is higher than it should be.

Then

While it seems they’re “so 2018,” I find DevOps maturity models to be a great way for a team to self-assess, highlight those weak links, and then prescribe what’s required to reach the next level. Prior to joining MinIO, my team embarked on a journey to apply DevOps principles to our culture, processes, and technologies. In many companies, cultural resistance is the hardest part; I was fortunate to be part of an open source company that championed DevOps culture. We were dedicated to Agile, one might even say we were Agile nerds, but we came to realize we were working to support Agile processes and reporting tools rather than having those processes support our intended outcomes. Ultimately we let go of most Agile ceremonies, keeping only daily stand-ups and shifting to Kanban-based activity management. On the technology side, we were already heavily invested in Git, Jenkins, and Ansible, which together gave us a good foundation of tools to strengthen our CI/CD pipeline.

Over the course of three years, we made tremendous progress and were able to go from developer desktop to production with a high degree of automation. To evaluate our progress and next steps, our leadership team had a planning session in which we assessed ourselves using a DevOps maturity model. It became clear we were deficient in unit testing and metrics, and the lack of both had become constraints to further efficiency and quality gains. Through the lens of a maturity model, we were better able to pinpoint our deficiencies and rally the team to remediate those shortcomings.

Now

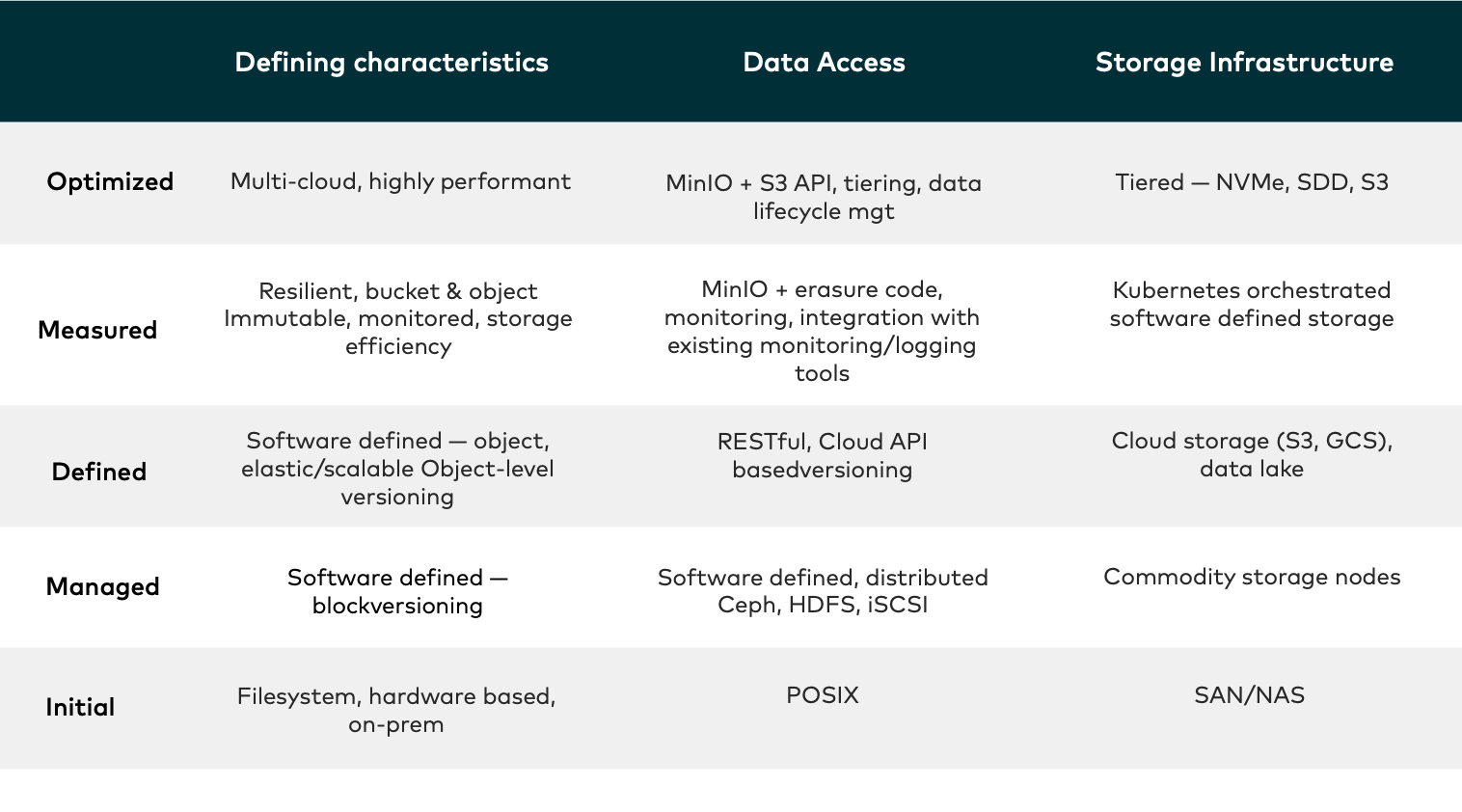

Fast forward two years and I am privileged to have joined MinIO, a leader in software-defined data storage. MinIO delivers high performance, S3 compatible object storage across Kubernetes, public and private clouds, and virtualized environments. Having settled in, I went searching for a DevOps maturity model that addressed data access and storage infrastructure and was surprised not to find an equivalent framework. Antiquated data access and storage infrastructures can be the weak link for cloud native applications.

So I decided to take a shot at extending the DevOps maturity model construct to incorporate cloud native data store architectures.

DevOps Maturity Model for Multi-Cloud Data Access and Storage Infrastructure

Legacy SAN and NAS systems, coupled with POSIX databases, delivered good filesystem and database performance but represent an antipattern for DevOps: it is difficult, if not impossible, to transition them to a version-controlled Database-as-Code model. Traditional storage systems are monolithic and rigid, and an enormous amount of time gets spent working around this technical debt, perpetuating legacy APIs like JPA that allow the database to persist without being part of the DevOps architecture itself.

The introduction of software-defined block storage using commodity hardware and protocols such as HDFS was a big step forward for large, batch-oriented workloads. Block storage delivered major improvements over file storage in terms of scalability and performance, and Hadoop introduced an API-based interface that simplified application development and greased the skids for CI/CD.

The introduction of object storage transformed data and storage into a true “As-A-Service” model that was critical for DevOps pipelines to flourish. AWS’s S3 API further increased developer velocity and provided a vehicle to efficiently manage the exponential growth of unstructured data. By leveraging the S3 API and the inherent strengths of object storage, development teams are able to achieve tighter DevOps infinity loops, as evidenced by increased deployment frequency, fewer errors, and lower MTTR for issues. Although each public cloud vendor introduced its own storage model and RESTful API, the S3 API has become the de facto standard, a result of both AWS’s market share and the capabilities of the S3 API itself.

DevOps shops operating at the top of the maturity models take advantage of Linux-based containers and Kubernetes-based orchestration to abstract applications from the underlying compute, network and storage infrastructure. The architecture is ideal for cloud native where individual services exist as discrete containers and can be upgraded (or rolled back) on the fly without disrupting application uptime. The interesting thing is that storage abstraction does not diminish the importance of data access and storage — to the contrary, cloud native applications require highly performant storage infrastructure!

DevOps and MinIO

MinIO object storage provides what traditional architectures and storage configurations can’t — performant, resilient, and extensible storage infrastructure for Kubernetes.

Within a Kubernetes cluster, MinIO delivers native, operator-based functionality that supports multiple tiers of underlying storage. The multi-tier capabilities are especially compelling when Kubernetes is deployed into a public cloud as containerized applications can leverage NVMe, HDD, and the cloud provider’s storage through a single interface. MinIO optimizes application performance while also optimizing cloud storage, network, and compute spend.

If storage and data infrastructure are not distributed, decoupled, declarative, and immutable, continuous delivery is constrained and regression in the dev-test-stage-prod cycle is more common. The decoupling tenet of continuous delivery is designed to ensure that, as your application moves through the cycle, it’s not tied to a particular implementation of your data infrastructure. MinIO solves this by implementing S3 compatibility at every level from dev to prod, and by making it simple to deploy a minimal dev environment that can easily scale up and out to the cloud with a simplified RESTful interface to your data layer.
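To make the decoupling idea concrete, here is a minimal sketch in Python of an application that learns about its object store only through configuration. The environment variable names (`S3_ENDPOINT_URL`, `S3_ACCESS_KEY`, `S3_SECRET_KEY`, `S3_BUCKET`), the default bucket name, and the helper functions are hypothetical and chosen for illustration. The local defaults assume a MinIO server listening on port 9000 with its out-of-the-box credentials. The client is built with the third-party `boto3` library, which is one of several S3-compatible SDKs that could be used here.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class ObjectStoreConfig:
    """Everything the application needs to know about its object store."""
    endpoint_url: str
    access_key: str
    secret_key: str
    bucket: str


def load_config(env=None):
    """Build the config from environment variables (names are hypothetical).

    The defaults assume a local MinIO dev server; stage and prod override
    them through the environment, so application code never changes.
    """
    env = os.environ if env is None else env
    return ObjectStoreConfig(
        endpoint_url=env.get("S3_ENDPOINT_URL", "http://localhost:9000"),
        access_key=env.get("S3_ACCESS_KEY", "minioadmin"),
        secret_key=env.get("S3_SECRET_KEY", "minioadmin"),
        bucket=env.get("S3_BUCKET", "dev-artifacts"),
    )


def make_client(cfg):
    """Create an S3 client for whichever endpoint the config names."""
    import boto3  # third-party; imported lazily so the config logic has no dependencies

    return boto3.client(
        "s3",
        endpoint_url=cfg.endpoint_url,
        aws_access_key_id=cfg.access_key,
        aws_secret_access_key=cfg.secret_key,
    )


def store_report(client, cfg, key, body):
    """Write one object; the call is identical in dev, test, stage, and prod."""
    client.put_object(Bucket=cfg.bucket, Key=key, Body=body)
```

With this shape, promoting a build from a laptop to a production cluster is a matter of changing environment variables in the deployment manifest rather than touching application code.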

MinIO is, by its very nature, distributed, with active-active replication of data and the ability to continue operating when a backing drive fails. The write-once-read-many feature of MinIO’s implementation means that your data integrity is maintained no matter the deployment, and you spend less time coding around read errors or data contention issues. Plus, with versioning in your MinIO buckets, you never have to worry about losing data to overwrites! And MinIO’s declarative deployment process means you can stand up exactly the features you need, when and where you need them, using all the common tools for Kubernetes-based deployments. All of these features together make MinIO the best choice for developing big-data-driven applications at any scale.
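As a rough illustration of the versioning point, the sketch below turns on bucket versioning and lists the stored versions of a key through the S3 API. It assumes a client object with the same method names as a `boto3` S3 client (`put_bucket_versioning` and `list_object_versions`); the two helper function names are hypothetical, and pagination of long version lists is deliberately left out.

```python
def enable_versioning(client, bucket):
    """Ask the object store to keep every version of every object in the bucket."""
    client.put_bucket_versioning(
        Bucket=bucket,
        VersioningConfiguration={"Status": "Enabled"},
    )


def list_versions(client, bucket, key):
    """Return the version IDs recorded for one key.

    Prefix matching can return sibling keys (for example "a.txt.bak"),
    so the result is filtered to the exact key. Only the first page of
    results is read in this sketch.
    """
    response = client.list_object_versions(Bucket=bucket, Prefix=key)
    return [
        version["VersionId"]
        for version in response.get("Versions", [])
        if version["Key"] == key
    ]
```

Once versioning is enabled, writing to the same key again adds a new version instead of replacing the old one, so an accidental overwrite can be undone by reading or restoring an earlier version ID.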

MinIO facilitates the separation of the data layer from the application, providing persistence and continuity as deployments are executed. Further, MinIO’s strict adherence to S3 compatibility provides developers with a familiar, de facto standard API that accelerates development and ensures interoperability across architectures. Applications can start on a small laptop footprint and advance to multi-node and multi-datacenter production deployments, cloud-backed or on-premises, without any code modifications, using either MinIO or another S3-compliant implementation. Your data tiers can also be more easily backed by the infrastructure that makes the most sense, for instance, keeping your hot data local on speedy NVMe and your cold data in the cloud. Integrations with external management and security services and applications, for example identity providers such as Keycloak, Active Directory, or LDAP, centralize controls, simplify deployment, and provide critical protection across any cloud, on-premises, and at the edge.

For DevOps organizations seeking to optimize velocity via container automation and Infrastructure-as-Code, MinIO completes the optimal architecture to accelerate time to market and drive business outcomes. Feel free to try it for yourself: you can download MinIO here and get help on the general Slack channel here.